A Simple Consumption Function using Quarterly Taiwan Data

Here is a simple model of real private consumption in Taiwan from 1990.1~2003.1

We begin with a function relating consumption to income. This can be written as

t Ct Ao(Yt ) e

where Ct = real private consumption, Yt = real GDP, and εt = a random variable.

Actually this is not correct. Real private consumption should be a function of disposable income and not real GDP. Unfortunately, we do not have quarterly data on disposable income. In fact, no one does. People report their taxes only once a year. We do have annual data on income taxes and disposable income, but we do not have quarterly data. d We might try to use a proxy variable. To make things simple, we could use Yt = Y – G, where G = government expenditure. We can use this because G and Income Taxes are closely related.

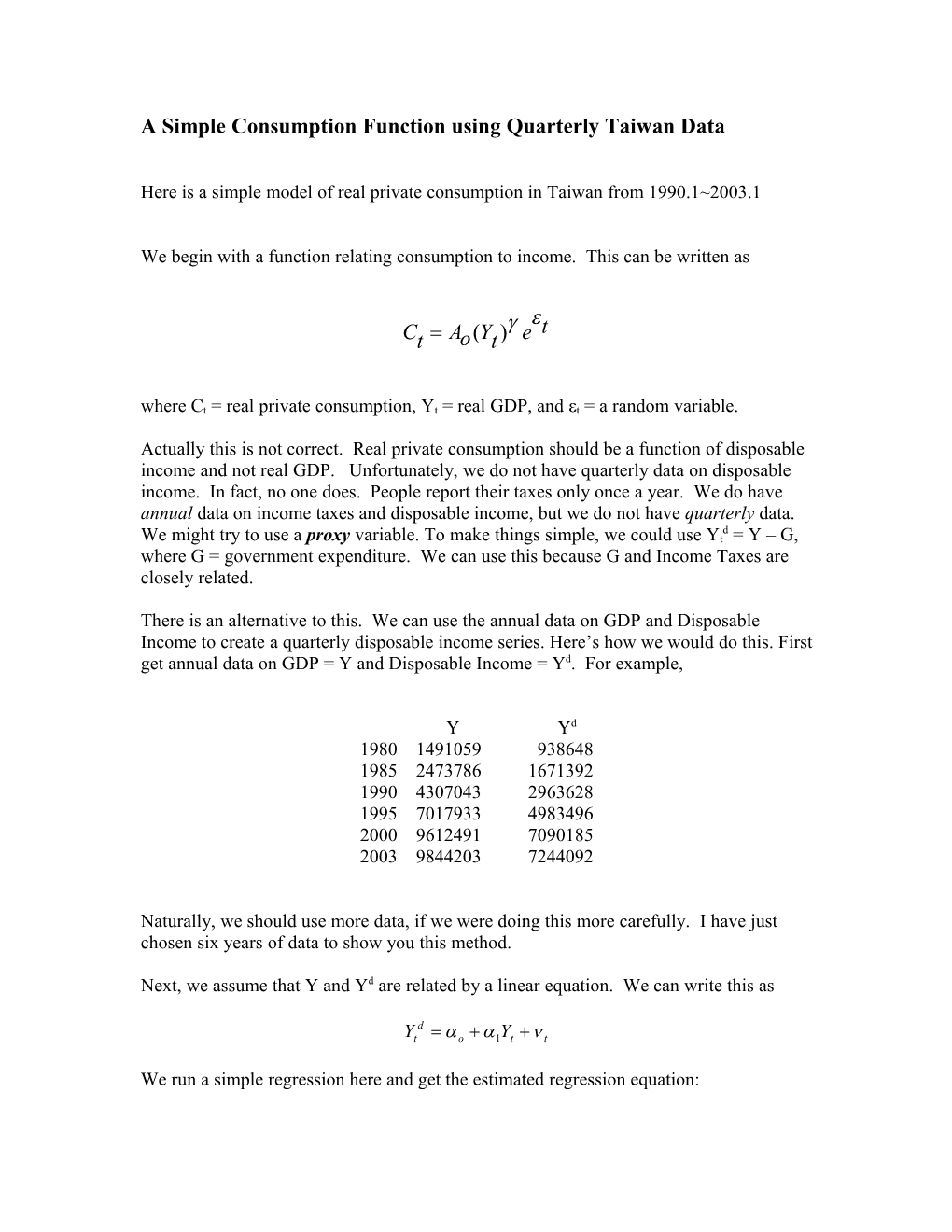

There is an alternative to this. We can use the annual data on GDP and Disposable Income to create a quarterly disposable income series. Here’s how we would do this. First get annual data on GDP = Y and Disposable Income = Yd. For example,

Y Yd 1980 1491059 938648 1985 2473786 1671392 1990 4307043 2963628 1995 7017933 4983496 2000 9612491 7090185 2003 9844203 7244092

Naturally, we should use more data, if we were doing this more carefully. I have just chosen six years of data to show you this method.

Next, we assume that Y and Yd are related by a linear equation. We can write this as

d Yt o 1Yt t

We run a simple regression here and get the estimated regression equation: ˆ d Yt 232236 0.756475Yt

We use this equation to change our quarterly Y=GDP data into quarterly Yd = disposable income data.

In the graph above Y = GDP, ydg = Y-G, and yd1 = -232236 +0.756475Y are graphed together. The three series look very much the same, but there are still differences. We will get different results using one or the other, especially since taking logs complicates the difference between the three series. Nevertheless, the correlation between any pair of these series is ρ = 0.999.

Let’s use the variable Y-G as a proxy to our disposable income variable Yd and see the results of the estimation. We begin by taking the logs of C and Yd. This makes the regression equal to

d log(Ct ) log(Ao) log(Yt ) t We are interested in estimating the coefficient γ in the above regression. In running this regression we assume that the random term εt does not have autocorrelation.

The random terms εt will be statistically independent if each εt is unrelated to past εt’s. If the εt’s are normally distributed, this means that the correlation between εt and εt-k is zero for k = 1,2,3,... If the residuals ˆt show autocorrelation, then the t-statistics will generally be biased and we cannot use them to make inferences about the statistical significance of the estimated coefficients.

Another important point is that consumption generally shows a marked seasonality. This means that we must use periodic dummy variables in the regression.

OLS estimates using the 101 observations 1978:1-2003:1 Dependent variable: lc

VARIABLE COEFFICIENT STDERROR T STAT 2Prob(t > |T|)

0) const -1.01766 0.0894155 -11.381 < 0.00001 *** 39) lyd 1.04099 0.00642124 162.116 < 0.00001 *** 40) dq1 0.147266 0.00962654 15.298 < 0.00001 *** 41) dq2 0.00388797 0.00972127 0.400 0.690086 42) dq3 0.0685361 0.00971251 7.056 < 0.00001 ***

Mean of dependent variable = 13.4512 Standard deviation of dep. var. = 0.560413 Sum of squared residuals = 0.113178 Standard error of residuals = 0.0343357 Unadjusted R-squared = 0.996396 Adjusted R-squared = 0.996246 F-statistic (4, 96) = 6635.86 (p-value < 0.00001) Durbin-Watson statistic = 0.427302 First-order autocorrelation coeff. = 0.77752

This shows thatˆ 1.04099 . Note how that the t-statistics are extremely large. We should suspect the validity of such exaggerated statistics. The high R2 and the low D-W statistic tell us that something is very wrong about this regression. The problem of autocorrelation appears to be serious. But, we need a formal test to be confident that the regression has this problem.

Using the Breusch-Godfrey test which Gretl gives us, we get the following result. Breusch-Godfrey test for autocorrelation up to order 4 OLS estimates using the 97 observations 1979:1-2003:1 Dependent variable: uhat

VARIABLE COEFFICIENT STDERROR T STAT 2Prob(t > |T|)

0) const 0.00619665 0.0571871 0.108 0.913959 39) lyd -0.000483105 0.00409669 -0.118 0.906395 40) dq1 -0.00248228 0.00585382 -0.424 0.672568 41) dq2 0.000196056 0.00591649 0.033 0.973640 42) dq3 0.00117033 0.00591035 0.198 0.843491 45) uhat_1 0.697539 0.105563 6.608 < 0.00001 *** 46) uhat_2 -0.103064 0.128792 -0.800 0.425728 47) uhat_3 0.0458960 0.128974 0.356 0.722802 48) uhat_4 0.246601 0.103212 2.389 0.019018 **

Unadjusted R-squared = 0.662594

Test statistic: LMF = 43.203254, with p-value = P(F(4,88) > 43.2033) = 5.23e-020

Alternative statistic: TR^2 = 64.271572, with p-value = P(Chi-square(4) > 64.2716) = 3.66e-013

Ljung-Box Q' = 185.553 with p-value = P(Chi-square(4) > 185.553) = 0

There are 3 tests given ....(1) the LMF test or the Lagrange Multiplier F test and (2) the Chi-Square test and (3) the Ljung-Box portmanteau Q test. The first test has an incredibly small p-value = 0.0000000000000000000523 while the second test has a larger (but still incredibly small) p-value =0.000000000000366. The last has a p-value that is so small that the computer simply gives it the value of zero. These tests all clearly show that the regression has autocorrelation.

We can also see that there is an autocorrelation problem by looking at the correlogram

(autocorrelation function or ACF) of ˆt . The correlogram (ACF) is shown below in the top half of the diagram on the next page. We will discuss this graph in depth next semester. For now we note that if the lines extend outside of the red lines, then there is autocorrelation in the regression. Obviously this is true for our regression this time.

How can we fix this problem of autocorrelation?

There are three possible reasons for the autocorrelation:

(A) There is a variable which we have not included and it is autocorrelated. (B) The regression equation needs to include more lagged variables since the dynamics are complicated.

(C) There has been some structural change.

We will assume that the dynamics of the regression are wrong. To solve this problem we simply use the Cochrane-Orcutt procedure given in Gretl. We also will restrict the observation period to the interval 1990.1 ~ 2003.1 because of obvious structural change which has occurred. This results in the following estimated regression

Performing iterative calculation of rho...

ITER RHO ESS 1 0.43385 0.00886648 2 0.44625 0.00886476 3 0.44782 0.00886473 final 0.44803 Model 5: Cochrane-Orcutt estimates using the 52 observations 1990:2-2003:1 Dependent variable: lc

VARIABLE COEFFICIENT STDERROR T STAT 2Prob(t > |T|)

0) const -0.101447 0.217993 -0.465 0.643818 39) lyd 0.977363 0.0152106 64.256 < 0.00001 *** 40) dq1 0.138392 0.00437068 31.664 < 0.00001 *** 41) dq2 0.0153863 0.00497972 3.090 0.003360 *** 42) dq3 0.0771602 0.00436161 17.691 < 0.00001 ***

Statistics based on the rho-differenced data:

Sum of squared residuals = 0.00886473 Standard error of residuals = 0.0137336 Unadjusted R-squared = 0.996928 Adjusted R-squared = 0.996667 F-statistic (4, 47) = 1514.76 (p-value < 0.00001) Durbin-Watson statistic = 1.65391 First-order autocorrelation coeff. = 0.107774

This shows that ˆ 0.977363. Note that the Durbin Watson statistic has risen to D-W = 1.65391. We can now look at the correlogram of the residuals from this C-O estimation.

Remember that the correlogram is a graph showing the estimated autocorrelations for ˆt .

We define the estimated autocorrelation of ˆt as

T ˆ ˆ t tk tk1 ˆk T for k = 1,2,3,...T/4 ˆ2 t t1

and then we graph these as ˆ1, ˆ2 , ˆ3 ,..., ˆT / 4 against the lags k=1,2,3,...,T/4. This is shown on the next page (top graph labeled ACF). The correlogram shows that except for lags 4, 6, and 7 all autocorrelations are near zero. Moreover, the autocorrelations at lags 4, 6, and 7 do not exceed the 5% confidence bounds (i.e. they are inside the red lines) and are therefore considered statistically insignificant. They are all essentially zero.

This indicates that most of the autocorrelation problem has been eliminated.

Our final estimated regression (using Cochrane-Orcutt) is the following:

d log(Ct ) 0.101447 0.977363log(Yt ) t with 0.44803 t t 1 t

MPC The income elasticity of consumption is equal to ˆ 0.977363 . It follows that APC we can estimated the average MPC for Taiwan during the period 1990.1~2003.1 by ______multiplying ˆ by APC . This gives us ______MPC ˆ APC (0.977363)(0.69567) 0.68

This means that on average in Taiwan during the observation period, a $1000NT increase in disposable income would result (during the quarter) in an increase in private consumption of $680NT. Note also how that the MPC and the APC are very close to each other.