Numerical Methods for Eng [ENGR 391] [Lyes KADEM 2008]

CHAPTER IV

Linear Algebraic equations

Topics: Mathematical background, Graphical method, Cramer’s rule, Gauss elimination, Gauss-Jordan

I. Mathematical background

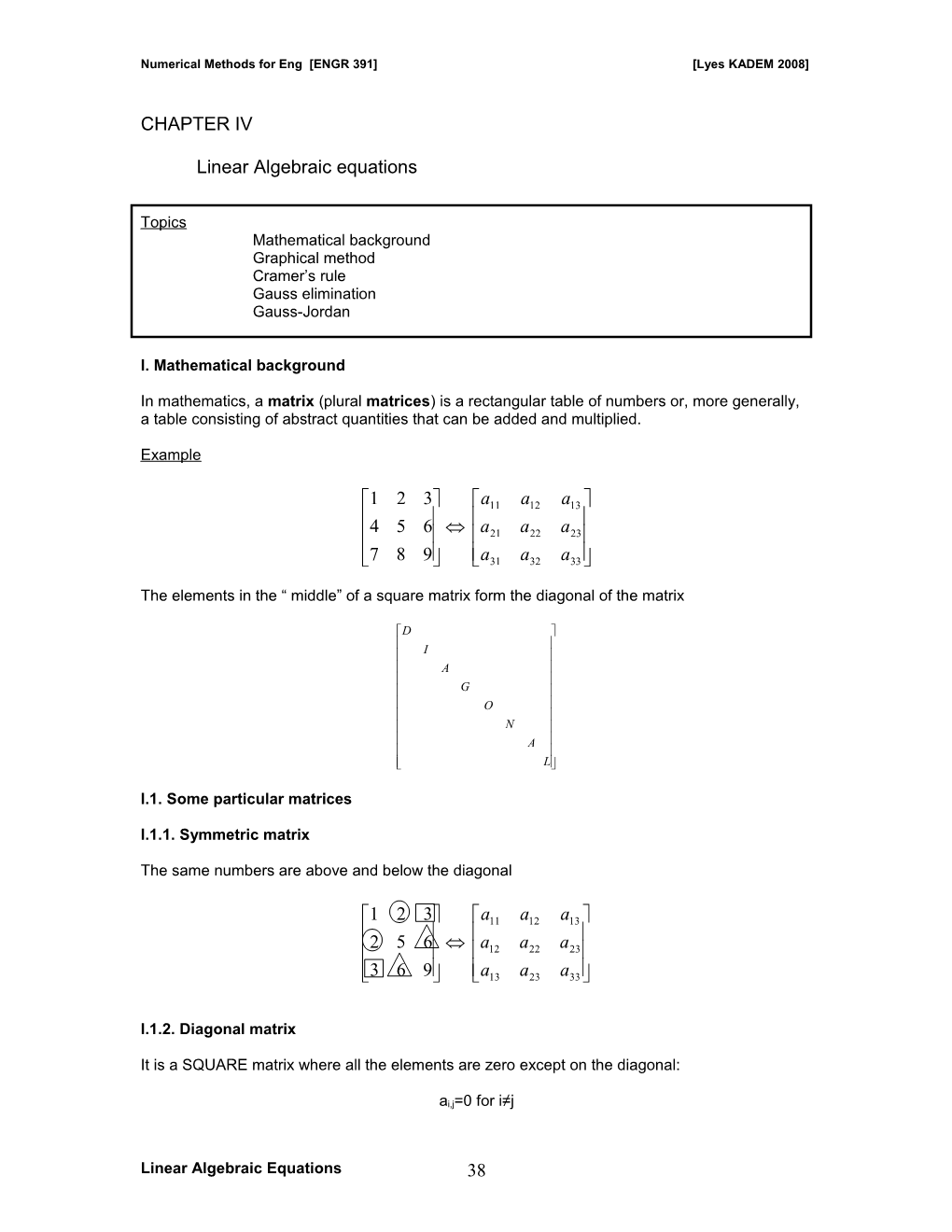

In mathematics, a matrix (plural matrices) is a rectangular table of numbers or, more generally, a table consisting of abstract quantities that can be added and multiplied.

Example

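$$
\begin{bmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \\ 7 & 8 & 9 \end{bmatrix}
\qquad
\begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix}
$$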

The elements $a_{ii}$ of a square matrix, running from the top-left corner to the bottom-right corner, form the diagonal of the matrix (here $a_{11}$, $a_{22}$, $a_{33}$).

I.1. Some particular matrices

I.1.1. Symmetric matrix

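The same numbers appear above and below the diagonal, mirrored across it: $a_{ij} = a_{ji}$.

$$
\begin{bmatrix} 1 & 2 & 3 \\ 2 & 5 & 6 \\ 3 & 6 & 9 \end{bmatrix}
\qquad
\begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{12} & a_{22} & a_{23} \\ a_{13} & a_{23} & a_{33} \end{bmatrix}
$$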

I.1.2. Diagonal matrix

It is a SQUARE matrix where all the elements are zero except on the diagonal:

$$a_{ij} = 0 \quad \text{for } i \neq j$$


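$$
\begin{bmatrix} 1 & 0 & 0 \\ 0 & 5 & 0 \\ 0 & 0 & 9 \end{bmatrix}
\qquad
\begin{bmatrix} a_{11} & 0 & 0 \\ 0 & a_{22} & 0 \\ 0 & 0 & a_{33} \end{bmatrix}
$$

I.1.3. Identity matrix

It is a diagonal matrix where all the elements on the diagonal are equal to 1. It is noted [I].

$$a_{ij} = 0 \ \text{for } i \neq j \quad \text{and} \quad a_{ii} = 1$$

$$
[I] = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}
$$

I.1.4. Upper triangular matrix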

It is a matrix where all the elements below the diagonal are equal to zero.

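$$
\begin{bmatrix} 1 & 2 & 3 \\ 0 & 5 & 6 \\ 0 & 0 & 9 \end{bmatrix}
\qquad
\begin{bmatrix} a_{11} & a_{12} & a_{13} \\ 0 & a_{22} & a_{23} \\ 0 & 0 & a_{33} \end{bmatrix}
$$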

I.1.5. Lower triangular matrix

It is a matrix where all the elements above the diagonal are equal to zero.

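$$
\begin{bmatrix} 1 & 0 & 0 \\ 2 & 5 & 0 \\ 3 & 6 & 9 \end{bmatrix}
\qquad
\begin{bmatrix} a_{11} & 0 & 0 \\ a_{21} & a_{22} & 0 \\ a_{31} & a_{32} & a_{33} \end{bmatrix}
$$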

I.1.6. Banded matrix

A banded matrix has all elements equal to zero, with the exception of a band centered on the main diagonal.

$$
\begin{bmatrix} 1 & 5 & 0 & 0 \\ 5 & 2 & 4 & 0 \\ 0 & 2 & 3 & 9 \\ 0 & 0 & 8 & 4 \end{bmatrix}
$$

I.2. Operations on the matrices


I.2.1. Multiplication of matrices

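If we consider a matrix [A] and a matrix [B], where the number of columns of [A] equals the number of rows of [B] (noted n), and the product of [A] and [B] is equal to [C], then:

$$c_{ij} = \sum_{k=1}^{n} a_{ik}\, b_{kj}$$

Example: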

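$$
\begin{bmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \\ 7 & 8 & 9 \end{bmatrix}
\begin{bmatrix} 2 & 1 & 3 \\ 4 & 8 & 6 \\ 2 & 8 & 2 \end{bmatrix}
=
\begin{bmatrix} 16 & 41 & 21 \\ 40 & 92 & 54 \\ 64 & 143 & 87 \end{bmatrix}
$$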

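Procedure

Each element $c_{ij}$ is obtained by multiplying row i of [A], element by element, with column j of [B] and adding the products. For the first element of the example:

$$c_{11} = 1 \times 2 + 2 \times 4 + 3 \times 2 = 16$$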
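The row-by-column procedure can be sketched in a few lines of Python. This is only an illustration: the function name `matmul` and the list-of-rows storage are assumptions, not part of the course material.

```python
def matmul(a, b):
    """Return [C] = [A][B] for matrices stored as lists of rows."""
    n_inner = len(b)  # rows of [B]; must match the columns of [A]
    if any(len(row) != n_inner for row in a):
        raise ValueError("columns of [A] must equal rows of [B]")
    # c[i][j] is the sum over k of a[i][k] * b[k][j]
    return [
        [sum(a[i][k] * b[k][j] for k in range(n_inner)) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


A = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
B = [[2, 1, 3], [4, 8, 6], [2, 8, 2]]
C = matmul(A, B)
print(C)  # [[16, 41, 21], [40, 92, 54], [64, 143, 87]]
```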

I.2.2. Inverse matrix

The inverse matrix $[A]^{-1}$ can only be computed for a matrix that is both square and non-singular ($\det(A) \neq 0$).

The inverse matrix is defined as a matrix that if multiplied by the matrix [A] will give the identity matrix [I]:

$$[A]\,[A]^{-1} = [I]$$

And for a 2×2 matrix:

$$
[A]^{-1} = \frac{1}{a_{11}a_{22} - a_{12}a_{21}}
\begin{bmatrix} a_{22} & -a_{12} \\ -a_{21} & a_{11} \end{bmatrix}
$$
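As a quick check of the 2×2 formula, the sketch below computes an inverse and leaves the product $[A][A]^{-1}$ to be compared with [I]. The function name `inverse_2x2` and the sample matrix are assumptions chosen for illustration.

```python
def inverse_2x2(a):
    """Invert a 2x2 matrix with the closed-form formula."""
    (a11, a12), (a21, a22) = a
    det = a11 * a22 - a12 * a21
    if det == 0:
        raise ValueError("singular matrix: the inverse does not exist")
    return [[a22 / det, -a12 / det], [-a21 / det, a11 / det]]


A = [[4.0, 7.0], [2.0, 6.0]]  # hypothetical example, det = 10
A_inv = inverse_2x2(A)
# Multiply [A][A]^-1 to verify that the identity matrix is recovered
product = [
    [sum(A[i][k] * A_inv[k][j] for k in range(2)) for j in range(2)]
    for i in range(2)
]
print(A_inv)
print(product)
```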


I.2.3. Transpose matrix

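The transpose of a matrix [A], noted $[A]^T$, is computed by exchanging rows and columns:

$$
\begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix}^{T}
=
\begin{bmatrix} a_{11} & a_{21} & a_{31} \\ a_{12} & a_{22} & a_{32} \\ a_{13} & a_{23} & a_{33} \end{bmatrix}
$$

Example

$$
\begin{bmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \\ 7 & 8 & 9 \end{bmatrix}^{T}
=
\begin{bmatrix} 1 & 4 & 7 \\ 2 & 5 & 8 \\ 3 & 6 & 9 \end{bmatrix}
$$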

I.2.4. Trace of a matrix

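The trace of a matrix, noted tr[A], is defined as:

$$\operatorname{tr}[A] = \sum_{i=1}^{n} a_{ii}$$

It is the sum of the elements on the diagonal.

Example

$$
[A] = \begin{bmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \\ 7 & 8 & 9 \end{bmatrix};
\qquad \operatorname{tr}[A] = 1 + 5 + 9 = 15
$$

I.2.5. Matrix augmentation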

A matrix is augmented by the addition of a column (or more) to the original matrix.

Example

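Augmentation of the matrix [A]

$$
\begin{bmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \\ 7 & 8 & 9 \end{bmatrix}
$$

by the identity matrix:

$$
\left[\begin{array}{ccc|ccc} 1 & 2 & 3 & 1 & 0 & 0 \\ 4 & 5 & 6 & 0 & 1 & 0 \\ 7 & 8 & 9 & 0 & 0 & 1 \end{array}\right]
$$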


II. Solving small numbers of equations

In this part we will describe several methods that are appropriate for solving small ($n \leq 3$) sets of simultaneous equations.

II.1. Graphical method (n=2; mostly)

Consider we have to solve the following system:

$$
\begin{aligned}
3x_1 + x_2 &= 5 \\
x_1 + 2x_2 &= 3
\end{aligned}
$$

In this method, we plot the two equations in Cartesian coordinates, with one axis for $x_1$ and the other for $x_2$. Because we are considering linear equations, each equation is a straight line.

Therefore, solving each equation for $x_2$:

$$
\begin{aligned}
x_2 &= -3x_1 + 5 \\
x_2 &= -\tfrac{1}{2}x_1 + \tfrac{3}{2}
\end{aligned}
$$

The intersection of the two lines represents the solution.

General form:

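$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 &= b_1 \\
a_{21}x_1 + a_{22}x_2 &= b_2
\end{aligned}
$$

Solving each equation for $x_2$:

$$
x_2 = -\frac{a_{11}}{a_{12}}\,x_1 + \frac{b_1}{a_{12}}
\qquad \text{and} \qquad
x_2 = -\frac{a_{21}}{a_{22}}\,x_1 + \frac{b_2}{a_{22}}
$$

In each equation, the coefficient of $x_1$ is the slope and the constant term is the intercept.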

The graphical method can still be used for n=3 (3 equations, each represented by a plane), but beyond that it becomes too complex to determine the solution.

However, this technique is very useful to visualize the properties of the solutions:

- No solution (parallel lines)
- Infinite solutions (coincident lines)
- Ill-conditioned system (the slopes are too close)


Figure 4.1. Graphical method: no solution; infinite solutions; ill-conditioned system (three panels, each showing Eq. 1 and Eq. 2 plotted on the axes $x_1$ and $x_2$).
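The cases of Figure 4.1 can also be told apart numerically by comparing slopes and intercepts. The sketch below is one possible way to do it; the function name `classify_2x2`, the tolerance and the sample systems are assumptions introduced only for illustration.

```python
def classify_2x2(a11, a12, b1, a21, a22, b2, tol=1e-12):
    """Classify a11*x1 + a12*x2 = b1 and a21*x1 + a22*x2 = b2."""
    # Equal slopes (-a11/a12 == -a21/a22) is the same as a11*a22 - a12*a21 == 0
    det = a11 * a22 - a12 * a21
    if abs(det) > tol:
        # The lines cross at a single point
        x1 = (b1 * a22 - a12 * b2) / det
        x2 = (a11 * b2 - a21 * b1) / det
        return "unique", (x1, x2)
    # Parallel lines: they coincide only if the right-hand sides match as well
    if abs(a11 * b2 - a21 * b1) <= tol and abs(a12 * b2 - a22 * b1) <= tol:
        return "infinite", None
    return "none", None


print(classify_2x2(2, 1, 4, 1, -1, -1))  # lines cross at one point
print(classify_2x2(1, 2, 3, 1, 2, 5))    # parallel lines
print(classify_2x2(1, 2, 3, 2, 4, 6))    # same line written twice
```

An ill-conditioned system falls in the first branch with a determinant that is small but not zero, so it is not flagged by this simple test.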

Example

Solve graphically the following system

$$
\begin{aligned}
3x_1 + x_2 &= 5 \\
x_1 + 2x_2 &= 3
\end{aligned}
$$

II.2. Determinants and the Cramer’s rule

II.2.1. Determinant

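For a matrix [A], such as:

$$
[A] = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix}
$$

The determinant D is

$$
D = \begin{vmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{vmatrix}
$$

The second-order determinant is:

$$
D = \begin{vmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{vmatrix} = a_{11}a_{22} - a_{12}a_{21}
$$

The third-order case is:

$$
D = a_{11}\begin{vmatrix} a_{22} & a_{23} \\ a_{32} & a_{33} \end{vmatrix}
  - a_{12}\begin{vmatrix} a_{21} & a_{23} \\ a_{31} & a_{33} \end{vmatrix}
  + a_{13}\begin{vmatrix} a_{21} & a_{22} \\ a_{31} & a_{32} \end{vmatrix}
$$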


The 2×2 determinants are called minors.

Note

A determinant equal to zero means that the matrix is singular: the system has no unique solution. A determinant close to zero indicates an ill-conditioned system.
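The expansion of a third-order determinant by 2×2 minors can be sketched as follows. The function names `det2` and `det3` are assumptions; the first test matrix is the 3×3 example matrix used earlier in this chapter, which happens to be singular.

```python
def det2(a11, a12, a21, a22):
    """Second-order determinant."""
    return a11 * a22 - a12 * a21


def det3(a):
    """Third-order determinant, expanded along the first row with 2x2 minors."""
    (a11, a12, a13), (a21, a22, a23), (a31, a32, a33) = a
    return (
        a11 * det2(a22, a23, a32, a33)
        - a12 * det2(a21, a23, a31, a33)
        + a13 * det2(a21, a22, a31, a32)
    )


print(det3([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # 0: this matrix is singular
print(det3([[2, 1, 0], [1, 3, 1], [0, 1, 4]]))  # 18
```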

II.2.2. Cramer’s rule

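Cramer’s rule can be used to solve a system of linear algebraic equations. For a system of three equations, $x_1$ and $x_2$ are written in the form:

$$
x_1 = \frac{\begin{vmatrix} b_1 & a_{12} & a_{13} \\ b_2 & a_{22} & a_{23} \\ b_3 & a_{32} & a_{33} \end{vmatrix}}{D}
\qquad
x_2 = \frac{\begin{vmatrix} a_{11} & b_1 & a_{13} \\ a_{21} & b_2 & a_{23} \\ a_{31} & b_3 & a_{33} \end{vmatrix}}{D}
$$

The same applies to $x_3$: the third column of the determinant is replaced by the right-hand side.

When the number of equations exceeds 3, Cramer’s rule becomes impractical because the computation of the determinants is very time consuming.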
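A minimal sketch of Cramer’s rule for three equations is given below. The names `det3` and `cramer3` and the sample system are assumptions made for this illustration; the system was built so that its solution is (1, 2, 3).

```python
def det3(a):
    """Third-order determinant, expanded along the first row."""
    (a11, a12, a13), (a21, a22, a23), (a31, a32, a33) = a
    return (
        a11 * (a22 * a33 - a23 * a32)
        - a12 * (a21 * a33 - a23 * a31)
        + a13 * (a21 * a32 - a22 * a31)
    )


def cramer3(a, b):
    """Solve [A]{x} = {b} for three unknowns with Cramer's rule."""
    d = det3(a)
    if d == 0:
        raise ValueError("D = 0: singular system, Cramer's rule does not apply")
    x = []
    for col in range(3):
        # Replace column `col` of [A] by the right-hand side {b}
        a_col = [[b[r] if c == col else a[r][c] for c in range(3)] for r in range(3)]
        x.append(det3(a_col) / d)
    return x


A = [[2, 1, 0], [1, 3, 1], [0, 1, 4]]  # hypothetical system, D = 18
b = [4, 10, 14]
print(cramer3(A, b))  # [1.0, 2.0, 3.0]
```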

Gabriel Cramer (July 31, 1704 - January 4, 1752) was a Swiss mathematician, born in Geneva. He showed promise in mathematics from an early age. At 18 he received his doctorate and at 20 he was co-chair of mathematics. In 1728 he proposed a solution to the St. Petersburg Paradox that came very close to the concept of expected utility theory given ten years later by Daniel Bernoulli.

Example

Solve the following system using Cramer’s rule:

$$
\begin{aligned}
3x_1 + x_2 &= 5 \\
x_1 + 2x_2 &= 3
\end{aligned}
$$


II.3. The elimination of unknowns

To illustrate this well known procedure, let us take a simple system of equations with two equations:

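$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 &= b_1 \qquad (1) \\
a_{21}x_1 + a_{22}x_2 &= b_2 \qquad (2)
\end{aligned}
$$

Step I. We multiply (1) by $a_{21}$ and (2) by $a_{11}$, thus

$$
\begin{aligned}
a_{11}a_{21}x_1 + a_{12}a_{21}x_2 &= b_1 a_{21} \\
a_{11}a_{21}x_1 + a_{11}a_{22}x_2 &= b_2 a_{11}
\end{aligned}
$$

By subtracting the first equation from the second:

$$a_{11}a_{22}x_2 - a_{12}a_{21}x_2 = b_2 a_{11} - b_1 a_{21}$$

Therefore;

$$x_2 = \frac{a_{11}b_2 - a_{21}b_1}{a_{11}a_{22} - a_{12}a_{21}}$$

Step II. And by replacing $x_2$ in one of the above equations:

$$x_1 = \frac{a_{22}b_1 - a_{12}b_2}{a_{11}a_{22} - a_{12}a_{21}}$$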

Note

Compare this result with Cramer’s rule: it is exactly the same.

The problem with this method is that it is very time consuming for a large number of equations.

II.3.1. Naïve Gauss elimination

Using the elimination of unknowns method (above), we:

1- Manipulated the equations to eliminate one unknown, and solved for the other unknown;
2- Back-substituted it in one of the original equations to solve for the remaining unknown.

So, we will try to expand these steps: elimination and back-substitution to a large number of equations. The first method that will be introduced is the Gauss elimination method.

This technique is called naïve, because it does not avoid the problem of dividing by zero. This point has to be taken into account when implementing this technique on computers.


Carl Friedrich Gauss (Gauß) (30 April 1777 – 23 February 1855) was a German mathematician and scientist of profound genius who contributed significantly to many fields, including number theory, analysis, differential geometry, geodesy, magnetism, astronomy and optics. Sometimes known as "the prince of mathematicians" and "greatest mathematician since antiquity", Gauss had a remarkable influence in many fields of mathematics and science and is ranked as one of history's most influential mathematicians.

Consider the following general system

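$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 + a_{13}x_3 + \dots + a_{1n}x_n &= b_1 \\
&\;\;\vdots \\
a_{n1}x_1 + a_{n2}x_2 + a_{n3}x_3 + \dots + a_{nn}x_n &= b_n
\end{aligned}
$$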

- Forward elimination of unknown:

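The principle is to eliminate one unknown at each step, starting from $x_1$ and ending with $x_{n-1}$:

We multiply the first equation by $\dfrac{a_{21}}{a_{11}}$ and we subtract it from the second equation. We will get:

$$
\underbrace{\left(a_{22} - \frac{a_{21}}{a_{11}}a_{12}\right)}_{a'_{22}} x_2 + \dots +
\underbrace{\left(a_{2n} - \frac{a_{21}}{a_{11}}a_{1n}\right)}_{a'_{2n}} x_n =
\underbrace{b_2 - \frac{a_{21}}{a_{11}}b_1}_{b'_2}
$$

Hence;

$$a'_{22}x_2 + \dots + a'_{2n}x_n = b'_2$$

Note that $x_1$ has been eliminated from eq. 2.

The modified system is given below. The first equation is called the pivot equation and $a_{11}$ is the pivot coefficient (or pivot element):

$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 + a_{13}x_3 + \dots + a_{1n}x_n &= b_1 \\
a'_{22}x_2 + a'_{23}x_3 + \dots + a'_{2n}x_n &= b'_2 \\
&\;\;\vdots \\
a_{n1}x_1 + a_{n2}x_2 + a_{n3}x_3 + \dots + a_{nn}x_n &= b_n
\end{aligned}
$$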


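We do the same thing with $x_2$, i.e., we multiply the second equation by $\dfrac{a'_{32}}{a'_{22}}$ and subtract the result from the third equation, and so on …

The final result will be an upper triangular system:

$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 + a_{13}x_3 + \dots + a_{1n}x_n &= b_1 \\
a'_{22}x_2 + a'_{23}x_3 + \dots + a'_{2n}x_n &= b'_2 \\
a''_{33}x_3 + \dots + a''_{3n}x_n &= b''_3 \\
&\;\;\vdots \\
a_{nn}^{(n-1)}x_n &= b_n^{(n-1)}
\end{aligned}
$$

You can notice that $x_n$ can be found directly using the last equation. Then, a back-substitution is performed:

$$x_n = \frac{b_n^{(n-1)}}{a_{nn}^{(n-1)}}$$

and

$$
x_i = \frac{b_i^{(i-1)} - \displaystyle\sum_{j=i+1}^{n} a_{ij}^{(i-1)}x_j}{a_{ii}^{(i-1)}}
\qquad \text{for } i = n-1, \dots, 1
$$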
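The two stages, forward elimination and back-substitution, can be sketched in Python as follows. This is a minimal illustration and not a production solver: the function name `naive_gauss` and the sample system are assumptions, and, being naïve, the code does nothing to avoid a zero pivot.

```python
def naive_gauss(a, b):
    """Solve [A]{x} = {b} by naive Gauss elimination (no pivoting)."""
    n = len(b)
    a = [row[:] for row in a]  # work on copies, keep the inputs intact
    b = b[:]

    # Forward elimination: remove x_k from every equation below the pivot row k
    for k in range(n - 1):
        for i in range(k + 1, n):
            factor = a[i][k] / a[k][k]  # division by zero if the pivot is 0
            for j in range(k, n):
                a[i][j] -= factor * a[k][j]
            b[i] -= factor * b[k]

    # Back-substitution: the last equation gives x_n, then move upward
    x = [0.0] * n
    x[n - 1] = b[n - 1] / a[n - 1][n - 1]
    for i in range(n - 2, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x


A = [[2, 1, 0], [1, 3, 1], [0, 1, 4]]  # hypothetical system with solution (1, 2, 3)
b = [4, 10, 14]
print(naive_gauss(A, b))
```

With a zero in the first pivot position, the same function stops with Python's `ZeroDivisionError`, which is the weakness that the word naïve refers to.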

II.3.1.1. Operation counting

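We can show that the total effort (number of arithmetic operations) in naïve Gauss elimination is, to leading order:

$$\frac{2n^3}{3} + n^2 \;\xrightarrow{\;n \text{ increases}\;}\; \frac{2n^3}{3}$$

The first term is due to forward elimination and the second to back-substitution.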

Two useful conclusions from this result:

- As the system gets larger, the computation time increases greatly.
- Most of the effort is incurred in the elimination step. Thus to improve the method, efforts have to be focused on this step.
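These orders of magnitude can be checked by counting the operations loop by loop. In the sketch below, counting every multiplication, division and subtraction as one operation is an assumed convention, and the function name `flop_counts` is hypothetical.

```python
def flop_counts(n):
    """Count arithmetic operations of naive Gauss elimination for n equations."""
    forward = 0
    for k in range(n - 1):
        for i in range(k + 1, n):
            forward += 1                # factor = a[i][k] / a[k][k]
            forward += 2 * (n - 1 - k)  # multiply and subtract in columns k+1..n
            forward += 2                # multiply and subtract on the right-hand side
    back = 1                            # x_n = b_n / a_nn
    for i in range(n - 2, -1, -1):
        back += 2 * (n - 1 - i) + 1     # products, subtractions and one division
    return forward, back


for n in (10, 100, 1000):
    forward, back = flop_counts(n)
    print(n, forward, back, round(forward / (2 * n**3 / 3), 3))
```

The last printed column is the ratio of the counted elimination effort to $2n^3/3$; it approaches 1 as n grows, while back-substitution stays at $n^2$ under this convention.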


II.3.1.2. Pitfalls of elimination methods

II.3.1.2.1. Division by zero

Example:

$$
\begin{aligned}
0x_1 + 4x_2 + 5x_3 &= 8 \\
7x_1 + 3x_2 + 1x_3 &= 5 \\
2x_1 + 1x_2 + 5x_3 &= 7
\end{aligned}
$$

Here the pivot $a_{11}$ is zero, so the factor $a_{21}/a_{11}$ in the above formula for Gauss elimination is a division by zero (similar trouble arises if the pivot is a very small number). The pivoting technique has been developed to avoid this problem.

II.3.1.2.2. Round-off errors

The large number of equations to be solved induces a propagation of the errors. A rough rule of thumb is that round-off error may be important when dealing with 100 or more equations.

Always substitute your answers back into the original equations to check whether a substantial error has occurred.

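II.3.1.2.3. Ill-conditioned systems

A well-conditioned system is one in which a small change in one or more of the coefficients results in a similarly small change in the solution. In an ill-conditioned system, a small change in the coefficients results in a large change in the solution.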

If we consider the simple system:

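$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 &= b_1 \\
a_{21}x_1 + a_{22}x_2 &= b_2
\end{aligned}
\qquad \text{thus} \qquad
\begin{aligned}
x_2 &= -\frac{a_{11}}{a_{12}}x_1 + \frac{b_1}{a_{12}} \\
x_2 &= -\frac{a_{21}}{a_{22}}x_1 + \frac{b_2}{a_{22}}
\end{aligned}
$$

If the slopes are nearly equal, it is an ill-conditioned system:

$$
\frac{a_{11}}{a_{12}} \approx \frac{a_{21}}{a_{22}}
\;\Rightarrow\; a_{11}a_{22} \approx a_{21}a_{12}
\;\Rightarrow\; a_{11}a_{22} - a_{21}a_{12} \approx 0
$$

This quantity is the determinant of the matrix:

$$
\begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix}
$$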

Therefore, an ill-conditioned system is a system with a determinant close to zero.

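In the special case of a determinant equal to zero, the system has no solution or an infinite number of solutions. However, you must be prudent when judging conditioning from the determinant alone: its magnitude depends on the scale of the coefficients, so multiplying an equation by a constant changes the determinant without changing the solution.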
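Both remarks can be illustrated on a small 2×2 system. The numbers below are a hypothetical example, not taken from the course, and `solve_2x2` is an assumed helper based on the elimination-of-unknowns formulas.

```python
def solve_2x2(a11, a12, b1, a21, a22, b2):
    """Return (determinant, x1, x2) for a 2x2 system."""
    det = a11 * a22 - a12 * a21
    x1 = (b1 * a22 - a12 * b2) / det
    x2 = (a11 * b2 - a21 * b1) / det
    return det, x1, x2


# Nearly equal slopes: the determinant is small
base = solve_2x2(1.0, 2.0, 10.0, 1.1, 2.0, 10.4)
# Changing a21 from 1.1 to 1.05 moves the solution a long way
perturbed = solve_2x2(1.0, 2.0, 10.0, 1.05, 2.0, 10.4)
# Multiplying both equations by 10 changes the determinant, not the solution
scaled = solve_2x2(10.0, 20.0, 100.0, 11.0, 20.0, 104.0)

print(base)       # solution close to (4, 3)
print(perturbed)  # solution close to (8, 1)
print(scaled)     # determinant 100 times larger, solution still close to (4, 3)
```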


II.3.1.2.4. Singular systems

II.3.1.2.4.1. Evaluation of the determinant using Gauss-elimination

We can show that for a triangular matrix, the determinant can be simply computed as the product of its diagonal elements:

$$D = a_{11}\,a_{22}\,a_{33} \cdots a_{nn}$$

The extreme case of ill-conditioning is when two equations are the same. We then have, in effect, n unknowns but only n-1 independent equations.

Such cases are not always obvious to detect when dealing with a large number of equations. We will develop, therefore, a technique to automatically detect such singularities.

- A singular system has a determinant equal to zero.
- After Gauss elimination, the matrix is triangular, so the determinant is equal to the product of the elements on the diagonal.
- Hence, we have to detect if a zero element is created on the diagonal during the elimination stage.
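A possible sketch of this detection is shown below. Two choices are assumptions added here and not stated in the text: rows are swapped (partial pivoting) so that a zero on the diagonal really means the matrix is singular, each swap changing the sign of the determinant, and a diagonal element is treated as zero when its magnitude is below the tolerance `tol`. The function name `det_by_elimination` is hypothetical.

```python
def det_by_elimination(a, tol=1e-12):
    """Determinant as the product of the diagonal after Gauss elimination."""
    n = len(a)
    a = [[float(v) for v in row] for row in a]
    sign = 1.0
    for k in range(n):
        # Bring the largest remaining element of column k onto the diagonal
        p = max(range(k, n), key=lambda r: abs(a[r][k]))
        if abs(a[p][k]) <= tol:
            return 0.0  # zero on the diagonal: singular system detected
        if p != k:
            a[k], a[p] = a[p], a[k]
            sign = -sign  # a row exchange flips the sign of the determinant
        for i in range(k + 1, n):
            factor = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= factor * a[k][j]
    det = sign
    for k in range(n):
        det *= a[k][k]
    return det


print(det_by_elimination([[2, 1, 0], [1, 3, 1], [0, 1, 4]]))  # close to 18
print(det_by_elimination([[1, 2], [1, 2]]))                   # 0.0: same equation twice
```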

II.3.1.3. Techniques for improving the solution

To circumvent some of the pitfalls discussed above, the following improvements can be used:

-a- More significant figures

You can increase the number of significant figures (for example, by using double precision); however, this has a price (computational time, memory, …).

-b- Pivoting

Problems may arise when a pivot is zero or close to zero. The idea of pivoting is to look for the largest element (in absolute value) in the same column, at or below the pivot, and then to switch the equation containing this element with the equation containing the zero or near-zero pivot. This is called partial pivoting.

If both the columns and the rows are searched for the largest element and then switched, this is called complete pivoting. It is rarely used, because switching columns changes the order of the unknowns and adds complexity to the program.
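Partial pivoting only adds a row search and a row exchange to the elimination loop, as the sketch below suggests. The function name `gauss_partial_pivot` is an assumption, and the test system reuses the coefficients of the division-by-zero example with all terms assumed to be added.

```python
def gauss_partial_pivot(a, b):
    """Solve [A]{x} = {b} by Gauss elimination with partial pivoting."""
    n = len(b)
    a = [[float(v) for v in row] for row in a]
    b = [float(v) for v in b]
    for k in range(n - 1):
        # Search column k, from the pivot row downward, for the largest element
        p = max(range(k, n), key=lambda r: abs(a[r][k]))
        if a[p][k] == 0.0:
            raise ValueError("singular system")
        if p != k:
            # Switch the two equations (rows of [A] and entries of {b})
            a[k], a[p] = a[p], a[k]
            b[k], b[p] = b[p], b[k]
        for i in range(k + 1, n):
            factor = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= factor * a[k][j]
            b[i] -= factor * b[k]
    if a[n - 1][n - 1] == 0.0:
        raise ValueError("singular system")
    # Back-substitution, unchanged from the naive method
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x


A = [[0, 4, 5], [7, 3, 1], [2, 1, 5]]  # a11 = 0: naive elimination would fail
b = [8, 5, 7]
x = gauss_partial_pivot(A, b)
residual = [sum(A[i][j] * x[j] for j in range(3)) - b[i] for i in range(3)]
print(x)
print(residual)  # substituting back: every entry should be close to zero
```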

-c- Scaling

It is important to use units that lead to the same order of magnitude for all the coefficients (for example, a voltage can be expressed in mV or in MV).

-d- Complex systems

If we have to solve a system with complex numbers, we can convert it into a real system:


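$$[C]\{Z\} = \{W\} \qquad (1)$$

Where:

$$
\begin{aligned}
[C] &= [A] + i[B] \\
\{Z\} &= \{X\} + i\{Y\} \\
\{W\} &= \{U\} + i\{V\}
\end{aligned}
\qquad (2)
$$

If we replace (2) into (1) and equate the real and imaginary parts:

$$
\begin{aligned}
[A]\{X\} - [B]\{Y\} &= \{U\} \\
[B]\{X\} + [A]\{Y\} &= \{V\}
\end{aligned}
$$

However, a system of n complex equations is thereby converted into a system of 2n real equations.

The only way to avoid this doubling is to use a programming language that allows complex data types (Fortran, Matlab, Scilab, Labview, …).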
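Python is another language with a built-in complex type, which makes it easy to compare the two routes. In the sketch below, the helper names `gauss_solve` and `to_real_system` and the 2×2 complex system are assumptions for illustration; the solver uses partial pivoting and does not check for singular systems.

```python
def gauss_solve(a, b):
    """Gauss elimination with partial pivoting, for real or complex entries."""
    n = len(b)
    a = [list(row) for row in a]
    b = list(b)
    for k in range(n - 1):
        p = max(range(k, n), key=lambda r: abs(a[r][k]))
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        for i in range(k + 1, n):
            factor = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= factor * a[k][j]
            b[i] -= factor * b[k]
    x = [0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x


def to_real_system(c, w):
    """Build the 2n real equations [A -B; B A]{X; Y} = {U; V}."""
    n = len(w)
    top = [[c[i][j].real for j in range(n)] + [-c[i][j].imag for j in range(n)]
           for i in range(n)]
    bottom = [[c[i][j].imag for j in range(n)] + [c[i][j].real for j in range(n)]
              for i in range(n)]
    rhs = [wi.real for wi in w] + [wi.imag for wi in w]
    return top + bottom, rhs


C = [[2 + 1j, 1 - 1j], [1 + 0j, 3 + 2j]]  # hypothetical complex system
W = [3 + 2j, 4 - 1j]

z_direct = gauss_solve(C, W)               # n complex equations
big, rhs = to_real_system(C, W)
xy = gauss_solve(big, rhs)                 # 2n real equations
z_real = [complex(xy[i], xy[i + 2]) for i in range(2)]
print(z_direct)
print(z_real)
```

Both routes should give the same {Z} up to round-off; the real route simply works on a matrix twice as large.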

II.3.1.4. Non-linear systems of equations

If we have to solve the following system of equations:

$$
\begin{aligned}
f_1(x_1, x_2, x_3, \dots, x_n) &= 0 \\
f_2(x_1, x_2, x_3, \dots, x_n) &= 0 \\
&\;\;\vdots \\
f_n(x_1, x_2, x_3, \dots, x_n) &= 0
\end{aligned}
$$

One approach is to use a multi-dimensional version of the Newton-Raphson method.

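If we write a first-order Taylor series expansion for each equation, then for the kth equation, for example:

$$
f_{k,i+1} = f_{k,i} + (x_{1,i+1} - x_{1,i})\frac{\partial f_{k,i}}{\partial x_1} + \dots + (x_{n,i+1} - x_{n,i})\frac{\partial f_{k,i}}{\partial x_n}
$$

$f_{k,i+1}$ is set to zero at the root, and we can write the system in the following form: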


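$$
[Z] = \begin{bmatrix}
\dfrac{\partial f_{1,i}}{\partial x_1} & \dfrac{\partial f_{1,i}}{\partial x_2} & \dots & \dfrac{\partial f_{1,i}}{\partial x_n} \\
\vdots & & & \vdots \\
\dfrac{\partial f_{n,i}}{\partial x_1} & \dfrac{\partial f_{n,i}}{\partial x_2} & \dots & \dfrac{\partial f_{n,i}}{\partial x_n}
\end{bmatrix}
$$

$$
\begin{aligned}
\{X_i\}^T &= [\, x_{1,i} \;\; x_{2,i} \;\; \dots \;\; x_{n,i} \,] \\
\{X_{i+1}\}^T &= [\, x_{1,i+1} \;\; x_{2,i+1} \;\; \dots \;\; x_{n,i+1} \,] \\
\{F_i\}^T &= [\, f_{1,i} \;\; f_{2,i} \;\; \dots \;\; f_{n,i} \,]
\end{aligned}
$$

And the system can be expressed as

$$[Z]\{X_{i+1}\} = -\{F_i\} + [Z]\{X_i\}$$

This system can be solved using Gauss elimination.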
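The iteration can be sketched for two equations as follows. The test problem ($x_1^2 + x_1x_2 = 10$ and $x_2 + 3x_1x_2^2 = 57$, started from (1.5, 3.5)), the function name `newton_system` and the stopping tolerance are assumptions chosen for illustration. Because the linear system is only 2×2, it is solved here with the closed-form elimination-of-unknowns result rather than a general Gauss elimination routine.

```python
def newton_system(x1, x2, tol=1e-10, max_iter=50):
    """Newton-Raphson for f1 = x1^2 + x1*x2 - 10 and f2 = x2 + 3*x1*x2^2 - 57."""
    for _ in range(max_iter):
        # {F_i}: function values at the current estimate
        f = [x1**2 + x1 * x2 - 10.0, x2 + 3.0 * x1 * x2**2 - 57.0]
        # [Z]: partial derivatives evaluated at the current estimate
        z = [[2.0 * x1 + x2, x1],
             [3.0 * x2**2, 1.0 + 6.0 * x1 * x2]]
        # Right-hand side of [Z]{X_{i+1}} = -{F_i} + [Z]{X_i}
        rhs = [-f[0] + z[0][0] * x1 + z[0][1] * x2,
               -f[1] + z[1][0] * x1 + z[1][1] * x2]
        # Solve the 2x2 linear system for {X_{i+1}}
        det = z[0][0] * z[1][1] - z[0][1] * z[1][0]
        new_x1 = (rhs[0] * z[1][1] - z[0][1] * rhs[1]) / det
        new_x2 = (z[0][0] * rhs[1] - z[1][0] * rhs[0]) / det
        converged = abs(new_x1 - x1) < tol and abs(new_x2 - x2) < tol
        x1, x2 = new_x1, new_x2
        if converged:
            return x1, x2
    raise RuntimeError("Newton-Raphson did not converge")


root = newton_system(1.5, 3.5)
print(root)  # converges toward (2, 3)
```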

II.3.1.5. Gauss Jordan

It is a variation of Gauss elimination. The differences are:

- When an unknown is eliminated from an equation, it is also eliminated from all other equations.
- All rows are normalized by dividing them by their pivot element.

Hence, the elimination step results in an identity matrix rather than a triangular matrix. Back substitution is, therefore, not necessary.

All the techniques developed for Gauss elimination are still valid for Gauss-Jordan elimination. However, Gauss-Jordan requires more computational work than Gauss elimination (approximately 50% more operations).
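The two differences can be seen in the following sketch, which works on the augmented matrix. The function name `gauss_jordan` and the sample system are assumptions; partial pivoting is included as one of the Gauss elimination techniques that carry over.

```python
def gauss_jordan(a, b):
    """Solve [A]{x} = {b} by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    # Augment [A] with the right-hand side {b}
    aug = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(a, b)]
    for k in range(n):
        p = max(range(k, n), key=lambda r: abs(aug[r][k]))
        if aug[p][k] == 0.0:
            raise ValueError("singular system")
        aug[k], aug[p] = aug[p], aug[k]
        # Normalize the pivot row by dividing it by the pivot element
        pivot = aug[k][k]
        aug[k] = [v / pivot for v in aug[k]]
        # Eliminate x_k from all other rows, above and below the pivot row
        for i in range(n):
            if i != k:
                factor = aug[i][k]
                aug[i] = [vi - factor * vk for vi, vk in zip(aug[i], aug[k])]
    # [A] has become the identity matrix: the last column is the solution
    return [row[n] for row in aug]


A = [[2, 1, 0], [1, 3, 1], [0, 1, 4]]  # hypothetical system with solution (1, 2, 3)
b = [4, 10, 14]
print(gauss_jordan(A, b))
```

No back-substitution loop appears: once the left block is the identity matrix, the augmented column holds the unknowns directly.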
