CEE6110 Probabilistic and Statistical Methods in Engineering Homework 9. Multiple Regression Solution

1. Weber Streamflow Multiple Regression

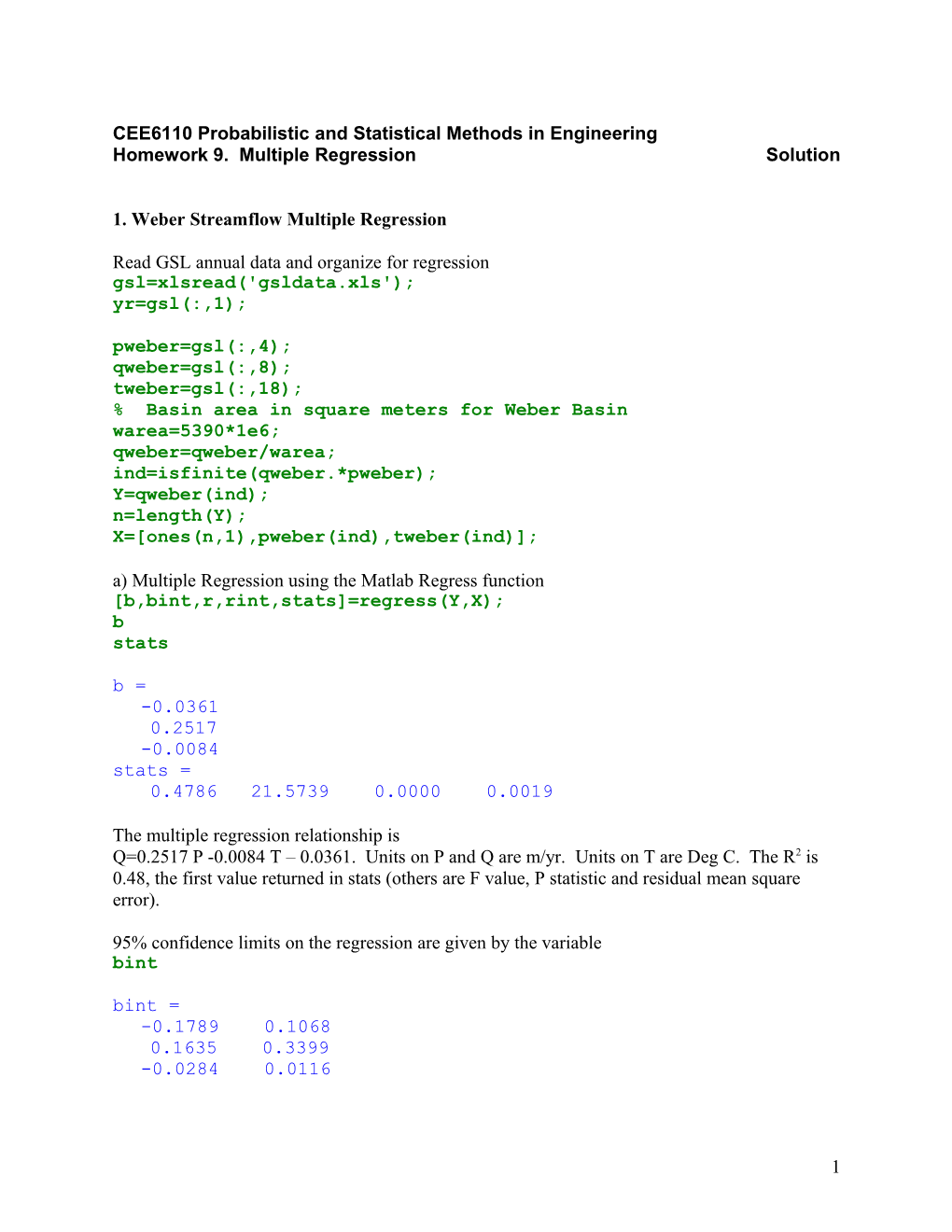

Read GSL annual data and organize for regression

    gsl=xlsread('gsldata.xls');
    yr=gsl(:,1);
    pweber=gsl(:,4);
    qweber=gsl(:,8);
    tweber=gsl(:,18);
    % Basin area in square meters for Weber Basin
    warea=5390*1e6;
    qweber=qweber/warea;
    ind=isfinite(qweber.*pweber);
    Y=qweber(ind);
    n=length(Y);
    X=[ones(n,1),pweber(ind),tweber(ind)];

a) Multiple Regression using the Matlab Regress function

    [b,bint,r,rint,stats]=regress(Y,X);
    b
    stats

    b =
       -0.0361
        0.2517
       -0.0084
    stats =
        0.4786   21.5739    0.0000    0.0019

The multiple regression relationship is Q = 0.2517 P - 0.0084 T - 0.0361. Units on P and Q are m/yr. Units on T are deg C. The R2 is 0.48, the first value returned in stats (the others are the F statistic, its p-value, and the residual mean square error).
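The four values in stats can be reproduced from the residuals alone. The following is a minimal sketch, assuming synthetic stand-in data (not the GSL data) and using only base MATLAB; the variable names P, T, Q, SSE, SST, s2 and F are illustrative and do not come from the original script.

```matlab
% Synthetic stand-in data (NOT the GSL data): 40 "years" of P and T.
rng(1);                                  % reproducible random numbers
n = 40;
P = 0.4 + 0.6*rand(n,1);
T = 4 + 3*rand(n,1);
Q = -0.04 + 0.25*P - 0.008*T + 0.04*randn(n,1);

X = [ones(n,1), P, T];                   % design matrix with intercept column
b = X \ Q;                               % least-squares coefficients
r = Q - X*b;                             % residuals
p = size(X,2);                           % number of fitted parameters

SSE = sum(r.^2);                         % residual sum of squares
SST = sum((Q - mean(Q)).^2);             % total sum of squares
R2  = 1 - SSE/SST;                       % coefficient of determination
s2  = SSE/(n - p);                       % residual mean square error
F   = ((SST - SSE)/(p - 1)) / s2;        % overall F statistic
fprintf('R2 = %.4f, F = %.2f, s2 = %.5f\n', R2, F, s2);
```

With real data, R2, F and s2 computed this way should correspond to the first, second and fourth entries of stats.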

95% confidence limits on the regression coefficients are given by the variable bint

    bint =
       -0.1789    0.1068
        0.1635    0.3399
       -0.0284    0.0116

Stated in terms of confidence intervals, the regression is Q = a + b P + c T where

    -0.18  < a < 0.11
     0.16  < b < 0.34
    -0.028 < c < 0.012

Interpreting these, neither a nor c appears to be significantly different from 0. The stepwise regression in part b will address this.
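For reference, intervals of this kind follow the standard t-based form for a least-squares coefficient. The notation below (n observations, p fitted parameters, residuals r_i, significance level alpha) is introduced here for illustration and is not taken from the original solution.

```latex
b_j \;\pm\; t_{1-\alpha/2,\;n-p}\; s\,\sqrt{\left[(X^{\mathsf T}X)^{-1}\right]_{jj}},
\qquad
s^2 = \frac{1}{n-p}\sum_{i=1}^{n} r_i^2
```

An interval that contains 0, as for a and c here, is equivalent to the coefficient's t statistic being smaller in magnitude than the critical t value at the same level.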

For completeness let's examine the data and residuals visually.

Predicted versus observed plot

    yp=X*b;
    plot(Y,yp,'.')
    line([0,0.24],[0,0.24])
    xlabel('Observed')
    ylabel('Modeled')
    title('Weber Streamflow predicted from T and P')

[Figure: "Weber Streamflow predicted from T and P"; Modeled versus Observed.]

This has a subtle suggestion of nonlinearity in that the high observed flows are undermodeled. A step down the ladder of powers, e.g. a log transform of the output, might address this, but because this effect is small I did not pursue it.

    subplot(2,1,1)
    plot(X(:,2),r,'.')
    xlabel('Precipitation')
    ylabel('Residual')
    ylim([-0.1, 0.15])
    subplot(2,1,2)
    plot(X(:,3),r,'.')
    xlabel('Temperature')
    ylabel('Residual')

[Figure: Residual versus Precipitation (top) and Residual versus Temperature (bottom).]

These plots are generally good. There are subtle suggestions of heteroskedasticity, with variance increasing with P and decreasing with T, but these effects are not very pronounced.

    subplot(2,1,1)
    plot(yp,r,'.')
    xlabel('Predicted')
    ylabel('Residual')
    ylim([-0.1, 0.15])
    subplot(2,1,2)
    probplot(r)
    xlabel('Residual')
    ylabel('Probability')

[Figure: Residual versus Predicted (top); "Probability plot for Normal distribution" of the residuals (bottom).]

Interpreting these figures, the residuals do appear to be normally distributed and not related to either of the explanatory variables or the regression predictions themselves.

b) Stepwise regression

    stepwise(X(:,[2:3]),Y)

Following is the result

[Stepwise output: "Coefficients with Error Bars" and "Model History" (RMSE versus step).]

          Coeff.         t-stat    p-val
    X1     0.265362      6.5338    0.0000
    X2    -0.00838187   -0.8442    0.4029

Following are the figures that are produced by the added variable tool.

[Figure: "Added variable plot for X2, Adjusted for X1"; Y residuals versus X2 residuals; legend: Adjusted data, Fit: y=-0.00838187*x, 95% conf. bounds.]

[Figure: "Partial regression leverage plot for X1"; Y residuals versus X1 residuals; legend: Adjusted data, Fit: y=0.265362*x, 95% conf. bounds.]

The first figure above, which Matlab refers to as an added variable plot, appears when X2 is selected. X2 was not an explanatory variable at the end of the stepwise regression process, so this plot shows the residuals that would result versus X2 if X2 were to be included. The fact that there is no trend in this graph supports leaving X2 out of the regression.

The second figure above is what Helsel and Hirsch (page 301) refer to as a partial residual plot, and it shows the residuals that would result if X1 were left out of the regression. The linear relationship between X1 residuals and Y residuals is supportive of X1 being included. Dashed lines on these plots depict 95% confidence limits on the slope parameter, computed from a t distribution (e.g. Kottegoda and Rosso 6.2.18).
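The construction behind an added variable plot can be sketched without the stepwise tool. The example below uses synthetic stand-in data (not the Weber data) and base MATLAB only; x1, x2, ey, ex and slopeAV are illustrative names. It regresses both the response and the candidate variable on the variables already in the model, then plots one set of residuals against the other. The slope of that plot equals the coefficient the candidate would receive in the full regression.

```matlab
% Synthetic stand-in data (NOT the Weber data).
rng(2);
n  = 50;
x1 = 0.4 + 0.6*rand(n,1);                % plays the role of precipitation
x2 = 4 + 3*rand(n,1) - 2*x1;             % second predictor, correlated with x1
y  = -0.09 + 0.27*x1 + 0.04*randn(n,1);  % y depends on x1 only

A  = [ones(n,1), x1];                    % predictors already in the model
ey = y  - A*(A\y);                       % y residuals after removing x1
ex = x2 - A*(A\x2);                      % x2 residuals after removing x1
slopeAV = ex \ ey;                       % slope of the added variable plot

bFull = [ones(n,1), x1, x2] \ y;         % full regression for comparison
fprintf('added-variable slope = %.6f, full-model b(3) = %.6f\n', slopeAV, bFull(3));

plot(ex, ey, '.'); hold on
plot(ex, slopeAV*ex, '-'); hold off
xlabel('X2 residuals'); ylabel('Y residuals')
```

A near-zero slope with wide scatter, as in this synthetic case where y does not depend on x2, is the pattern that argues against adding the variable.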

Taken together these plots indicate that the first variable (Precipitation) is a significant explanatory variable, but that adding temperature is not justified in terms of the additional explanatory power it provides. The added variable plot for X2 (temperature) above shows this. The 95% confidence bounds on the coefficient associated with temperature include 0, so the coefficient is not significantly different from 0.

The stepwise regression relationship is therefore Q=0.265 P – 0.091.

R2 is 0.47. Mean square error of residuals is

    0.0436^2

    ans =
        0.0019

Use regress to confirm regression statistics and obtain 95% confidence intervals

    [b,bint,r,rint,stats]=regress(Y,X(:,[1,2]));
    b
    bint
    stats

    b =
       -0.0913
        0.2654
    bint =
       -0.1466   -0.0359
        0.1837    0.3470
    stats =
        0.4707   42.6908    0.0000    0.0019

The result is Q = a P + b with

    -0.1466 < b < -0.0359
     0.1837 < a <  0.3470

Testing residuals

    yp=X(:,[1,2])*b;
    plot(Y,yp,'.')
    line([0,0.24],[0,0.24])
    xlabel('Observed')
    ylabel('Modeled')
    title('Weber Streamflow predicted from P only')

[Figure: "Weber Streamflow predicted from P only"; Modeled versus Observed.]

This has the same subtle suggestion of nonlinearity as noted for the T and P regression above. The high observed flows are slightly undermodeled.

    subplot(3,1,1)
    plot(X(:,2),r,'.')
    xlabel('Precipitation')
    ylabel('Residual')
    ylim([-0.1, 0.15])
    subplot(3,1,2)
    plot(yp,r,'.')
    xlabel('Predicted')
    ylabel('Residual')
    ylim([-0.1, 0.15])
    subplot(3,1,3)
    probplot(r)
    xlabel('Residual')
    ylabel('Probability')

[Figure: Residual versus Precipitation (top); Residual versus Predicted (middle); "Probability plot for Normal distribution" of the residuals (bottom).]

The residuals are normal and not obviously correlated with the explanatory or predicted variables.

Kottegoda and Rosso (page 374) also present an example of plotting the residuals against the dependent variable. This is shown below and does show a linear relationship. This is to be expected and does not indicate a problem with the regression: residuals are constructed as the dependent variable minus the prediction, so large residuals are expected for large values of the dependent variable.

    plot(Y,r,'.')
    xlabel('Streamflow')
    ylabel('Residual')

[Figure: Residual versus Streamflow.]
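The strength of the trend between residuals and the dependent variable is fixed by R2: for a least-squares fit with an intercept, the correlation between Y and the residuals equals sqrt(1 - R2), which for R2 = 0.47 is about 0.73. The sketch below checks this identity on synthetic stand-in data (not the Weber data) with base MATLAB; all variable names are illustrative.

```matlab
% Synthetic stand-in data (NOT the Weber data).
rng(3);
n = 60;
x = 0.4 + 0.6*rand(n,1);
y = -0.09 + 0.27*x + 0.04*randn(n,1);

X  = [ones(n,1), x];
b  = X \ y;                              % least-squares fit with intercept
r  = y - X*b;                            % residuals
R2 = 1 - sum(r.^2)/sum((y - mean(y)).^2);

c = corrcoef(y, r);                      % 2-by-2 correlation matrix
fprintf('corr(y,r) = %.4f, sqrt(1-R2) = %.4f\n', c(1,2), sqrt(1 - R2));
```

Because the two printed numbers always agree, a linear pattern in this particular plot carries no diagnostic information beyond R2 itself.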

c) Use regstats to obtain leverage and Cook's distance

    stats = regstats(Y,X(:,2),'linear','all');
    subplot(2,1,1)
    plot(X(:,2),stats.leverage,'.')
    xlabel('Precipitation')
    ylabel('Leverage')
    subplot(2,1,2)
    plot(X(:,2),stats.cookd,'.')
    xlabel('Precipitation')
    ylabel('Cook Distance')

[Figure: Leverage versus Precipitation (top); Cook Distance versus Precipitation (bottom).]

As is expected for linear regression the leverage increases for the points near the edge of the domain. This is of course only the diagonal of the hat matrix giving the leverage of each point on the regression at its own location. The Cook distances are less than the value of 1 that would flag them as outliers. Nevertheless there is some concern that larger Cook's distances are all for the larger precipitation values where the regression is underestimating the flow. There is a suggestion of nonlinearity that this model is not capturing.
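Leverage and Cook's distance can also be computed directly from the design matrix, which makes the "diagonal of the hat matrix" remark concrete. This is a minimal sketch on synthetic stand-in data (not the Weber data) using base MATLAB only; it is not a description of how regstats is implemented, and the names Qf, h and cookd are illustrative.

```matlab
% Synthetic stand-in data (NOT the Weber data).
rng(4);
n = 45;
x = 0.4 + 0.6*rand(n,1);
y = -0.09 + 0.27*x + 0.04*randn(n,1);
X = [ones(n,1), x];
p = size(X,2);                           % number of fitted parameters

[Qf, ~] = qr(X, 0);                      % economy QR, so the hat matrix is Qf*Qf'
h  = sum(Qf.^2, 2);                      % leverage = diagonal of the hat matrix
r  = y - X*(X\y);                        % residuals
s2 = sum(r.^2)/(n - p);                  % residual mean square

% Cook's distance from residual, leverage and residual mean square
cookd = (r.^2 ./ (p*s2)) .* h ./ (1 - h).^2;

subplot(2,1,1); plot(x, h, '.');     xlabel('x'); ylabel('Leverage')
subplot(2,1,2); plot(x, cookd, '.'); xlabel('x'); ylabel('Cook Distance')
```

For a single predictor the leverage reduces to 1/n + (x - mean(x))^2 / sum((x - mean(x)).^2), which is why it grows toward the edges of the precipitation range.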

2. Great Salt Lake Volume change prediction.

Read GSL annual data and use in stepwise regression

    gsl=xlsread('gsldata.xls');
    ind=isfinite(gsl(:,7).*gsl(:,17)); % To avoid missing data
    yr=gsl(ind,1);
    plake=gsl(ind,2);
    tlake=gsl(ind,17);
    qbear=gsl(ind,7);
    qweber=gsl(ind,8);
    qjordan=gsl(ind,9);
    larea=gsl(ind,12);
    lvol=gsl(ind,13);
    volinv=1./lvol;
    dvol=gsl(ind,11)-gsl(ind,10);
    X=[plake,tlake,qbear,qweber,qjordan,larea,lvol,volinv];
    Y=dvol;
    stepwise(X,Y)

a) Stepwise regression selects the variables qbear, lvol and plake with the following equation

    Dvol=-2.9e9+7.02e9*plake+1.879*qbear-0.1145*lvol    (1)

R2=0.955

    MSE=(4.4244e8)^2

    MSE =
      1.9575e+017

The stepwise output is shown

[Stepwise output: "Coefficients with Error Bars" and "Model History" (RMSE versus step).]

          Coeff.           t-stat     p-val
    X1     7.02029e+009     7.4773    0.0000
    X2    -1.49796e+008    -1.5781    0.1226
    X3     1.87905         19.5388    0.0000
    X4     0.633176         1.2337    0.2247
    X5     4.63647          1.0911    0.2819
    X6    -0.0258063       -0.0690    0.9454
    X7    -0.114591        -8.6576    0.0000
    X8     4.44933e+018     0.2939    0.7704

The coefficients with error bars graph above is not very informative because the variables shown have different scales. Following is the stepwise output with scaled coefficients plotted.

[Stepwise output: "Scaled Coefficients with Error Bars" and "Model History" (RMSE versus step).]

          Coeff.           t-stat     p-val
    X1     6.05558e+008     7.4773    0.0000
    X2    -1.12329e+008    -1.5781    0.1226
    X3     1.67015e+009    19.5388    0.0000
    X4     2.04996e+008     1.2337    0.2247
    X5     9.79375e+007     1.0911    0.2819
    X6    -2.00921e+007    -0.0690    0.9454
    X7    -6.37732e+008    -8.6576    0.0000
    X8     6.02254e+007     0.2939    0.7704
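One way to read scaled coefficients is as the change in the response per standard deviation of each input. The sketch below assumes that the scaled view corresponds to regressing on z-scored inputs (an assumption about the stepwise tool, not something stated in the solution) and uses synthetic stand-in data with two columns on very different scales. Under that assumption each scaled coefficient is the raw coefficient multiplied by the standard deviation of its input.

```matlab
% Synthetic stand-in data (NOT the GSL data): two inputs on very different scales.
rng(5);
n = 50;
X = [0.2 + 0.4*rand(n,1), 1e9*(1 + 4*rand(n,1))];
y = -3e9 + 7e9*X(:,1) + 1.9*X(:,2) + 4e8*randn(n,1);

bRaw = [ones(n,1), X] \ y;               % coefficients in original units
Z    = (X - mean(X)) ./ std(X);          % z-scores (implicit expansion, R2016b or later)
bZ   = [ones(n,1), Z] \ y;               % coefficients per standard deviation

% Columns: raw coefficient, z-score coefficient, raw coefficient times std
disp([bRaw(2:3), bZ(2:3), bRaw(2:3).*std(X)']);
```

The t statistics and p-values are unchanged by this rescaling, which is consistent with the identical t-stat and p-val columns in the unscaled and scaled tables.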

An alternate, arguably more physical model would take area as the explanatory variable in preference to lvol. The stepwise output for this set of variables is

[Stepwise output: "Scaled Coefficients with Error Bars" and "Model History" (RMSE versus step).]

          Coeff.           t-stat     p-val
    X1     6.44225e+008     7.6335    0.0000
    X2    -9.35467e+007    -1.2212    0.2293
    X3     1.62568e+009    18.3904    0.0000
    X4     3.09602e+008     1.8139    0.0774
    X5     1.23934e+008     1.2998    0.2013
    X6    -6.06558e+008    -7.9681    0.0000
    X7    -6.17897e+008    -2.0797    0.0442
    X8    -5.48211e+008    -0.9386    0.3537

The resulting model is

    Dvol=-2.0e9+7.469e9*plake+1.829*qbear-0.779*larea    (2)

R2=0.95

    MSE=(4.66303e8)^2

    ans =
      2.1744e+017

b) To obtain confidence limits and assess the adequacy of the regression use the Matlab regress function

Model 1

    [b,bint,r,rint,stats]=regress(Y,[ones(length(Y),1),X(:,[1,3,7])]);
    bint(1,:) % These accessed separately so that values are not lost in rounding
    bint(2,:)
    bint(3,:)
    bint(4,:)

    ans =
      1.0e+009 *
       -3.6525   -2.1452
    ans =
      1.0e+009 *
        5.1227    8.9178
    ans =
        1.6847    2.0734
    ans =
       -0.1413   -0.0878

The model is

    Dvol = β1 + β2 plake + β3 qbear + β4 lvol

with 95% confidence intervals

    -3.65e9  < β1 < -2.15e9
     5.123e9 < β2 <  8.918e9
     1.685   < β3 <  2.073
    -0.141   < β4 < -0.0878

Test the residuals for normality and independence

Modeled versus observed plot

    yp=[ones(length(Y),1),X(:,[1,3,7])]*b;
    plot(Y,yp,'.')
    line([-4e9,6e9],[-4e9,6e9])
    xlabel('Observed')
    ylabel('Modeled')

[Figure: Modeled versus Observed for model 1; both axes scaled by 10^9.]

    subplot(3,1,1)
    plot(yp,r,'.')
    xlabel('Predicted')
    ylabel('Residual')
    subplot(3,1,2)
    plot(X(:,1),r,'.')
    xlabel('plake')
    ylabel('Residual')
    subplot(3,1,3)
    plot(X(:,3),r,'.')
    xlabel('qbear')
    ylabel('Residual')

[Figure: Residual versus Predicted (top), versus plake (middle) and versus qbear (bottom) for model 1.]

    subplot(2,1,1)
    plot(X(:,7),r,'.')
    xlabel('lvol')
    ylabel('Residual')
    subplot(2,1,2)
    probplot(r)
    xlabel('Residual')
    ylabel('Probability')

[Figure: Residual versus lvol (top); "Probability plot for Normal distribution" of the residuals (bottom) for model 1.]

From these diagnostic plots the residuals appear normal and uncorrelated, and this is a suitable model.

Model 2 (with larea as an explanatory variable)

    [b,bint,r,rint,stats]=regress(Y,[ones(length(Y),1),X(:,[1,3,6])]);
    bint(1,:) % These accessed separately so that values are not lost in rounding
    bint(2,:)
    bint(3,:)
    bint(4,:)

    ans =
      1.0e+009 *
       -3.0245   -1.0760
    ans =
      1.0e+009 *
        5.4912    9.4460
    ans =
        1.6280    2.0300
    ans =
       -0.9767   -0.5815

The model is

    Dvol = β1 + β2 plake + β3 qbear + β4 larea

with 95% confidence intervals

    -3.02e9 < β1 < -1.08e9
     5.49e9 < β2 <  9.45e9
     1.628  < β3 <  2.03
    -0.977  < β4 < -0.582

Test the residuals for normality and independence

Modeled versus observed plot

    yp=[ones(length(Y),1),X(:,[1,3,6])]*b;
    plot(Y,yp,'.')
    line([-4e9,6e9],[-4e9,6e9])
    xlabel('Observed')
    ylabel('Modeled')

[Figure: Modeled versus Observed for model 2; both axes scaled by 10^9.]

    subplot(3,1,1)
    plot(yp,r,'.')
    xlabel('Predicted')
    ylabel('Residual')
    subplot(3,1,2)
    plot(X(:,1),r,'.')
    xlabel('plake')
    ylabel('Residual')
    subplot(3,1,3)
    plot(X(:,3),r,'.')
    xlabel('qbear')
    ylabel('Residual')

[Figure: Residual versus Predicted (top), versus plake (middle) and versus qbear (bottom) for model 2.]

    subplot(2,1,1)
    plot(X(:,6),r,'.')
    xlabel('larea')
    ylabel('Residual')
    subplot(2,1,2)
    probplot(r)
    xlabel('Residual')
    ylabel('Probability')

[Figure: Residual versus larea (top); "Probability plot for Normal distribution" of the residuals (bottom) for model 2.]

From these diagnostic plots the residuals also appear normal and uncorrelated for model 2.

c) Discussion. The scaled inputs view in the stepwise regression toolbar gives the following:

[Stepwise output: "Scaled Coefficients with Error Bars" and "Model History" (RMSE versus step).]

          Coeff.           t-stat     p-val
    X1     1.48162e+009     6.9224    0.0000
    X2    -7.85921e+008    -2.7219    0.0094
    X3     1.77256e+009    11.6204    0.0000
    X4     1.74901e+009    11.0084    0.0000
    X5     5.41125e+008     1.7928    0.0802
    X6    -1.3329e+008     -0.4265    0.6719
    X7    -1.24632e+008    -0.3987    0.6921
    X8     1.17553e+008     0.3760    0.7088

This indicates that X3 and X4 (qbear and qweber) are the strongest predictors of lake volume change, followed by X1 (precipitation on the lake) and X5 (Jordan river flow). This makes physical sense. The predictors related to lake volume, X6, X7 and X8, do not show significant explanatory power at this stage. However, once the predictor X3 (qbear) has been included the lake volume variables take on explanatory power. This suggests that the explanatory capability of these variables is limited compared to the streamflow and direct precipitation on the lake, but still important because they explain a different aspect of the lake volume response. Physically this is likely to be the effect of lake area on evaporation loss.

Physically it is informative to examine the magnitude of the regression coefficients with respect to the mass balance equation for the lake. The mass balance equation is

    dvol=plake+qbear+qweber+qjordan-evaporation loss (+- other effects such as groundwater inflow)

The regression coefficients for plake and qbear in the models are 7e9 and 1.9. The average lake area is

    mean(larea)

    ans =
      3.9781e+009

From pure mass balance considerations one would expect the coefficient on plake to be the lake area, because the volume of lake input from precipitation is precipitation depth times area. One would also expect the coefficient on qbear to be close to 1, because the streamflow adds directly to volume. The fact that the regression coefficients are larger than these "physical" values is due to these variables serving to quantify other effects, such as inflow from the other streams and also potentially evaporation loss. From the coefficient on lake area in model 2 being -0.78 one might infer that the annual evaporation loss from the lake is on the order of 0.78 m; however, just as the plake and qbear coefficients were not exactly what would be physically inferred, this inference is similarly uncertain.
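The comparison between fitted and "physical" coefficients can be written out explicitly. In the sketch below the symbols A (lake area), P (precipitation depth on the lake), E (evaporation depth) and the Q terms are notation introduced here for illustration; the numbers are the ones quoted above.

```latex
\Delta V \;=\; A\,P \;+\; Q_{\text{bear}} + Q_{\text{weber}} + Q_{\text{jordan}} \;-\; A\,E \;\;(\pm\ \text{other effects})

\frac{\hat\beta_{\text{plake}}}{\bar A} \approx \frac{7.0\times 10^{9}}{3.98\times 10^{9}} \approx 1.8,
\qquad
\frac{\hat\beta_{\text{qbear}}}{1} \approx 1.9
```

Both fitted coefficients are roughly 1.8 to 1.9 times their mass balance values, which is what one would expect if plake and qbear are also standing in for inputs that are correlated with them but are not in the model.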

Another concern in this model is multi-collinearity (see Helsel and Hirsch page 305)

Evaluate variance inflation factor (VIF)

    nv=size(X);
    Xm=mean(X);
    for i=1:nv(1)
        Xr(i,:)=X(i,:)./Xm; % Rescale to avoid problems with numerics
    end
    for iy=1:nv(2)
        ic=1;
        clear ij
        for j=1:nv(2)
            if j ~=iy;
                ij(ic)=j;
                ic=ic+1;
            end
        end
        yt=Xr(:,iy);
        xt=[ones(nv(1),1),Xr(:,ij)];
        [b,bint,r,rint,stats]=regress(yt,xt);
        r2(iy)=stats(1);
        vif(iy)=1/(1-r2(iy));
    end
    r2
    vif

    r2 =
        0.4632    0.1848    0.8680    0.8617    0.5577    0.9976    0.9825    0.9953
    vif =
        1.8630    1.2267    7.5745    7.2282    2.2611  417.4532   57.2264  210.9333

    subplot(2,1,1);
    scatter(1:8,r2);
    xlabel('variable');
    ylabel('R2')
    subplot(2,1,2);
    scatter(1:8,vif);
    xlabel('variable');
    ylabel('VIF')

[Figure: R2 versus variable number (top); VIF versus variable number (bottom).]

We see that VIF is large for the last 3 variables (lake area, lake volume and inverse lake volume), indicating strong collinearity between these. Only one should be included in the regression at a time. There are also fairly significant collinearities between the three streamflow variables, but none greater than the threshold of 10 that Helsel and Hirsch indicate.
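The VIF values can be cross-checked in one line, because the VIF of each variable equals the corresponding diagonal element of the inverse of the correlation matrix of the predictors. The sketch below uses synthetic stand-in data (not the GSL data) and base MATLAB only; vifFast and vifLoop are illustrative names, and the loop repeats the regress-each-column-on-the-others definition for comparison.

```matlab
% Synthetic stand-in data (NOT the GSL data).
rng(6);
n  = 60;
x1 = randn(n,1);
x2 = randn(n,1);
x3 = x1 + 0.1*randn(n,1);                % nearly collinear with x1
X  = [x1, x2, x3];

% VIF as the diagonal of the inverse correlation matrix
vifFast = diag(inv(corrcoef(X)))';

% Same quantity from the definition: regress each column on the others
k = size(X,2);
vifLoop = zeros(1,k);
for j = 1:k
    others = [ones(n,1), X(:, [1:j-1, j+1:k])];
    yj = X(:,j);
    rj = yj - others*(others\yj);
    R2 = 1 - sum(rj.^2)/sum((yj - mean(yj)).^2);
    vifLoop(j) = 1/(1 - R2);
end
disp([vifFast; vifLoop]);                % the two rows should agree
```

Because correlations are unaffected by rescaling each column, this route does not need the division by column means. With very strongly collinear columns the matrix inverse can itself become ill-conditioned, so the loop form remains the safer check.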

3. Streamflow as a function of Watershed Attributes

Reading in and organizing the data

    watershed=xlsread('watershed.xls','global model');
    [ind,char]=xlsread('watershed.xls','variables');
    abname=char(1:34,2);  % abbreviated variable name
    catname=char(1:34,5); % variable category

The explanatory variables are in columns 1 to 34 of watershed as follows:

     1 AWCH_AVE    13 MAXWD_WS      25 SHAPE1
     2 BDH_AVE     14 MEANP_WS      26 SHAPE2
     3 CARB        15 MINP_WS       27 SQ_KM
     4 DDEN        16 MINWD_WS      28 TMAX_WS
     5 ELEV_MAX    17 OMH_AVE       29 TMEAN_WS
     6 ELEV_MIN    18 PRMH_AVE      30 TMIN_WS
     7 ELEV_STD    19 RDH_AVE       31 WTDH_AVE
     8 ELEV_WS     20 RH_WS         32 XWD_WS
     9 FST32AVE    21 RRMEAN        33 USGS_Area_mi2
    10 KFCT_AVE    22 RRMEDIAN      34 USGS_Area_km2
    11 LST32AVE    23 SD_TMAX_WS
    12 MAXP_WS     24 SD_TMIN_WS

The response variable of interest, QMEAN is in column 36.

Calculate relief and relief ratio

    relief=watershed(:,5)-watershed(:,6);
    relrat=relief./sqrt(watershed(:,34)*1000000); % USGS area in m^2
    X=[watershed(:,[1:26,28:32,34]),relief,relrat];
    % Leave out the area in hectares because we already have it in km2
    varname=abname([1:26,28:32,34]);
    varcat=catname([1:26,28:32,34]);
    varname{length(varname)+1}='Relief';      % add on Relief name
    varname{length(varname)+1}='ReliefRatio'; % add on Relief ratio
    varcat{length(varcat)}='GIS';             % last one is USGS area
    varcat{length(varcat)+1}='TOPO';          % Classify these as topo
    varcat{length(varcat)+1}='TOPO';
    Y=watershed(:,36);

Initial exploratory analysis of the data. The following script implements rudimentary exploratory data analysis.

explore.m

    nr=5;
    nc=3;
    nf=ceil(length(varname)/(nr*nc));
    for i1=1:nf
        tt=figure();
        set(tt,'Position',[420,530,840,630])
        for i2=1:nc
            if((i2-1)*nr+(i1-1)*nr*nc

[The remainder of the explore.m listing is missing.]

[Figures: scatter plots of QMean against each explanatory variable, each annotated with its correlation coefficient.]

The numbers in the scatter plots are correlations, with the text font sized according to their magnitude. From this initial exploration we see the following variables with significant correlations:

- Average relative humidity (0.43)
- Number of wet days (0.50)
- Wettest month precipitation (0.51)
- Basin area (0.54)
- Mean annual precipitation (0.55)
- Relief (0.35)
- Min elevation (0.36)

After accounting for basin size, climate variables are dominating, followed by topography.

Stepwise Regression.

    clear beta betaci ans coeftab history stats in out % Clear variables for repeat runs
    stepwise(X,Y)

[Stepwise output: "Coefficients with Error Bars" (only rows X27 to X34 are visible) and "Model History" (RMSE versus step).]

           Coeff.        t-stat     p-val
    X27      0.297825     0.3881    0.6982
    X28      0.373437     0.3003    0.7641
    X29      1.0353       1.1332    0.2580
    X30   -137.96        -1.6043    0.1097
    X31     -0.341919    -0.2484    0.8040
    X32      0.427049    16.3407    0.0000
    X33      0.0273738    3.8855    0.0001
    X34    -79.8242      -1.8039    0.0722

The variables selected were the following:

    varselect=[12, 32, 14, 33, 9, 2, 7, 1];
    varname(varselect)

    ans =
        'MAXP_WS'
        'USGS_Area_km2'
        'MEANP_WS'
        'Relief'
        'FST32AVE'
        'BDH_AVE'
        'ELEV_STD'
        'AWCH_AVE'

The first 4 variables that enter the regression are consistent with the correlations, but some of those that follow, like FST32AVE (mean day of first freeze) and BDH_AVE (soil bulk density), have low correlation.
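The screening step, ranking candidate variables by the magnitude of their correlation with the response, can be done numerically as well as by eye. The sketch below uses synthetic stand-in data and hypothetical attribute names (A1 to A4); nothing in it comes from watershed.xls.

```matlab
% Synthetic stand-in data with hypothetical attribute names.
rng(7);
n = 100;
X = randn(n,4);
names = {'A1','A2','A3','A4'};
Y = 2*X(:,3) - 1*X(:,1) + randn(n,1);    % response driven mainly by A3, then A1

rho = zeros(1, size(X,2));
for j = 1:size(X,2)
    c = corrcoef(X(:,j), Y);             % 2-by-2 correlation matrix
    rho(j) = c(1,2);                     % correlation of column j with Y
end

[~, order] = sort(abs(rho), 'descend');  % strongest correlations first
for j = order
    fprintf('%-4s %7.3f\n', names{j}, rho(j));
end
```

A ranking like this only measures marginal, linear association, which is one reason variables with low simple correlation can still enter a stepwise regression once other variables are in the model.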
The stepwise variables were exported and from the history we find that the sequence of root mean square errors is

    history.rmse

    ans =
      624.9804  539.8462  395.5490  367.9412  358.1751  347.4912  341.8770  340.2928  338.6557

Following the first 5 variables the RMSE reductions are small.

The regression parameters are:

    for i=1:8
        beta(varselect(i))
    end

    ans = -6.9673
    ans = 0.4270
    ans = 1.8720
    ans = 0.0274
    ans = 5.2428
    ans = 438.0282
    ans = -0.0814
    ans = -1.5984e+003

Verifying using regress

    [b,bint,r,rint,stats]=regress(Y,[ones(length(Y),1),X(:,varselect)]);
    for i=1:9
        b(i)
    end

    ans = -2.6722e+003
    ans = -6.9673
    ans = 0.4270
    ans = 1.8720
    ans = 0.0274
    ans = 5.2428
    ans = 438.0282
    ans = -0.0814
    ans = -1.5984e+003

The regression equation is

    QMEAN = -2672 - 6.967 MAXP_WS + 0.427 USGS_Area_km2 + 1.87 MEANP_WS + 0.027 Relief + 5.24 FST32AVE + 438 BDH_AVE - 0.0814 ELEV_STD - 1598 AWCH_AVE

Interpreting this equation is challenging due to the different units involved. Some of the terms have the wrong physical sense, such as the negative coefficient on MAXP_WS.

Examine residuals

    yp=[ones(length(Y),1),X(:,varselect)]*b;
    plot(Y,yp,'.')
    line([0,3e3],[0,3e3])
    xlabel('Observed')
    ylabel('Modeled')

[Figure: Modeled versus Observed.]

The curvature in this suggests that some transformation of Y down the ladder of powers is required.

Check independence and heteroskedasticity of residuals

    subplot(2,1,1)
    plot(yp,r,'.')
    xlabel('Predicted')
    ylabel('Residual')
    subplot(2,1,2)
    probplot(r)
    xlabel('Residual')
    ylabel('Probability')

[Figure: Residual versus Predicted (top); "Probability plot for Normal distribution" of the residuals (bottom).]

The residuals are heteroskedastic and depart from normality.

    for iv=1:4
        subplot(2,2,iv)
        plot(X(:,varselect(iv)),r,'.')
        xlabel(varname(varselect(iv)))
        ylabel('Residual')
    end

[Figure: Residual versus MAXP_WS, USGS_Area_km2, MEANP_WS and Relief.]

    for iv=5:8
        subplot(2,2,iv-4)
        plot(X(:,varselect(iv)),r,'.')
        xlabel(varname(varselect(iv)))
        ylabel('Residual')
    end

[Figure: Residual versus FST32AVE, BDH_AVE, ELEV_STD and AWCH_AVE.]

I do not infer much from these last variable scatter plots, but the modeled versus observed plot suggested transformation. Take logs of streamflow and drainage area. The latter is justified on physical grounds because of the anticipated relationship between flow and area, and is suggested by the clustering of area on the left of the scatter plot, which indicates that it has a skewed distribution like streamflow. Also on physical grounds it may be better to consider Q/Area as a response variable.

    Y=log(watershed(:,36));
    Xorig=X;
    X(:,32)=log(Xorig(:,32));
    varname(32)

    ans =
        'USGS_Area_km2'

Follow the same procedure as before. Exploratory analysis using explore.m.

[Figures: scatter plots of ln(QMean) against each explanatory variable, each annotated with its correlation coefficient.]

Now the strongest correlations are with:

- Basin area
- Wet days
- Relief ratio
- Mean annual precip.

Stepwise regression

    clear beta betaci ans coeftab history stats in out % Clear variables for repeat runs
    stepwise(X,Y)

[Stepwise output: "Coefficients with Error Bars" (only rows X1 to X8 are visible) and "Model History" (RMSE versus step).]

          Coeff.            t-stat     p-val
    X1    -6.83948          -4.9865    0.0000
    X2     0.249155          0.7469    0.4557
    X3     0.000554544       0.2668    0.7898
    X4   112.265             1.3709    0.1714
    X5     4.5865e-005       7.9662    0.0000
    X6     1.04669e-005      0.9309    0.3526
    X7     2.75158e-005      0.5920    0.5543
    X8     2.72719e-005      1.8566    0.0643

The stepwise variables were exported and from the history we find that the variables, in the order that they entered this regression, are:

    dd=size(history.in);
    h2=history.in(:,2:dd(2));
    for i=1:(dd(1)-1)
        varselect(i)=find(h2(i+1,:)-h2(i,:)>0);
    end
    varselect
    varname(varselect)

    varselect =
        12    31    32     1     5    16    13    34    15    20    18
    ans =
        'MAXP_WS'
        'XWD_WS'
        'USGS_Area_km2'
        'AWCH_AVE'
        'ELEV_MAX'
        'MINWD_WS'
        'MAXWD_WS'
        'ReliefRatio'
        'MINP_WS'
        'RH_WS'
        'PRMH_AVE'

These are generally consistent with the scatter plots and correlations. The sequence of root mean square errors is

    history.rmse

    ans =
        1.6443    1.4329    1.2855    0.6499    0.6067    0.5973    0.5783    0.5655    0.5566    0.5470    0.5443    0.5406

The regression parameters are:

    for i=1:length(varselect)
        beta(varselect(i))
    end

    ans = 0.0030
    ans = 0.0202
    ans = 0.7785
    ans = -6.8395
    ans = 4.5865e-005
    ans = -0.3735
    ans = 0.0176
    ans = -0.2800
    ans = 0.0271
    ans = 0.0177
    ans = -0.0257

Verifying using regress

    [b,bint,r,rint,stats]=regress(Y,[ones(length(Y),1),X(:,varselect)]);
    for i=1:(length(varselect)+1)
        b(i)
    end

    ans = -2.7197
    ans = 0.0030
    ans = 0.0202
    ans = 0.7785
    ans = -6.8395
    ans = 4.5865e-005
    ans = -0.3735
    ans = 0.0176
    ans = -0.2800
    ans = 0.0271
    ans = 0.0177
    ans = -0.0257

The regression equation is

    ln(QMEAN) = -2.72 + 0.003 MAXP_WS + 0.02 XWD_WS + 0.779 ln(USGS_Area_km2) - 6.84 AWCH_AVE + 0.0000459 ELEV_MAX - 0.373 MINWD_WS + 0.0176 MAXWD_WS - 0.28 ReliefRatio + 0.027 MINP_WS + 0.0177 RH_WS - 0.0257 PRMH_AVE

Interpreting this equation is challenging due to the different units involved. Most of the terms seem to have the correct physical sense. I do not know why relief ratio should decrease mean annual runoff, but this is one among several things that should be further investigated.
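Part of the interpretation becomes easier after exponentiating the log model. The sketch below only rearranges the fitted equation for ln(QMEAN); the symbols A (for USGS_Area_km2), x_k and b_k (for the remaining variables and their coefficients) are notation introduced here.

```latex
\mathrm{QMEAN} \;=\; e^{-2.72}\; A^{\,0.779}\; \exp\!\Big(\sum_k b_k x_k\Big)

\frac{\mathrm{QMEAN}(2A)}{\mathrm{QMEAN}(A)} \;=\; 2^{0.779} \;\approx\; 1.72,
\qquad
\frac{\mathrm{QMEAN}(x_k + 1)}{\mathrm{QMEAN}(x_k)} \;=\; e^{b_k}
\quad\text{e.g. } e^{0.0177} \approx 1.018 \text{ for RH\_WS}
```

So, holding the other variables fixed, doubling drainage area raises mean flow by about 72 percent rather than doubling it, and each untransformed variable acts as a multiplicative factor of e^{b_k} per unit change.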
Examine residuals

    yp=[ones(length(Y),1),X(:,varselect)]*b;
    plot(Y,yp,'.')
    line([0,9],[0,9])
    xlabel('Observed')
    ylabel('Modeled')

[Figure: Modeled versus Observed for the log-transformed regression.]

The residuals here appear to be much better behaved.

Check independence and heteroskedasticity of residuals

    subplot(2,1,1)
    plot(yp,r,'.')
    xlabel('Predicted')
    ylabel('Residual')
    subplot(2,1,2)
    probplot(r)
    xlabel('Residual')
    ylabel('Probability')

[Figure: Residual versus Predicted (top); "Probability plot for Normal distribution" of the residuals (bottom).]

The top plot indicates that the residuals are not heteroskedastic, but the bottom plot indicates some departures from normality, although not quite as bad as for the untransformed regression.

    for iv=1:4
        subplot(2,2,iv)
        plot(X(:,varselect(iv)),r,'.')
        xlabel(varname(varselect(iv)))
        ylabel('Residual')
    end

[Figure: Residual versus MAXP_WS, XWD_WS, USGS_Area_km2 and AWCH_AVE.]

    for iv=5:8
        subplot(2,2,iv-4)
        plot(X(:,varselect(iv)),r,'.')
        xlabel(varname(varselect(iv)))
        ylabel('Residual')
    end

[Figure: Residual versus ELEV_MAX, MINWD_WS, MAXWD_WS and ReliefRatio.]

    for iv=9:11
        subplot(2,2,iv-8)
        plot(X(:,varselect(iv)),r,'.')
        xlabel(varname(varselect(iv)))
        ylabel('Residual')
    end

[Figure: Residual versus MINP_WS, RH_WS and PRMH_AVE.]

Some of these plots indicate heteroskedasticity. If I were pursuing this further I would try a regression of QMEAN/Area. I would also try log transforms of some of the variables that physically are expected to be more linearly than exponentially related to QMEAN, such as all the precipitation ones. One could also try log transforms of everything. One should also examine leverage and Cook's distance and carefully evaluate any outliers. However, this is enough for a learning experience, and the second model above explains nearly 90% of the variance in ln(QMEAN).
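The "nearly 90%" figure can be recovered from the RMSE history reported for the log-transformed stepwise run. The short calculation below assumes that the first RMSE entry corresponds to the model before any variable has entered; that reading is inferred from there being one more entry than selected variables and is not stated in the solution. Because RMSE carries a degrees-of-freedom correction, the result is closer to an adjusted R2 than to the plain R2.

```matlab
% RMSE sequence copied from the history.rmse output for the ln(QMEAN) regression
rmse = [1.6443 1.4329 1.2855 0.6499 0.6067 0.5973 0.5783 0.5655 0.5566 0.5470 0.5443 0.5406];

% Fraction of variance explained after each step, relative to the first entry
explained = 1 - (rmse ./ rmse(1)).^2;

% One line per step: step number and fraction explained
fprintf('step %2d: %.3f\n', [0:numel(rmse)-1; explained]);
```

The largest single jump comes at the third step, when ln(USGS_Area_km2) enters, and the later steps each add comparatively little.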