Biostatistics 278, discussion 2: Splus code for principal component analysis and multidimensional scaling
Steve Horvath

We describe here how to fit a hyperplane through points $x_i$ in $\mathbb{R}^3$. Thus we want to estimate the center "mu", the orthogonal directional components "Vq", and the scores "lambda" such that

$$\sum_i \| x_i - \mu - V_q \lambda_i \|^2$$

is minimized.

# First we simulate the data
mu <- c(1,1,1)
# the following gives the directions of the principal components.
# note that the columns of Vq are orthonormal.
Vq <- cbind( c(1/sqrt(2),0,1/sqrt(2)), c(1,1,-1)/sqrt(3) )
# the first component has standard deviation 1, the second has sd=3
r1 <- rnorm(10,sd=1)
r2 <- rnorm(10,sd=3)
# in the following each column corresponds to lambda_i
lambda <- rbind(r1,r2)
# now we construct the individual observations
xhelp <- mu+Vq %*% lambda
# observations correspond to the rows of the xx matrix:
xx <- t(xhelp)
# here we fit the principal components model
# first import 2 libraries
library(class)
library(MASS)
pca1 <- princomp( xx, cor=F)
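As a cross-check on the model above, the least-squares fit can be sketched directly with the singular value decomposition. This is a minimal sketch in R syntax, not part of the original Splus session: the seed and the names `xbar`, `xc`, `sv`, `Vhat`, `lambda.hat`, `rss`, `P.true` and `P.hat` are assumptions made for illustration. It relies on the standard result that the sample mean is a minimizing centre and that the leading right singular vectors of the centred data give minimizing directions.

```r
# Minimal sketch: least-squares plane fit via the SVD (R syntax).
set.seed(1)                                  # assumed seed, for reproducibility only
mu <- c(1, 1, 1)
Vq <- cbind(c(1/sqrt(2), 0, 1/sqrt(2)), c(1, 1, -1)/sqrt(3))
lambda <- rbind(rnorm(10, sd = 1), rnorm(10, sd = 3))
xx <- t(mu + Vq %*% lambda)                  # 10 x 3, observations in rows

xbar <- colMeans(xx)                         # one minimizing centre: the sample mean
xc <- sweep(xx, 2, xbar)                     # centred data
sv <- svd(xc)
Vhat <- sv$v[, 1:2]                          # estimated directions (orthonormal columns)
lambda.hat <- xc %*% Vhat                    # estimated scores, one row per observation
rss <- sum((xc - lambda.hat %*% t(Vhat))^2)  # value of the minimized criterion

# Vhat is only determined up to rotation and sign, so compare projection matrices
P.true <- Vq %*% t(Vq)
P.hat <- Vhat %*% t(Vhat)
print(rss)
print(max(abs(P.true - P.hat)))
```

Both printed values should be zero up to rounding error, because the simulated data contain no noise off the plane. Comparing the projection matrices rather than the columns avoids the sign and rotation ambiguity of the estimated directions.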

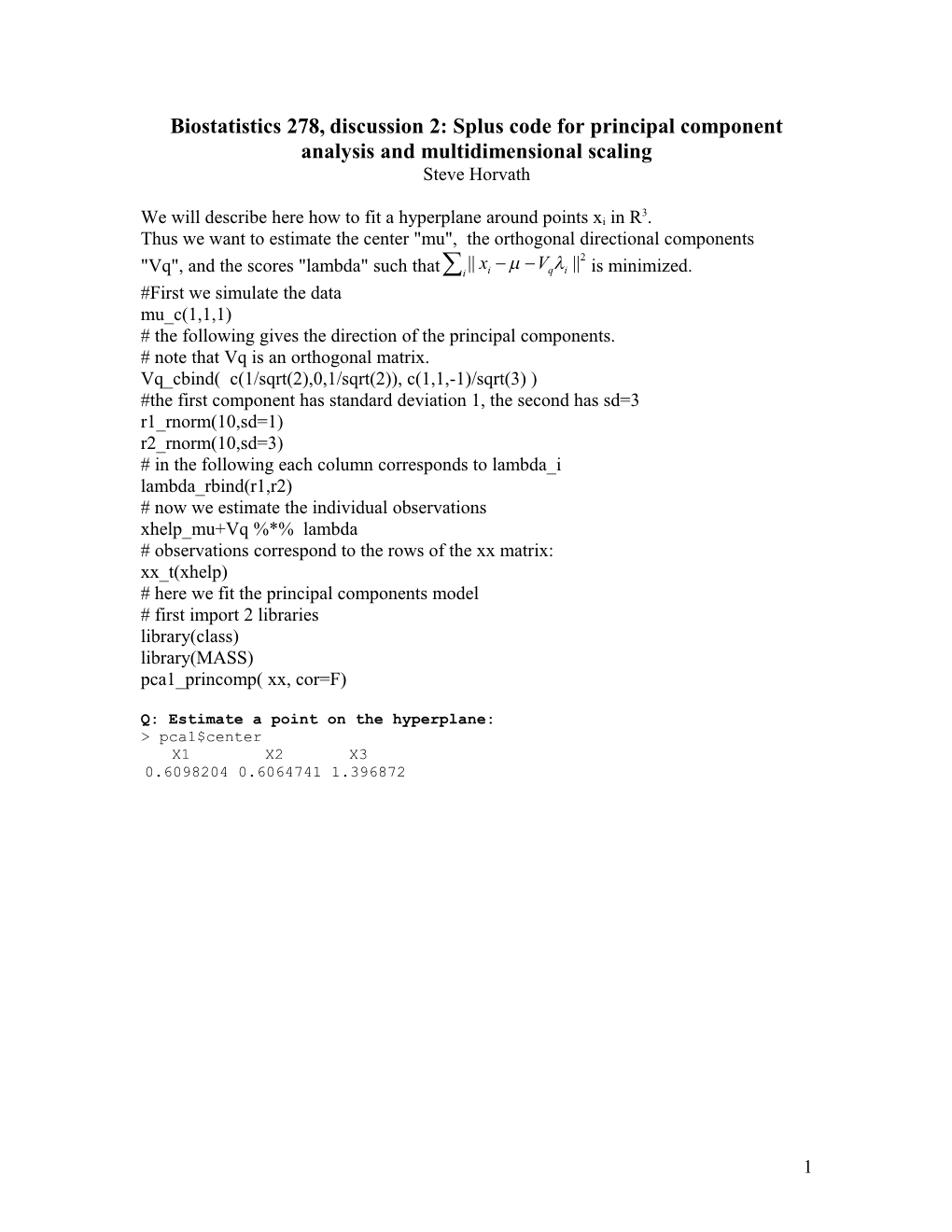

Q: Estimate a point on the hyperplane:
> pca1$center
       X1        X2       X3
0.6098204 0.6064741 1.396872

Q: Let us recover the standard deviations of r1 and r2.
> summary(pca1)
Importance of components:
                         Comp. 1    Comp. 2       Comp. 3
Standard deviation     2.8789458 0.89826425 1.782250e-008
Proportion of Variance 0.9112853 0.08871471 3.492404e-017
Cumulative Proportion  0.9112853 1.00000000 1.000000e+000

# Comment: note that Splus lists the components in the order
# of decreasing estimated standard deviation.
# the first component has sd=2.88 which is close to 3,
# the second sd (0.90) is close to 1, and the third is close to 0.
plot(pca1)

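[Figure: scree plot of pca1 showing the variances of Comp. 1, Comp. 2 and Comp. 3, annotated with the cumulative proportions of variance (0.911 for Comp. 1, then 1).]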

Q: Let us estimate Vq.
# Recall that Vq is given by
> Vq
          [,1]       [,2]
[1,] 0.7071068  0.5773503
[2,] 0.0000000  0.5773503
[3,] 0.7071068 -0.5773503
> loadings(pca1)
   Comp. 1 Comp. 2 Comp. 3
X1  -0.564  -0.718   0.408
X2  -0.577          -0.816
X3   0.591  -0.696  -0.408
# (loadings close to 0 are not printed)
# Note that up to a minus sign, the first 2 components correspond to
# Vq[,2] and Vq[,1]
# the components are listed in the order of importance.
# the third component has importance very close to 0.

Q: Recover the original score values (aka principal components) lambda.
score.original <- t(lambda)
# The column corresponding to the first original lambda row
# corresponds to minus the second principal component:
> rbind(score.original[,1],-pca1$scores[,2])
         [,1]      [,2]      [,3]      [,4]       [,5]       [,6]      [,7]      [,8]
[1,] 1.136336 0.4521117 0.4871856 0.3036164 0.07695348 -0.7557866 -1.784773 0.9218859
[2,] 1.168094 0.3529931 0.3939785 0.3908880 0.10363944 -0.7632875 -1.796500 0.8935233
          [,9]     [,10]
[1,] 0.4253107 -1.215515
[2,] 0.4578873 -1.201216

# the estimate of the second original score (row of lambda)
# is off by about -.67. This is due to the inaccurate center estimate
> pca1$scores[,1]+score.original[,2]
 [1] -0.6597695 -0.6740067 -0.6732845 -0.6750570 -0.6799370 -0.6960766 -0.7156588
 [8] -0.6644131 -0.6732652 -0.7046005
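The roughly constant offset can be traced to centring: the fitted centre is the sample mean of the data, so the scores have mean zero, whereas a simulated row of lambda has a nonzero sample mean in a sample of 10. The following is a minimal sketch in R syntax; the seed and the names `xbar`, `proj2`, `offset` and `centre.shift` are assumptions for illustration. It projects on the true direction Vq[,2] rather than on the estimated loading, so the offset is exactly constant here, while in the session above it is only approximately constant.

```r
# Minimal sketch: the score offset equals the sample mean of the simulated scores.
set.seed(2)                                # assumed seed
mu <- c(1, 1, 1)
Vq <- cbind(c(1/sqrt(2), 0, 1/sqrt(2)), c(1, 1, -1)/sqrt(3))
r1 <- rnorm(10, sd = 1)
r2 <- rnorm(10, sd = 3)
xx <- t(mu + Vq %*% rbind(r1, r2))         # observations in rows

xbar <- colMeans(xx)                       # the centre that a PCA fit reports
# centred data projected on the true second direction
proj2 <- drop(sweep(xx, 2, xbar) %*% Vq[, 2])
offset <- r2 - proj2                       # same value for every observation
# shift of the centre away from mu, expressed in the coordinates of Vq
centre.shift <- drop(t(Vq) %*% (xbar - mu))

print(range(offset))
print(mean(r2))
print(centre.shift)
```

Since xbar = mu + Vq %*% c(mean(r1), mean(r2)), the centre is a valid point on the hyperplane, just not mu itself, and the offset in the scores is mean(r2).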

Comment: pca1$scores is identical to predict(pca1)

Q: Visualize lambda.
# Here we will use eqscplot, which plots scatterplots
# with geometrically equal scales
par(mfrow=c(2,1))
eqscplot(pca1$scores[,1:2],xlab="first principal component", ylab="second principal component")
eqscplot(-score.original[,2:1],xlab="minus second original lambda component", ylab="minus first original component")

[Figure: two scatterplots with equal scales. Top panel: second principal component against first principal component. Bottom panel: minus first original component against minus second original lambda component.]

Example for genes

# import the data
# these data are slightly different from the data set which you find on the webpage
# specify in the import options that the first column contains (gene) names
# and the first row contains sample names
dat1 <- t(MicroarrayExample)
# only look at the 30 most varying genes
pca2 <- princomp(dat1[,1:30])
par(mfrow=c(1,1))
plot(pca2)

[Figure: scree plot of pca2 showing the variances of Comp. 1 to Comp. 10, annotated with the cumulative proportions of variance 0.268, 0.443, 0.556, 0.654, 0.734, 0.782, 0.823, 0.862, 0.892 and 0.913.]

Message: among the 30 most varying genes, one needs about 10 principal components to explain 90 percent of the variance.
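The number of components needed for a given share of the variance can also be read off numerically from the component standard deviations. This is a minimal sketch in R syntax on simulated data; the matrix `toy` is a hypothetical stand-in for dat1[,1:30] (the microarray data are not reproduced here), and the names `var.comp`, `cum.prop` and `n.needed` are assumptions.

```r
# Minimal sketch: cumulative proportion of variance and the 90 percent cutoff.
set.seed(3)                                   # assumed seed
# hypothetical data: 40 samples, 8 variables with decreasing spread
toy <- matrix(rnorm(40 * 8), 40, 8) %*% diag(8:1)
pca.toy <- princomp(toy)

var.comp <- pca.toy$sdev^2                    # variance of each component
cum.prop <- cumsum(var.comp) / sum(var.comp)  # the values annotated on the scree plot
n.needed <- which(cum.prop >= 0.90)[1]        # first component reaching 90 percent

print(round(cum.prop, 3))
print(n.needed)
```

Applied to pca2, the same three lines would reproduce the cumulative proportions shown in the scree plot.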

par(mfrow=c(1,1))
eqscplot(pca2$scores[,1:2],type="n",xlab="first principal component", ylab="second principal component")
text(pca2$scores[,1:2],labels=dimnames(dat1)[[1]])

[Figure: the samples (labelled A1 to A5, D1 to D19, N1 to N7 and O1 to O3) plotted against the first principal component (x-axis) and the second principal component (y-axis).]

Message: although the first 2 principal components explain only 44% of the variation of the 30 most varying genes, they reveal some structure: samples with the same letter (N, A, D or O) tend to lie close together.

Q: Do the first 2 principal components have a nice interpretation in terms of the original 30 most varying genes?
> signif(loadings(pca2)[,1:2], 2)
                          Comp. 1 Comp. 2
hi31687.f.at              -0.1800  0.3500
32052.at                  -0.2100  0.3500
1346.at                    0.0170 -0.0034
AFFX.CreX.3.at            -0.2900  0.0640
32243.g.at                -0.2000  0.1300
31525.s.at                -0.1000  0.2600
870.f.at                  -0.0280 -0.0038
37149.s.at                 0.1500  0.0750
36780.at                  -0.0640  0.3900
40484.g.at                 0.0260  0.1600
41745.at                   0.1300  0.0430
40185.at                  -0.2100  0.2900
AFFX.HUMGAPDH/M33197.M.at  0.2500  0.1900
AFFX.HSAC07/X00351.M.at    0.3200  0.2500
AFFX.HUMGAPDH/M33197.5.at  0.2300  0.1800
AFFX.CreX.5.at            -0.2000  0.0420
35083.at                  -0.0720  0.2000
216.at                    -0.4200  0.0440
31957.r.at                 0.0053  0.1100
36627.at                  -0.0930  0.1500
35905.s.at                 0.1000  0.0750
35185.at                   0.0920  0.0310
AFFX.BioDn.3.at           -0.1400  0.0490
AFFX.HSAC07/X00351.5.at    0.3100  0.1800
31557.at                   0.1600  0.1600
37864.s.at                 0.0300  0.0250
201.s.at                   0.2500  0.2400
608.at                     0.0370  0.1800
1693.s.at                  0.1400 -0.0084
37043.at                   0.0640  0.1400

Message: I don't see a nice interpretation. But if we had functional info on the genes an interpretation may be possible.

Multi-dimensional scaling (distance methods)

References:
Venables and Ripley: Modern Applied Statistics with S-PLUS. Springer.
Hastie, Tibshirani, Friedman: The Elements of Statistical Learning.

Distance methods are based on representing the cases in a low-dimensional Euclidean space so that their proximity reflects the similarity of their variables. The most obvious distance method is multi-dimensional scaling, which seeks a configuration in R^d such that the distances between points best match (in a sense to be defined) those of the distance matrix. Only the classical or metric form of MDS, also known as principal coordinate analysis, is implemented in Splus.

cmd1 <- cmdscale(dist(dat1[,1:30]),k=2,eig=T)
par(mfrow=c(1,1))
# flip the sign of the configuration (it is only determined up to reflection)
cmd1$points <- -cmd1$points
eqscplot(cmd1$points,type="n")
text(cmd1$points,labels=dimnames(dat1)[[1]])

[Figure: plot of cmd1$points (classical MDS configuration), with the samples labelled by name.]

Comment: using classical MDS with a Euclidean distance is precisely equivalent to plotting the first k principal components.
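The equivalence can be checked numerically on a small example. This is a minimal sketch in R syntax on simulated data; the matrix `X`, the seed and the names `mds`, `pcs` and `max.diff` are assumptions for illustration. Because each axis is only determined up to sign, the comparison is made on absolute values and on the interpoint distances.

```r
# Minimal sketch: classical MDS on Euclidean distances versus principal component scores.
set.seed(4)                                  # assumed seed
X <- matrix(rnorm(12 * 5), 12, 5)            # hypothetical data: 12 cases, 5 variables

mds <- cmdscale(dist(X), k = 2)              # classical MDS configuration
pcs <- princomp(X)$scores[, 1:2]             # first two principal component scores

# columns agree up to a sign flip per axis
max.diff <- max(abs(abs(mds) - abs(pcs)))
print(max.diff)
# the interpoint distances of the two configurations agree as well
print(max(abs(dist(mds) - dist(pcs))))
```

Both printed values should be zero up to rounding error.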

Question: How good is the fit?
Answer: Calculate a measure of stress:
dist.original <- dist(dat1[,1:30])
dist.MDS <- dist(cmd1$points)
> sum(( dist.original-dist.MDS)^2/sum(dist.original^2))
[1] 0.1735688

Message: The fit is not great. The closer to 0 the better.
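To see how this stress measure behaves, it can be wrapped in a small function and evaluated for increasing numbers of dimensions. This is a minimal sketch in R syntax on simulated data; the function name `stress`, the matrix `X` and the seed are assumptions for illustration, and the formula is the one used in the answer above.

```r
# Minimal sketch: stress of a classical MDS fit as a function of the dimension k.
stress <- function(d.orig, d.fit) {
  d.orig <- as.vector(d.orig)
  d.fit <- as.vector(d.fit)
  sum((d.orig - d.fit)^2) / sum(d.orig^2)
}

set.seed(5)                                  # assumed seed
X <- matrix(rnorm(15 * 6), 15, 6)            # hypothetical data: 15 cases, 6 variables
d.orig <- dist(X)

# stress for k = 1, ..., 6 dimensions
s <- sapply(1:6, function(k) stress(d.orig, dist(cmdscale(d.orig, k = k))))
print(round(s, 4))
```

The stress cannot increase as dimensions are added, and it reaches zero (up to rounding) once k equals the dimension of the data, here 6.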

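A non-metric form of MDS is Sammon's (1969) non-linear mapping. Here the following stress function is minimized:

$$\sum_{i \neq i'} \frac{(d_{ii'} - \| z_i - z_{i'} \|)^2}{d_{ii'}}$$

where $d_{ii'}$ is the original distance between cases $i$ and $i'$ and $z_i$ is the position of case $i$ in the low-dimensional configuration.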

An iterative algorithm is used, which will usually converge in around 50 iterations. As this is necessarily an O(n^2) calculation, it is slow for large datasets. Further, since the configuration is only determined up to rotations and reflections (by convention the centroid is at the origin), the result can vary considerably from machine to machine. In this release the algorithm has been modified by adding a step-length search (magic) to ensure that it always goes downhill.
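The downhill behaviour can be illustrated on a small simulated example. This is a minimal sketch in R syntax, assuming the MASS library is installed; the data `X`, the seed and the helper `sammon.stress` are hypothetical names introduced here. The helper divides the stress by the sum of the distances, as the MASS documentation of sammon describes; that constant is an addition relative to the formula in this handout and does not change the minimizing configuration.

```r
# Minimal sketch: Sammon stress before and after the iterations.
library(MASS)

# hypothetical helper: Sammon stress, normalised by the sum of the distances
sammon.stress <- function(d, z) {
  d <- as.vector(d)
  dz <- as.vector(dist(z))
  sum((d - dz)^2 / d) / sum(d)
}

set.seed(6)                                  # assumed seed
X <- matrix(rnorm(20 * 5), 20, 5)            # hypothetical data: 20 cases, 5 variables
d <- dist(X)

init <- cmdscale(d, k = 2)                   # classical MDS as starting configuration
fit <- sammon(d, y = init, k = 2, trace = FALSE)

s.init <- sammon.stress(d, init)
s.fit <- sammon.stress(d, fit$points)
print(c(initial = s.init, fitted = s.fit, reported = fit$stress))
```

Starting from the classical MDS configuration mirrors the default of sammon, so the comparison shows how much the iterations improve on classical MDS under this criterion.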

> sammon1 <- sammon(dist(dat1[,1:30] ))
Initial stress : 0.18853
stress after 10 iters: 0.08861, magic = 0.018
stress after 20 iters: 0.07804, magic = 0.500
stress after 30 iters: 0.07619, magic = 0.500
stress after 40 iters: 0.07549, magic = 0.500
stress after 50 iters: 0.07542, magic = 0.500
par(mfrow=c(1,1))
sammon1$points <- -sammon1$points
eqscplot(sammon1$points,type="n")
text(sammon1$points,labels=dimnames(dat1)[[1]])

[Figure: plot of sammon1$points (Sammon mapping configuration), with the samples labelled by name.]

THE END
