Lab 4: Text Classification

Evgueni N. Smirnov

August 27, 2008

1. Introduction

Text mining is concerned with extracting relevant information from natural-language text and searching for interesting relationships between the extracted entities. Text classification is one of the basic techniques in text mining. It is one of the more difficult data-mining problems, since it deals with very high-dimensional data sets with arbitrary patterns of missing data. In this lab session you will study three text classification problems and try to build classifiers for each of them using Weka.

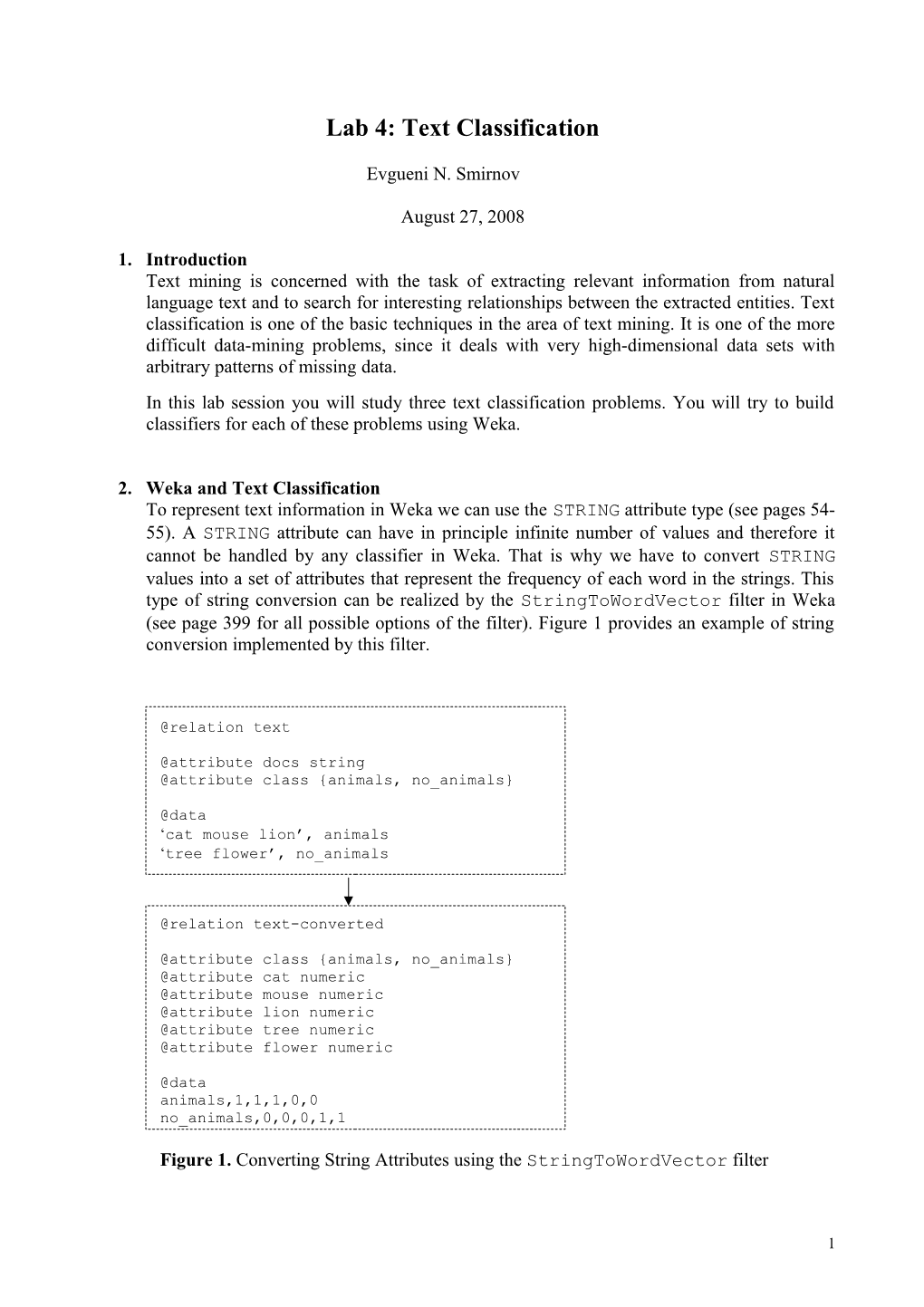

2. Weka and Text Classification

To represent text information in Weka we can use the STRING attribute type (see pages 54-55). A STRING attribute can in principle take an infinite number of values, and therefore it cannot be handled by any classifier in Weka. That is why we have to convert STRING values into a set of attributes that represent the frequency of each word in the strings. This conversion can be performed by the StringToWordVector filter in Weka (see page 399 for all options of the filter). Figure 1 shows an example of a string conversion performed by this filter.

@relation text

@attribute docs string
@attribute class {animals, no_animals}

@data
'cat mouse lion', animals
'tree flower', no_animals

@relation text-converted

@attribute class {animals, no_animals}
@attribute cat numeric
@attribute mouse numeric
@attribute lion numeric
@attribute tree numeric
@attribute flower numeric

@data
animals,1,1,1,0,0
no_animals,0,0,0,1,1

Figure 1. Converting String Attributes using the StringToWordVector filter
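The conversion in Figure 1 can be imitated outside Weka in a few lines of Python. This is only a sketch of the idea, not the StringToWordVector implementation: the function name `to_word_vectors` is hypothetical, tokens are split on whitespace only, and attributes are ordered by first appearance, which happens to match the figure. As in the figure, the class label is placed first in each row.

```python
def to_word_vectors(docs):
    """Turn (text, label) pairs into word-count rows.

    Returns (vocabulary, rows); each row is [label, count_1, ..., count_n].
    """
    # Collect every distinct word in order of first appearance.
    vocabulary = []
    for text, _ in docs:
        for word in text.split():
            if word not in vocabulary:
                vocabulary.append(word)

    # Count how often each vocabulary word occurs in each document.
    rows = []
    for text, label in docs:
        words = text.split()
        rows.append([label] + [words.count(w) for w in vocabulary])
    return vocabulary, rows


docs = [("cat mouse lion", "animals"), ("tree flower", "no_animals")]
vocabulary, rows = to_word_vectors(docs)
print(vocabulary)
for row in rows:
    print(row)
```

Every word in the toy data occurs at most once per document, so the counts are all 0 or 1, as in Figure 1.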

Please note that after the conversion the class attribute always becomes the first attribute (see Figure 1). This may cause problems, since by default Weka treats the last attribute as the class attribute. To overcome this obstacle, go to the “Preprocess” tab and click on the “Edit” button. The button opens a database view of the data. Right-click on the class attribute and select the option “Attribute as Class” from the menu that appears.

Once you have converted your text data into the word-frequency attribute representation, you can train and test any classifier in the standard Weka way. In this lab we will use four classifiers: the Nearest Neighbor Classifier (IBk), the Naïve Bayes Classifier (NB), the J48 Decision Tree Learner, and the JRip Classifier.

3. The Data-Mining Text Problem

3.1 Description

The data-mining text problem is a relatively simple problem. It is defined as follows:

Given:

A collection of 189 sentences about data mining, taken from Wikipedia, in English, French, Spanish, and German.

Find: Classifiers that recognize the language of a given text.

The data for the task are given in the file dataMining.arff, which can be downloaded from the course website.

3.2 Task

Your task is to train the IBk, NB, J48, and JRip classifiers on dataMining.arff and to analyze these classifiers and the data. To accomplish this task, please follow the main scenario below:

Main Scenario.

1. Study the data file dataMining.arff.
2. Load the data file dataMining.arff in Weka.
3. Study the StringToWordVector filter in Weka.
4. Convert the data using the StringToWordVector filter.
5. Set the class attribute again, as described in Section 2.
6. Train the IBk, NB, J48, and JRip classifiers in the standard Weka way.
7. Test the IBk, NB, J48, and JRip classifiers using 10-fold cross-validation.
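The testing step of the scenario can be pictured with the sketch below, which runs k-fold cross-validation and accumulates a confusion matrix. It is an illustration under simplifying assumptions, not Weka's procedure: the folds are built by simple striding with no shuffling or stratification, and all function names are hypothetical. The majority-class learner is only a stand-in so that the example runs without a real classifier.

```python
from collections import Counter


def k_fold_indices(n, k):
    """Split indices 0..n-1 into k folds whose sizes differ by at most one."""
    return [list(range(i, n, k)) for i in range(k)]


def cross_validate(rows, labels, train, predict, k=10):
    """Return a confusion matrix as Counter[(actual, predicted)]."""
    confusion = Counter()
    for test_idx in k_fold_indices(len(rows), k):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(rows)) if i not in held_out]
        # Train on k-1 folds, then test on the held-out fold.
        model = train([rows[i] for i in train_idx],
                      [labels[i] for i in train_idx])
        for i in test_idx:
            confusion[(labels[i], predict(model, rows[i]))] += 1
    return confusion


def accuracy(confusion):
    """Fraction of instances on the diagonal of the confusion matrix."""
    correct = sum(c for (actual, predicted), c in confusion.items()
                  if actual == predicted)
    return correct / sum(confusion.values())


# Stand-in learner (an assumption for the demo): always predicts the
# most frequent label seen during training.
def train_majority(rows, labels):
    return Counter(labels).most_common(1)[0][0]


def predict_majority(model, row):
    return model


labels = ["en"] * 15 + ["fr"] * 5
rows = [[0]] * len(labels)  # feature values are irrelevant for this learner
confusion = cross_validate(rows, labels, train_majority, predict_majority, k=10)
print(dict(confusion), accuracy(confusion))
```

Each instance is tested exactly once, so the entries of the confusion matrix sum to the size of the data set.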

Once you have followed the main scenario, you can write down the statistics and confusion matrices of the classifiers as well as the text representations of the J48 and JRip classifiers. You can use this information to answer two types of questions: language-related questions and classifier-related questions.

The language-related questions:

Are the languages presented in the data related? How can you show it?

How do the explicit classifiers (like the J48 and JRip classifiers) recognize the languages? What would happen if we could use stop lists for all four languages when preparing the data?

The classifier-related questions:

The accuracy of the NB, J48, and JRip classifiers is relatively good. Why is that? In this context, please note that the IBk classifier has very low accuracy for low values of the parameter k. When you increase the value of k to, say, 59, the accuracy becomes 90.48%. How can you explain this phenomenon?

Another issue is the computational performance of the classifiers. Because the data are high-dimensional (1902 numeric attributes), training some of the classifiers (like JRip) takes time. To reduce this time you can reduce the number of attributes by selecting the most relevant ones. The selection can be performed using one of the attribute-selection methods from the “Select Attributes” tab of the Weka Explorer. Once the attribute indices have been identified, you can select the attributes using the attribute filter Remove. Then, please repeat the experiments and record the statistics and confusion matrices of the classifiers again. As you will see after the experiments, in addition to the time reduction, the IBk classifier suddenly becomes quite accurate for low values of k. How can you explain this result?
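To make the idea of attribute selection concrete, the sketch below ranks word attributes by information gain and returns the indices of the best ones. Information gain is used here as one possible scoring criterion; the handout does not prescribe a particular method, and the function names and toy data are hypothetical. Each attribute is reduced to word presence (count greater than zero) before scoring, which is a simplification.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total)
                for c in Counter(labels).values())


def information_gain(column, labels):
    """Entropy reduction obtained by splitting on word presence (count > 0)."""
    present = [y for x, y in zip(column, labels) if x > 0]
    absent = [y for x, y in zip(column, labels) if x == 0]
    remainder = sum(len(part) / len(labels) * entropy(part)
                    for part in (present, absent) if part)
    return entropy(labels) - remainder


def top_attributes(rows, labels, n):
    """0-based indices of the n columns with the highest information gain."""
    gains = [information_gain([row[j] for row in rows], labels)
             for j in range(len(rows[0]))]
    return sorted(range(len(gains)), key=lambda j: gains[j], reverse=True)[:n]


# Toy data: the columns are counts of the words "the", "le", "and".
rows = [[1, 0, 1], [2, 0, 0], [0, 1, 1], [0, 3, 0]]
labels = ["en", "en", "fr", "fr"]
keep = top_attributes(rows, labels, 2)
print(keep)
```

In the toy data the first two words separate the two classes perfectly, while the third occurs equally often in both and therefore carries no information about the class.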

4. The Music-Jokes Text Problem

4.1 Description

The music-jokes text problem is somewhat harder. It is defined as follows:

Given:

A collection of 409 music jokes in English from http://www.mit.edu/~jcb/jokes/. The jokes are labeled as funny or not funny by Dr. Jeroen Donkers.

Find: Classifiers that recognize whether a joke is funny or not funny.

The data for the task are given in the file musicJokes.arff, which can be downloaded from the course website.

4.2 Task

Your task is to train the IBk, NB, J48, and JRip classifiers on musicJokes.arff and to analyze these classifiers and the data. To accomplish this task, please follow the scenario from Section 3 and set the option useStoplist of the StringToWordVector filter to true. Once you have finished training, please write down the statistics and confusion matrices of the classifiers.
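The effect of a stop list can be illustrated with a short sketch: very common words are dropped before the word vector is built, so they never become attributes. The stop list below is a tiny illustrative set chosen for this example, not the list that Weka uses, and the function name is hypothetical.

```python
# Tiny illustrative stop list; an assumption, not the list Weka uses.
STOP_WORDS = {"a", "an", "and", "the", "of", "to", "is", "in"}


def remove_stop_words(text, stop_words=STOP_WORDS):
    """Lower-case the text and drop every token found in the stop list."""
    return [w for w in text.lower().split() if w not in stop_words]


tokens = remove_stop_words("The drummer is in the back of the band")
print(tokens)
```

Only the content-bearing words remain as candidates for attributes.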

Using the text representations of the J48 and JRip classifiers, can you hypothesize what makes a joke funny?

Since none of the classifiers has an accuracy greater than 65%, please try to improve the classifiers by adjusting the options of the StringToWordVector filter and of the classifiers used. In addition, you can select the most promising attributes using one of the attribute-selection methods from the “Select Attributes” tab, or you can use the meta-classifier CostSensitiveClassifier to adjust the accuracy by means of misclassification costs.
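One common way to use misclassification costs is to predict the class with the lowest expected cost rather than the most probable class. The sketch below shows that decision rule only; it is not a description of how CostSensitiveClassifier works internally. The function name, the cost values, and the class probabilities are all hypothetical.

```python
def min_expected_cost_class(probabilities, cost):
    """Pick the class with the lowest expected misclassification cost.

    probabilities: {actual_class: P(actual_class | joke)}
    cost: {(actual_class, predicted_class): cost of that decision}
    """
    def expected_cost(predicted):
        return sum(p * cost[(actual, predicted)]
                   for actual, p in probabilities.items())

    return min(probabilities, key=expected_cost)


# Hypothetical costs: missing a funny joke is three times as bad
# as calling an unfunny joke funny.
cost = {
    ("funny", "funny"): 0, ("funny", "not_funny"): 3,
    ("not_funny", "funny"): 1, ("not_funny", "not_funny"): 0,
}
probabilities = {"funny": 0.4, "not_funny": 0.6}
decision = min_expected_cost_class(probabilities, cost)
print(decision)
```

With these numbers the less probable class is chosen, because predicting "not_funny" has the higher expected cost (0.4 × 3 = 1.2 against 0.6 × 1 = 0.6).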

5. The Problem of American and Chinese Styles of English Sentence Construction

5.1 Description

The problem of American and Chinese styles of English sentence construction is the most difficult problem. It is defined as follows:

Given:

A collection of 132 pairs of sentences written in English by Americans and Chinese living in the US (see http://www.englishdaily626.com/c-mistakes.php for more info).

Find: Classifiers that recognize whether a sentence has been written by an American or a Chinese.

The data for the task are given in the file americanChineseStyles.arff, which can be downloaded from the course website.

5.2 Task

Your task is to train the IBk, NB, J48, and JRip classifiers on americanChineseStyles.arff and to analyze these classifiers and the data. To accomplish this task, please follow the scenario from Section 3. The accuracy of the classifiers you design will vary between 7.95% and 50%. In this context, the main question is: can you employ a classifier with low accuracy to design an acceptable classifier?

Note: If Weka crashes during the experiments because it runs out of memory, please set the option maxheap in the file C:\Program Files\Weka-3-4\RunWeka.ini to 512m or 1024m.
