Computational Methods for Data Analysis – 2014/15

Lab 3: Linear Regression and Logistic Regression for Data Analysis

In the cognitive sciences, linear and logistic regression are used extensively for data analysis. In this lab we explore this use. Along the way, we’ll also look some more at the way statistical tests are done in R. The lab closely follows the presentation in chapters 4.3, 6.1, 6.2 and 6.3 of Baayen’s book.

Comparing paired vectors

(Baayen ch. 4.3, p. 82-84)

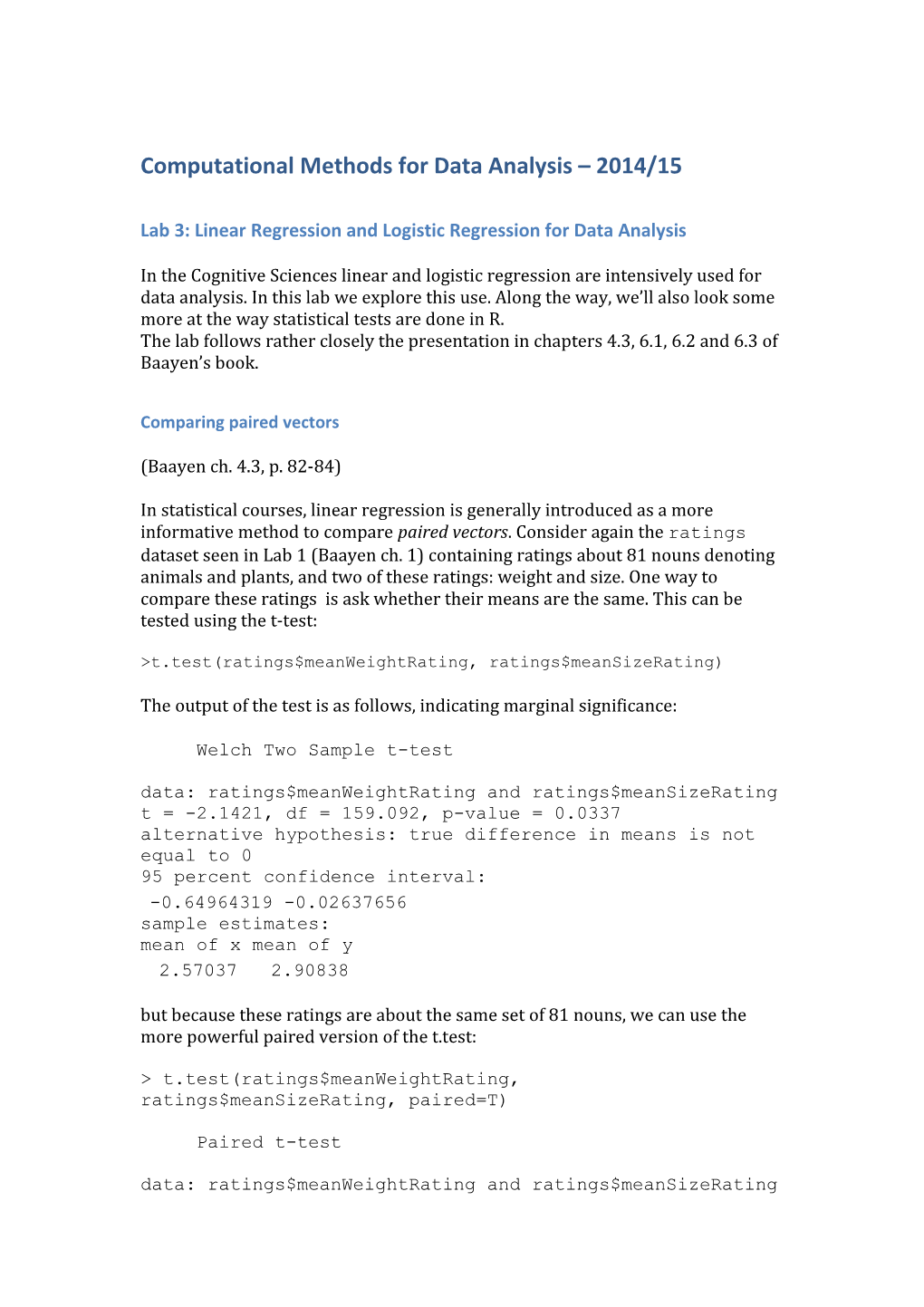

In statistics courses, linear regression is generally introduced as a more informative method for comparing paired vectors. Consider again the ratings dataset seen in Lab 1 (Baayen ch. 1), which contains ratings for 81 nouns denoting animals and plants, and two of these ratings: weight and size. One way to compare these ratings is to ask whether their means are the same. This can be tested using the t-test:

> t.test(ratings$meanWeightRating, ratings$meanSizeRating)

The output of the test is as follows, indicating marginal significance:

Welch Two Sample t-test
data: ratings$meanWeightRating and ratings$meanSizeRating
t = -2.1421, df = 159.092, p-value = 0.0337
alternative hypothesis: true difference in means is not equal to 0
95 percent confidence interval:
-0.64964319 -0.02637656
sample estimates:
mean of x mean of y
2.57037 2.90838

But because these ratings are about the same set of 81 nouns, we can use the more powerful paired version of the t-test:

> t.test(ratings$meanWeightRating, ratings$meanSizeRating, paired=T)

Paired t-test
data: ratings$meanWeightRating and ratings$meanSizeRating
t = -36.0408, df = 80, p-value < 2.2e-16
alternative hypothesis: true difference in means is not equal to 0
95 percent confidence interval:
-0.3566737 -0.3193460
sample estimates:
mean of the differences
-0.3380099

This gives us much stronger evidence that the two means are in fact different.
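The gain in power comes from the fact that the paired test works on the item-by-item differences. The following is a minimal sketch of this, using simulated ratings rather than the ratings data; the vectors item, weight and size and all numeric settings are made up for illustration.

```r
# Simulated paired ratings for 81 hypothetical items (not the real data).
set.seed(1)
item   <- rnorm(81, mean = 2.7, sd = 1)       # item-specific level shared by both ratings
weight <- item + rnorm(81, sd = 0.1)
size   <- item + 0.3 + rnorm(81, sd = 0.1)

unpaired <- t.test(weight, size)                 # Welch test, ignores the pairing
paired   <- t.test(weight, size, paired = TRUE)  # uses the pairing
on_diffs <- t.test(weight - size, mu = 0)        # one-sample test on the differences

# The paired test and the one-sample test on the differences coincide.
print(c(paired = unname(paired$statistic), on_diffs = unname(on_diffs$statistic)))
print(c(unpaired_p = unpaired$p.value, paired_p = paired$p.value))
```

Because the large item-to-item variation cancels out in the differences, the paired test can detect a small but consistent shift that the unpaired test may miss.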

Much of data analysis is about avoiding simple-minded applications of such tests. For instance, an issue that is sometimes forgotten is that these tests are based on the assumption that weight ratings and size ratings are normally distributed (for the paired test, that their differences are). To be sure of our results we had better check whether this assumption is justified. As a first step, this can be done graphically, with a boxplot:

> par(mfrow=c(1,2))
> boxplot(ratings$meanWeightRating, ratings$meanSizeRating, names=c("weight", "size"), ylab = "mean rating")

The boxplot indicates a certain degree of asymmetry, but this is pretty mild, and the vector of differences doesn’t show much asymmetry either:

> boxplot(ratings$meanWeightRating - ratings$meanSizeRating, names="difference", ylab = "mean rating difference")

A more formal check is given by normality tests such as the Shapiro-Wilk test, whose null hypothesis is that the data are normally distributed:

> shapiro.test(ratings$meanWeightRating - ratings$meanSizeRating)

Shapiro-Wilk normality test
data: ratings$meanWeightRating - ratings$meanSizeRating
W = 0.9644, p-value = 0.02374

Since the p-value is below 0.05, the test gives some evidence that the vector of differences is not normally distributed. In such cases of uncertainty we can use the Wilcoxon signed rank test with paired=T instead of the t-test:

> wilcox.test(ratings$meanWeightRating, ratings$meanSizeRating, paired = T)
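The decision between the two paired tests can be sketched as a small function. This is only an illustration: paired_test is a hypothetical helper (it is not part of R, languageR or Baayen's book), the 0.05 threshold is an assumption, and the data are simulated.

```r
# Hypothetical helper: choose a paired test based on a Shapiro-Wilk
# check of the differences. alpha = 0.05 is an assumed threshold.
paired_test <- function(x, y, alpha = 0.05) {
  d <- x - y
  normal_ok <- shapiro.test(d)$p.value >= alpha  # TRUE: no evidence against normality
  if (normal_ok) {
    t.test(x, y, paired = TRUE)
  } else {
    wilcox.test(x, y, paired = TRUE)
  }
}

set.seed(2)
x      <- rnorm(81, mean = 3)
skewed <- x - rexp(81, rate = 3)   # differences are exponential, i.e. clearly skewed
res    <- paired_test(x, skewed)
print(res$method)
print(res$p.value)
```

In practice it is usually wise to look at the boxplot as well, since a single normality test on a small sample can be misleading in either direction.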

Linear regression for paired vectors analysis

As some of you may already know, linear regression is a way to carry out an even more systematic comparison, one that looks at the entire distributions instead of just the means. A scatterplot of the two ratings, produced as follows:

> plot(ratings$meanWeightRating, ratings$meanSizeRating, xlab = "mean weight rating", ylab = "mean size rating")

indicates that the data points pattern along a straight line. This can be highlighted by plotting a line through the scatterplot using abline(). abline() takes two parameters, an intercept and a slope, and adds the line specified by the parameters to a plot:

> plot(c(-4,4), c(-4,4))
> abline(2,-2)

The slope and intercept of the line through the scatterplot of weight ratings and size ratings can be computed using lm():

> ratings.lm = lm(meanSizeRating ~ meanWeightRating, data = ratings)

We saw in a previous lab that ratings.lm is a linear model. The intercept and slope specified by this model can be seen by typing ratings.lm at the prompt:

> ratings.lm

Call:
lm(formula = meanSizeRating ~ meanWeightRating, data = ratings)

Coefficients:
(Intercept) meanWeightRating
0.5270 0.9265

R makes it very easy to add the regression line to a scatterplot: we just need to feed the linear model to abline():

> abline(ratings.lm)
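With a single predictor, the intercept and slope that lm() reports are the ordinary least-squares estimates, which can also be computed by hand. A minimal sketch on simulated data (the vectors x and y and the coefficients used to generate them are assumptions for illustration, not the ratings data):

```r
# Simulated data; x, y and the generating coefficients are illustrative.
set.seed(3)
x <- runif(81, 1, 5)
y <- 0.5 + 0.9 * x + rnorm(81, sd = 0.2)

fit <- lm(y ~ x)

# Least-squares estimates for a single predictor, computed by hand.
slope     <- cov(x, y) / var(x)
intercept <- mean(y) - slope * mean(x)

print(coef(fit))
print(c(intercept = intercept, slope = slope))
```

The two printed pairs of values agree, which is why passing the model to abline() draws the same line as passing the intercept and slope explicitly.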

The degree to which the data points cluster around the regression line is called correlation, and is quantified by a correlation coefficient. The correlation coefficient of a sample, indicated with r, takes values between +1 (perfect positive correlation) and -1 (perfect negative correlation). r can be seen as a measure of how useful it is to fit a regression line through the data. The function mvrnormplot.fnc can be used to visualize the fit between a regression line and data. It takes as input a value of r. Try increasing values of r:

> mvrnormplot.fnc(r = -0.067)
> mvrnormplot.fnc(r = 0.2)
> mvrnormplot.fnc(r = 0.61)
> mvrnormplot.fnc(r = 0.888)

The measure used to indicate how much of the scatter is accounted for by the regression line is r^2, typically written R^2. Going back to the weight/size example, we can use summary() to get the information about R^2:

> summary(ratings.lm)

Let’s look in detail at the output of this command. summary() shows us:

- the residuals (see lectures);
- a table with the coefficients of the model. This specifies, predictor by predictor, the extent to which that predictor gives us information about the dependent variable. If the coefficient is 0, there is no relation at all. The p-value tells us whether the coefficient is significantly different from 0;
- the residual standard error.

R^2 quantifies on a scale from 0 to 1 the proportion of the variance that the model explains. With a single predictor, we get r by taking the square root of R^2 and giving it the sign of the slope.
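The relation between R^2 and r can be checked directly. The sketch below uses simulated data with an assumed population correlation (r_target is an illustrative value, not an estimate from the ratings data) and pulls the individual pieces out of the summary object.

```r
set.seed(4)
n        <- 200
r_target <- -0.6                      # assumed population correlation
x <- rnorm(n)
y <- r_target * x + sqrt(1 - r_target^2) * rnorm(n)

fit <- lm(y ~ x)
s   <- summary(fit)

r_squared  <- s$r.squared
r_from_fit <- sign(coef(fit)[["x"]]) * sqrt(r_squared)  # restore the sign of the slope

print(s$coefficients)   # estimate, standard error, t value and p-value per predictor
print(s$sigma)          # residual standard error
print(c(R2 = r_squared, r = r_from_fit, cor = cor(x, y)))
```

The square root alone would always be positive, so the sign has to be taken from the slope when the correlation is negative, as it is here.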

Limits of linear regression

One problem with linear regression is the inordinate effect of outliers. Consider the plot comparing the frequencies of plural and singular words in ratings, produced as follows:

> plot(ratings$FreqSingular, ratings$FreqPlural)

and now add a regression line:

> abline(lm(FreqPlural ~ FreqSingular, data=ratings), lty = 1)

You’ll see that the regression line is greatly affected by the four points with singular frequency greater than 500. If we remove these four points as follows:

> abline(lm(FreqPlural ~ FreqSingular, data = ratings[ratings$FreqSingular < 500, ]), lty = 2)

we get a very different picture.
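The size of this effect can be seen by comparing the fitted slopes rather than the plotted lines. A minimal sketch with made-up frequencies (the vectors sing and plur are simulated stand-ins, not the FreqSingular and FreqPlural columns):

```r
set.seed(5)
# Hypothetical frequencies: 50 ordinary items plus 4 very frequent ones.
sing <- c(runif(50, 0, 100), 600, 700, 800, 900)
plur <- c(0.5 * sing[1:50] + rnorm(50, sd = 5), 0.05 * sing[51:54])
d    <- data.frame(sing, plur)

fit_all     <- lm(plur ~ sing, data = d)
fit_trimmed <- lm(plur ~ sing, data = d[d$sing < 500, ])  # drop the 4 extreme items

slope_all     <- coef(fit_all)[["sing"]]
slope_trimmed <- coef(fit_trimmed)[["sing"]]
print(c(all = slope_all, trimmed = slope_trimmed))
```

Four points out of 54 are enough to change the slope substantially, because points far from the mean of the predictor have high leverage on the fit.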

The second problem is exemplified by the following plot:

> with(ratings, plot(meanFamiliarity, meanSizeRating))

and then add the regression line:

> ratings.lm = lm(meanSizeRating ~ meanFamiliarity, data = ratings)
> abline(ratings.lm)

We see that the regression line is not very informative. Part of the problem is that there seem to be two groups of items. If we fit them separately the results are better. We can do this by creating separate data frames:

> plants = ratings[ratings$Class == "plant", ]
> animals = ratings[ratings$Class == "animal", ]

Then we add the points separately, first for plants:

> points(plants$meanFamiliarity, plants$meanSizeRating, pch = 'p', col = "darkgrey")
> lines(lowess(plants$meanFamiliarity, plants$meanSizeRating), col = "darkgrey")

and then for animals:

> points(animals$meanFamiliarity, animals$meanSizeRating, pch = 'a')
> lines(lowess(animals$meanFamiliarity, animals$meanSizeRating))

Now we can fit separate models:

> plants.lm = lm(meanSizeRating ~ meanFamiliarity, plants)
> abline(coef(plants.lm), col = "darkgrey", lty = 2)
> animals.lm = lm(meanSizeRating ~ meanFamiliarity, animals)
> abline(coef(animals.lm), lty = 2)
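When there are more than two groups, creating one data frame per group by hand becomes tedious. One possible alternative, sketched here on simulated data, is to split the data frame by class and fit one model per piece. The data frame d and its columns Class, fam and size are made-up stand-ins for the ratings data.

```r
set.seed(6)
# Made-up stand-in for the ratings data: two classes with different trends.
d <- data.frame(
  Class = rep(c("animal", "plant"), each = 40),
  fam   = runif(80, 1, 6)
)
d$size <- ifelse(d$Class == "animal", 3.5 - 0.1 * d$fam, 1.0 + 0.3 * d$fam) +
  rnorm(80, sd = 0.3)

# One linear model per class, fitted in a single step.
fits  <- lapply(split(d, d$Class), function(g) lm(size ~ fam, data = g))
coefs <- t(sapply(fits, coef))   # one row per class: intercept and slope
print(coefs)
```

Each row of coefs is identical to what a separate lm() call on the corresponding subset would give.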

But a straight line is not always enough: we also need to allow for non-linearity, for example by adding a quadratic term:

> plants.lm = lm(meanSizeRating ~ meanFamiliarity + I(meanFamiliarity^2), data = plants)

> summary(plants.lm)$coef
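Whether the quadratic term is worth adding can be judged by comparing the two nested models. A minimal sketch on simulated data with an assumed curved trend (fam and size are illustrative vectors, not the plants data):

```r
set.seed(7)
fam  <- runif(60, 1, 6)
size <- 3 - 1.2 * fam + 0.2 * fam^2 + rnorm(60, sd = 0.2)  # assumed curved trend
d    <- data.frame(fam, size)

linear    <- lm(size ~ fam, data = d)
quadratic <- lm(size ~ fam + I(fam^2), data = d)

# I() protects ^ so that it means arithmetic squaring inside the formula.
print(summary(quadratic)$coef)
cmp <- anova(linear, quadratic)   # F test: does the squared term improve the fit?
print(cmp)
```

Without I(), the ^ operator in a formula is interpreted as formula crossing rather than squaring, so the quadratic term would silently be dropped.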

Logistic regression

(Baayen ch. 6.3)

For classification, we can use Generalized Linear Models, implemented using the function glm().

Consider the visual lexical decision dataset, english, and let’s look at the variable CorrectLexdec, which the model below treats as the number of correct responses out of 30.

We can build a logistic model for this prediction task as follows:

> english.glm = glm(cbind(CorrectLexdec, 30 - CorrectLexdec) ~ Voice + MeanBigramFrequency + LengthInLetters + Ncount + WordCategory + poly(WrittenFrequency, 2) + poly(WrittenSpokenFrequencyRatio, 2) + poly(FamilySize, 2) + InflectionalEntropy + NumberComplexSynsets + AgeSubject, data = english, family = "binomial")

Let us now inspect the output of summary():

> summary(english.glm)
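To read the coefficient table it helps to remember that a binomial glm() estimates its coefficients on the log-odds scale. The sketch below fits the same kind of model (successes and failures bound together with cbind()) to simulated counts. The predictor freq, the generating coefficients and the data frame d are assumptions for illustration, not the english data.

```r
set.seed(8)
n_items  <- 200
n_trials <- 30                               # responses per item
freq     <- rnorm(n_items)                   # hypothetical standardized predictor
p_true   <- plogis(1 + 1 * freq)             # assumed true probability of a correct response
correct  <- rbinom(n_items, size = n_trials, prob = p_true)
d        <- data.frame(freq, correct)

fit <- glm(cbind(correct, n_trials - correct) ~ freq, data = d, family = "binomial")

print(summary(fit)$coefficients)             # estimates are on the log-odds scale
p_hat <- predict(fit, newdata = data.frame(freq = c(-1, 0, 1)), type = "response")
print(p_hat)                                 # predicted probabilities of a correct response
```

With type = "response", predict() converts the log-odds back to probabilities, so the prediction at freq = 0 is simply plogis() applied to the intercept.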

More linear regression

(Baayen ch. 6.1 and 6.2)