Autofocus System and Evaluation Methodologies: A Literature Review

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Ronin-S Release Notes

Date: 2019.08.28
Firmware: v1.9.0.80
Ronin App iOS: v1.2.2
Ronin App Android: v1.2.2
DJI Pro Assistant for Ronin (PC): v2.0.2
DJI Pro Assistant for Ronin (Mac): v2.0.2
User Manual: v1.2

What’s New?

Added video recording, autofocus, and focus pull support for Sony A9 and A6400 cameras with supported E-mount lenses using a Multi-Camera Control Cable (Micro-USB). To use autofocus on the Sony A9 and A6400, press halfway down on the camera control button of the gimbal.

Added photo capture, video recording, autofocus, and focus pull support for Canon EOS RP cameras with supported RF mount lenses using a Multi-Camera Control Cable (Type-C). To use autofocus on the Canon EOS RP, press halfway down on the camera control button of the gimbal.

Added photo capture, video recording, autofocus, and focus pull support for Canon M50 cameras with supported EF-M mount lenses using a Multi-Camera Control Cable (Micro-USB). To use autofocus on the Canon M50, press halfway down on the camera control button on the gimbal.

Added photo capture, video recording, autofocus, and focus pull support for Canon EOS 6D and EOS 80D cameras with supported EF mount lenses using a Multi-Camera Control Cable (Mini USB). To use autofocus on the Canon EOS 6D and EOS 80D, press halfway down on the camera control button of the gimbal.

Added photo capture, video recording, autofocus, and focus pull support for Panasonic G9 cameras with supported Micro 4/3 mount lenses using a Multi-Camera Control Cable (Micro USB).

Autofocus Camera with Liquid Lens

Pixelink has developed a family of USB 3.0 autofocus cameras that seamlessly integrate with liquid lenses providing you with cutting-edge solutions ideal for high-speed imaging applications.

Features
» One push, high-speed, point to point focus
» Seamless integration with liquid lenses
» Focus range of millimeters to infinity, in less than 20 ms
» Easy integration with Pixelink SDK

Benefits
» Large range of optical variation: Liquid interface allows for large phase shift variations
» Sturdiness: Tested for over 100 million cycles with zero performance degradation
» Shock resistance: Excellent output before and after shock tests
» High-speed: Reconfigures in tens of milliseconds
» Low power: Dissipates ~15mW, 10x lower than other systems

Applications
» Bar-Code Reading
» Biometrics - Facial and Retinal
» Inspection
» Biotechnology
» Medical Applications

1900 City Park Drive, Suite 410, Ottawa, Ontario K1J 1A3, Canada
Tel: +1.833.247.1211 (Canada)
Tel: 613.247.1211 (North America)
pixelink.com

Select the Camera and Autofocus Lens that Fits Your Application

Pixelink has a full line of USB 3.0 autofocus cameras including:

| Camera Model | Color Space | Sensor | Resolution | Sensor Size |
|---|---|---|---|---|
| PL-D721CU | Color | ON Semi Vita 1300 | 1.3 MP | 1/2” |
| PL-D721MU | Monochrome | ON Semi Vita 1300 | 1.3 MP | 1/2” |
| PL-D722CU | Color | ON Semi Vita 2000 | 2.3 MP | 2/3” |
| PL-D722MU | Monochrome | ON Semi Vita 2000 | 2.3 MP | 2/3” |
| PL-D729MU | Monochrome | ON Semi Mano 9600 | 9.5 MP | 2/3” |
| PL-D732CU | Color | CMOSIS CMV 2000 | 2.2 MP | 2/3” |
| PL-D732MU | Monochrome | CMOSIS CMV 2000 | 2.2 MP | 2/3” |
| PL-D732MU-NIR | | | | |

Detection and Depletion of Digital Cameras: an Exploration Into Protecting Personal Privacy in the Modern World Jessica Sanford Union College - Schenectady, NY

Union College
Union | Digital Works
Honors Theses, Student Work, 6-2016

Detection and Depletion of Digital Cameras: An Exploration into Protecting Personal Privacy in the Modern World
Jessica Sanford, Union College - Schenectady, NY

Follow this and additional works at: https://digitalworks.union.edu/theses
Part of the Photography Commons, Privacy Law Commons, and the Technology and Innovation Commons

Recommended Citation
Sanford, Jessica, "Detection and Depletion of Digital Cameras: An Exploration into Protecting Personal Privacy in the Modern World" (2016). Honors Theses. 206. https://digitalworks.union.edu/theses/206

This Open Access is brought to you for free and open access by the Student Work at Union | Digital Works. It has been accepted for inclusion in Honors Theses by an authorized administrator of Union | Digital Works. For more information, please contact [email protected].

Detection and Deflection of Digital Cameras: An Exploration into Protecting Personal Privacy in the Modern World
By Jessica Sanford
Submitted in partial fulfillment of the requirements for Honors in the Department of Computer Science
UNION COLLEGE
June, 2016

ABSTRACT
SANFORD, JESSICA. Detection and Deflection of Digital Cameras: An Exploration into Protecting Personal Privacy in the Modern World. Department of Computer Science, June 2016.
ADVISOR: John Rieffel

As all forms of technology become more integrated into our daily lives, personal privacy has become a major concern. Everyday devices, such as mobile phones, have surveillance capabilities simply by having a digital camera as part of the device. And while privacy and secrecy seem to go hand in hand, it is not always the case that one does not care about privacy because they have nothing to hide.

The Lightest and Smallest Full-Frame EOS Camera*

THE LIGHTEST AND SMALLEST FULL-FRAME EOS CAMERA*

The Portable Performer. Stepping up to a full-frame camera has never been easier. The mirrorless EOS RP – the lightest and most compact full-frame EOS camera to date* - includes a 26.2 MP CMOS sensor, fast and accurate autofocus, 4K video, and our powerful DIGIC 8 processor for exceptional results, even in low light. It’s compatible with RF lenses as well as all EF/EF-S lenses**, has helpful features like Feature Assistant and Creative Assist, and is versatile and comfortable to use with both a vari-angle touchscreen LCD and an electronic viewfinder. Wherever you travel, the EOS RP helps you capture the world with quality, control and ease.

SIZED TO MOVE. The EOS RP is the lightest and smallest full-frame EOS camera*, making it incredibly easy to carry on all your travels or even when you’re just feeling inspired at home or around the neighborhood.

GO FULL FRAME. The EOS RP is equipped with a 26.2 MP full-frame image sensor. This full-frame size gives you amazing flexibility when choosing a lens and outstanding image quality when shooting images and video.

EF/EF-S LENS COMPATIBILITY. In addition to compatibility with RF lenses, the EOS RP can also be used with all EF/EF-S lenses using the optional Mount Adapter EF-EOS R, providing exceptional flexibility for a wide variety

EASE TO PLEASE. Getting the results you want is made easier with a number of helpful and convenient options, including a vari-angle LCD, a built-in EVF, a convenient Mode Dial to select shooting modes

A Map of the Canon EOS 6D

CHAPTER 1
A Map of the Canon EOS 6D

If you’ve used the Canon EOS 6D, you know it delivers high-resolution images and provides snappy performance. Equally important, the camera offers a full complement of automated, semiautomatic, and manual creative controls. You also probably know that the 6D is the smallest and lightest full-frame dSLR available (at this writing), yet it still provides ample stability in your hands when you’re shooting. Controls on the back of the camera are streamlined, clearly labeled, and within easy reach during shooting. The exterior belies the power under the hood: the 6D includes Canon’s robust autofocus and metering systems and the very fast DIGIC 5+ image processor.

There’s a lot that is new on the 6D, but its intuitive design makes it easy for both novice and experienced Canon shooters to jump right in. This chapter provides a roadmap to using the camera controls and the camera menus.

COPYRIGHTED MATERIAL

This chapter is designed to take you under the hood and help find your way around the Canon EOS 6D quickly and easily. Exposure: ISO 100, f/2.8, 1/60 second, with a Canon 28-70mm f/2.8 USM.

Canon EOS 6D Digital Field Guide

The Controls on the Canon EOS 6D

There are several main controls that you can use together or separately to control many functions on the 6D. Once you learn these controls, you can make camera adjustments more efficiently.

Nikon D810 Setup Guide

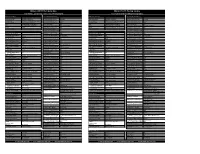

Two setup guides are given side by side: one for Nature, Landscape and Travel Photography, one for Portrait and Wedding Photography.

External Controls

| Setting | Nature, Landscape and Travel | Portrait and Wedding |
|---|---|---|
| Exposure Mode | Aperture Priority | Aperture Priority |
| Metering Mode | 3D Matrix Metering | 3D Matrix Metering |
| Bracketing | Off (unless HDR photography) | Off (unless HDR photography) |
| Shooting Mode | CH (Continuous High) | CH (Continuous High) |
| WB | Variable, dep. on situation | Variable, dep. on situation |
| ISO | 64 - 6400 dep. on situation | 100 - 6400 dep. on situation |
| QUAL | RAW | JPEG or RAW dep. on situation |
| Autofocus Mode | AF-S or AF-C dynamic 21-points | AF-S or AF-C dynamic 21-points |

Custom Setting Menus

| Setting | Nature, Landscape and Travel | Portrait and Wedding |
|---|---|---|
| Custom settings bank | A | B |
| a1 AF-C priority select | Release | Release |
| a2 AF-S priority select | Focus | Focus |
| a3 Focus track lock-on | OFF | OFF |
| a4 AF Activation | ON | ON |
| a5 Focus point illumination | ON, ON, Squares | ON, ON, Squares |
| a6 AF point illumination | ON | ON |
| a7 Focus point wrap | ON | ON |
| a8 Number of focus points | 51 | 51 |
| a9 Store by orientation | ON | ON |
| a10 Built-in AF assist illum | OFF | OFF |
| a11 Limit AF-area mode | All checked | All checked |
| a12 Autofocus mode restr. | | |

Shooting Menu

| Setting | Nature, Landscape and Travel | Portrait and Wedding |
|---|---|---|
| Shooting Menu Bank | A | B |
| Extended menu banks | ON | ON |
| Storage folder | Default | |

Camera Settings Guide

• " " and " " are trademarks or registered trademarks of Sony Corporation.
• All other company and product names mentioned herein are used for identification purposes only and may be the trademarks or registered trademarks of their respective owners. TM and ® symbols are not included in this booklet.
• Screen displays and effects used to illustrate some functions are simulated.

Conventional autofocus has until now dealt with space alone. Sony goes one step further, a big step, with an innovative image sensor that picks up both space and time to capture moving subjects with new clarity. Sony spells the beginning of a new autofocus era. 4D FOCUS allows you to take crisper photos than ever. Plain old autofocus is a thing of the past. The future of photography is in motion.

What is 4D FOCUS?

Space: 3D. Time: 4D.

Wide (Area): The wide AF area, covering nearly the entire frame, allows focusing on a subject positioned even off the center of the frame.

Fast (Depth): Fast Hybrid AF, combining phase-detection AF and contrast-detection AF, instantly detects distance to the subject to focus accurately.

Steadfast (Time): An advanced AF algorithm accurately predicts subject’s next move. Precise AF tracking allows focus to be maintained even on fast-moving subjects.

Meeting your focusing demands: Basic AF performance of Wide, Fast, Steadfast

Wide: Focusing over wide area. The α6000 employs a focal plane phase-detection AF sensor with 179 AF points spread to cover nearly the entire frame.

Fast: Instant focusing! Advanced Fast Hybrid AF combines phase-detection AF and contrast-detection AF to achieve

Steadfast: Once it's focused, it never lets go. With Focus Mode set to AF-C, the camera displays one or more small green frames

HD Camcorder

PUB. DIE-0508-000
HD Camcorder Instruction Manual

COPYRIGHT WARNING: Unauthorized recording of copyrighted materials may infringe on the rights of copyright owners and be contrary to copyright laws.

Trademark Acknowledgements
• SD, SDHC and SDXC Logos are trademarks of SD-3C, LLC.
• Microsoft and Windows are trademarks or registered trademarks of Microsoft Corporation in the United States and/or other countries.
• macOS is a trademark of Apple Inc., registered in the U.S. and other countries.
• HDMI, the HDMI logo and High-Definition Multimedia Interface are trademarks or registered trademarks of HDMI Licensing LLC in the United States and other countries.
• “AVCHD”, “AVCHD Progressive” and the “AVCHD Progressive” logo are trademarks of Panasonic Corporation and Sony Corporation.
• Manufactured under license from Dolby Laboratories. “Dolby” and the double-D symbol are trademarks of Dolby Laboratories.
• Other names and products not mentioned above may be trademarks or registered trademarks of their respective companies.
• This device incorporates exFAT technology licensed from Microsoft.
• “Full HD 1080” refers to Canon camcorders compliant with high-definition video composed of 1,080 vertical pixels (scanning lines).
• This product is licensed under AT&T patents for the MPEG-4 standard and may be used for encoding MPEG-4 compliant video and/or decoding MPEG-4 compliant video that was encoded only (1) for a personal and non-commercial purpose or (2) by a video provider licensed under the AT&T patents to provide MPEG-4 compliant video. No license is granted or implied for any other use for MPEG-4 standard.

Highlights of the Camcorder

The Canon XA15 / XA11 HD Camcorder is a high-performance camcorder whose compact size makes it ideal in a variety of situations.

The Mark of Legends a Revolution in Speed and Performance

THE MARK OF LEGENDS
A REVOLUTION IN SPEED AND PERFORMANCE

BLAZING SPEED, STELLAR QUALITY

Up to 14 fps* in Viewfinder / Up to 16 fps* in Live View Mode
Delivering outstanding performance at speeds of up to 14 fps*, and up to 16 fps* in Live View, the EOS-1D X Mark II camera is loaded with technologies that help facilitate speedy operation. It features a new mirror mechanism designed for highly precise operation with reduced vibration even at incredibly fast speeds, a CMOS sensor with high-speed signal reading that enables speedy image capture and many other features that help ensure that camera operations are performed quickly and precisely.

FAST FOCUS AND ACCURATE METERING

61 AF Points at f/8† and Low-intensity Limit of EV -3
The EOS-1D X Mark II camera features a new 61-point High Density Reticular AF II system with 41 cross-type points. AF point coverage is expanded in the vertical dimension, and AI Servo AF now accommodates sudden changes in subject speed, at default settings, even better than before. The

iTR AF with 360,000-pixel Metering Sensor
The amazingly advanced 360,000-pixel RGB+IR metering sensor and dedicated DIGIC 6 Image Processor enhance the EOS-1D X Mark II camera’s impressive AF performance for both stills and video by increasing the camera’s ability to recognize subjects for faster, more precise AF, metering and exposure compensation. This

Further section headings and fragments in the excerpt: 20.2 Megapixel Full-frame CMOS Sensor; noise reduction in dark portions of the image; Dual DIGIC 6+ Image Processors; Intelligent Viewfinder II; The EOS-1D X Mark II camera features a

Canon EOS 6D: from Snapshots to Great Shots

Canon EOS 6D: From Snapshots to Great Shots
Colby Brown

Peachpit Press
www.peachpit.com
To report errors, please send a note to [email protected]
Peachpit Press is a division of Pearson Education.
Copyright © 2013 by Peachpit Press

Project Editor: Valerie Witte
Production Editor: Tracey Croom
Copyeditor: Scout Festa
Proofreader: Patricia Pane
Composition: WolfsonDesign
Indexer: Rebecca Plunkett
Cover Image: Colby Brown
Cover Design: Aren Straiger
Interior Design: Riezebos Holzbaur Design Group
Back Cover Author Photo: Colby Brown

Notice of Rights
All rights reserved. No part of this book may be reproduced or transmitted in any form by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written permission of the publisher. For information on getting permission for reprints and excerpts, contact permissions@peachpit.com.

Notice of Liability
The information in this book is distributed on an “As Is” basis, without warranty. While every precaution has been taken in the preparation of the book, neither the author nor Peachpit shall have any liability to any person or entity with respect to any loss or damage caused or alleged to be caused directly or indirectly by the instructions contained in this book or by the computer software and hardware products described in it.

Trademarks
All Canon products are trademarks or registered trademarks of Canon Inc. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and Peachpit was aware of a trademark claim, the designations appear as requested by the owner of the trademark.

Ronin-S Release Notes

Date: 2019.03.14
Firmware: v1.8.0.70
Ronin App iOS: v1.1.8
Ronin App Android: v1.1.8
DJI Pro Assistant for Ronin (PC): v2.0.2
DJI Pro Assistant for Ronin (Mac): v2.0.2
User Manual: v1.2

What’s New?

Added five speed settings of focus for Nikon cameras (requires Ronin app v1.1.8 or later).

Added three speed settings of focus for Canon cameras (requires Ronin app v1.1.8 or later).

Added two speed settings of focus for Panasonic cameras (requires Ronin app v1.1.8 or later).

Added photo capture, video recording, autofocus, and focus pull support for Canon EOS 6D Mark II cameras with supported EF lenses. To use autofocus on the Canon EOS 6D Mark II, press halfway down on the gimbal’s camera control button.

Added photo capture, video recording, autofocus, and focus pull support for Canon EOS R cameras with supported RF mount lenses. To use autofocus on the Canon EOS R, press halfway down on the gimbal’s camera control button.

Added photo capture, video recording, autofocus, and focus pull support for Nikon Z6 cameras with supported Nikkor lenses. To use autofocus on the Nikon Z6, press halfway down on the gimbal’s camera control button.

Added video recording, autofocus, and focus pull support for Sony A7M3 and A7R3 cameras with supported E-mount lenses using MCC-C cable. Photo capture is not currently available. To use autofocus on the Sony A7M3 and A7R3 cameras, press halfway down on the gimbal’s camera control button.

Owner's Manual

Owner’s Manual
BL00004926-201 EN

Introduction

Thank you for your purchase of this product. Be sure that you have read this manual and understood its contents before using the camera. Keep the manual where it will be read by all who use the product.

For the Latest Information

The latest versions of the manuals are available from: http://fujifilm-dsc.com/en/manual/

The site can be accessed not only from your computer but also from smartphones and tablets. For information on firmware updates, visit: http://www.fujifilm.com/support/digital_cameras/software/

Chapter Index

| Chapter | Title | Page |
|---|---|---|
| | Menu List | iv |
| 1 | Before You Begin | 1 |
| 2 | First Steps | 19 |
| 3 | Basic Photography and Playback | 35 |
| 4 | Movie Recording and Playback | 41 |
| 5 | Taking Photographs | 47 |
| 6 | The Shooting Menus | 97 |
| 7 | Playback and the Playback Menu | 123 |
| 8 | The Setup Menus | 141 |
| 9 | Shortcuts | 157 |
| 10 | Peripherals and Optional Accessories | 163 |
| 11 | Connections | 169 |
| 12 | Technical Notes | 181 |

Menu List

Camera menu options are listed below.

Shooting Menus

Adjust settings when shooting photos or movies. See page 97 for details.

| SHOOTING MENU | Page |
|---|---|
| SCENE POSITION | 98 |
| ADVANCED FILTER | 98 |
| AF/MF SETTING | 98 |
| RELEASE TYPE | 101 |
| ISO | 102 |
| IMAGE SIZE | 103 |
| IMAGE QUALITY | 104 |
| DYNAMIC RANGE | 105 |
| FILM SIMULATION | 106 |
| FILM SIMULATION BKT | 107 |
| SELF-TIMER | 107 |
| INTERVAL TIMER SHOOTING | 108 |
| TIME-LAPSE MOVIE MODE | 109 |
| WHITE BALANCE | 110 |
| COLOR | 110 |
| SHARPNESS | 110 |
| HIGHLIGHT TONE | 110 |
| SHADOW TONE | 111 |
| NOISE REDUCTION | 111 |
| LONG EXPOSURE NR | 111 |
| AE BKT SETTING | 112 |
| TOUCH SCREEN MODE | 112 |
| TOUCH ZOOM | 112 |
| MOUNT ADAPTOR SETTING | 113 |
| SHOOT WITHOUT LENS | 115 |
| MF ASSIST | 115 |
| PHOTOMETRY | 115 |
| INTERLOCK SPOT AE & FOCUS AREA | 116 |
| FLASH SET-UP | 116 |
| MOVIE SET-UP | 117 |
| IS MODE | 120 |
| DIGITAL IMAGE STABILIZER | 120 |
| WIRELESS COMMUNICATION | 121 |
| SHUTTER TYPE | 121 |
| ELECTRONIC ZOOM SETTING | 122 |

Playback Menus

Adjust playback settings.