Data Compression: Challenging the Traditional Algorithms and Moving One Step Closer to Entropy

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

End-To-End Enterprise Encryption: a Look at Securezip® Technology

End-to-End Enterprise Encryption: A Look at SecureZIP® Technology. TECHNICAL WHITE PAPER WP 700.xxxx. Table of Contents: SecureZIP Executive Summary; SecureZIP: The Next Generation of ZIP; PKZIP: The Foundation for SecureZIP; Implementation of ZIP Encryption; Hybrid Cryptosystem; Cryptographic Calculation Sources; Digital Signing; In Step with the Data Protection Market’s Needs; Conclusion. Every day sensitive data is exchanged within your organization, both internally and with external partners. Personal health and insurance data of your employees is shared between your HR department and outside insurance carriers. Customer PII (Personally Identifiable Information) is transferred from your corporate headquarters to various offices around the world. Payment transaction data flows between your store locations and your payments processor. All of these instances involve sensitive data and regulated information that must be exchanged between systems, locations, and partners; a breach of any of them could lead to irreparable damage to your reputation and revenue. Organizations today must adopt a means for mitigating the internal and external risks of data breach and compromise. The required solution must support the exchange of data across operating systems to account for both the diversity of your own infrastructure and the unknown infrastructures of your customers, partners, and vendors. Moreover, that solution must integrate naturally into your existing workflows to keep operational cost and impact to a minimum while still protecting data end-to-end.

The Basic Principles of Data Compression

The Basic Principles of Data Compression. Author: Conrad Chung, 2BrightSparks. Introduction: Internet users who download or upload files from/to the web, or use email to send or receive attachments, will most likely have encountered files in compressed format. In this topic we will cover how compression works, the advantages and disadvantages of compression, as well as types of compression. What is Compression? Compression is the process of encoding data more efficiently to achieve a reduction in file size. One type of compression available is referred to as lossless compression. This means the compressed file will be restored exactly to its original state with no loss of data during the decompression process. This is essential to data compression, as the file would be corrupted and unusable should data be lost. Another compression category, which will not be covered in this article, is “lossy” compression, often used in multimedia files for music and images, where data is discarded. Lossless compression algorithms use statistical modeling techniques to reduce repetitive information in a file. Some of the methods may include removal of spacing characters, representing a string of repeated characters with a single character, or replacing recurring characters with smaller bit sequences. Advantages/Disadvantages of Compression: Compression of files offers many advantages. When compressed, the quantity of bits used to store the information is reduced. Files that are smaller in size will result in shorter transmission times when they are transferred on the Internet. Compressed files also take up less storage space. File compression can zip up several small files into a single file for more convenient email transmission.
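The excerpt above mentions "representing a string of repeated characters with a single character". A minimal sketch of that idea (run-length encoding) follows. The function names `rle_encode` and `rle_decode` are hypothetical, chosen here for illustration, and the excerpt does not say which concrete scheme any particular tool uses.

```python
from itertools import groupby


def rle_encode(text: str) -> list[tuple[str, int]]:
    """Collapse each run of identical characters into a (character, count) pair."""
    return [(char, len(list(run))) for char, run in groupby(text)]


def rle_decode(pairs: list[tuple[str, int]]) -> str:
    """Expand (character, count) pairs back into the original string."""
    return "".join(char * count for char, count in pairs)


sample = "aaaabbbcccd"
encoded = rle_encode(sample)
restored = rle_decode(encoded)

print(encoded)
print(restored == sample)  # lossless: decoding restores the input exactly
```

The round trip is what makes the scheme lossless. On text without long runs the pair list can be larger than the input, which is one reason practical tools rely on more elaborate models.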

Steganography and Vulnerabilities in Popular Archives Formats.| Nyxengine Nyx.Reversinglabs.Com

Hiding in the Familiar: Steganography and Vulnerabilities in Popular Archives Formats. NyxEngine, nyx.reversinglabs.com. Contents: Introduction to NyxEngine; Introduction to ZIP file format; Introduction to steganography in ZIP archives; Steganography and file malformation security impacts; References and tools. Introduction to NyxEngine: Steganography is the art and science of writing hidden messages in such a way that no one, apart from the sender and intended recipient, suspects the existence of the message, a form of security through obscurity. When it comes to digital steganography no stone should be left unturned in the search for viable hidden data. Although digital steganography is commonly used to hide data inside multimedia files, a similar approach can be used to hide data in archives as well. Steganography imposes the following data hiding rule: Data must be hidden in such a fashion that the user has no clue about the hidden message or file's existence. This can be achieved by

Users Manual

Users Manual PKZIP® Server SecureZIP® Server SecureZIP Partner Copyright © 1997-2007 PKWARE, Inc. All Rights Reserved. No part of this publication may be reproduced, transmitted, transcribed, stored in a retrieval system, or translated into any other language in whole or in part, in any form or by any means, whether it be electronic, mechanical, magnetic, optical, manual or otherwise, without prior written consent of PKWARE, Inc. PKWARE, INC., DISCLAIMS ALL WARRANTIES AS TO THIS SOFTWARE, WHETHER EXPRESS OR IMPLIED, INCLUDING WITHOUT LIMITATION ANY IMPLIED WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, FUNCTIONALITY, DATA INTEGRITY, OR PROTECTION. PKWARE IS NOT LIABLE FOR INCIDENTAL OR CONSEQUENTIAL DAMAGES. Portions of this software include RSA BSAFE ® cryptographic or security protocol software from RSA Security Inc. This software includes portions that are copyright © The OpenLDAP Foundation, 1998- 2003 and are used under the OpenLDAP Public License. The text of this license is indented below: The OpenLDAP Public License Version 2.7, 7 September 2001 Redistribution and use of this software and associated documentation ("Software"), with or without modification, are permitted provided that the following conditions are met: 1. Redistributions of source code must retain copyright statements and notices, 2. Redistributions in binary form must reproduce applicable copyright statements and notices, this list of conditions, and the following disclaimer in the documentation and/or other materials provided with the distribution, and 3. Redistributions must contain a verbatim copy of this document. The OpenLDAP Foundation may revise this license from time to time. Each revision is distinguished by a version number. You may use this Software under terms of this license revision or under the terms of any subsequent revision of the license. -

Archiving.Pdf

Archiving: Zip. Compression. Stuff like that. Written by Dan Gookin. Published by Quantum Particle Bottling Co., Coeur d’Alene, ID, 83814 USA. Copyright ©2008 by Quantum Particle Bottling Co., Inc. All Rights Reserved. This work cannot be reproduced or distributed without written permission of the copyright holder. Various copyrights and trademarks may or may not appear in this text. It is assumed that the trademark or copyright is owned by whoever owns it, and the use of that material here is in no way considered an infringement or abuse of the copyright or trademark. Further, there is. Oh, wait. Never mind. I’m just making all this up anyway. I’m not a lawyer. I hate lawyers. For additional information on this or other publications from Quantum Particle Bottling Co., please visit http://www.wambooli.com/ Second Edition, December 2008. Table of Contents: Archiving; What the Heck is Archiving?; Historical Nonsense About Compressed Folders and ZIP Files; The Bad Old Modem Days; Packing Multiple Files Into a Single Library; Better than Library Files, Compressed Archives; Enter the ARC file format

ES 201 684 V1.1.1 (1999-05) ETSI Standard

Draft ES 201 684 V1.1.1 (1999-05) ETSI Standard. Integrated Services Digital Network (ISDN); File Transfer Profile; B-channel aggregation and synchronous compression. Reference: DES/DTA-005069 (flc00icp.PDF). Keywords: B-channel, FT, ISDN, Terminal. ETSI Postal address: F-06921 Sophia Antipolis Cedex - FRANCE. Office address: 650 Route des Lucioles - Sophia Antipolis, Valbonne - FRANCE. Tel.: +33 4 92 94 42 00 Fax: +33 4 93 65 47 16. Siret N° 348 623 562 00017 - NAF 742 C. Non-profit association registered at the Sous-Préfecture de Grasse (06) N° 7803/88. Internet: [email protected] Individual copies of this ETSI deliverable can be downloaded from http://www.etsi.org If you find errors in the present document, send your comment to: [email protected] Copyright Notification: No part may be reproduced except as authorized by written permission. The copyright and the foregoing restriction extend to reproduction in all media. © European Telecommunications Standards Institute 1999. All rights reserved. Contents: Intellectual Property Rights; Foreword; 1 Scope

PKWARE Secures Marquee Investment Partners

FOR IMMEDIATE RELEASE PKWARE® Announces Latest Release of PKZIP® & SecureZIP® for IBM® z/OS® Milwaukee, WI (September 28, 2010) - PKWARE, Inc., a leading provider of data-centric security and compression software, today announced the latest version 12 release of PKZIP and SecureZIP for z/OS on System z. Users of the IBM z/OS mainframe looking to further enhance its ability to maximize data center efficiency will find that new versions of PKZIP and SecureZIP for z/OS provide a solution with unparalleled usability. “PKWARE is an IBM PartnerWorld Advanced Partner and is committed to serving the needs of the mainframe z/OS community,” said Joe Sturonas, Chief Technology Officer for PKWARE. “We [PKWARE] are committed, with each new release, to provide a product that allows our customers the maximum opportunity to not only leverage the z/OS capabilities IBM offers, but to improve their overall data center operations.” PKZIP for z/OS provides file management and data compression, resulting in more efficient data exchange through reduced transmission times and storage requirements. This improves overall data center operations and significantly reduces costs. The PKZIP family of products is interoperable so that data zipped and compressed on the mainframe can be unzipped and extracted on all major enterprise computing platforms. SecureZIP for z/OS is an optimal solution for providing durable data security to protect privacy, whether data resides on z/OS or is in motion to other platforms. SecureZIP for z/OS also leverages existing investments in hardware cryptography within the mainframe environment. Moreover, it includes the cost reduction capabilities of PKZIP and is also interoperable so that data encrypted on the mainframe can be decrypted and used on other operating systems, providing users comprehensive data protection across all major enterprise computing platforms. -

PKZIP /Securezip™ for Iseries

PKZIP®/SecureZIP™ for iSeries User’s Guide SZIU-V8R2000 PKWARE Inc. PKWARE Inc. 648 N Plankinton Avenue, Suite 220 Milwaukee, WI 53203 Sales: 937-847-2374 Sales - Email: [email protected] Support: 937-847-2687 Support - http://www.pkware.com/business_and_developers/support Fax: 414-289-9789 Web Site: http://www.pkware.com 8.2 Edition (2005) SecureZIP™ for iSeries, PKZIP® for iSeries, PKZIP for MVS, SecureZIP for zSeries, PKZIP for zSeries, PKZIP for OS/400, PKZIP for UNIX, and PKZIP for Windows are just a few of the many members in the PKZIP® family. PKWARE, Inc. would like to thank all the individuals and companies -- including our customers, resellers, distributors, and technology partners -- who have helped make PKZIP the industry standard for trusted ZIP solutions. PKZIP enables our customers to efficiently and securely transmit and store information across systems of all sizes, ranging from desktops to mainframes. This edition applies to the following PKWARE, Inc. licensed programs: PKZIP for iSeries (Version 8, Release 2, 2005) SecureZIP for iSeries (Version 8, Release 2, 2005) SecureZIP for iSeries Reader (Version 8, Release 2, 2005) SecureZIP for iSeries SecureLink (Version 8, Release 2, 2005) PKZIP is a registered trademark of PKWARE Inc. SecureZIP is a trademark of PKWARE Inc. Other product names mentioned in this manual may be a trademark or registered trademarks of their respective companies and are hereby acknowledged. Any reference to licensed programs or other material, belonging to any company, is not intended to state or imply that such programs or material are available or may be used. The copyright in this work is owned by PKWARE, Inc., and the document is issued in confidence for the purpose only for which it is supplied. -

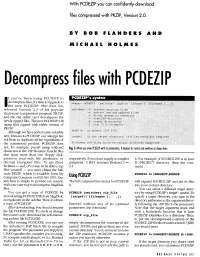

Decompress Files with PCDEZIP

With PCDEZIP you can confidently download files compressed with PKZIP, Version 2.0. BY BOB FLANDERS AND MICHAEL HOLMES. Decompress files with PCDEZIP. If you've been using PCUNZIP to decompress files, it's time to upgrade to the new PCDEZIP. Phil Katz has released Version 2.0 of his popular shareware compression program, PKZIP, and the old utility can't decompress the newly zipped files. The new PCDEZIP will unzip files zipped with either version of PKZIP. Although we have added some valuable new features to PCDEZIP, our attempt has not been to duplicate all the capabilities of the commercial product. PCDEZIP does not, for example, permit using wildcard characters in the .ZIP filename, handle files that span more than one floppy disk, preserve read-only file attributes, or decrypt encrypted files. To get these facilities, and, of course, to be able to zip files yourself, you must obtain the full-scale PKZIP, which is available from My Computer Company on (02) 565 1991. PCDEZIP's syntax: usage: PCDEZIP [switches] zipfile [target\] [filespec [...]]. switches: -f freshen existing files; extract new and updated files; do not prompt on overwrite; ZIP directory; -d create directories; -t test file integrity. zipfile: .ZIP file. target\: the target directory; trailing backslash required. filenames are files to be extracted; wildcards supported. Fig 1: When you enter PCDEZIP with no parameters, it displays its syntax and switches as shown here. respectively. If you don't supply a compiler parameter, C.BAT assumes Borland C++ 3.1. it. For example, if SOURCE.ZIP is in your D:\PROJECT directory, then the command PCDEZIP D:\PROJECT\SOURCE

PKZIP Stream Cipher 1 PKZIP

PKZIP Stream Cipher. PKZIP: Phil Katz’s ZIP program. Katz invented zip file format (ca 1989). Before that, Katz created PKARC utility: ARC compression was patented by SEA, Inc.; SEA successfully sued Katz. Katz then invented zip: ZIP was much better than SEA’s ARC; he started his own company, PKWare. Katz died of alcohol abuse at age 37 in 2000. PKZIP: PKZIP compresses files using zip. Optionally, it encrypts compressed file: uses a homemade stream cipher; PKZIP cipher due to Roger Schlafly; Schlafly has PhD in math (Berkeley, 1980). PKZIP cipher is susceptible to attack: attack is nontrivial, has significant work factor, lots of memory required, etc. PKZIP Cipher: Generates 1 byte of keystream per step. 96 bit internal state: state is three 32-bit words, which we label X, Y, Z; initial state derived from a password. Of course, password guessing is possible; we do not consider password guessing here. Cipher design seems somewhat ad hoc: no clear design principles; uses shifts, arithmetic operations, CRC, etc. PKZIP Encryption: Given current state X, Y, Z (32-bit words) and p = byte of plaintext to encrypt (note: upper case for 32-bit words, lower case for bytes), the algorithm is: k = getKeystreamByte(Z); c = p ⊕ k; update(X, Y, Z, p). Next, we define getKeystreamByte, update. PKZIP getKeystreamByte: Let “∨” be binary OR. Define 〈X〉i…j as bits i thru j (inclusive) of X; as usual, bits numbered left-to-right from 0. Shift X by n bits to right:
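The excerpt breaks off before defining `getKeystreamByte` and `update`, so the sketch below does not reconstruct the slides. It follows the "traditional PKWARE encryption" description in the published ZIP application note instead, mapped onto the excerpt's X, Y, Z naming as an assumption. The class name `ZipCrypto` and its methods are hypothetical, and the 12-byte encryption header that real ZIP files prepend is omitted. The cipher is known to be weak, so this is for illustration only.

```python
MASK32 = 0xFFFFFFFF


def _build_crc_table() -> list[int]:
    """Build the reflected CRC-32 table (polynomial 0xEDB88320)."""
    table = []
    for i in range(256):
        c = i
        for _ in range(8):
            c = (c >> 1) ^ 0xEDB88320 if c & 1 else c >> 1
        table.append(c)
    return table


CRC_TABLE = _build_crc_table()


def crc32_step(crc: int, byte: int) -> int:
    """One table-driven CRC-32 step, without the usual pre/post inversion."""
    return CRC_TABLE[(crc ^ byte) & 0xFF] ^ (crc >> 8)


class ZipCrypto:
    """Hypothetical wrapper around the 96-bit state (three 32-bit words)."""

    def __init__(self, password: bytes) -> None:
        # Fixed initial constants, then the state absorbs each password byte.
        self.x, self.y, self.z = 0x12345678, 0x23456789, 0x34567890
        for b in password:
            self.update(b)

    def keystream_byte(self) -> int:
        # The keystream byte depends only on the low 16 bits of Z.
        t = (self.z | 2) & 0xFFFF
        return ((t * (t ^ 1)) >> 8) & 0xFF

    def update(self, p: int) -> None:
        # The state is always updated with the plaintext byte.
        self.x = crc32_step(self.x, p)
        self.y = ((self.y + (self.x & 0xFF)) * 134775813 + 1) & MASK32
        self.z = crc32_step(self.z, self.y >> 24)

    def encrypt(self, plaintext: bytes) -> bytes:
        out = bytearray()
        for p in plaintext:
            out.append(p ^ self.keystream_byte())
            self.update(p)
        return bytes(out)

    def decrypt(self, ciphertext: bytes) -> bytes:
        out = bytearray()
        for c in ciphertext:
            p = c ^ self.keystream_byte()
            out.append(p)
            self.update(p)
        return bytes(out)


message = b"attack at dawn, zip style"
ciphertext = ZipCrypto(b"secret").encrypt(message)
print(ZipCrypto(b"secret").decrypt(ciphertext) == message)
```

Because `update` is fed the plaintext byte in both directions, the sender and receiver keep identical state as long as they start from the same password.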

Virus Bulletin, June 1990

June 1990 ISSN 0956-9979 THE AUTHORITATIVE INTERNATIONAL PUBLICATION ON COMPUTER VIRUS PREVENTION, RECOGNITION AND REMOVAL Editor: Edward Wilding Technical Editor: Fridrik Skulason, University of Iceland Editorial Advisors: Jim Bates, Bates Associates, UK, Phil Crewe, Fingerprint, UK, Dr. Jon David, USA, David Ferbrache, Heriot-Watt University, UK, Dr. Bertil Fortrie, Data Encryption Technologies, Holland, Hans Gliss, Datenschutz Berater, West Germany, Ross M. Greenberg, Software Concepts Design, USA, Dr. Harold Joseph Highland, Compulit Microcomputer Security Evaluation Laboratory, USA, Dr. Jan Hruska, Sophos, UK, Dr. Keith Jackson, Walsham Contracts, UK, Owen Keane, Barrister, UK, Yisrael Radai, Hebrew University, Israel, John Laws, RSRE, UK, David T. Lindsay, Digital Equipment Corporation, UK, Martin Samociuk, Network Security Management, UK, John Sherwood, Sherwood Associates, UK, Roger Usher, Coopers&Lybrand, UK, Dr. Ken Wong, BIS Applied Systems, UK KNOWN IBM PC VIRUSES CONTENTS (UPDATE) 11 EDITORIAL TOOLS & TECHNIQUES Computer Viruses as Weapon Dynamic Decompression, Systems 2 LZEXE and the Virus Problem 12 PROCEDURES WORM PROGRAMS Training and Awareness 3 The Internet Worm - Action and Reaction 13 FEATURE ARTICLE PRODUCT REVIEW The Bulgarian Computer Viruses - ‘The Virus Factory’ 6 Certus - Tools To Fight Viruses With 18 ANTI-VIRUS SOFTWARE END-NOTES & NEWS 20 FOR IBM PCs 10 VIRUS BULLETIN ©1990 Virus Bulletin Ltd, England./90/$0.00+2.50 This bulletin is available only to qualified subscribers. No part of this publication may be reproduced, stored in a retrieval system, or transmitted by any form or by any means, electronic, magnetic, optical or photocopying, without the prior written permission of the publishers or a licence permitting restricted copying issued by the Copyright Licencing Agency. -

Lossless Compression: State of the Art Many More Variants

Lossless compression: state of the art. Many more variants: In our lessons we’ve seen some of the most common algorithms for lossless compression. Literature and applications present some other algorithms and many more variants. Popular applications have proprietary encoding schemes. State of the art (2005): Windows: .zip, .cab, .RAR, .ACE, .7z (7-Zip); Linux: .gz (gzip), .bz2 (bzip2), .Z (Compress); Mac: .zip, .sit (Stuffit). zip format - I: The ZIP file format was originally created by Phil Katz, founder of PKWARE. Katz publicly released technical documentation on the ZIP file format, along with the first version of his PKZIP archiver, in January 1989. Katz had converted compression routines of a previously available archival program, ARC, from C to optimized assembler code. He was sued for copyright infringement and found liable. zip format - II: Then he created his own file format, and the .zip format he designed was a much more efficient compression format than .ARC. In the mid 1990s, as more new computers included graphical user interfaces, some authors proposed shareware compression programs with a GUI. The most famous, in the Windows environment, is Winzip (www.winzip.com). The zip format uses a combination of the LZ77 algorithm and Huffman coding. zip format - III: In the late 1990s, various file manager software started integrating support for the zip format into the file manager user interface: Windows Explorer (Windows Me, Windows XP); Finder (Mac OS X); Nautilus file manager used with GNOME; Konqueror file
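The excerpt above says that zip combines LZ77 with Huffman coding. As a rough illustration, Python's standard `zlib` module exposes the same family of method (DEFLATE), and its output can be compared with a simple order-0 Shannon entropy estimate of the input. The helper name `order0_entropy_bits` and the sample text are assumptions made for this sketch, not anything taken from the listed documents.

```python
import math
import zlib
from collections import Counter


def order0_entropy_bits(data: bytes) -> float:
    """Shannon entropy of the byte histogram, in bits per byte."""
    if not data:
        return 0.0
    total = len(data)
    return -sum((n / total) * math.log2(n / total) for n in Counter(data).values())


data = b"the quick brown fox jumps over the lazy dog. " * 200
compressed = zlib.compress(data, 9)  # DEFLATE stream inside a zlib wrapper

entropy_estimate_bytes = order0_entropy_bits(data) * len(data) / 8
print(f"original size:      {len(data)} bytes")
print(f"order-0 estimate:   {entropy_estimate_bytes:.0f} bytes")
print(f"zlib output size:   {len(compressed)} bytes")
print(zlib.decompress(compressed) == data)
```

On highly repetitive input like this, the LZ77 stage can go below the order-0 estimate, because that estimate treats every byte as independent and ignores repeated phrases. It is a bound only for coders that model single-byte frequencies.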