2.7 Turing Machines and Grammars

We now turn our attention back to Turing machines as language acceptors.


Recommended publications

Computability and Complexity

Computability and Complexity: Lecture Notes, Winter Semester 2016/2017. Wolfgang Schreiner, Research Institute for Symbolic Computation (RISC), Johannes Kepler University, Linz, Austria. July 18, 2016.

Contents
List of Definitions
List of Theorems
List of Theses
List of Figures
Notions, Notations, Translations
1. Introduction
I. Computability
2. Finite State Machines and Regular Languages
  2.1. Deterministic Finite State Machines
  2.2. Nondeterministic Finite State Machines
  2.3. Minimization of Finite State Machines
  2.4. Regular Languages and Finite State Machines
  2.5. The Expressiveness of Regular Languages
3. Turing Complete Computational Models
  3.1. Turing Machines
    3.1.1. Basics
    3.1.2. Recognizing Languages
    3.1.3. Generating Languages
    3.1.4. Computing Functions
    3.1.5. The Church-Turing Thesis
  3.2. Turing Complete Computational Models
    3.2.1. Random Access Machines
    3.2.2. Loop and While Programs
    3.2.3. Primitive Recursive and µ-recursive Functions
    3.2.4. Further Models
  3.3. The Chomsky Hierarchy
  3.4. Real Computers
…

Recursively Enumerable and Recursive Languages

CS 301 - Lecture 23: Recursive and Recursively Enumerable Languages. Fall 2008.

Review
• Languages and Grammars
  – Alphabets, strings, languages
• Regular Languages
  – Deterministic Finite and Nondeterministic Automata
  – Equivalence of NFA and DFA
  – Regular Expressions
  – Regular Grammars
  – Properties of Regular Languages
  – Languages that are not regular and the pumping lemma
• Context Free Languages
  – Context Free Grammars
  – Derivations: leftmost, rightmost and derivation trees
  – Parsing and ambiguity
  – Simplifications and Normal Forms
  – Nondeterministic Pushdown Automata
  – Pushdown Automata and Context Free Grammars
  – Deterministic Pushdown Automata
  – Pumping Lemma for context free grammars
  – Properties of Context Free Grammars
• Turing Machines
  – Definition, Accepting Languages, and Computing Functions
  – Combining Turing Machines and Turing’s Thesis
  – Turing Machine Variations
  – Universal Turing Machine and Linear Bounded Automata
  – Today: Recursive and Recursively Enumerable Languages

Recursively Enumerable and Recursive Languages

Definition: A language is recursively enumerable if some Turing machine accepts it.

Let L be a recursively enumerable language and M the Turing Machine that accepts it. For string w: if w ∈ L then M halts in a final state; if w ∉ L then M halts in a non-final state or loops forever.

Definition: A language is recursive if some Turing machine accepts it and halts on any input string. In other words: a language is recursive if there is a membership algorithm for it.

Let L be a recursive language and M the Turing Machine …

Computability

Computability

Tao Jiang, Department of Computer Science, McMaster University, Hamilton, Ontario L8S 4K1, Canada
Ming Li, Department of Computer Science, University of Waterloo, Waterloo, Ontario N2L 3G1, Canada
Bala Ravikumar, Department of Computer Science, University of Rhode Island, Kingston, RI 02881, USA
Kenneth W. Regan, Department of Computer Science, State University of New York at Buffalo, Buffalo, NY 14260, USA

1 Introduction

In the last two chapters, we have introduced several important computational models, including Turing machines, and Chomsky's hierarchy of formal grammars. In this chapter, we will explore the limits of mechanical computation as defined by these models. We begin with a list of fundamental problems for which automatic computational solution would be very useful. One of these is the universal simulation problem: can one design a single algorithm that is capable of simulating any algorithm? Turing's demonstration that the answer is yes [Turing, 1936] supplied the proof for Babbage's dream of a single machine that could be programmed to carry out any computational task. We introduce a simple Turing machine programming language called "GOTO" in order to facilitate our own design of a universal machine.

Next, we describe the schemes of primitive recursion and μ-recursion, which enable a concise, mathematical description of computable functions that is independent of any machine model. We show that the μ-recursive functions are the same as those computable on a Turing machine, and describe some computable functions including one that solves a second problem on our list. The success in solving ends there, however. We show in the last section of this chapter that all of the remaining problems on our list are unsolvable by Turing machines, and subject to the Church-Turing thesis, have no mechanical or human solver at all.
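The two schemes named in this excerpt, primitive recursion and μ-recursion (unbounded minimization), can be sketched in Python. The excerpt does not spell the schemes out, so the argument convention (recursion on the first argument) and every function name below are assumptions of this sketch, not the chapter's own notation.

```python
from typing import Callable


def primitive_recursion(base: Callable[..., int],
                        step: Callable[..., int]) -> Callable[..., int]:
    """Build h with h(0, *xs) = base(*xs) and h(n+1, *xs) = step(n, h(n, *xs), *xs)."""
    def h(n: int, *xs: int) -> int:
        acc = base(*xs)
        for i in range(n):  # the recursion unrolled into a bounded loop
            acc = step(i, acc, *xs)
        return acc
    return h


def mu(pred: Callable[..., int]) -> Callable[..., int]:
    """Unbounded minimization: least n with pred(n, *xs) == 0.

    Unlike primitive recursion, this search has no bound and may never return.
    """
    def f(*xs: int) -> int:
        n = 0
        while pred(n, *xs) != 0:
            n += 1
        return n
    return f


# add(n, x) = x + n, built from the successor operation only.
add = primitive_recursion(lambda x: x, lambda i, acc, x: acc + 1)
# mul(n, x) = n * x, built from add.
mul = primitive_recursion(lambda x: 0, lambda i, acc, x: add(x, acc))
# isqrt(x) = least n such that (n + 1)^2 > x (multiplication used directly for brevity).
isqrt = mu(lambda n, x: 0 if (n + 1) * (n + 1) > x else 1)

print(add(3, 4), mul(3, 4), isqrt(10))  # 7 12 3
```

The bounded `for` loop versus the unbounded `while` loop is the point of the contrast: functions built only with the first scheme always terminate, while minimization can define partial functions.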

Turing Machines: an Introduction

CIT 596 – Theory of Computation (© Marcelo Siqueira, Spring 2005)

Turing Machines: An Introduction

We have seen several abstract models of computing devices: Deterministic Finite Automata, Nondeterministic Finite Automata, Nondeterministic Finite Automata with ε-Transitions, Pushdown Automata, and Deterministic Pushdown Automata. However, none of the above “seem to be” as powerful as a real computer, right? We now turn our attention to a much more powerful abstract model of a computing device: a Turing machine. This model is believed to do everything that a real computer can do.

A Turing machine is somewhat similar to a finite automaton, but there are important differences:
1. A Turing machine can both write on the tape and read from it.
2. The read-write head can move both to the left and to the right.
3. The tape is infinite.
4. The special states for rejecting and accepting take immediate effect.

Formally, a Turing machine is a 7-tuple (Q, Σ, Γ, δ, q0, q_accept, q_reject), where Q is the (finite) set of states, Σ is the input alphabet such that Σ does not contain the special blank symbol ⊔, Γ is the tape alphabet such that ⊔ ∈ Γ and Σ ⊆ Γ, δ : Q × Γ → Q × Γ × {L, R} is the transition function, q0 ∈ Q is the start state, q_accept ∈ Q is the accept state, and q_reject ∈ Q is the reject state, where q_accept ≠ q_reject.
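The 7-tuple definition maps fairly directly onto a small simulator. The following is a minimal sketch, not the course's code: the character `_` stands in for the blank symbol, the tape is modelled as unbounded in both directions, missing transitions are treated as moves to the reject state, and the example machine for {aⁿbⁿ | n ≥ 0} with its state names is hypothetical.

```python
from typing import Dict, Tuple

BLANK = "_"  # stand-in for the blank tape symbol (an assumption of this sketch)

# delta maps (state, read symbol) to (next state, written symbol, head move).
Delta = Dict[Tuple[str, str], Tuple[str, str, str]]


def run_tm(delta: Delta, start: str, accept: str, reject: str,
           word: str, max_steps: int = 10_000) -> bool:
    """Simulate a deterministic one-tape Turing machine on `word`."""
    tape = dict(enumerate(word))  # sparse tape; cells not stored are blank
    state, head = start, 0
    for _ in range(max_steps):
        if state == accept:  # accepting and rejecting take immediate effect
            return True
        if state == reject:
            return False
        symbol = tape.get(head, BLANK)
        # Convention of this sketch: an undefined transition means reject.
        state, written, move = delta.get((state, symbol), (reject, symbol, "R"))
        tape[head] = written
        head += 1 if move == "R" else -1
    raise RuntimeError("step budget exhausted; the machine may not halt")


# Hypothetical machine for { a^n b^n | n >= 0 }: mark one 'a' as X, find the
# matching 'b' and mark it Y, return to the left, and repeat.
ANBN: Delta = {
    ("q0", "a"): ("q1", "X", "R"),
    ("q0", "Y"): ("q3", "Y", "R"),
    ("q0", BLANK): ("acc", BLANK, "R"),
    ("q1", "a"): ("q1", "a", "R"),
    ("q1", "Y"): ("q1", "Y", "R"),
    ("q1", "b"): ("q2", "Y", "L"),
    ("q2", "a"): ("q2", "a", "L"),
    ("q2", "Y"): ("q2", "Y", "L"),
    ("q2", "X"): ("q0", "X", "R"),
    ("q3", "Y"): ("q3", "Y", "R"),
    ("q3", BLANK): ("acc", BLANK, "R"),
}

print(run_tm(ANBN, "q0", "acc", "rej", "aabb"))  # True
print(run_tm(ANBN, "q0", "acc", "rej", "aab"))   # False
```

The step budget exists only because a real simulator cannot wait forever; the abstract machine has no such limit, which is exactly why a Turing machine may loop on some inputs.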

Theory of Computation Summary

Theory of Computation (CS 46)

Sets and Functions

We write 2^A for the set of subsets of A.
Definition. A set, A, is countably infinite if there exists a bijection from A to the natural numbers.
Note. This allows us to enumerate A, using the order from the bijection.
Definition. A set is countable if it is finite or countably infinite. It is uncountable otherwise.
Theorem. If A is a subset of a countable set, then A is countable.
Theorem. N × N is countable.
Corollary. A countable union of countable sets is countable.

Programs

Definition. A program is a finite string (ordered set of symbols) over a finite alphabet.
Note. This is distinct from the process associated with the program, which may be infinite.
Theorem. The number of programs is countable.
Proof. The number of strings of length k is finite, and the set of all programs is the union of this countable number of countable sets.
Theorem. The number of functions from the natural numbers to {0,1} is uncountably infinite.
Corollary. There are more functions than possible programs. Thus, some functions are not computable.

Languages

Definition. An alphabet (Σ) is a finite set of symbols. A string is a finite sequence from an alphabet. The set of all strings over an alphabet is denoted Σ*.
· |w| is the length of the string w.
· a^k = aa … a (k times)
Definition. A language is a subset of Σ*. The following operations are defined on languages:
· L1 + L2 is the union of the two languages
· L1 · L2 = {l1l2 | l1 ∈ L1 and l2 ∈ L2} is the concatenation of two languages.
…
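The theorem that N × N is countable can be made concrete by walking the pairs diagonal by diagonal, which is one standard way to exhibit the bijection. The sketch below is illustrative only; the function names are hypothetical and the summary itself does not say which enumeration its proof uses.

```python
from itertools import count, islice
from typing import Iterator, Tuple


def pairs() -> Iterator[Tuple[int, int]]:
    """Enumerate N x N one anti-diagonal at a time: all (i, j) with i + j = 0, then 1, ..."""
    for total in count(0):
        for i in range(total + 1):
            yield (i, total - i)


def cantor_index(i: int, j: int) -> int:
    """Position of (i, j) in the enumeration produced by pairs()."""
    total = i + j
    # total * (total + 1) // 2 pairs lie on the earlier diagonals.
    return total * (total + 1) // 2 + i


print(list(islice(pairs(), 6)))
# [(0, 0), (0, 1), (1, 0), (0, 2), (1, 1), (2, 0)]
```

Every pair sits on a finite diagonal, so it is reached after finitely many steps, and `cantor_index` gives its position in closed form.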

QUESTION BANK SOLUTION Unit 1 Introduction to Finite Automata

FLAT 10CS56 QUESTION BANK SOLUTION (Dept of CSE, SJBIT)

Unit 1: Introduction to Finite Automata

1. Obtain DFAs to accept strings of a’s and b’s having exactly one a. (5m) (Jun-Jul 10)

2. Obtain a DFA to accept strings of a’s and b’s having even number of a’s and b’s. (5m) (Jun-Jul 10)
L = {ε, aabb, abab, baba, baab, bbaa, aabbaa, ...}

3. Give Applications of Finite Automata. (5m) (Jun-Jul 10)
String Processing: Consider finding all occurrences of a short string (pattern string) within a long string (text string). This can be done by processing the text through a DFA: the DFA for all strings that end with the pattern string. Each time the accept state is reached, the current position in the text is output.
Finite-State Machines: A finite-state machine is an FA together with actions on the arcs.
Statecharts: Statecharts model tasks as a set of states and actions. They extend FA diagrams.
Lexical Analysis: In compiling a program, the first step is lexical analysis. This isolates keywords, identifiers etc., while eliminating irrelevant symbols. A token is a category, for example “identifier”, “relation operator” or specific keyword.

4. Define DFA, NFA & Language? (5m) (Jun-Jul 10)
Deterministic finite automaton (DFA), also known as deterministic finite state machine, is a finite state machine that accepts/rejects finite strings of symbols and only produces a unique computation (or run) of the automaton for each input string. 'Deterministic' refers to the uniqueness of the computation.
Nondeterministic finite automaton (NFA) or nondeterministic finite state machine is a finite state machine where from each state and a given input symbol the automaton may jump into several possible next states.
…
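Questions 1 and 2 can be checked by writing the two DFAs as transition tables and running them. This is one possible construction under assumed state names, not the question bank's own solution (the original diagrams are not part of the excerpt).

```python
from typing import Dict, Set, Tuple

Transition = Dict[Tuple[str, str], str]


def dfa_accepts(delta: Transition, start: str, accepting: Set[str], word: str) -> bool:
    """Run a DFA over `word`; exactly one transition fires per input symbol."""
    state = start
    for symbol in word:
        state = delta[(state, symbol)]
    return state in accepting


# Question 1: exactly one 'a'. "dead" is a trap state entered on a second 'a'.
ONE_A: Transition = {
    ("none", "a"): "one",  ("none", "b"): "none",
    ("one", "a"): "dead",  ("one", "b"): "one",
    ("dead", "a"): "dead", ("dead", "b"): "dead",
}

# Question 2: even number of a's and even number of b's. Each state name
# records the parity (e = even, o = odd) of the a's, then of the b's, read so far.
EVEN_EVEN: Transition = {
    ("ee", "a"): "oe", ("ee", "b"): "eo",
    ("oe", "a"): "ee", ("oe", "b"): "oo",
    ("eo", "a"): "oo", ("eo", "b"): "ee",
    ("oo", "a"): "eo", ("oo", "b"): "oe",
}

print(dfa_accepts(ONE_A, "none", {"one"}, "bbab"))    # True
print(dfa_accepts(EVEN_EVEN, "ee", {"ee"}, "abab"))   # True
print(dfa_accepts(EVEN_EVEN, "ee", {"ee"}, "aab"))    # False
```

Because the table has an entry for every state and symbol, each input string has exactly one run, which is the uniqueness that "deterministic" refers to in question 4.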

Languages and Finite Automata

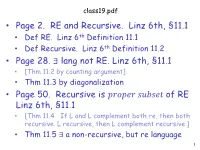

class19.pdf
• Page 2. RE and Recursive. Linz 6th, §11.1
• Def RE. Linz 6th Definition 11.1
• Def Recursive. Linz 6th Definition 11.2
• Page 28. ∃ lang not RE. Linz 6th, §11.1
• [Thm 11.2 by counting argument]
• Thm 11.3 by diagonalization
• Page 50. Recursive is proper subset of RE. Linz 6th, §11.1
• [Thm 11.4 If L and L complement both RE, then both recursive. L recursive, then L complement recursive.]
• Thm 11.5 ∃ a non-recursive, but RE language

Recursively Enumerable and Recursive Languages

Recall Definition (class 18.pdf), Definition 10.4, Linz, 6th, page 279: Let S be a set of strings. An enumeration procedure for S is a Turing Machine that generates all strings of S one by one, and each string is generated in finite time.

Definition: A language is recursively enumerable if some Turing machine accepts it.

Let L be a recursively enumerable language and M the Turing Machine that accepts it. For string w: if w ∈ L then M halts in a final state; if w ∉ L then M halts in a non-final state or loops forever.

Definition (11.2, page 287): A language is recursive if some Turing machine accepts it and halts on any input string. In other words: a recursive language has a membership algorithm.

Let L be a recursive language and M the Turing Machine that accepts it. L is a recursive language if there is a Turing Machine M such that, for any string w: if w ∈ L then M halts in a final state; if w ∉ L then M halts in a non-final state.

We will prove: 1. …
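An enumeration procedure for a recursively enumerable language cannot simply run the accepting machine on one string after another, because the machine may loop forever on a string outside the language. A common workaround is dovetailing: in round n, run the first n candidate strings for n steps each. The sketch below assumes a hypothetical step-bounded recognizer `accepts_within(w, steps)` in place of a real Turing machine, and the toy language is chosen only for illustration.

```python
from itertools import count, islice, product
from typing import Callable, Iterator, List, Set


def all_strings(alphabet: str) -> Iterator[str]:
    """Yield every string over `alphabet`, shortest first."""
    for n in count(0):
        for letters in product(alphabet, repeat=n):
            yield "".join(letters)


def enumerate_language(accepts_within: Callable[[str, int], bool],
                       alphabet: str) -> Iterator[str]:
    """Dovetail: in round n, test the first n strings with a budget of n steps."""
    seen: Set[str] = set()
    candidates: List[str] = []
    source = all_strings(alphabet)
    for n in count(1):
        candidates.append(next(source))
        for w in candidates:
            if w not in seen and accepts_within(w, n):
                seen.add(w)
                yield w  # every accepted string appears after finitely many rounds


def toy(w: str, steps: int) -> bool:
    """Hypothetical recognizer: accepts strings with equally many a's and b's,
    pretending to need len(w)**2 steps; it never reports rejection."""
    return steps >= len(w) ** 2 and w.count("a") == w.count("b")


print(list(islice(enumerate_language(toy, "ab"), 4)))
# ['', 'ab', 'ba', 'aabb']
```

A string that is never accepted is simply retried in every round with a larger budget, so no single non-halting run can block the enumeration.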

Theory of Computation Final Exam

Theory of Computation Final Exam. 2007
NAME: ..................................................
Student ID.: ..................................................

1. (72 pts) True or false? (Score = Right − ½ Wrong.) Mark ‘O’ for true; ‘×’ for false.
  1. O  {a^i | i is prime} is not context free.
  2. X  {(a^n b)^n | n ≥ 1} is context free.
  3. X  {(a^n b)^m | m, n ≥ 1} is context free.
  4. O  If L1 is context free and L2 is regular, then L1/L2 is context free. (Note that L1/L2 = {x | ∃y ∈ L2, xy ∈ L1})
  5. X  If L1/L2 and L1 are context free, then L2 must be recursive.
  6. X  If L1 and L1 ∪ L2 are context free, then L2 must be context free.
  7. O  If L1 is context free and L2 is regular, then L1 − L2 is context free.
  8. X  If L1 is regular and L2 is context free, then L1 − L2 is context free.
  9. O  If L1 is regular and L2 is context-free, then L1 ∩ L2 must be a CFL.
  10. O  If L1 and L2 are CFLs, then L1 ∪ L2 must be a CFL.
  11. O  If L is context free, then L^R (= {x^R | x ∈ L}) is also context free.
  12. X  Nondeterministic and deterministic versions of PDAs are equivalent.
  13. O  If a language L does not satisfy the conditions stated in the pumping lemma for CFLs, then L is not context-free.
  14. X  Every infinite set of strings over a single letter alphabet Σ (= {a}) contains an infinite context free subset.
  15. X  Every infinite context-free set contains an infinite regular subset.
…

Public Sector Exams

Theory & Practice Book: Computer Science & IT for Public Sector Exams (ISRO, DRDO, BARC, BEL, HAL, NTPC, ONGC, BHEL, SAIL, GAIL, MTNL, FCI, ECL, ATC, DMRC, HLL, UPRVNL, CSPEB, OPTCL....)

MADE EASY Publications. Corporate Office: 44-A/1, Kalu Sarai, New Delhi-16 | Regd. Office: 25-A, Ber Sarai, New Delhi-16. Website: www.madeeasypublications.org | Phone: 011-26560862, 011-32059862

Theory & Practice Book for Computer Science & IT. Copyright © 2013, by MADE EASY Publications. All rights are reserved. No part of this publication may be reproduced, stored in or introduced into a retrieval system, or transmitted in any form or by any means (electronic, mechanical, photo-copying, recording or otherwise), without the prior written permission of the above mentioned publisher of this book. First Edition: 2010. Second Edition: 2012. Third Edition: 2013. ISBN: 978-93-81069-32-5. Typeset at: MADE EASY Publications, New Delhi-110016.

PREFACE

“Theory & Practice Book on Computer Science” contains theory, including basic concepts and formulae, and nearly 3000 questions with answers and explanations. The authors (MADE EASY Team) are very well aware of the requirements of the Public Sector Examinations like ISRO, DRDO, BARC, BEL, HAL, NTPC, ONGC, BHEL, SAIL, GAIL, MTNL, ECL, ATC, DMRC, HLL, UPRVNL, CSPEB, OPTCL etc. The content of this book therefore includes questions similar to those normally asked in the Public Sector Examinations.
…

Faculty of Degree Engineering - 083 Department of CE / IT – 07 / 16 Multiple Choice Questions Bank Theory of Computation 2160704

Faculty of Degree Engineering - 083, Department of CE / IT – 07 / 16
Multiple Choice Questions Bank: Theory Of Computation 2160704
Prepared By: Prof. Janvi P. Patel, Prof. Bhoomi M. Bangoria (Computer/IT Engineering)

1. Let R1 and R2 be regular sets defined over alphabet ∑ then
   A. R1 UNION R2 is regular
   B. R1 INTERSECTION R2 is regular
   C. ∑ INTERSECTION R2 IS NOT REGULAR
   D. R2* IS NOT REGULAR
   Ans. R1 UNION R2 is regular

2. Consider the production of the grammar S->AA, A->aa, A->bb. Describe the language specified by the production grammar.
   A. L = {aaaa,aabb,bbaa,bbbb}
   B. L = {abab,abaa,aaab,baaa}
   C. L = {aaab,baba,bbaa,bbbb}
   D. L = {aaaa,abab,bbaa,aaab}
   Ans. L = {aaaa,aabb,bbaa,bbbb}

3. Give a production grammar that specifies the language L = {a^i b^(2i) | i >= 1}
   A. {S->aSbb,S->abb}
   B. {S->aSb, S->b}
   C. {S->aA,S->b,A->b}
   D. None of the above
   Ans. {S->aSbb,S->abb}

4. Which of the following strings belongs to the language L = {a^i b^(2i) | i >= 1}?
   A. aaabbbbbb
   B. aabbb
   C. abbabbba
   D. aaaabbbabb
   Ans. aaabbbbbb

5. Give a production grammar for the language L = {x | x ∈ (a,b)*, the number of a’s in x is multiple of 3}.
   A. {S->bS,S->b,S->aA,S->bA,A->aB,B->bB,B->aS,S->a}
   B. {S->aS,S->bA,A->bB,B->bBa,B->bB}
   C. {S->aaS,S->bbA,A->bB,B->ba}
   D. None of the above
   Ans. …
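The answers to questions 2 to 4 can be checked mechanically by expanding the grammars. The sketch below does a breadth-first search over leftmost derivations; it assumes uppercase letters are nonterminals and that no production shortens a sentential form (true for the grammars in questions 2 and 3), and the function name `generate` is hypothetical.

```python
from collections import deque
from typing import Dict, List, Set


def generate(rules: Dict[str, List[str]], start: str, max_len: int) -> Set[str]:
    """Return all terminal strings of length <= max_len derivable from `start`.

    Assumes uppercase letters are nonterminals and no rule shrinks a
    sentential form, so forms longer than max_len can safely be discarded.
    """
    results: Set[str] = set()
    seen: Set[str] = {start}
    queue = deque([start])
    while queue:
        form = queue.popleft()
        # Position of the leftmost nonterminal, if any remains.
        idx = next((i for i, c in enumerate(form) if c.isupper()), None)
        if idx is None:
            results.add(form)  # only terminals left: a sentence of the language
            continue
        for rhs in rules[form[idx]]:
            new_form = form[:idx] + rhs + form[idx + 1:]
            if len(new_form) <= max_len and new_form not in seen:
                seen.add(new_form)
                queue.append(new_form)
    return results


# Question 2: S -> AA, A -> aa | bb
q2 = generate({"S": ["AA"], "A": ["aa", "bb"]}, "S", 4)
print(sorted(q2))  # ['aaaa', 'aabb', 'bbaa', 'bbbb']

# Question 3: S -> aSbb | abb, strings up to length 9
q3 = generate({"S": ["aSbb", "abb"]}, "S", 9)
print(sorted(q3, key=len))  # ['abb', 'aabbbb', 'aaabbbbbb']
```

The second result also confirms question 4: of the listed options, only `aaabbbbbb` has the form a^i b^(2i).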

CSci 311, Models of Computation, Chapter 11: A Hierarchy of Formal Languages and Automata

CSci 311, Models of Computation. Chapter 11: A Hierarchy of Formal Languages and Automata. H. Conrad Cunningham, 29 December 2015.

Contents
Introduction
11.1 Recursive and Recursively Enumerable Languages
  11.1.1 Aside: Countability
  11.1.2 Definition of Recursively Enumerable Language
  11.1.3 Definition of Recursive Language
  11.1.4 Enumeration Procedure for Recursive Languages
  11.1.5 Enumeration Procedure for Recursively Enumerable Languages
  11.1.6 Languages That are Not Recursively Enumerable
  11.1.7 A Language That is Not Recursively Enumerable
  11.1.8 A Language That is Recursively Enumerable but Not Recursive
11.2 Unrestricted Grammars
11.3 Context-Sensitive Grammars and Languages
  11.3.1 Linz Example 11.2
  11.3.2 Linear Bounded Automata (lba)
  11.3.3 Relation Between Recursive and Context-Sensitive Languages
11.4 The Chomsky Hierarchy

Copyright (C) 2015, H. Conrad Cunningham

Acknowledgements: MS student Eli Allen assisted in preparation of these notes. These lecture notes are for use with Chapter 11 of the textbook: Peter Linz. Introduction to Formal Languages and Automata, Fifth Edition, Jones and Bartlett Learning, 2012. The terminology and notation used in these notes are similar to those used in the Linz textbook. This document uses several figures from the Linz textbook.

Advisory: The HTML version of this document requires use of a browser that supports the display of MathML. A good choice as of December 2015 seems to be a recent version of Firefox from Mozilla.

Computability

Computability. COMP2600: Formal Methods for Software Engineering. Katya Lebedeva, Australian National University, Semester 2, 2016. Slides created by Katya Lebedeva. (COMP 2600: Turing Machines)

Definition of “Computability”: Church-Turing Thesis

The “Church-Turing thesis” is the general belief that the notion of “computable in principle” can be expressed in terms of
• Turing’s Machines
• Church’s Lambda Calculus
• Gödel’s Recursive Functions
• Kleene’s Formal Systems
• Markov’s Algorithms
• …

Definition of computability in terms of TMs: A computable function is a function that can be implemented by a TM.

Definition of computability in terms of λ-calculus: A computable function is a function that can be implemented as a λ-term.

These definitions are equivalent because
• for every TM, we can write a λ-term that simulates it
• for every λ-term, we can build a TM that simulates it

We could also give definitions of computable function in terms of other models of computation. Thus, the “Church-Turing thesis” is the belief that any of these definitions is an adequate characterisation of “computability in principle”.

Why TMs?

TMs are a terrible model for thinking about fast computation. But when talking about computability, we are not interested in finding fast algorithms, or even finding algorithms at all! We are interested to prove that some problems cannot be solved by any computational device, i.e. that they are undecidable. TM is an extremely simple model, but powerful enough to describe arbitrary algorithms. Importantly, TMs are powerful enough to simulate other TMs and simple enough to build up this simulation from scratch.