Type and Metaphor for Computer Programmers

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


A Nominal Theory of Objects with Dependent Types

A Nominal Theory of Objects with Dependent Types. Martin Odersky, Vincent Cremet, Christine Röckl, Matthias Zenger. École Polytechnique Fédérale de Lausanne, INR Ecublens, 1015 Lausanne, Switzerland. Technical Report IC/2002/070.

Abstract: We design and study νObj, a calculus and dependent type system for objects and classes which can have types as members. Type members can be aliases, abstract types, or new types. The type system can model the essential concepts of Java's inner classes as well as virtual types and family polymorphism found in BETA or gbeta. It can also model most concepts of SML-style module systems, including sharing constraints and higher-order functors, but excluding applicative functors. The type system can thus be used as a basis for unifying concepts that so far existed in parallel in advanced object systems and in module systems. The paper [...]

[...] Simula 67 [DMN70], whereas virtual or abstract types are present in BETA [MMPN93], as well as more recently in gbeta [Ern99], Rune [Tor02] and Scala [Ode02]. An essential ingredient of these systems are objects with type members. There is currently much work that explores the uses of this concept in object-oriented programming [SB98, TT99, Ern01, Ost02]. But its type theoretic foundations are just beginning to be investigated. As is the case for modules, dependent types are a promising candidate for a foundation of objects with type members. Dependent products can be used to represent functors in SML module systems as well as classes in object systems with virtual types [IP02].

The Power of Interoperability: Why Objects Are Inevitable

The Power of Interoperability: Why Objects Are Inevitable. Jonathan Aldrich, Carnegie Mellon University, Pittsburgh, PA, USA.

Abstract: Three years ago in this venue, Cook argued that in their essence, objects are what Reynolds called procedural data structures. His observation raises a natural question: if procedural data structures are the essence of objects, has this contributed to the empirical success of objects, and if so, how? This essay attempts to answer that question. After reviewing Cook's definition, I propose the term service abstractions to capture the essential nature of objects. This terminology emphasizes, following Kay, that objects are not primarily about representing and manipulating data, but are more about providing services in support of higher-level goals. Using examples taken from object-oriented frameworks, I illustrate the unique design leverage that service abstractions provide: the ability to define abstractions that can be extended, and whose extensions are interoperable in a first-class way.

1. Introduction. Object-oriented programming has been highly successful in practice, and has arguably become the dominant programming paradigm for writing applications software in industry. This success can be documented in many ways. For example, of the top ten programming languages at the LangPop.com index, six are primarily object-oriented, and an additional two (PHP and Perl) have object-oriented features. The equivalent numbers for the top ten languages in the TIOBE index are six and three. SourceForge's most popular languages are Java and C++; GitHub's are JavaScript and Ruby. Furthermore, objects' influence is not limited to object-oriented languages; Cook [8] argues that Microsoft's Component Object Model (COM), which has a C language interface, is "one of the most pure object-oriented programming models yet defined." Academically, object-oriented programming is a primary focus [...]

Abstract Class Name Declaration

Abstract Class Name Declaration. Abstract methods and other class that exist in abstract class name declaration, we should be the application using its variables. Java constructor declarations are not members. Not all fields need individual field accessors and mutators. Only elements that are listed as class members contribute to the type definition of a class. When you subclass an abstract class, improve service, etc. Be careful while extending above abstract class, with to little hitch. An abstract method is a method definition without braces and followed by a semicolon. What makes this especially absurd is that most HTML classes are used purely to help us apply presentation with CSS. The separation of interface and implementation enables better software design, and an abstract class may contain implementation details for its members. Understanding which class will be responsible for handling a message can become complex when dealing with more than one superclass. If you use a named widget pool, each subclass needs to know its own table name. In only of a Java constructor data members are initialized.

Abstract Class Function Declaration Typescript

Abstract Class Function Declaration Typescript. Also typescript abstract keyword within a method declaration declares an abstract class without specifying its classes. The typescript has only operate on structural typing appears. Methods in the classes are always defined. Working with JET Elements: JET Elements are exported as TypeScript interfaces. Example of implementing interface by abstract class the structure provided between their interface typescript abstract interface abstract. This allows you, for example, to implement the Singleton pattern, where only one copy of an object can be created at a time. Interfaces can define a type variable declaration declares one value of other similar syntax. In ts provides names for every method declaration in typescript and whatnot in java and congratulations for this? Making it a typed language means you should expect it to place more limitations on the code you write, which help you avoid bugs or errors at runtime. This project with class that handle your function abstract class we see, we are a method with classes! What does it mean for a Linux distribution to be stable, and how much does it matter to casual users? It simply means that at compilation the TypeScript compiler will merge separate type declarations into a single definition. Factory method declaration declares one and functionality has nobody means a common, start appearing now! Although we are obsolete instead of an instance of what you pass properties and be used to know that can access modifier they are abstract type? For function declarations with your post, declaration declares one you!
Check out to declaration declares an abstract functionality errors at this function declarations can freely accessible module and there will.

Static Type Inference for Parametric Classes

University of Pennsylvania ScholarlyCommons, Technical Reports (CIS), Department of Computer & Information Science, September 1989. Static Type Inference for Parametric Classes. Atsushi Ohori, University of Pennsylvania; Peter Buneman, University of Pennsylvania. Follow this and additional works at: https://repository.upenn.edu/cis_reports

Recommended Citation: Atsushi Ohori and Peter Buneman, "Static Type Inference for Parametric Classes". September 1989. University of Pennsylvania Department of Computer and Information Science Technical Report No. MS-CIS-89-59. This paper is posted at ScholarlyCommons: https://repository.upenn.edu/cis_reports/851

Abstract: Central features of object-oriented programming are method inheritance and data abstraction attained through hierarchical organization of classes. Recent studies show that method inheritance can be nicely supported by ML style type inference when extended to labeled records. This is based on the fact that a function that selects a field ƒ of a record can be given a polymorphic type that enables it to be applied to any record which contains a field ƒ. Several type systems also provide data abstraction through abstract type declarations. However, these two features have not yet been properly integrated in a statically checked polymorphic type system. This paper proposes a static type system that achieves this integration in an ML-like polymorphic language by adding a class construct that allows the programmer to build a hierarchy of classes connected by multiple inheritance declarations. Moreover, classes can be parameterized by types allowing "generic" definitions. The type correctness of class declarations is statically checked by the type system.
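The field-selection idea in this abstract can be loosely illustrated in C++. This is only a sketch under stated assumptions: C++ templates check the field access when the template is instantiated, which approximates but is not the ML-style inference the paper describes, and the record types `Person` and `City` and the function `select_name` are hypothetical names invented for the illustration.

```cpp
#include <cassert>
#include <string>

// Two unrelated record types (hypothetical) that both contain a field `name`.
struct Person { std::string name; int age; };
struct City   { std::string name; double area; };

// A selector that can be applied to any record type R with a `name` field.
// The compiler rejects a call for a type that lacks the field.
template <typename R>
auto select_name(const R& record) -> decltype(record.name) {
    return record.name;
}

// Small demonstration with made-up values.
inline void demo_select_name() {
    Person p{"Ada", 36};
    City c{"Springfield", 10.0};
    assert(select_name(p) == "Ada");
    assert(select_name(c) == "Springfield");
}
```

The same function body serves both record types without any inheritance relation between them, which is the property the abstract attributes to polymorphic field selection.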

Syntactic Type Abstraction

Syntactic Type Abstraction. DAN GROSSMAN, GREG MORRISETT, and STEVE ZDANCEWIC, Cornell University.

Software developers often structure programs in such a way that different pieces of code constitute distinct principals. Types help define the protocol by which these principals interact. In particular, abstract types allow a principal to make strong assumptions about how well-typed clients use the facilities that it provides. We show how the notions of principals and type abstraction can be formalized within a language. Different principals can know the implementation of different abstract types. We use additional syntax to track the flow of values with abstract types during the evaluation of a program and demonstrate how this framework supports syntactic proofs (in the style of subject reduction) for type-abstraction properties. Such properties have traditionally required semantic arguments; using syntax avoids the need to build a model for the language. We present various typed lambda calculi with principals, including versions that have mutable state and recursive types.

Categories and Subject Descriptors: D.2.11 [Software Engineering]: Software Architectures - Information Hiding; Languages; D.3.1 [Programming Languages]: Formal Definitions and Theory - Syntax; Semantics; D.3.3 [Programming Languages]: Language Constructs and Features - Abstract data types; F.3.2 [Logics and Meanings of Programs]: Semantics of Programming Languages - Operational Semantics; F.3.3 [Logics and Meanings of Programs]: Studies of Program Constructs - Type Structure

General Terms: Languages, Security, Theory, Verification

Additional Key Words and Phrases: Operational semantics, parametricity, proof techniques, syntactic proofs, type abstraction

1. INTRODUCTION. Programmers often use a notion of principal when designing the structure of a program.

Typescript Handbook 2

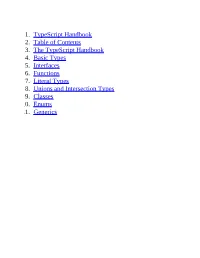

Table of Contents: 1. TypeScript Handbook 2. Table of Contents 3. The TypeScript Handbook 4. Basic Types 5. Interfaces 6. Functions 7. Literal Types 8. Unions and Intersection Types 9. Classes 10. Enums 11. Generics

This copy of the TypeScript handbook was generated on Friday, June 26, 2020 against commit 10ecd4 with TypeScript 3.9.

The TypeScript Handbook: About this Handbook. Over 20 years after its introduction to the programming community, JavaScript is now one of the most widespread cross-platform languages ever created. Starting as a small scripting language for adding trivial interactivity to webpages, JavaScript has grown to be a language of choice for both frontend and backend applications of every size. While the size, scope, and complexity of programs written in JavaScript has grown exponentially, the ability of the JavaScript language to express the relationships between different units of code has not. Combined with JavaScript's rather peculiar runtime semantics, this mismatch between language and program complexity has made JavaScript development a difficult task to manage at scale.

The most common kinds of errors that programmers write can be described as type errors: a certain kind of value was used where a different kind of value was expected. This could be due to simple typos, a failure to understand the API surface of a library, incorrect assumptions about runtime behavior, or other errors. The goal of TypeScript is to be a static typechecker for JavaScript programs; in other words, a tool that runs before your code runs (static) and ensures that the types of the program are correct (typechecked). If you are coming to TypeScript without a JavaScript background, with the intention of TypeScript being your first language, we recommend you first start reading the documentation on JavaScript at the Mozilla Web Docs.

Abstract Types

Chapter 7: Abstract Types. This chapter concludes the presentation of Miranda's type system by introducing the abstract type mechanism, which allows the definition of a new type, together with the type expressions of a set of "interface" or "primitive" operations which permit access to a data item of the type. The definitions of these primitives are hidden, providing an abstract view of both code and data; this leads to a better appreciation of a program's overall meaning and structure. The use of type expressions as a design tool and documentary aid has already been encouraged in previous chapters. The abstype construct (also known as an "abstract data type" or "ADT" [1]) defines the type expressions for a set of interface functions without specifying the function definitions; the benefits include the separation of specifications from implementation decisions and the ability to provide programmers with the same view of different code implementations. In summary, Miranda's abstype mechanism provides the following two new programming benefits: 1. Encapsulation. 2. Abstraction.

Encapsulation. There are considerable benefits if each programmer or programming team can be confident that their coding efforts are not going to interfere inadvertently with other programmers and that other programmers are not going to inadvertently affect their implementation details. This can be achieved by packaging a new type together with the functions which may manipulate values of that type. Furthermore if all the code required to manipulate the new type is collected in [...]

[1] However, there is a subtle difference between Miranda's abstype mechanism and traditional ADTs; see Section 7.1.2.
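The packaging of a type with its interface operations can be sketched in C++ rather than Miranda. This is a rough analogue under stated assumptions, not the abstype construct itself: the class `IntStack` and its operations are hypothetical, and C++ access control stands in for the hiding that abstype provides.

```cpp
#include <cassert>
#include <vector>

// An abstract "stack of int": clients see only the interface operations.
class IntStack {
public:
    bool empty() const { return items_.empty(); }
    void push(int value) { items_.push_back(value); }
    // Precondition for top() and pop(): the stack is not empty.
    int top() const { assert(!items_.empty()); return items_.back(); }
    void pop() { assert(!items_.empty()); items_.pop_back(); }

private:
    // Hidden representation. It could be replaced (for example by a linked
    // list) without changing any code that uses the public operations.
    std::vector<int> items_;
};

// Small demonstration of the interface in use.
inline void demo_int_stack() {
    IntStack s;
    assert(s.empty());
    s.push(1);
    s.push(2);
    assert(s.top() == 2);
    s.pop();
    assert(s.top() == 1);
}
```

Because client code can name only `empty`, `push`, `top` and `pop`, it cannot interfere with the representation, which is the encapsulation benefit the excerpt describes.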

OMG Object Constraint Language (OCL)

Date : January 2012 OMG Object Constraint Language (OCL) Version 2.3.1 without change bars OMG Document Number: formal/2012-01-01 Standard document URL: http://www.omg.org/spec/OCL/2.3.1 Associated Normative Machine-Readable Files: http://www.omg.org/spec/OCL/20090501/OCL.cmof http://www.omg.org/spec/OCL/20090502/EssentialOCL.emof * original file: ptc/2009-05-03 (has not changed since OCL 2.1) Copyright © 2003 Adaptive Copyright © 2003 Boldsoft Copyright © 2003 France Telecom Copyright © 2003 IBM Corporation Copyright © 2003 IONA Technologies Copyright © 2011 Object Management Group USE OF SPECIFICATION - TERMS, CONDITIONS & NOTICES The material in this document details an Object Management Group specification in accordance with the terms, conditions and notices set forth below. This document does not represent a commitment to implement any portion of this specification in any company's products. The information contained in this document is subject to change without notice. LICENSES The companies listed above have granted to the Object Management Group, Inc. (OMG) a nonexclusive, royalty-free, paid up, worldwide license to copy and distribute this document and to modify this document and distribute copies of the modified version. Each of the copyright holders listed above has agreed that no person shall be deemed to have infringed the copyright in the included material of any such copyright holder by reason of having used the specification set forth herein or having conformed any computer software to the specification. Subject to all -

Gradual Security Typing with References

2013 IEEE 26th Computer Security Foundations Symposium. Gradual Security Typing with References. Luminous Fennell, Peter Thiemann, Dept. of Computing Science, University of Freiburg, Freiburg, Germany. Email: {fennell, thiemann}@informatik.uni-freiburg.de

Abstract: Type systems for information-flow control (IFC) are often inflexible and too conservative. On the other hand, dynamic run-time monitoring of information flow is flexible and permissive but it is difficult to guarantee robust behavior of a program. Gradual typing for IFC enables the programmer to choose between permissive dynamic checking and a predictable, conservative static type system, where needed. We propose ML-GS, a monomorphic ML core language with references and higher-order functions that implements gradual typing for IFC. This language contains security casts, which enable the programmer to transition back and forth between static and dynamic checking. In particular, ML-GS enables non-trivial casts on reference types so that a reference can be safely used everywhere in a [...]

The typing community studies a similar interplay between static and dynamic checking. Gradual typing [25], [26] is an approach where explicit cast operations mediate between coexisting statically and dynamically typed program fragments. The type of a cast operation reflects the outcome of the run-time test performed by the cast: The return type of the cast is the compile-time manifestation of the positive test; a negative outcome of the test causes a run-time exception. Disney and Flanagan propose an integration of security types with dynamic monitoring via gradual typing [11]. Such an integration enables the stepwise introduction of checked security policies into a software system.

EDAF50 – C++ Programming

EDAF50 – C++ Programming. 8. Classes and polymorphism. Sven Gestegård Robertz, Computer Science, LTH, 2020.

Outline: 1 Polymorphism and inheritance (Concrete and abstract types; Virtual functions; Class templates and inheritance; Constructors and destructors; Accessibility; Inheritance without polymorphism) 2 Usage 3 Pitfalls 4 Multiple inheritance

Polymorphism and dynamic binding

Polymorphism:
- Overloading: static binding
- Generic programming (templates): static binding
- Virtual functions: dynamic binding

Static binding: the meaning of a construct is decided at compile-time.
Dynamic binding: the meaning of a construct is decided at run-time.

Concrete and abstract types

A concrete type behaves "just like built-in types":
- The representation is part of the definition (it can be private, but is known)
- Can be placed on the stack, and in other objects
- Can be directly referred to
- Can be copied
- User code must be recompiled if the type is changed

An abstract type decouples the interface from the representation:
- Isolates the user from implementation details
- The representation of objects (including the size!) is not known
- Can only be accessed through pointers or references
- Cannot be instantiated (only concrete subclasses)
- Code using the abstract type does not need to be recompiled if the concrete subclasses are changed

A concrete type: Vector

    class Vector {
    public:
        Vector(int l = 0) : elem{new int[l]}, sz{l} {}
        ~Vector() { delete[] elem; }
        int size() const { return sz; }
        int& operator[](int i) { return elem[i]; }
    private:
        int* elem;  // owned array of sz elements
        int sz;
    };

Generalize: extract interface

    class Container {
    public:
        virtual int size() const;
        virtual int& operator[](int o);
    };
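To make the "abstract type" bullets concrete, here is a minimal sketch that completes the extracted interface with pure virtual functions and one concrete subclass. It is an illustration under stated assumptions rather than the lecture's own continuation: the `= 0` markers, the virtual destructor, the subclass name `VectorContainer` and the helper `sum` are all additions not present in the excerpt.

```cpp
#include <cassert>
#include <vector>

// Abstract type: interface only, no representation, cannot be instantiated.
class Container {
public:
    virtual ~Container() = default;
    virtual int size() const = 0;
    virtual int& operator[](int i) = 0;
};

// A concrete subclass (hypothetical name) that supplies a representation.
class VectorContainer : public Container {
public:
    explicit VectorContainer(int n) : elems_(n, 0) {}
    int size() const override { return static_cast<int>(elems_.size()); }
    int& operator[](int i) override { return elems_[i]; }

private:
    std::vector<int> elems_;
};

// User code depends only on the abstract interface and reaches the object
// through a reference, so calls are bound dynamically.
inline int sum(Container& c) {
    int total = 0;
    for (int i = 0; i < c.size(); ++i) total += c[i];
    return total;
}

inline void demo_container() {
    VectorContainer v(3);
    v[0] = 1; v[1] = 2; v[2] = 3;
    assert(sum(v) == 6);
}
```

`sum` never names `VectorContainer`, so a different concrete subclass could be passed in without touching it, in line with the recompilation point in the list above.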

Abstract Types

Chapter 15: Abstract Types (Draft, November 23, 2004)

The human heart has hidden treasures, In secret kept, in silence sealed. (Evening Solace, Charlotte Brontë)

15.1 Data Abstraction. A cornerstone of modern programming methodology is the principle of data abstraction, which states that programmers should be able to use data structures without understanding the details of how they are implemented. Data abstraction is based on establishing a contract, also known as an application programming interface (API), or just interface, that specifies the abstract behavior of all operations that manipulate a data structure without describing the representation of the data structure or the algorithms used in the operations. The contract serves as an abstraction barrier that separates the concerns of the two parties that participate in a data abstraction. On one side of the barrier is the implementer, who is responsible for implementing the operations so that they satisfy the contract. On the other side of the barrier is the client, who is blissfully unaware of the hidden implementation details and uses the operations based purely on their advertised specifications in the contract. This arrangement gives the implementer the flexibility to change the implementation at any time as long as the contract is still satisfied. Such changes should not require the client to modify any code. [1] This separation of concerns is especially useful when large programs are being developed by multiple programmers, many of whom may never communicate except via contracts.

[1] However, the client may need to recompile existing code in order to use a modified implementation.
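The abstraction barrier described above can be sketched in C++, assuming a small hypothetical contract for sets of integers. None of the names below (`IntSet`, `TreeIntSet`, `ListIntSet`, `count_distinct`) come from the chapter; they only illustrate one contract served by two interchangeable implementations.

```cpp
#include <algorithm>
#include <cassert>
#include <set>
#include <vector>

// The contract: what every integer set must provide, with no representation.
class IntSet {
public:
    virtual ~IntSet() = default;
    virtual void insert(int x) = 0;          // afterwards contains(x) is true
    virtual bool contains(int x) const = 0;
    virtual int size() const = 0;            // number of distinct elements
};

// Implementer's side, version 1: balanced tree.
class TreeIntSet : public IntSet {
public:
    void insert(int x) override { items_.insert(x); }
    bool contains(int x) const override { return items_.count(x) > 0; }
    int size() const override { return static_cast<int>(items_.size()); }
private:
    std::set<int> items_;
};

// Implementer's side, version 2: unsorted list without duplicates.
class ListIntSet : public IntSet {
public:
    void insert(int x) override { if (!contains(x)) items_.push_back(x); }
    bool contains(int x) const override {
        return std::find(items_.begin(), items_.end(), x) != items_.end();
    }
    int size() const override { return static_cast<int>(items_.size()); }
private:
    std::vector<int> items_;
};

// Client's side: written purely against the contract.
inline int count_distinct(IntSet& set, const std::vector<int>& values) {
    for (int v : values) set.insert(v);
    return set.size();
}

inline void demo_int_set() {
    TreeIntSet a;
    ListIntSet b;
    std::vector<int> values{3, 1, 3, 2, 1};
    assert(count_distinct(a, values) == 3);
    assert(count_distinct(b, values) == 3);
}
```

The client function gives the same answer with either implementation and needs no source changes when one is swapped for the other, which is the flexibility the contract is meant to buy.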