Code Optimization

Recommended publications

Loop Pipelining with Resource and Timing Constraints

UNIVERSITAT POLITÈCNICA DE CATALUNYA. Loop Pipelining with Resource and Timing Constraints. Author: Fermín Sánchez. October 1995.

2 SOFTWARE PIPELINING

2.1 INTRODUCTION

Software pipelining [Cha81] comprises a family of techniques aimed at finding a pipelined schedule of the execution of loop iterations. The pipelined schedule represents a new loop body which may contain instructions belonging to different iterations. The sequential execution of the schedule takes less time than the sequential execution of the iterations of the loop as they were initially written.

In general, a pipelined loop schedule has the following characteristics (we will assume here that the schedule contains a unique iteration of the loop):

• All the iterations (of the new loop) are executed in the same fashion.
• The initiation interval (II) between the issuing of two consecutive iterations is always the same.

Figure 2.1 shows an example of software pipelining a loop. The DDG representing the loop body to pipeline is presented in Figure 2.1(a). The loop must be executed ten times (with an iteration index i ∈ [0, 9]). Let us assume that all instructions in the loop are executed in a single cycle and a new iteration may start every cycle (otherwise the dependence between instruction A from iterations i and i+1 will not be honored). With this assumption, the loop can be executed in a more parallel fashion than the sequential one, as shown in Figure 2.1(b).

[Figure 2.1: Software pipelining a loop. (a) DDG representing a loop body. (b) Parallel execution of the loop, with prologue, steady state and epilogue. (c) New parallel loop body.]
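
The prologue, steady state and epilogue named in the figure can be illustrated with a small Python sketch. It rests on assumptions that are not taken from the thesis: the loop body is a hypothetical dependence chain of four single-cycle instructions A, B, C, D, and the helper name `pipelined_schedule` is invented for this example.

```python
def pipelined_schedule(stages, iterations, ii=1):
    """Return one list per cycle holding the (instruction, iteration) pairs issued.

    Assumes a simple chain: instruction number s of iteration i runs at
    cycle i * ii + s, so each instruction follows its predecessor by one cycle.
    """
    depth = len(stages)
    total_cycles = (iterations - 1) * ii + depth
    schedule = []
    for cycle in range(total_cycles):
        issued = []
        for s, name in enumerate(stages):
            offset = cycle - s
            if offset >= 0 and offset % ii == 0 and offset // ii < iterations:
                issued.append((name, offset // ii))
        schedule.append(issued)
    return schedule


STAGES = ["A", "B", "C", "D"]   # hypothetical loop body, one cycle each
ITERATIONS = 10                  # iteration index i in [0, 9]

schedule = pipelined_schedule(STAGES, ITERATIONS, ii=1)

for cycle, issued in enumerate(schedule):
    if cycle < len(STAGES) - 1:
        phase = "prologue"
    elif cycle >= ITERATIONS:
        phase = "epilogue"
    else:
        phase = "steady state"
    text = " ".join(f"{name}{i}" for name, i in issued)
    print(f"cycle {cycle:2d} [{phase:12s}] {text}")

print("pipelined cycles :", len(schedule))
print("sequential cycles:", len(STAGES) * ITERATIONS)
```

Under these assumptions the pipelined schedule needs 13 cycles where the sequential one needs 40, and every steady-state cycle issues one instruction from each of four different iterations, which is the new loop body.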

The LLVM Instruction Set and Compilation Strategy

The LLVM Instruction Set and Compilation Strategy. Chris Lattner, Vikram Adve. University of Illinois at Urbana-Champaign. {lattner,vadve}@cs.uiuc.edu

Abstract

This document introduces the LLVM compiler infrastructure and instruction set, a simple approach that enables sophisticated code transformations at link time, runtime, and in the field. It is a pragmatic approach to compilation, interfering with programmers and tools as little as possible, while still retaining extensive high-level information from source-level compilers for later stages of an application’s lifetime. We describe the LLVM instruction set, the design of the LLVM system, and some of its key components.

1 Introduction

Modern programming languages and software practices aim to support more reliable, flexible, and powerful software applications, increase programmer productivity, and provide higher level semantic information to the compiler. Unfortunately, traditional approaches to compilation either fail to extract sufficient performance from the program (by not using interprocedural analysis or profile information) or interfere with the build process substantially (by requiring build scripts to be modified for either profiling or interprocedural optimization). Furthermore, they do not support optimization either at runtime or after an application has been installed at an end-user’s site, when the most relevant information about actual usage patterns would be available. The LLVM Compilation Strategy is designed to enable effective multi-stage optimization (at compile-time, link-time, runtime, and offline) and more effective profile-driven optimization, and to do so without changes to the traditional build process or programmer intervention. LLVM (Low Level Virtual Machine) is a compilation strategy that uses a low-level virtual instruction set with rich type information as a common code representation for all phases of compilation.

Thriving in a Crowded and Changing World: C++ 2006–2020

Thriving in a Crowded and Changing World: C++ 2006–2020. BJARNE STROUSTRUP, Morgan Stanley and Columbia University, USA. Shepherd: Yannis Smaragdakis, University of Athens, Greece.

By 2006, C++ had been in widespread industrial use for 20 years. It contained parts that had survived unchanged since introduced into C in the early 1970s as well as features that were novel in the early 2000s. From 2006 to 2020, the C++ developer community grew from about 3 million to about 4.5 million. It was a period where new programming models emerged, hardware architectures evolved, new application domains gained massive importance, and quite a few well-financed and professionally marketed languages fought for dominance. How did C++, an older language without serious commercial backing, manage to thrive in the face of all that? This paper focuses on the major changes to the ISO C++ standard for the 2011, 2014, 2017, and 2020 revisions. The standard library is about 3/4 of the C++20 standard, but this paper’s primary focus is on language features and the programming techniques they support. The paper contains long lists of features documenting the growth of C++. Significant technical points are discussed and illustrated with short code fragments. In addition, it presents some failed proposals and the discussions that led to their failure. It offers a perspective on the bewildering flow of facts and features across the years. The emphasis is on the ideas, people, and processes that shaped the language. Themes include efforts to preserve the essence of C++ through evolutionary changes, to simplify its use, to improve support for generic programming, to better support compile-time programming, to extend support for concurrency and parallel programming, and to maintain stable support for decades’ old code.

Research of Register Pressure Aware Loop Unrolling Optimizations for Compiler

MATEC Web of Conferences 228, 03008 (2018). https://doi.org/10.1051/matecconf/201822803008. CAS 2018.

Research of Register Pressure Aware Loop Unrolling Optimizations for Compiler. Xuehua Liu (1,2), Liping Ding (1,3), Yanfeng Li (1,2), Guangxuan Chen (1,4), Jin Du (5).
(1) Laboratory of Parallel Software and Computational Science, Institute of Software, Chinese Academy of Sciences, Beijing, China
(2) University of Chinese Academy of Sciences, Beijing, China
(3) Digital Forensics Lab, Institute of Software Application Technology, Guangzhou & Chinese Academy of Sciences, Guangzhou, China
(4) Zhejiang Police College, Hangzhou, China
(5) Yunnan Police College, Kunming, China

Abstract. Register pressure is a well-known problem for compilers because of the mismatch between the infinite number of pseudo registers and the finite number of hard registers. Too much register pressure may result in register spilling, which in turn leads to performance degradation. Many optimizations in a compiler, especially loop optimizations, suffer from register spilling. In order to fight register pressure and therefore improve the effectiveness of the compiler, this research takes register pressure into account to improve the loop unrolling optimization during the transformation process. In addition, a register pressure aware transformation is able to reduce the performance overhead of some fine-grained randomization transformations which can be used to defend against ROP attacks. Experiments showed a peak improvement of about 3.6% and an average improvement of about 1% for the SPEC CPU 2006 benchmarks, and a peak improvement of about 3% and an average improvement of about 1% for the LINPACK benchmark.

1 Introduction

Register pressure is the number of hard registers needed to store values in the pseudo registers at a given program point [1] during the compilation process. … fundamental transformations, because it is not only performed individually at least twice during the compilation process, it is also an important part of optimizations like vectorization, modulo scheduling and …

CS153: Compilers Lecture 19: Optimization

CS153: Compilers. Lecture 19: Optimization. Stephen Chong, Harvard University. https://www.seas.harvard.edu/courses/cs153
Contains content from lecture notes by Steve Zdancewic and Greg Morrisett.

Announcements
• HW5: Oat v.2 out
• Due in 2 weeks
• HW6 will be released next week
• Implementing optimizations! (and more)

Today
• Optimizations
• Safety
• Constant folding
• Algebraic simplification
  • Strength reduction
• Constant propagation
• Copy propagation
• Dead code elimination
• Inlining and specialization
  • Recursive function inlining
• Tail call elimination
• Common subexpression elimination

Optimizations
• The code generated by our OAT compiler so far is pretty inefficient.
• Lots of redundant moves.
• Lots of unnecessary arithmetic instructions.
• Consider this OAT program:

    int foo(int w) {
      var x = 3 + 5;
      var y = x * w;
      var z = y - 0;
      return z * 4;
    }

Unoptimized vs. Optimized Output
• Unoptimized output (excerpt):

    .globl _foo
    _foo:
      pushl %ebp
      movl %esp, %ebp
      subl $64, %esp
    __fresh2:
      leal -64(%ebp), %eax
      movl %eax, -48(%ebp)
      movl 8(%ebp), %eax
      movl %eax, %ecx
      movl -48(%ebp), %eax
      movl %ecx, (%eax)
      movl $3, %eax
      movl %eax, -44(%ebp)
      movl $5, %eax
      movl %eax, %ecx
      addl %ecx, -44(%ebp)
      leal -60(%ebp), %eax
      movl %eax, -40(%ebp)
      movl -44(%ebp), %eax
      movl %eax, %ecx

• Hand optimized code:

    _foo:
      shlq $5, %rdi
      movq %rdi, %rax
      ret

• Function foo may be inlined by the compiler, so it can be implemented by just one instruction!

Why do we need optimizations?
• To help programmers…
• They write modular, clean, high-level programs
• Compiler generates efficient, high-performance assembly
• Programmers don’t write optimal code
• High-level languages make avoiding redundant computation inconvenient or impossible
• e.g. …

Analysis of Program Optimization Possibilities and Further Development

Theoretical Computer Science 90 (1991) 17-36. Elsevier.

Analysis of program optimization possibilities and further development. I.V. Pottosin, Institute of Informatics Systems, Siberian Division of the USSR Acad. Sci., 630090 Novosibirsk, USSR.

1. Introduction

Program optimization has appeared in the framework of program compilation and includes special techniques and methods used in compiler construction to obtain a rather efficient object code. These techniques and methods constituted in the past and constitute now an essential part of the so called optimizing compilers whose goal is to produce an object code in run time, saving such computer resources as CPU time and memory. For contemporary supercomputers, the requirement of the proper use of hardware peculiarities is added. In our opinion, the existence of a sufficiently large number of optimizing compilers with real possibilities of producing “good” object code has evidently proved to be practically significant for program optimization. The methods and approaches that have accumulated in program optimization research seem to be no less valuable, since they may be successfully used in the general techniques of program construction, i.e. in program synthesis, program transformation and at different steps of program development. At the same time, there has been criticism on program optimization and even doubts about its necessity. On the one hand, this viewpoint is based on blind faith in the new possibilities of computers which will be so powerful that any necessity to worry about program efficiency will disappear.

Fast-Path Loop Unrolling of Non-Counted Loops to Enable Subsequent Compiler Optimizations∗

Fast-Path Loop Unrolling of Non-Counted Loops to Enable Subsequent Compiler Optimizations

David Leopoldseder (Johannes Kepler University Linz, Austria), Roland Schatz (Oracle Labs, Linz, Austria), Lukas Stadler (Oracle Labs, Linz, Austria), Manuel Rigger (Johannes Kepler University Linz, Austria), Thomas Würthinger (Oracle Labs, Zurich, Switzerland), Hanspeter Mössenböck (Johannes Kepler University Linz, Austria).

In 15th International Conference on Managed Languages & Runtimes (ManLang’18), September 12–14, 2018, Linz, Austria. ACM, New York, NY, USA, 13 pages. https://doi.org/10.1145/3237009.3237013

ABSTRACT

Java programs can contain non-counted loops, that is, loops for which the iteration count can neither be determined at compile time nor at run time. State-of-the-art compilers do not aggressively optimize them, since unrolling non-counted loops often involves duplicating also a loop’s exit condition, which thus only improves run-time performance if subsequent compiler optimizations can optimize the unrolled code. This paper presents an unrolling approach for non-counted loops that uses simulation at run time to determine whether unrolling such loops enables subsequent compiler optimizations. …

1 INTRODUCTION

Generating fast machine code for loops depends on a selective application of different loop optimizations on the main optimizable parts of a loop: the loop’s exit condition(s), the back edges and the loop body. All these parts must be optimized to generate optimal code for a loop.

Java: Odds and Ends

Computer Science 225, Advanced Programming, Siena College, Spring 2020

Topic Notes: More Java: Odds and Ends

This final set of topic notes gathers together various odds and ends about Java that we did not get to earlier.

Enumerated Types

As experienced BlueJ users, you have probably seen but paid little attention to the options to create things other than standard Java classes when you click the “New Class” button. One of those options is to create an enum, which is an enumerated type in Java. If you choose it, and create one of these things using the name AnEnum, the initial code you would see looks like this:

    /**
     * Enumeration class AnEnum - write a description of the enum class here
     *
     * @author (your name here)
     * @version (version number or date here)
     */
    public enum AnEnum
    {
        MONDAY, TUESDAY, WEDNESDAY, THURSDAY, FRIDAY, SATURDAY, SUNDAY
    }

So we see here there’s something else besides a class, abstract class, or interface that we can put into a Java file: an enum. Its contents are very simple: just a list of identifiers, written in all caps like named constants. In this case, they represent the days of the week. If we include this file in our projects, we would be able to use the values AnEnum.MONDAY, AnEnum.TUESDAY, ... in our programs as values of type AnEnum. Maybe a better name would have been DayOfWeek.

Why do this? Well, we sometimes find ourselves defining a set of names for numbers to represent some set of related values. A programmer might have accomplished what we see above by writing:

    public class DayOfWeek {
        public static final int MONDAY = 0;
        public static final int TUESDAY = 1;
        public static final int WEDNESDAY = 2;
        public static final int THURSDAY = 3;
        public static final int FRIDAY = 4;
        public static final int SATURDAY = 5;
        public static final int SUNDAY = 6;
    }

And other classes could use DayOfWeek.MONDAY, DayOfWeek.TUESDAY, etc., but would have to store them in int variables.

(8 Points) 1. Show the Output of the Following Program: #Include<Ios

CS 274: Object Oriented Programming with C++, Final Exam

(8 points) 1. Show the output of the following program:

    #include<iostream>
    using namespace std;
    class Base {
    public:
        Base(){cout<<"Base"<<endl;}
        Base(int i){cout<<"Base"<<i<<endl;}
        ~Base(){cout<<"Destruct Base"<<endl;}
    };
    class Der: public Base{
    public:
        Der(){cout<<"Der"<<endl;}
        Der(int i): Base(i) {cout<<"Der"<<i<<endl;}
        ~Der(){cout<<"Destruct Der"<<endl;}
    };
    int main(){
        Base a;
        Der d(2);
        return 0;
    }

(8 points) 2. Show the output of the following program:

    #include<iostream>
    using namespace std;
    class C {
    public:
        C(): i(0) { cout << i << endl; }
        ~C(){ cout << i << endl; }
        void iSet( int x ) {i = x; }
    private:
        int i;
    };
    int main(){
        C c1, c2;
        c1.iSet(5);
        {C c3; int x = 8; cout << x << endl; }
        return 0;
    }

(8 points) 3. Show the output of the following program:

    #include<iostream>
    using namespace std;
    class A{
    public:
        int f(){return 1;}
        virtual int g(){return 2;}
    };
    class B: public A{
    public:
        int f(){return 3;}
        virtual int g(){return 4;}
    };
    class C: public A{
    public:
        virtual int g(){return 5;}
    };
    int main(){
        A *pa; A a; B b; C c;
        pa=&a; cout<<pa -> f()<<endl; cout<<pa -> g()<<endl;
        pa=&b; cout<<pa -> f() + pa -> g()<<endl;
        pa=&c; cout<<pa -> f()<<endl; cout<<pa -> g()<<endl;
        return 0;
    }

(8 points) 4. Show the output of the following program:

    #include<iostream>
    using namespace std;
    class A{
    protected:
        int a;
    public:
        A(int x=1) {a=x;}
        void f(){a+=2;}
        virtual g(){a+=1;}
        int h() {f(); return a;}
        int j() {g(); return a;}
    };
    class B: public A{
    private:
        int b;
    public:
        B(int y=5){b=y;}
        void f(){b+=10;}
        void j(){a+=3;}
    };
    int main(){
        A obj1; B obj2;
        cout<<obj1.h()<<endl;
        cout<<obj1.g()<<endl;
        cout<<obj2.h()<<endl;
        cout<<obj2.g()<<endl;
        return 0;
    }

(10 points) 5. …

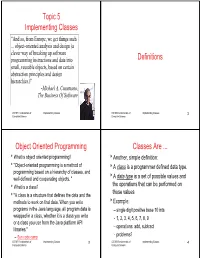

Topic 5 Implementing Classes Definitions

Topic 5: Implementing Classes
CS 307 Fundamentals of Computer Science, Implementing Classes

“And so, from Europe, we get things such ... object-oriented analysis and design (a clever way of breaking up software programming instructions and data into small, reusable objects, based on certain abstraction principles and design hierarchies.)” - Michael A. Cusumano, The Business Of Software

Definitions

Object Oriented Programming
What is object oriented programming?
"Object-oriented programming is a method of programming based on a hierarchy of classes, and well-defined and cooperating objects."
What is a class?
"A class is a structure that defines the data and the methods to work on that data. When you write programs in the Java language, all program data is wrapped in a class, whether it is a class you write or a class you use from the Java platform API libraries." - Sun code camp

Classes Are ...
Another, simple definition: A class is a programmer defined data type.
A data type is a set of possible values and the operations that can be performed on those values.
Example:
– single digit positive base 10 ints
– 1, 2, 3, 4, 5, 6, 7, 8, 9
– operations: add, subtract
– problems?

Data Types
Computer languages come with built in data types.
In Java, the primitive data types, native arrays.
Most computer languages provide a way for the programmer to define their own data types.

A Very Short and Incomplete History of Object Oriented Programming.
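
The slide's example of a data type (single digit positive base 10 ints with add and subtract) can be written down as a small class. The sketch below is in Python rather than the course's Java, and the class name `SingleDigit` and the choice to raise `ValueError` are assumptions made for illustration; the slides leave the answer to "problems?" open.

```python
class SingleDigit:
    """A programmer-defined data type: the values 1..9 plus add and subtract."""

    def __init__(self, value):
        if not isinstance(value, int) or not 1 <= value <= 9:
            raise ValueError(f"{value!r} is not a single digit positive int")
        self.value = value

    def add(self, other):
        # The sum of two legal values may fall outside the set of legal values.
        return SingleDigit(self.value + other.value)

    def subtract(self, other):
        # Likewise, a difference may be zero or negative.
        return SingleDigit(self.value - other.value)

    def __repr__(self):
        return f"SingleDigit({self.value})"


print(SingleDigit(3).add(SingleDigit(4)))       # stays inside 1..9

try:
    SingleDigit(5).add(SingleDigit(6))          # 11 is not a legal value
except ValueError as err:
    print("problem:", err)
```

One possible reading of the "problems?" bullet is exactly this: the operations are not closed over the set of values, so the type has to decide what happens when a result leaves the range.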

Register Allocation and Method Inlining

Combining Offline and Online Optimizations: Register Allocation and Method Inlining. Hiroshi Yamauchi and Jan Vitek, Department of Computer Sciences, Purdue University, {yamauchi,jv}@cs.purdue.edu

Abstract. Fast dynamic compilers trade code quality for short compilation time in order to balance application performance and startup time. This paper investigates the interplay of two of the most effective optimizations, register allocation and method inlining for such compilers. We present a bytecode representation which supports offline global register allocation, is suitable for fast code generation and verification, and yet is backward compatible with standard Java bytecode.

1 Introduction

Programming environments which support dynamic loading of platform-independent code must provide support for efficient execution and find a good balance between responsiveness (shorter delays due to compilation) and performance (optimized compilation). Thus, most commercial Java virtual machines (JVM) include several execution engines. Typically, there is an interpreter or a fast compiler for initial executions of all code, and a profile-guided optimizing compiler for performance-critical code.

Improving the quality of the code of a fast compiler has the following benefits. It raises the performance of short-running and medium length applications which exit before the expensive optimizing compiler fully kicks in. It also benefits long-running applications with improved startup performance and responsiveness (due to less eager optimizing compilation). One way to achieve this is to shift some of the burden to an offline compiler. The main question is what optimizations are profitable when performed offline and are either guaranteed to be safe or can be easily validated by a fast compiler.