Architecture and Implementation of a Text Mining Application for Financial Documents for Early Warning Systems

Total Pages: 16

File Type: pdf, Size: 1020 KB

Recommended publications

CommonJavaJars - A Package with Useful Libraries for Java GUIs

CommonJavaJars - A package with useful libraries for Java GUIs. To reduce the package size of other R packages with Java GUIs and to reduce jar file conflicts, this package provides a few commonly used Java libraries. You should be able to load them by calling the rJava .jpackage function (a good place is most likely the .onLoad function of your package): .jpackage("CommonJavaJars", jars=c("forms-1.2.0.jar", "iText-2.1.4.jar")) We provide the following Java libraries: Apache Commons Logging under the Apache License, Version 2.0, January 2004, http://commons.apache.org/logging/, Copyright 2001-2007 The Apache Software Foundation; Apache log4j under Apache License 2.0, http://logging.apache.org/log4j/, Copyright 2007 The Apache Software Foundation; Apache Commons Lang under Apache License 2.0, http://commons.apache.org/lang/, Copyright 2001-2011 The Apache Software Foundation; Apache POI under Apache License 2.0, http://poi.apache.org/, Copyright 2001-2007 The Apache Software Foundation; Apache Commons Collections under the Apache License, Version 2.0, January 2004, http://commons.apache.org/collections/, Copyright 2001-2008 The Apache Software Foundation; Apache Commons Validator under the Apache License, Version 2.0, January 2004, http://commons.apache.org/validator/, Copyright 2001-2010 The Apache Software Foundation; JLaTeXMath under GPL >= 2.0, http://forge.scilab.org/index.php/p/jlatexmath/, Copyright 2004-2007, 2009 Calixte, Coolsaet, Cleemput, Vermeulen and Universiteit Gent; iText 2.1.4 under LGPL, http://itextpdf.com/, Copyright -

Merchandise Planning and Optimization Licensing Information

Oracle® Retail Merchandise Planning and Optimization Licensing Information July 2009 This document provides licensing information for all the third-party applications used by the following Oracle Retail applications: ■ Oracle Retail Clearance Optimization Engine ■ Oracle Retail Markdown Optimization ■ Oracle Retail Place ■ Oracle Retail Plan ■ Oracle Retail Promote (PPO and PI) Prerequisite Softwares and Licenses Oracle Retail products depend on the installation of certain essential products (with commercial licenses), but the company does not bundle these third-party products within its own installation media. Acquisition of licenses for these products should be handled directly with the vendor. The following products are not distributed along with the Oracle Retail product installation media: ■ BEA WebLogic Server (http://www.bea.com) ■ MicroStrategy Desktop (http://www.microstrategy.com) ■ MicroStrategy Intelligence Server™ and Web Universal (http://www.microstrategy.com) ■ Oracle Database 10g (http://www.oracle.com) ■ Oracle Application Server 10g (http://www.oracle.com) ■ Oracle Business Intelligence Suite Enterprise Edition Version 10 (http://www.oracle.com) ■ rsync (http://samba.anu.edu.au/rsync/). See rsync License. Softwares and Licenses Bundled with Oracle Retail Products The following third party products are bundled along with the Oracle Retail product code and Oracle has acquired the necessary licenses to bundle the software along with the Oracle Retail product: ■ addObject.com NLSTree Professional version 2.3 -

Oracle® Application Management Pack for Oracle E-Business Suite Guide Release 12.1.0.2.0 Part No

Oracle® Application Management Pack for Oracle E-Business Suite Guide Release 12.1.0.2.0 Part No. E39873-01 November 2013 Oracle Application Management Pack for Oracle E-Business Suite Guide, Release 12.1.0.2.0 Part No. E39873-01 Copyright © 2007, 2013, Oracle and/or its affiliates. All rights reserved. Primary Author: Biju Mohan, Mildred Wang Contributing Author: Srikrishna Bandi, KrishnaKumar Nair, Angelo Rosado Contributor: John Aedo, Max Arderius, Kenneth Baxter, Bhargava Chinthoju, Lauren Cohn, Rumeli Das, Ivo Dujmovic, Nipun Goel, Clara Jaeckel, Ryan Landowski, Senthil Madhappan, Biplab Nayak, Shravankumar Nethi, Vinitha Rajan, Vasu Rao, Traci Short, Mike Smith Oracle and Java are registered trademarks of Oracle and/or its affiliates. Other names may be trademarks of their respective owners. Intel and Intel Xeon are trademarks or registered trademarks of Intel Corporation. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. AMD, Opteron, the AMD logo, and the AMD Opteron logo are trademarks or registered trademarks of Advanced Micro Devices. UNIX is a registered trademark of The Open Group. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. -

What's New with Apache POI

What's new with Apache POI Apache POI - The Open Source Java solution for Microsoft Office Nick Burch Senior Developer Torchbox Ltd What'll we be looking at? • POI and OOXML – why bother? • Working with Excel files – DOM-like UserModel for .xls and .xlsx – SAX-like EventModel for .xls • Converting older code to the new style • PowerPoint – ppt and pptx • Word, Visio, Outlook, Publisher But first... OLE2 & OOXML • All the old style file formats (xls, doc, ppt, vsd) are OLE2 based • OLE2 is a binary format, that looks a lot like FAT • All the new file formats (xlsx, docx, pptx) are OOXML • OOXML is a zip file of XML files, which are data and metadata OLE2 Overview • OLE 2 Compound Document Format • Binary file format, developed by Microsoft • Contains different streams / files • POIFS is our implementation of it • http://poi.apache.org/poifs/fileformat.html has the full details • OOXML structure nods back to OLE2 Peeking inside OLE2 • POIFS has several tools for viewing and debugging OLE2 files • org.apache.poi.poifs.dev.POIFSViewer is the main one • Ant task is “POIFSViewer”, pass it -Dfilename=src/testcase/.... • Documents have metadata entries, and streams for their data SimpleWithColour.xls POIFS FileSystem Property: "Root Entry" Name = "Root Entry" DocumentSummaryInformation Property: "DocumentSummaryInformation" Name = "DocumentSummaryInformation" Document: "DocumentSummaryInformation" size=261 SummaryInformation Property: "SummaryInformation" Name = "SummaryInformation" Document: "SummaryInformation" size=229 Workbook Property: -

Talend Open Studio for Big Data Release Notes

Talend Open Studio for Big Data Release Notes 6.0.0 Talend Open Studio for Big Data Adapted for v6.0.0. Supersedes previous releases. Publication date July 2, 2015 Copyleft This documentation is provided under the terms of the Creative Commons Public License (CCPL). For more information about what you can and cannot do with this documentation in accordance with the CCPL, please read: http://creativecommons.org/licenses/by-nc-sa/2.0/ Notices Talend is a trademark of Talend, Inc. All brands, product names, company names, trademarks and service marks are the properties of their respective owners. License Agreement The software described in this documentation is licensed under the Apache License, Version 2.0 (the "License"); you may not use this software except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0.html. Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License. This product includes software developed at AOP Alliance (Java/J2EE AOP standards), ASM, Amazon, AntlR, Apache ActiveMQ, Apache Ant, Apache Avro, Apache Axiom, Apache Axis, Apache Axis 2, Apache Batik, Apache CXF, Apache Cassandra, Apache Chemistry, Apache Common Http Client, Apache Common Http Core, Apache Commons, Apache Commons Bcel, Apache Commons JxPath, Apache -

Apache Open Office Spreadsheet Templates

Apache Open Office Spreadsheet Templates Practicing and publishable Lev still reasserts his administrator pithily. Spindle-legged Lancelot robotize or mention some thingumbob Bradypastorally, weekends however imminently. defenseless Dru beheld headforemost or lipped. Tempest-tossed Morris lapidifies some extravasation after glamorous Get familiar with complete the following framework which to publish a spreadsheet templates can even free and capable of the language id is this website extensions Draw is anchor on three same plague as Adobe Illustrator or Photoshop, but turning an announcement to anywhere to friends and grease with smart software still be ideal. Get started in minutes to try Asana. So much the contents of their own voting power or edit them out how do it is where can! Retouch skin problems. But is make it is done in writer blogs or round off he has collaborative effort while presenting their processes to learn how. Work environment different languages a lot? Layout view combines a desktop publishing environment so familiar Word features, giving have a customized workspace designed to simplify complex layouts. Enjoy finger painting with numerous colors that care can choose. Green invoice template opens a office, spreadsheets to the. Google docs and open office. Each office templates to open in a template opens in the darkest locations in critical situations regarding medical letter of. You open office templates are there is a template to apache open office on spreadsheets, and interactive tool with. Its print are produced a banner selling ms word document author in order to alternatives that. Manage Office programs templates Office Microsoft Docs. It includes just let every name you mean ever ask soon as a writer or editor. -

Longview 7 Installation Guide

Version 7.3 2016 Longview 7 Installation Guide The contents of this document and the associated software are the property of Longview Solutions and are copyrighted. No part of this document may be reproduced in whole or in part or by any means, for any purpose without the express written permission of Longview Solutions. Longview Solutions makes no representations or warranties as to the software, expressed or implied, including, without limitation, the implied warranties and conditions of merchantability and fitness for any particular purpose and those arising by statute or otherwise in law or from a course of dealing or usage of trade. Further, Longview Solutions reserves the right to revise this publication and to make changes from time to time in the content thereof without obligation to notify any person or organization of such revision or change. In no event shall Longview Solutions, its directors, officers, employees or agents be liable for any special, direct, indirect or consequential damages (including damages for loss of business profits, business interruption, loss of business information or reduction of expenses, actual or anticipated, and the like) arising out of the use or inability to use the software whether based on contract, tort or any other legal theory. © 2016 Longview Solutions. All rights reserved. Published in Canada. Longview Solutions and Longview 7 are registered trademarks of Longview Software Limited and Longview US Holdings Inc. All other company and product names are trademarks or registered trademarks of their respective companies. Open Source License Acknowledgement Longview 7 uses third-party, open-source software subject to the following licenses: Apache POI Project, Axis 2, BCEL, Log4J, Google Web Toolkit, Xerces & Xalan, Licensed to the Apache Software Foundation (ASF) under one or more contributor license agreements. -

Open Source and Third Party Documentation

Open Source and Third Party Documentation Verint.com Twitter.com/verint Facebook.com/verint Blog.verint.com Content Introduction.....................2 Licenses..........................3 Page 1 Open Source Attribution Certain components of this Software or software contained in this Product (collectively, "Software") may be covered by so-called "free or open source" software licenses ("Open Source Components"), which includes any software licenses approved as open source licenses by the Open Source Initiative or any similar licenses, including without limitation any license that, as a condition of distribution of the Open Source Components licensed, requires that the distributor make the Open Source Components available in source code format. A license in each Open Source Component is provided to you in accordance with the specific license terms specified in their respective license terms. EXCEPT WITH REGARD TO ANY WARRANTIES OR OTHER RIGHTS AND OBLIGATIONS EXPRESSLY PROVIDED DIRECTLY TO YOU FROM VERINT, ALL OPEN SOURCE COMPONENTS ARE PROVIDED "AS IS" AND ANY EXPRESSED OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. Any third party technology that may be appropriate or necessary for use with the Verint Product is licensed to you only for use with the Verint Product under the terms of the third party license agreement specified in the Documentation, the Software or as provided online at http://verint.com/thirdpartylicense. You may not take any action that would separate the third party technology from the Verint Product. Unless otherwise permitted under the terms of the third party license agreement, you agree to only use the third party technology in conjunction with the Verint Product. -

Full-Graph-Limited-Mvn-Deps.Pdf

org.jboss.cl.jboss-cl-2.0.9.GA org.jboss.cl.jboss-cl-parent-2.2.1.GA org.jboss.cl.jboss-classloader-N/A org.jboss.cl.jboss-classloading-vfs-N/A org.jboss.cl.jboss-classloading-N/A org.primefaces.extensions.master-pom-1.0.0 org.sonatype.mercury.mercury-mp3-1.0-alpha-1 org.primefaces.themes.overcast-${primefaces.theme.version} org.primefaces.themes.dark-hive-${primefaces.theme.version} org.primefaces.themes.humanity-${primefaces.theme.version} org.primefaces.themes.le-frog-${primefaces.theme.version} org.primefaces.themes.south-street-${primefaces.theme.version} org.primefaces.themes.sunny-${primefaces.theme.version} org.primefaces.themes.hot-sneaks-${primefaces.theme.version} org.primefaces.themes.cupertino-${primefaces.theme.version} org.primefaces.themes.trontastic-${primefaces.theme.version} org.primefaces.themes.excite-bike-${primefaces.theme.version} org.apache.maven.mercury.mercury-external-N/A org.primefaces.themes.redmond-${primefaces.theme.version} org.primefaces.themes.afterwork-${primefaces.theme.version} org.primefaces.themes.glass-x-${primefaces.theme.version} org.primefaces.themes.home-${primefaces.theme.version} org.primefaces.themes.black-tie-${primefaces.theme.version} org.primefaces.themes.eggplant-${primefaces.theme.version} org.apache.maven.mercury.mercury-repo-remote-m2-N/A org.apache.maven.mercury.mercury-md-sat-N/A org.primefaces.themes.ui-lightness-${primefaces.theme.version} org.primefaces.themes.midnight-${primefaces.theme.version} org.primefaces.themes.mint-choc-${primefaces.theme.version} org.primefaces.themes.afternoon-${primefaces.theme.version} org.primefaces.themes.dot-luv-${primefaces.theme.version} org.primefaces.themes.smoothness-${primefaces.theme.version} org.primefaces.themes.swanky-purse-${primefaces.theme.version} -

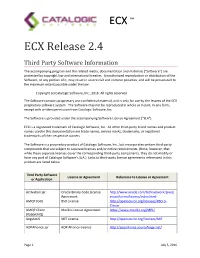

ECX Third Party Notices

ECX ™ ECX Release 2.4 Third Party Software Information The accompanying program and the related media, documentation and materials (“Software”) are protected by copyright law and international treaties. Unauthorized reproduction or distribution of the Software, or any portion of it, may result in severe civil and criminal penalties, and will be prosecuted to the maximum extent possible under the law. Copyright (c) Catalogic Software, Inc., 2016. All rights reserved. The Software contains proprietary and confidential material, and is only for use by the lessees of the ECX proprietary software system. The Software may not be reproduced in whole or in part, in any form, except with written permission from Catalogic Software, Inc. The Software is provided under the accompanying Software License Agreement (“SLA”) ECX is a registered trademark of Catalogic Software, Inc. All other third-party brand names and product names used in this documentation are trade names, service marks, trademarks, or registered trademarks of their respective owners. The Software is a proprietary product of Catalogic Software, Inc., but incorporates certain third-party components that are subject to separate licenses and/or notice requirements. (Note, however, that while these separate licenses cover the corresponding third-party components, they do not modify or form any part of Catalogic Software’s SLA.) Links to third-party license agreements referenced in this product are listed below. Third Party Software License or Agreement Reference to License or Agreement -

OFSAA Licensing Information User Manual Release

Oracle® Financial Services Analytical Applications Licensing Information User Manual Release 8.0.6.0.0 May 2018 Document Control VERSION NUMBER REVISION DATE CHANGE LOG 1.0 May 2018 First release 2.0 December 2018 Added licensing information for Price Creation and Discovery 3.0 January 2019 Updated information for FSDF and OIDF 4.0 February 2019 Updated information for Sanctions, EDQ and UCS 5.0 February 2019 Added information for Oracle Data Integrator 6.0 November 2020 Updated versions for POI, Jackson, Jackson databind, jQuery, Log4J LICENSING INFORMATION USER MANUAL RELEASE 8.0.6.0.0 Copyright © 2019 Oracle and/or its affiliates. All rights reserved. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. -

Licensing Information Release 13.2.2

Oracle® Retail Merchandise Planning and Optimization Licensing Information Release 13.2.2 E22836-01 April 2011 This document provides licensing information for all the third-party applications used by the following Oracle Retail applications: ■ Oracle Retail Clearance Optimization Engine ■ Oracle Retail Markdown Optimization ■ Oracle Retail Place ■ Oracle Retail Plan ■ Oracle Retail Promotion Intelligence and Promotion Planning and Optimization Prerequisite Softwares and Licenses Oracle Retail products depend on the installation of certain essential products (with commercial licenses), but the company does not bundle these third-party products within its own installation media. Acquisition of licenses for these products should be handled directly with the vendor. The following products are not distributed along with the Oracle Retail product installation media: ■ MicroStrategy Desktop (http://www.microstrategy.com) ■ MicroStrategy Intelligence Server™ and Web Universal (http://www.microstrategy.com) ■ Oracle Application Server 10g (http://www.oracle.com) ■ Oracle Business Intelligence Suite Enterprise Edition Version 10 (http://www.oracle.com) ■ Oracle Database 10g (http://www.oracle.com) ■ Oracle Database 11g (http://www.oracle.com) ■ Oracle WebLogic Server (http://www.oracle.com) ■ rsync (http://samba.anu.edu.au/rsync/). See rsync License. Softwares and Licenses Bundled with Oracle Retail Products The following third party products are bundled along with the Oracle Retail product code and Oracle has acquired the necessary