Tomcat Load Balancing for PAS for OpenEdge

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

List of NMAP Scripts Use with the Nmap –Script Option

List of NMAP Scripts Use with the nmap --script option
acarsd-info: Retrieves information from a listening acarsd daemon. Acarsd decodes ACARS (Aircraft Communication Addressing and Reporting System) data in real time. The information retrieved by this script includes the daemon version, API version, administrator e-mail address and listening frequency.
address-info: Shows extra information about IPv6 addresses, such as embedded MAC or IPv4 addresses when available.
afp-brute: Performs password guessing against Apple Filing Protocol (AFP).
afp-ls: Attempts to get useful information about files from AFP volumes. The output is intended to resemble the output of ls.
afp-path-vuln: Detects the Mac OS X AFP directory traversal vulnerability, CVE-2010-0533.
afp-serverinfo: Shows AFP server information. This information includes the server's hostname, IPv4 and IPv6 addresses, and hardware type (for example Macmini or MacBookPro).
afp-showmount: Shows AFP shares and ACLs.
ajp-auth: Retrieves the authentication scheme and realm of an AJP service (Apache JServ Protocol) that requires authentication.
ajp-brute: Performs brute force passwords auditing against the Apache JServ protocol. The Apache JServ Protocol is commonly used by web servers to communicate with back-end Java application server containers.
ajp-headers: Performs a HEAD or GET request against either the root directory or any optional directory of an Apache JServ Protocol server and returns the server response headers.
ajp-methods: Discovers which options are supported by the AJP (Apache JServ Protocol) server by sending an OPTIONS request and lists potentially risky methods.
ajp-request: Requests a URI over the Apache JServ Protocol and displays the result (or stores it in a file).

Catalogueformationspythagorefd 2017.Pdf

p.1 Pythagore F.D.: Learning to Learn
New for 2017: To dive into the heart of BigData technologies, understand the concepts of NoSQL, indexing, sharding, and so on, and learn to design ad hoc architectures and to integrate and deploy solutions, we offer a complete range of training courses, workshops, and virtual classes, from introductory courses such as "BigData, architecture and technologies" to expert-level topics such as "R programming for hadoop" or the course "Machine Learning: technologies and best practices".
Our areas of expertise:
• Unix and Linux, and the applications Apache, Openldap, Squid, Nagios, Zabbix, OCS/GLPI, puppet, chef...
• virtualization and orchestration with xen, kvm, lxc, Docker, and the cloud: cloudstack and openstack, openNebula, Cloudify, cobbler, etc ...
• TCP/IP (IPv6, snmp, Architecture, Security, IP network administration, VoIP, ...)
• Development (C language, Java, Jee, Jee technologies, JBoss, WebServices, PHP, Perl, Python, ...) and mobile development on android
• databases and BigData with NoSQL, Cassandra, MongoDB, Hadoop, ...
In each of these areas, our team has an excellent level of expertise covering the whole field, from the fundamentals to the most complex tools: our courses range from an introduction to Linux to Security and High Availability, and from NoSQL concepts to MapReduce programming. All our courses include many hands-on sessions and exercises so that participants can fully assimilate the new concepts.
Our teaching methods: Learn, design, integrate ... we believe that the best way to understand new concepts and technologies is hands-on practice. We organize workshops, experiments, demonstrations, .. -

Development Production Line the Short Story

Development Production Line The Short Story
Jene Jasper
Copyright © 2007-2018 freedumbytes.dev.net (Free Dumb Bytes)
Published 3 July 2018, 4.0-beta Edition
While every precaution has been taken in the preparation of this installation manual, the publisher and author assume no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein. This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. To get an idea of the Development Production Line take a look at the following Application Integration overview and Maven vs SonarQube Quality Assurance reports comparison.
1. Operating System
1.1. Windows
1.1.1. Resources
1.1.2. Desktop
1.1.3. Explorer
1.1.4. Windows 7 Start Menu
1.1.5. Task Manager replacement
1.1.6. Resource Monitor -

Oracle Internet Application Server 8I

Oracle Internet Application Server 8i Migrating from Oracle Application Server Release 1.0 June, 2000 Part No. A83709-01 Oracle Internet Application Server Migrating from Oracle Application Server, Release 1.0 Part No. A83709-01 Copyright © 2000, Oracle Corporation. All rights reserved. The Programs (which include both the software and documentation) contain proprietary information of Oracle Corporation; they are provided under a license agreement containing restrictions on use and disclosure and are also protected by copyright, patent, and other intellectual and industrial property laws. Reverse engineering, disassembly, or decompilation of the Programs is prohibited. The information contained in this document is subject to change without notice. If you find any problems in the documentation, please report them to us in writing. Oracle Corporation does not warrant that this document is error free. Except as may be expressly permitted in your license agreement for these Programs, no part of these Programs may be reproduced or transmitted in any form or by any means, electronic or mechanical, for any purpose, without the express written permission of Oracle Corporation. If the Programs are delivered to the U.S. Government or anyone licensing or using the programs on behalf of the U.S. Government, the following notice is applicable: Restricted Rights Notice Programs delivered subject to the DOD FAR Supplement are "commercial computer software" and use, duplication, and disclosure of the Programs, including documentation, shall be subject to the licensing restrictions set forth in the applicable Oracle license agreement. Otherwise, Programs delivered subject to the Federal Acquisition Regulations are "restricted computer software" and use, duplication, and disclosure of the Programs shall be subject to the restrictions in FAR 52.227-19, Commercial Computer Software - Restricted Rights (June, 1987). -

Red Hat Jboss Web Server 5.2 HTTP Connectors and Load Balancing Guide 5.2

Red Hat JBoss Web Server 5.2 HTTP Connectors and Load Balancing Guide 5.2 For Use with Red Hat JBoss Web Server 5.2 Last Updated: 2019-11-20 Red Hat JBoss Web Server 5.2 HTTP Connectors and Load Balancing Guide 5.2 For Use with Red Hat JBoss Web Server 5.2 Legal Notice Copyright © 2019 Red Hat, Inc. The text of and illustrations in this document are licensed by Red Hat under a Creative Commons Attribution–Share Alike 3.0 Unported license ("CC-BY-SA"). An explanation of CC-BY-SA is available at http://creativecommons.org/licenses/by-sa/3.0/ . In accordance with CC-BY-SA, if you distribute this document or an adaptation of it, you must provide the URL for the original version. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, the Red Hat logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. Linux ® is the registered trademark of Linus Torvalds in the United States and other countries. Java ® is a registered trademark of Oracle and/or its affiliates. XFS ® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL ® is a registered trademark of MySQL AB in the United States, the European Union and other countries. -

Final-Thesis.Pdf (1.648Mb)

BRNO UNIVERSITY OF TECHNOLOGY (VYSOKÉ UČENÍ TECHNICKÉ V BRNĚ), FACULTY OF INFORMATION TECHNOLOGY, DEPARTMENT OF INTELLIGENT SYSTEMS. WEB BROWSER FOR IP CAMERAS (ZPŘÍSTUPNĚNÍ OBRAZU Z IP KAMER V PROHLÍŽEČI). BACHELOR'S THESIS. AUTHOR: PAVEL ČERNÝ. SUPERVISOR: doc. Ing. FRANTIŠEK ZBOŘIL, Ph.D. BRNO 2020. Brno University of Technology, Faculty of Information Technology, Department of Intelligent Systems (UITS), academic year 2019/2020. Bachelor's thesis assignment. Student: Černý Pavel. Program: Information Technology. Title: Web Browser for IP Cameras. Category: Artificial Intelligence. Assignment: 1. Investigate how video is transmitted from IP cameras and the options for transmitting and displaying images (video) in web browsers. Pay attention to the capabilities of HTML5 (e.g. use of the <video> tag). Familiarize yourself with the codecs in use. 2. Based on this survey, design a system whose main purpose is to display live video from the cameras, including a simple administration interface (management of cameras and user access rights). 3. Implement the system using suitable programming languages. Use freely available libraries and programs (licensed under GNU GPL, Apache, and similar). The web interface should work in current versions of the most widely used browsers (Firefox, Chrome, MS Edge). 4. Evaluate the result achieved and propose options for further development. Literature: Russel, S., Norvig, P.: Artificial Intelligence, A Modern Approach, Pearson, 2009 Detailed -

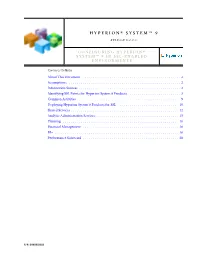

Hyperion System 9 Products

HYPERION® SYSTEM™ 9 RELEASE 9.2.0.3
CONFIGURING HYPERION® SYSTEM™ 9 IN SSL-ENABLED ENVIRONMENTS
CONTENTS IN BRIEF
About This Document
Assumptions
Information Sources
Identifying SSL Points for Hyperion System 9 Products
Common Activities
Deploying Hyperion System 9 Products for SSL
Shared Services
Analytic Administration Services
Planning
Financial Management
BI+
Performance Scorecard
P/N: DH98892030
About This Document
This document describes how to deploy Hyperion® System™ 9 in Secure Sockets Layer (SSL)-enabled environments. SSL is a cryptographic protocol used to secure data exchange over the network. The procedures in this document are designed for users who intend to use SSL in their Web environments to secure communication with Hyperion System 9 products.
Assumptions
● You know how to SSL-enable the following:
– Application servers: Apache Tomcat, BEA WebLogic, Oracle 10g, and IBM WebSphere
– Web Servers: Apache, -

WSGI from Start to Finish

WSGI from Start to Finish
EuroPython 2010. Gustavo Narea
Who's speaking
● ¡Hola!
● Web Application Developer.
● Contributes to WSGI projects.
Goals
● Explain what your framework does under-the-hood.
● More efficient troubleshooting.
● Integrate third party libraries and applications.
● Write framework-independent libraries and applications.
● Learn about existing WSGI-based software.
Updates after the tutorial
● This presentation was modified to refer to working examples and fix errata.
● You probably downloaded this presentation with the examples. If not, go to gustavonarea.net/talks/ to get them.
● Read the instructions on how to install some of them.
● They are not essential to understand the presentation.
The big picture
1. Introduction.
2. HTTP and WSGI.
3. WSGI applications.
4. WSGI middleware.
5. Testing and debugging.
6. Embedded Web applications.
7. Deployment.
8. Limitations.
9. Conclusion.
Introduction
What's WSGI?
HTTP = HyperText Transfer Protocol
WSGI = Web Server Gateway Interface
Key facts about WSGI
● Python “Standard” (PEP-333).
● Created in 2003.
● Inspired by CGI.
● Officially supported by all the popular frameworks.
● Applications can run on virtually any HTTP server.
Servers and gateways
● Server with Python embedded:
● Python-powered gateway:
Requests and responses
HTTP and WSGI
HTTP requests and responses
Request:
GET /greeting HTTP/1.1
Host: example.org
User-Agent: EP2010 Client
Response:
HTTP/1.1 200 OK
Server: EP2010 Server
Content-Length: 12
Content-Type: text/plain
(empty line)
Hello world!
Request Response POST /login -

Aris Server Update Installation Guide

ARIS SERVER UPDATE INSTALLATION GUIDE VERSION 10.0 - SERVICE RELEASE 14 April 2021 SOFTWARE AG This document applies to ARIS Version 10.0 and to all subsequent releases. Specifications contained herein are subject to change and these changes will be reported in subsequent release notes or new editions. Copyright © 2010 - 2021 Software AG, Darmstadt, Germany and/or Software AG USA Inc., Reston, VA, USA, and/or its subsidiaries and/or its affiliates and/or their licensors. The name Software AG and all Software AG product names are either trademarks or registered trademarks of Software AG and/or Software AG USA Inc. and/or its subsidiaries and/or its affiliates and/or their licensors. Other company and product names mentioned herein may be trademarks of their respective owners. Detailed information on trademarks and patents owned by Software AG and/or its subsidiaries is located at https://softwareag.com/licenses. Use of this software is subject to adherence to Software AG's licensing conditions and terms. These terms are part of the product documentation, located at https://softwareag.com/licenses and/or in the root installation directory of the licensed product(s). This software may include portions of third-party products. For third-party copyright notices, license terms, additional rights or restrictions, please refer to "License Texts, Copyright Notices and Disclaimers of Third Party Products". For certain specific third-party license restrictions, please refer to section E of the Legal Notices available under "License Terms and Conditions for Use of Software AG Products / Copyright and Trademark Notices of Software AG Products". These documents are part of the product documentation, located at https://softwareag.com/licenses and/or in the root installation directory of the licensed product(s). -

Intro to Load-Balancing Tomcat with Httpd and Mod Jk

Intro to Load-Balancing Tomcat with httpd and mod_jk
Christopher Schultz, Chief Technology Officer, Total Child Health, Inc.
* Slides available on the Linux Foundation / ApacheCon2015 web site and at http://people.apache.org/~schultz/ApacheCon NA 2015/Load-balancing with mod_jk.odp
Intro to Load-Balancing Tomcat with httpd and mod_jk
● Covering
– Load balancing
● Not covering
– Clustering*
* See Mark's 3-part presentation(s) today starting at 10:00 in this room
Tomcat
● Tomcat as a web server
– Capable
● HTTP, HTTPS, WebSocket, NIO
● Virtual hosting, CGI, URL-rewriting
● Authentication (RDBMS, LDAP, file)
● Styled directory listings
● Arbitrary data filtering
– Fast
● Static throughput can be comparable to httpd*
* See Jean-Frederic's presentation today at 15:15 in this room
Tomcat
● Tomcat as an application server
– Java servlets, JSPs
– Sky is the limit*
Image credit: Stan Shebs CC BY-SA 3.0 via Wikimedia Commons
* Okay, heap size is the limit
Scalability
● More memory
● More deployed applications
– without complex URLs
● Better fault-tolerance
– fail-over
● Easier maintenance
– bring-down a server without bringing down a service
Load Balancing
● Client sees a single “service”
● “Server” is really an army of servers
● This army runs behind a façade: the load-balancer (lb)
● The load-balancer is also called a reverse proxy*
* Because forward proxy was already taken
Balancing versus Clustering
● Balancing is basic
– Route incoming requests
– Pushes bytes around
● Clustering* is complex
– Relies on balancing as a building block
– Configuration
– Communication
– Replication
* See Mark's 3-part presentation(s) today starting at 10:00 in this room
Reverse Proxying
● Necessary components 1. -

Limitations of Azure in GIS Scalability a Performance and Migration Study

Mid Sweden University The Department of Information Technology and Media (ITM) Author: Jonas Bäckström E-mail address: [email protected] Study program: Master of Science in Engineering - Computer Engineering, 300 higher education credits Examiner: Prof. Tingting Zhang, [email protected] Supervisor: Lect. Martin Kjellqvist, [email protected] Date: 2012-08-28 M.Sc Thesis within Computer Engineering, Computer Engineering AV, 30 ETCS Limitations of Azure in GIS Scalability A performance and migration study Jonas Bäckström Abstract In this study, the cloud platform Windows Azure has been targeted for test implementations of Geographical Information System (GIS) software in the form of map servers and tile caches. The map servers included were GeoServer, MapNik, MapServer and SharpMap, which together with the tile caches, GeoWebCache, MapCache and TileCache, were installed on Windows Azures three different virtual machine roles (Web, Worker and VM). Furthermore, different techniques for scaling applications and internal role communication are presented, followed by four sets of performance tests. The performance tests attempt to highlight the differences in request times, how the different role sizes handle the load from the incoming requests, how the different role sizes handle many concurrent TCP-connections and how well the incoming requests are load balanced in between the worker roles. The test implementations showed that all map servers and tile caches were successfully installed in Azure, which leads to the conclusion that Windows Azure is suitable for hosting GIS software with similar installation requirements to the previously mentioned software. Four different approaches (Direct mapping, Public Internal Endpoints, Queue and Worker Role Request Broker) are presented showing how Azure allows different methods in order to scale the internal role communication as well as the external client requests.