Oculus Rift Control of a Mobile Robot

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Remote Control (RC) Monitor Electrical Controls Supplemental Instructions for Use with RC Monitor Manual

MANUAL: Remote Control (RC) Monitor Electrical Controls Supplemental Instructions for use with RC Monitor Manual INSTRUCTIONS FOR INSTALLATION, SAFE OPERATION AND MAINTENANCE Understand manual before use. Operation of this device without understanding the manual and DANGER receiving proper training is a misuse of this equipment. Obtain safety information at www.tft. com/serial-number SECTION 3.0 SECTION 4.9 General Information and Specifications Toggle Switch Monitor Operator Station SECTION 4.0 (Y4E-TS) Electrical Controls Installation and Operation SECTION 4.10 SECTION 4.1 Monitor Communication Monitor Mounted Interface Control Operator Station (Y4E-COMM) SECTION 4.2 SECTION 4.11 Panel Mount Monitor Position Display Operator Station (Y4E-DISP) (Y4E-RP) SECTION 4.12 SECTION 4.3 Remote Auxiliary Function Panel Mount With Display Interface Control Operator Station (YE-REMAUX) (Y4E-RP-D) SECTION 4.4 SECTION 4.13 Tethered Electric RC Monitor Aerial Operator Station Truck Installation (Y4E-CT-##) SECTION 4.14 SECTION 4.5 Multiplex Interface Control (YE-CAN#) Tethered Operator Station With Display (Y4E-CT-##-D) SECTION 4.15 Ethernet Interface Control SECTION 4.6 Wireless Operator Station (YE-RF-##) SECTION 4.16 Electric Nozzle Actuator SECTION 4.7 Wireless Operator Station With Display (YE-RF-##-D) SECTION 4.17 Valve Kits SECTION 4.8 (YE-VK-PH) Joystick Operator Station SECTION 5.0 (Y4E-JS) Troubleshooting TASK FORCE TIPS LLC 3701 Innovation Way, IN 46383-9327 USA MADE IN USA • tft.com 800-348-2686 • 219-462-6161 • Fax 219-464-7155 ©Copyright Task Force Tips LLC 2008-2018 LIY-500 November 21, 2018 Rev13 DANGER PERSONAL RESPONSIBILITY CODE The member companies of FEMSA that provide emergency response equipment and services want responders to know and understand the following: 1. -

Robot Explorer Program Manual

25 Valleywood Drive, Unit 20 Markham, Ontario, L3R 5L9, Canada Tel: (905) 943-9572 Fax: (905) 943-9197 i90 Robot Explorer Program Copyright © 2006, Dr Robot Inc. All Rights Reserved. www.DrRobot.com - 1 - Copyright Statement This manual or any portion of it may not be copied or duplicated without the expressed written consent of Dr Robot. All the software, firmware, hardware and product design accompanying with Dr Robot’s product are solely owned and copyrighted by Dr Robot. End users are authorized to use for personal research and educational use only. Duplication, distribution, reverse-engineering, or commercial application of the Dr Robot or licensed software and hardware without the expressed written consent of Dr Robot is explicitly forbidden. Copyright © 2006, Dr Robot Inc. All Rights Reserved. www.DrRobot.com - 2 - Table of Contents I. Introduction 4 II. System Requirements 4 III. Software Installation 5 Installing the i90 Robot Explorer Programs 5 Install the Joystick Controller 5 IV. Robot Operations 6 Using the Joystick Controls 6 Controlling Camera 7 Driving the Robot 7 Using i90 Robot Explorer Program Control 8 Video display 8 Operation Option 9 Utility Panel 17 Robot & Map Display 18 Robot Status 19 Using i90 Robot Explorer Client Program Control 20 Video 21 Robot & Map Display 21 Camera Operation 21 Robot Operation 21 Robot Data Display 21 Copyright © 2006, Dr Robot Inc. All Rights Reserved. www.DrRobot.com - 3 - I. Introduction This manual will provide you information on using the i90 Robot Explorer program to operate the robot. Please refer to the i90 Quick Guide regarding other documents related to i90. -

ACCESSORIES for PLAYSTATION®3 BECOME AVAILABLE Wireless Controller (SIXAXIS™), Memory Card Adaptor and BD Remote Control

ACCESSORIES FOR PLAYSTATION®3 BECOME AVAILABLE Wireless Controller (SIXAXIS™), Memory Card Adaptor and BD Remote Control Tokyo, October 3, 2006 – Sony Computer Entertainment Inc. (SCEI) today announced that Wireless Controller (SIXAXIS™) and Memory Card Adaptor would become available simultaneously with the launch of PLAYSTATION®3 (PS3) computer entertainment system on November 11th, 2006, in Japan, at a recommended retail price of 5,000 yen (tax included) and 1,500 yen (tax included) respectively. BD Remote Control will also become available on December 7th 2006, at a recommended retail price of 3,600 yen (tax included). Wireless Controller (SIXAXIS) for PS3 employs a high-precision, highly sensitive six-axis sensing system, which detects natural and intuitive movements of hands for real-time interactive play. With the adoption of Bluetooth® wireless technology, it allows up to 7 players to play at the same time, without having to attach any other external device such as a multitap. In addition, by simply plugging a USB cable to the controller, users can seamlessly switch from wireless to wired connection and automatically charge its battery while the controller is in use. Controller battery lasts up to 30 hours when fully charged *1). The new Memory Card Adaptor enables users to transfer data saved on Memory Cards for PlayStation® and PlayStation®2 onto the hard disk drive of PS3. To transfer data, users need to simply insert their Memory Cards to the Memory Card Adaptor connected to PS3 via a USB port. In December, BD Remote Control will also become available, which enables users to easily operate movies and music content on BD (Blu-ray Disc) and DVD on PS3. -

Immersive Robotic Telepresence for Remote Educational Scenarios

sustainability Article Immersive Robotic Telepresence for Remote Educational Scenarios Jean Botev 1,* and Francisco J. Rodríguez Lera 2 1 Department of Computer Science, University of Luxembourg, L-4364 Esch-sur-Alzette, Luxembourg 2 Department of Mechanical, Informatics and Aerospace Engineering, University of León, 24071 León, Spain; [email protected] * Correspondence: [email protected] Abstract: Social robots have an enormous potential for educational applications and allow for cognitive outcomes that are similar to those with human involvement. Remotely controlling a social robot to interact with students and peers in an immersive fashion opens up new possibilities for instructors and learners alike. Using immersive approaches can promote engagement and have beneficial effects on remote lesson delivery and participation. However, the performance and power consumption associated with the involved devices are often not sufficiently contemplated, despite being particularly important in light of sustainability considerations. The contributions of this research are thus twofold. On the one hand, we present telepresence solutions for a social robot’s location-independent operation using (a) a virtual reality headset with controllers and (b) a mobile augmented reality application. On the other hand, we perform a thorough analysis of their power consumption and system performance, discussing the impact of employing the various technologies. Using the QTrobot as a platform, direct and immersive control via different interaction modes, including motion, emotion, and voice output, is possible. By not focusing on individual subsystems or motor chains, but the cumulative Citation: Botev, J.; Rodríguez Lera, energy consumption of an unaltered robot performing remote tasks, this research provides orientation F.J. Immersive Robotic Telepresence regarding the actual cost of deploying immersive robotic telepresence solutions. -

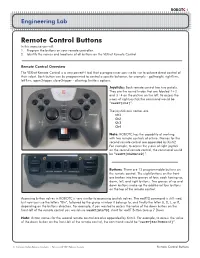

Remote Control Buttons in This Exercise You Will: 1

ROBOTC 1 Engineering Lab Remote Control Buttons In this exercise you will: 1. Program the buttons on your remote controller. 2. Identify the names and locations of all buttons on the VEXnet Remote Control. Remote Control Overview The VEXnet Remote Control is a very powerful tool that a programmer can use to use to achieve direct control of their robot. Each button can be programmed to control a specific behavior, for example - goStraight, rightTurn, leftTurn, openGripper, closeGripper - allowing limitless options. Joysticks: Each remote control has two josticks. They are the round knobs that are labeled 1+2 and 3+4 on the picture on the left. To access the y-axis of right joystick the command would be “vexRT[Ch2]”. The joystick axis names are: Ch1 Ch2 Ch3 Ch4 Note: ROBOTC has the capability of working with two remote controls at a time. Names for the second remote control are appended by Xmtr2. For example, to access the y-axis of right joystick on the second remote control, the command would be “vexRT[Ch2Xmtr2]”. Buttons: There are 12 programmable buttons on the remote control. The eight buttons on the front are broken into two groups of four, each having up, down, left, and right buttons. Two groups of up and down buttons make up the additional four buttons on the top of the remote control. Accessing button values in ROBOTC is very similar to accessing joystick values. The vexRT[] command is still used, but now you use the letters “Btn”, followed by the group number it belongs to, and finally the letter U, D, L, or R, depending on the buttons direction. -

Advanced Remote Control for Pc Using Smartphone

International Journal of Engineering Research & Technology (IJERT) ISSN: 2278-0181 Vol. 3 Issue 3, March - 2014 Advanced Remote Control For Pc Using Smartphone Rubinder Singh Nitin Kumar Bisret Narula Dept. of Information Technology Dept. of Information Technology Assi stant Professor G.T.B.I.T G.T.B.I.T G.T.B.I.T New Delhi, India New Delhi, India New Delhi, India Abstract—The Smartphone and Tablets have arisen as The aim is to make more efficient use of the android unique computational resource for many purposes. This paper Smartphone. As the android phones are very popular for their introduces a system by which a computer can be controlled rich features, this application gives advancement to those wirelessly through an android device. The aim is to transform a features. The application has many advance features like the Smartphone into a remote controller for the PC. Almost motion gaming feature to play game via remote. The everything on the computer could be controlled through phone from keyboard to mouse to specific applications like application converts the android device into a wireless racing PowerPoint, Media Players or even it can be used for the virtual wheel which will make the user to drive the vehicle in racing gaming as well. To send wireless signals Wi-Fi or Bluetooth games. Application uses the android Smartphone phone so no could be used which is already in the Smartphone. This paper separate hardware or device is needed to control the computer also represents a prototype application with a protocol and some system, the phone will be converted into a remote which make algorithms to show how the system can be implemented. -

Instruction Manual

instruction manual ! WARNING: CHOKING HAZARD — Small parts and small ball. Not for children under 3 years. CAUTION: HAIR ENTANGLEMENT - Tie back and cover hair and secure loose clothing prior to play. 6+ For instructional video, visit www.boxerthebot.com or the Boxer Bot app. table of contents CONTENTS …………………………………… 3 INTRODUCTION ……………………………… 4 HOW TO INSTALL BATTERIES ………………… 5 BATTERY SAFETY INFORMATION …………… 6 BOXER FEATURES ……………………………… 7 REMOTE CONTROL FEATURES ……………… 8 CHARGING BOXER ………………………… 9 GETTING STARTED …………………………… 10 BOXER MODES ……………………………… 11 REMOTE CONTROL MODE ………………… 12 REMOTE CONTROL CHANNEL SELECT …… 13 PLAY MODE …………………………………… 14 APP MODE …………………………………… 16 GAME ACTIVITY MODE ……………………… 17 GAME MODE: BOT BOWLING ……………… 18 GAME MODE: DJ BOT ……………………… 19 GAME MODE: SOCCER …………………… 20 GAME MODE: PADDLE BOT ………………… 21 GAME MODE: GO KART …………………… 22 GAME MODE: DANCE PARTY ……………… 23 GAME MODE: FORTUNE TELLER …………… 24 GAME MODE: STUNT SHOW ………………… 25 GAME MODE: LASER TANK ………………… 26 GAME MODE: SLING SHOT ………………… 27 SLEEP MODE …………………………………… 28 POWER OFF …………………………………… 28 TROUBLESHOOTING ………………………… 29 WARNINGS ……………………………… 30-31 2 IMPORTANT INFORMATION: Remove all packaging before use. Retain this information and address for future reference. Content may vary from pictures. BOXER INSTRUCTION MANUAL – Due to all the awesome features in Boxer, this guide should be used for reference only and may be modified. The most updated version is located at www.boxerthebot.com/help. contents 1 BOXER 1 REMOTE (WITH 3 LR44 BATTERIES INCLUDED) 1 BALL 1 MICRO-USB CHARGING CABLE 10 CARDS 1 INSTRUCTION MANUAL For instructional video, visit www.boxerthebot.com or the Boxer Bot app. 3 introduction hey there, i’m boxer! your pint-sized freewheelin’ robot who is always on the move. -

A4 Spec Sheets Final Version5.Cdr

M 800dpi Mediaball pro Wireless Via 2.4Ghz Wireless Powered By Media Center Fully Programmable Optical Trackball Precision 800dpi Inbuilt Trackball Soft Black USB Receiver 15M Range 2 x AA Batteries Buttons Via Software Tracking Resolution Rubberised Case l 2.4Ghz Wireless (via USB) Programmable Media Center Remote Control o r t n o Model No. KYB-MEDIABALLPRO C e t About o m e R Following on from the hugely successfull Accuratus Toughball, Accuratus now launch their new Mediaball Pro Media Center Remote r e t control. It contains everything the Toughball had and more, into a confortable handsized remote control. The Mediaball Pro has a built in n e C optical trackball and mouse buttons to allow you to control the mouse with your thumb and then also numerous buttons to control all a i your media center functions at ease and even allow you to type! Typing is made simple with the supplied driver, by using the number pad d you can type just like you would to on a mobile phone. Perfect for those of you who have you shiny PC set up with your huge LCD screen e M e and for any one else with a PC. You can also with the supplied software make your own custom profiles for different software packages l b you may have, every key is programmable so you can set the mediaball up to do exactly what you like. This is all made possible with a a m small USB 2.4GHz wireless receiver that has a great 15 metre range! m a r g o r P Specification ) B S U • 2.4GHz wireless interface via USB dongle a i v • 15 Meter range ( s • Media center remote control, with media center buttons s e l • Fully programmable via software provided e r • Software allows you to set up profiles for different software i W z • You can type with the number buttons like you would h G On a mobile phone 4 . -

USER MANUAL Before Using the TV, Please Read This Manual Thoroughly and Retain It for Future Reference

USER MANUAL Before using the TV, please read this manual thoroughly and retain it for future reference. ENGLISH FRANÇAIS ESPAÑOL USER MANUAL Before using the TV, please read this manual thoroughly and retain it for future reference. ENGLISH ENGLISH FRANÇAIS ESPAÑOL Copyright Statement © 2019 Hisense Company Ltd. All Rights Reserved. All material in this User Manual is the property of Hisense Company Ltd. and its subsidiaries, and is protected under US, CANADA, MEXICO and International copyright and/or other intellectual property laws. Reproduction or transmission of the materials, in whole or in part, in any manner, electronic, print, or otherwise, without the prior written consent of Hisense Company Ltd. is a violation of Hisense Company Ltd. rights under the aforementioned laws. No part of this publication may be stored, reproduced, transmitted or distributed, in whole or in part, in any manner, electronic or otherwise, whether or not for a charge or other or no consideration, without the prior written permission of Hisense Company Ltd. Requests for permission to store, reproduce, transmit or distribute materials may be made to one of the following addresses: USA: Hisense USA Corporation 7310 McGinnis Ferry Road Suwanee, GA 30024 CANADA: Hisense Canada Co., Ltd 2283 Argentia Road, Unit 16 Mississauga, ON, Canada L5N 5Z2 MEXICO: Hisense Mexico S. de R.L. de C.V. Blvd. Miguel de Cervantes Saavedra No 301 Torre Norte Piso 2, Col. Ampliación Granada Miguel Hidalgo, Ciudad de México, C.P. 11520 Hisense, and any and all other Hisense product names, logo’s, slogans or marks are registered trademarks of Hisense Company Ltd. -

MSU Library Detailed VR Instructions

Reserving the CyberDiscovery Wall ● Before you register, please note that you MUST have a valid MSU issued CAT card (Student, Faculty, or Staff). ● Go to the CyberDiscovery website: https://www.montana.edu/disc/projects/cyberdiscovery/ ● Click on the 'online scheduling system' link. ● Choose the time and date you would like to reserve. ● If the time slot is available, there will be a small plus sign in a circle in that slot. ○ Click on the time and date that you would like to reserve the space. ● It may ask you to log in. ○ It will require your NetID number (Ex: a12b345) your password. ○ Then press “Login”. ○ If you are having issues finding this information refer to MyInfo for ways to help you reset your password. ● Fill out the information required. ● Click “Save”. ○ This take you back to the initial reservation calendar and will show your reserved space in a green box. ○ You will receive an email confirming your reservation. Checking in for your reservation ● To get the required equipment go to the Service Desk and present your CAT card. Let them know that you have the space reserved. ● You will receive a box with a keyboard and a remote control, as well as a zippered case that has the VR headset, two controllers, a battery pack, and a key for the cubby to secure your items during your experience. ● Proceed to the Cyber Discovery Space and follow the instructions below. Virtual Reality (detailed instructions) ● Turn on the four CyberDiscovery monitors if they are not already on. ○ The best way to do this is to aim the remote control at the center of the wall of monitors and press the power button on the remote. -

Game Controller Accessories

Game Controller Accessories · The Game Controller wrist strap Game Controller wrist strap, for usage information please see our manual for details. · Battery Two AA alkaline batteries are needed to power the Game Controller. · Game Controller DVD Insert into your DVD drive to install all Game Controller software, user interface, and video games. Includes Bluetooth Driver installation program and complete Game Controller User Manual. 。1 。 Caution: How To Use The Game Controller Wrist Strap Playing with the Game Controller can involve rapid or vigorous motion. Please use the Game Controller wrist strap to prevent losing your grip on the Game Controller and causing damage to the Game Controller or surrounding objects, or injury to other people. First bullet: Please read the User Manual for the video game you are playing and read all instructions for the correct use of the Game Controller and all accessories. ATTACH THE GAME CONTROLLER WRIST STRAP WHEN USING THE Game Controller •Make sure all Players attach the Game Controller wrist strap and that the strap lock is securely fastened. •When sharing the Game Controller among multiple Players, make sure each person attaches the Game Controller wrist strap properly. •Using the Game Controller wrist strap will help prevent dropping or throwing the Game Controller accidentally when playing the games, which could damage the Game Controller or surrounding objects, or cause injury to other people. DO NOT LET GO OF THE Game Controller WHEN GAMING •Read the instruction booklet for the game you are playing and follow all game control instructions for correct use of the Game Controller and accessories. -

Android Based Remote Game Controller

International Journal of Current Engineering and Technology E-ISSN 2277 – 4106, P-ISSN 2347 - 5161 ® ©2014 INPRESSCO , All Rights Reserved Available at http://inpressco.com/category/ijcet Research Article Android based Remote Game Controller Ȧ* Ȧ Ȧ Ȧ Ȧ Akshay Kokate , Anay Karkhanis , Anushka Bhat , Chandrakant Khalse and Shalini.V.Wankhade Department of Computer Engineering (Savitribai Phule Pune University, Maharashtra, India Accepted 15 Nov 2014, Available online 01 Dec 2014, Vol.4, No.6 (Dec 2014) Abstract Expansion of games industry came in modern life, accompanied with innovative features in a game controller. Nowadays mobile industry is booming with the expansion of the variety of novel technologies, such as the use of 3D accelerometer sensors, high resolution cameras, and the capability of connecting to wireless networks and so on. Therefore, a smartphone can be added with the game control functions to become a mobile game controller. In this paper, we propose the design and implementation of making a mobile phone as a game controller using User Datagram Protocol socket programming. This system is developed by Java 2 Standard Edition and Google Android Software Development Kits which has already provided sensor and multi-touch APIs. Keywords: Game controller, Smartphone, Android, Java 2 Standard Edition, Sensor API 1. Introduction Besides, players also can use 3D sensor accelerators which can sense both rotational orientation and translational 1 Nowadays, mobile industry is booming greatly with the acceleration. Also, by generating native system input expansion of the variety of technologies accompanied such events, we can emulate the control functions, such as as the use of 3D accelerometer sensors, high resolution inputting by keyboard or mouse, accessing folder and cameras, capability of connecting to wireless networks etc.