Geometry, Trackball, and Transforms 1 Summary 2 Description

Recommended publications

Hot Key Reference Guide

Hot Key Reference Guide Hot Keys for Desktops, Laptops & Touch Screens This information is available in alternative formats from www.YourDolphin.com Introduction 1 This booklet lists the most useful hot keys to control the three editions of Dolphin SuperNova: Magnifier Magnifier & Speech Magnifier & Screen Reader A list of all the hot keys for your SuperNova software can be found in the user manual. To open the manual, press F1 or click the “Help” button on the SuperNova Control Panel. To get context help and a list of available hot keys while using particular applications press CAPS LOCK + F1 from within your application. Most users prefer the default Dolphin hot key set as the most popular Speak commands can be operated by pressing a single key. When a hot key includes “NUMPAD”, this means that the key is situated on the numeric keypad, a block of keys usually on the right end of your keyboard. On a laptop the Numpad keys are usually available by holding down the FN key. Where the Numpad is unavailable or difficult to use, we recommend using the Laptop Keyboard layout. Throughout this document, Laptop hot keys that differ from the desktop are shown inside square brackets. Choosing the Keyboard Layout and Hot Key Set While SuperNova is running, open the SuperNova Control Panel by holding LEFT CONTROL and pressing SPACEBAR. Then press ALT + G to open the “General” menu, press K to open the “Keyboard and hot keys” dialogue where you can choose: keyboard layout, keyboard language and preferred hot key set. Press OK to save any changes and close the dialogue. -

KEYBOARD SHORTCUTS (Windows)

KEYBOARD SHORTCUTS (Windows) Note: For Mac users, please substitute the Command key for the Ctrl key. This substitution will work for the majority of commands _______________________________________________________________________ General Commands Navigation Windows key + D Desktop to foreground Context menu Right click Alt + underlined letter Menu drop down, Action selection Alt + Tab Toggle between open applications Alt, F + X or Alt + F4 Exit application Alt, Spacebar + X Maximize window Alt, Spacebar + N Minimize window Ctrl + W Closes window F2 Renames a selected file or folder Open Programs To open programs from START menu: Create a program shortcut and drop it into START menu To open programs/files on Desktop: Select first letter, and then press Enter to open Dialog Boxes Enter Selects highlighted button Tab Selects next button Arrow keys Selects next (>) or previous button (<) Shift + Tab Selects previous button _______________________________________________________________________ Microsoft Word Formatting Ctrl + P Print Ctrl + S Save Ctrl + Z Undo Ctrl + Y Redo CTRL+B Make text bold CTRL+I Italicize CTRL+U Underline Ctrl + C Copy Ctrl + V Paste Ctrl + X Copy + delete Shift + F3 Change case of letters Ctrl+Shift+> Increase font size Ctrl+Shift+< Decrease font size Highlight Text Shift + Arrow Keys Selects one letter at a time Shift + Ctrl + Arrow keys Selects one word at a time Shift + End or Home Selects lines of text Change or resize the font CTRL+SHIFT+ > Increase the font size CTRL+SHIFT+ < -

Using Maniac Mansion™ on Your IBM® PC Or Compatible

Using Maniac Mansion™ on Your IBM® PC or Compatible Loading Maniac Mansion from Floppy Disk Note: We recommend that you first make a backup copy of all disks and put the originals in a safe place. After booting, place the Maniac Mansion disk in drive A and type: A: When you see the A: prompt, type: maniac (for demo, type: demo) In a few moments, you will see the Maniac Mansion selection screen. Cursor Control Keyboard cursor control can be done using either the arrow keys or the keypad: Numbers 1, 3, 7, and 9 take the cursor directly to the corners of the display; use the even-numbered keys for finer navigation. You can use a mouse if you have installed a compatible mouse driver. The left mouse button corresponds to "enter." Use the right mouse button (or right joystick button if you have a two-button joystick) to override cut scenes while playing the game. To select joystick or mouse, type: shift J- Toggle joystick on/off shift M- Toggle mouse on/off Setting Preferences When loaded, the program will select the best mode for your machine. When the game is running you can select other graphics modes by using these shifted keys: shift V- MCGA mode shift H- Hercules mode shift E- EGA mode shift T- Tandy® 16-color mode shift C- CGA mode shift B- CGA black-and-white mode After selecting the modes that you want, you can type 'shift W' to write your preferences to disk. To read the preferences while playing the game type 'shift R', or type 'maniac p' when starting a game. -

Keyboard Shortcuts in Acumatica

Keyboard Shortcuts in Acumatica Form Toolbar Shortcuts The following table displays the keyboard shortcuts you can use to execute form toolbar commands. Press This Key To Do This Esc Cancel Ctrl+Insert Add new record Ctrl+Delete Delete Ctrl+S Save Page Up Go to previous record Page Down Go to next record Summary Area Shortcuts The following table displays the keyboard shortcuts of the summary or selection area of any form. Press This Key To Do This Tab Move the cursor to the next element. Shift+Tab Move the cursor to the previous element. F3 Open the lookup table so you can search for a value. Ctrl+Left Arrow Move the cursor to the beginning of the next segment of the element if the element has multiple segments (for example, when you enter a subaccount). Ctrl+Right Arrow Move the cursor to the beginning of the previous segment of the element if the element has multiple segments (for example, when you enter a subaccount). Ctrl+Shift+L Invokes the Translations box for boxes with multi-language support if multilingual user input is enabled. For details, see Translations Dialog Box. For more information contact Crestwood Associates at [email protected] or 847-394-8820 Table Navigation Shortcuts The following table displays the keyboard shortcuts you can use to navigate tables on any forms. Press This Key To Do This Arrow Keys Move one cell up, down, left, or right in a table. Tab Move the cursor to the next element in the current row. Shift+Tab Move the cursor to the previous element in the current row. -

Module 2: Keyboarding – Literacy/CLB 1 Lesson 1 Digital Literacy Curriculum Resource Module 2: Keyboarding Lit/CLB

Digital Literacy Curriculum Resource Module 2: Keyboarding Lit/CLB 1 Lesson Learning 1. The QWERTY keyboard (most common keys used ) Objectives for 2. Correct finger placement Module # 2 3. Hand / keyboard Ergonomics (type in a healthy way) 4. Keyboarding / Typing Pre-requisite ● Basic Mouse skills: hold mouse correctly, left click, scroll skills ● Basic Navigation: scroll, cursor shapes, where and how to place a cursor ● Can recognize alphabet and symbols Preparation: ● Set up the room with computers or laptops for each student ● Make sure you open the following on each student computer and on the teacher computer before you start: Word or Google Docs or another word processing program ● Put extra keyboards on each table. ● Read through the lesson outline and notes for the teacher ● Print the handouts for students ● Turn on the Projector or the Interactive Whiteboard or print out visuals in large format to teach concepts ● Optional: open the link to the typing tutor (typing.com) on each student computer Note about the lesson: • In the lesson, the → symbol is used to give the answer when it’s a part of the script. Otherwise, the word ‘Answer’ is used. • Vocabulary: For this level, don't spend time teaching vocabulary. Demonstrate where things are on the computer and how they work. Suggested / Useful readings/videos for teachers to watch Materials: ● Keyboards connected to desktop computers or laptops ● Internet access (to access online practice) ● Extra keyboards, if available, 1 for teacher to demonstrate and one / table for Ss to share. -

Manual TABLE of CONTENTS

Manual TABLE OF CONTENTS 1. Properties 3 2. Specifications 3 2.1 General 3 2.2 Key Properties 4 2.3 Cable and Connectors 4 3. Package Contents 4 4. SKILLER MECH SGK1 Overview 5 4.1 SKILLER Function Key 5 4.2. Set Brightness 5 4.3. Select Lighting Effect 6 4.4. Set Frequency 6 4.5. Lighting Profiles 6 4.6. Gaming Mode 7 4.7. Arrow Keys Functions Are Interchangeable with the WASD Section 7 4.8. Function Keys with Preset Multimedia Actions 7 4.9. Reset 7 5. Connecting to the PC 7 SKILLER MECH SGK1 Dear Customer, Thank you for the purchase of your new Sharkoon product. To ensure a long service life, and full functionality of the product, we recommend that you read this manual thoroughly. SHARKOON Technologies www.sharkoon.com 1. Properties • Mechanical gaming keyboard with white LED illumination • Aluminium alloy reinforced top cover • Six pre-programmed lighting effects • Five individually programmable lighting profiles • Six brightness levels • Professional Kailh switches • N-key rollover • Anti-ghosting keys • Gaming mode with disabled windows key • Arrow keys functions are interchangeable with the WASD section • Function keys with preset multimedia actions • Integrated palm rest • Gold-plated USB plug 2. Specifications 2.1 General Type Gaming Keyboard Switch Technology Mechanical (Kailh) Illumination White, LED Adjustable Illumination 20 %, 40 %, 60 %, 80 %, 100 %, Off Lighting Effect 6 Lighting Profiles 5 Max Polling Rate 1,000 Hz Gaming Mode Block 3-Block Layout Palm Rest Integrated Weight incl. Cable 1.32 kg Dimensions (L x W x H) 458 x 220 x 44 mm Supported Operating Systems Windows 7/8/10 3 SKILLER MECH SGK1 2.2 Key Properties Function Keys with Preset Multimedia Actions Anti-Ghosting Keys N-Key Rollover Support Red Brown Blue Operating Force 50 g 45 g 50 g Switch Linear Tactile Tactile Switching Point Undetectable Detectable Detectable Precisely Click Point Undetectable Undetectable Detectable Distance to Actuation Point 2 mm 2 mm 1.9 mm Operating Life Cycle of Keys Min. -

JAWS Keystrokes

JAWS Keystrokes The keystrokes listed here are in tables and are grouped by headings. Major divisions are level two headings. Use the JAWS List of Headings (INSERT+F6) or the navigation quick key, H, to move quickly to the section of your choice. You can also use the navigation quick key, T, to move from one table to the next. Use the SHIFT key in combination with most navigation quick keys to move backwards. New JAWS Keystrokes Layered Keystrokes Layered keystrokes are keystrokes that require you to first press and release INSERT+SPACEBAR, and then press a different key to perform a function in JAWS. Layered keystrokes are easy to use and remember, and they do not interfere with native keystrokes within applications. Once you enter a layer, press the QUESTION MARK key to get a list of available keyboard commands within that layer. Description Command Helpful keystrokes within QUESTION MARK - ? each layer Text Analyzer INSERT+SPACEBAR, A View text on clipboard INSERT+SPACEBAR, C Show recent speech history INSERT+SPACEBAR, H INSERT+SPACEBAR, Clear recent speech history SHIFT+H Keyboard Lock on and off INSERT+SPACEBAR, L INSERT+SPACEBAR, M Select from marked place in (Mark Place first with Word (Microsoft Word only) CTRL+WINDOWS Key+K) Convenient OCR INSERT+SPACEBAR, O Research It INSERT+SPACEBAR, R Description Command Toggle Speech On or Off INSERT+SPACEBAR, S Table Layer INSERT+SPACEBAR, T Customize Web page with INSERT+SPACEBAR, X Flexible Web Windows Live Messages 1 through 5 numbers row JAWS Tandem Session Keystrokes Description Command Toggle Target and Controller Desktop Tandem Session INSERT+ALT+TAB (Controller User) Terminate JAWS Tandem Session (Controller or Target INSERT+ALT+T User) Pause Video (Controller INSERT+CTRL+SHIFT+V User) Web Pages and HTML Navigation quick keys make it faster and easier to move around on a Web page and anywhere else the Virtual Cursor is active. -

POK3R User Manual

POK3R User manual Description: The Vortexgear POK3R is the perfect solution for limited spaces in situations. Built up 3 kinds of layout, it also features individually programmable keys with 3 layers customization options, all easily set through what you want. Main Specification: 1. Tiny size keyboard with 61/62 keys 2. Cherry MX inside 3. Arbitrary programming 4. PBT material Keycaps 5. 3 layers customization 6. Built-up 3 kind of layout (Qwerty, Dvorak, Colemak) 7. Metal bezel Package Content 1. Keyboard x 1 2. USB Cable x 1 Enjoy now for this fantastic tool and your typing feeling. Programming Instruction Note: The POK3R built in 4-layers. The default layer can not be programmed. Only layer 2~layer 4 can be. FN + R_ctrl, M,<,> and ? keys are fixed can not be programmed. Step 1. Choose the layer (FN+ <,> or ? key) which you want to programming. Step 2. Press FN + Right CTRL to enter the programming mode (Spacebar right LED steadily lit) Step 3. Press the key you want to program (Spacebar right LED flashing) Step 4. Key in the programming content and then press PN (Spacebar right LED steadily lit again) Step 5. Repeat step 2 and step 3 to program other keys. Step 6. Press FN + Right CTRL to exit programming mode ( Spacebar right LED off) More: · Support FN layer programming, you can select the FN combination key (e.g., FN + A) to program it · Under SELECT state (step 1), you can press PN + any key to view its content in a word processing Software (e.g., Notepad) · Support time delay, press 15ms key (FN + T) each time to delay 15ms, press 0.1s key (FN + G) each time to delay 0.1s, press 0.5s key(FN + B) each time to delay 0.5s. -

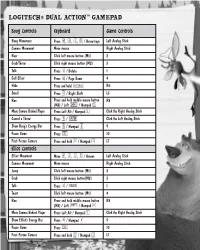

Logitech® Dual Action™ Gamepad

LOGITECH® DUAL ACTION™ GAMEPAD Boog Controls Keyboard Game Controls Boog Movement Press W, A, S, D / Arrow keys Left Analog Stick Camera Movement Move mouse Right Analog Stick Roar Click left mouse button (M1) 2 Grab/Throw Click right mouse button (M2) 3 Talk Press E / Delete 1 Call Elliot Press Q / Page Down 4 Hide Press and hold R6 Smell Press C / Right Shift L5 Run Press and hold middle mouse button R8 (M3) / Left q / Numpad 0 Move Camera Behind Player Press Left Alt / Numpad 1 Click the Right Analog Stick Cancel a Throw Press F / e Click the Left Analog Stick Show Boog’s Energy Bar Press R / Numpad 4 9 Pause Game Press s 10 First-Person Camera Press and hold X / Numpad 2 L7 Elliot Controls Elliot Movement Move W, A, S, D / Arrows Left Analog Stick Camera Movement Move mouse Right Analog Stick Jump Click left mouse button (M1) 2 Grab Click right mouse button(M2) 3 Talk Press E / m 1 Taunt Click left mouse button (M1) 4 Run Press and hold middle mouse button R8 (M3) / Left q / Numpad 0 Move Camera Behind Player Press Left Alt / Numpad 1 Click the Right Analog Stick Show Elliot’s Energy Bar Press R / Numpad 4 9 Pause Game Press s 10 First-Person Camera Press and hold X / Numpad 2 L7 LOGITECH® CORDLESS RUMBLEPAD™ 2 Boog Controls Keyboard Game Controls Boog Movement Press W, A, S, D / Arrow keys Left Analog Stick Camera Movement Move mouse Right Analog Stick Roar Click left mouse button (M1) A Grab/Throw Click right mouse button (M2) B Talk Press E / Delete X Call Elliot Press Q / Page Down Y Hide Press and hold R1 Smell Press C / Right -

Excel Keyboard Shortcuts

Excel Keyboard Shortcuts Keyboard shortcuts for using the Help task pane and Help window The Help Pane is a task pane that provides access to all Office Help content. As a task pane, the Help Pane appears as part of the active application. The Help window displays topics and other Help content and appears as a window next to, but separate from, the active application. In the Help task pane: F1 Display the Help task pane. F6 Switch between the Help task pane and the active application. TAB Select the next item in the Help task pane. SHIFT+TAB Select the previous item in the Help task pane. ENTER Perform the action for the selected item. DOWN ARROW and UP ARROW In a Table of Contents, select the next and previous item, respectively. RIGHT ARROW and LEFT ARROW In a Table of Contents, expand and collapse the selected item, respectively. ALT+LEFT ARROW Move back to the previous task Pane. ALT+RIGHT ARROW Move forward to the next task Pane. CTRL+SPACEBAR Open the menu of Pane options. CTRL+F1 Close and reopen the current task pane. RIGHT ARROW Expand a +/- list. LEFT ARROW Collapse a +/- list. In the Help window: TAB Select the next hidden text or hyperlink, or Show All or Hide All at the top of a topic SHIFT+TAB Select the previous hidden text or hyperlink, or the Browser View button at the top of a Microsoft Office Web site article ENTER Perform the action for the selected Show All, Hide All, hidden text, or hyperlink ALT+LEFT ARROW Move back to the previous Help topic. -

VR-Engage First Experience Iii Contents

VR-Engage First Experience Version 1.4 Copyright © 2019 VT MAK All rights Reserved. Printed in the United States. Under copyright laws, no part of this document may be copied or reproduced in any form without prior written consent of VT MAK. VR-Exchange™, VR-TheWorld™, VR-Vantage™, DI-Guy™, and DI-Guy Scenario™ are trademarks of VT MAK. MÄK Technologies®, VR-Forces®, RTIspy®, B-HAVE®, and VR-Link® are registered trademarks of VT MAK. GL Studio® is a registered trademark of The DiSTI® Corporation. Portions of this software utilize SpeedTree® technology (©2008 Interactive Data Visualization, Inc.). SpeedTree is a registered trademark of Interactive Data Visualization, Inc. All rights reserved. SilverLining™ is a trademark of Sundog Software. Terrain Profiles are based in part on the work of the Qwt project (http://qwt.sourceforge.net). 3ds Max® is a registered trademark of Autodesk, Inc. All other trademarks are owned by their respective companies. For additional trademark and copyright information, please see VR-Engage Users Guide. VT MAK 150 Cambridge Park Drive, 3rd Floor Cambridge, MA 02140 USA Voice: 617-876-8085 Fax: 617-876-9208 [email protected] www.mak.com Revision VRN-1.4-3-190718 Contents Chapter 1. Introduction to VR-Engage Chapter 2. Controlling Entities in VR-Engage 2.1. Start VR-Engage and Load a Scenario 2.2. Explore as a Human Character 2.2.1. Walking Around 2.2.2. Resetting Entities to Saved Position 2.2.3. The Action Menu 2.2.4. Shooting At Targets