Logging Bus for Field Failure Data Analysis in Distributed Systems

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

A Comparison of C++, C#, Java, and PHP in the Context of E-Learning

Mikael Olsson. Master's Thesis in Computer Science, KTH Information and Communication Technology (Royal Institute of Technology), Stockholm, Sweden, April 30, 2009. TRITA-ICT-EX-2009:8. Examiner: Prof. Gerald Q. Maguire Jr.

Abstract: The first part of this master thesis presents an effective method for producing video tutorials. This method was used during this thesis project to create tutorials on the e-learning site PVT (http://www.programmingvideotutorials.com). Part one also discloses how the production method was developed and how tutorials produced using this method compare to professional video tutorials. Finally, it evaluates the result of this thesis work and the efficiency of the production method. The second part of this thesis compares the syntactical similarities and differences between four of the languages taught via video tutorials for PVT. These languages are: C++, C#, Java, and PHP. The purpose of this comparison is to provide a bridge for programmers knowing one of these languages to rapidly learn one or more of the other languages. This is necessary because no single language is suited for every area of software development. Knowing a multitude of languages gives a programmer a wider range of job opportunities and more choices in how to solve their problems. Part two of the thesis also includes a comparison of Java and C# in the context of a video tutorial series that shows how to build a basic text editor.

ReSharper Benefits for You and Your Business

ReSharper is the most intelligent productivity tool for .NET development. However, it proves to be a great asset not only for software engineers, but also for your whole software development business. ReSharper brings the necessary intelligence, code insight and productivity to give your business a competitive advantage on three organization levels:
• Benefits for developers
• Benefits for project managers
• Benefits for business

These benefits only get better with each new ReSharper release, due to ongoing performance improvements and added functionality. For example, recent versions of ReSharper have introduced ReSharper Build, which finds ways to reduce compilation time in Visual Studio, as well as new code inspections and refactorings. Even as Microsoft makes improvements to new releases of Visual Studio, ReSharper stays way ahead of the curve. (www.jetbrains.com/resharper)

Benefits for developers
• Save time on compilation, locating & fixing errors – ReSharper instantly detects and highlights errors in your code and allows automatic corrections for most errors
• Automate routine tasks – Quickly create methods, properties, variables or classes from their usages, generate constructors, properties, delegating and equality members; implement and override members; and much more
• Get useful hints right when you need them – IntelliSense, including code completion and quick parameter

Kotlin 1.4 Online Event, October 14, 2020, KathrinPetrova

Kotlin 1.4 Online Event: It's time for Kotlin Multiplatform Mobile! Ekaterina Petrova (KathrinPetrova), October 14, 2020.

"I love mobile development, but I can't stand writing the same code twice!" "Me neither!"

[Slide sequence: application layers]
• UI: Views
• Presentation: Presenters, View Models, Controllers
• Business / Domain: Entities, Use Cases, Interactors
• Data / Core: Repositories, HTTP Clients, Cache

UI centric (React Native, Flutter, Xamarin Forms): for thin clients, simple apps, MVP. Core centric ("How can I share business logic and core code?"): for complex apps, fat clients, strict requirements.

.NET Core, ASP.NET Core, and ASP.NET Core MVC: Brave New World

Outline: • Motivation • .NET Core • ASP.NET Core • ASP.NET Core MVC

Motivation: • .NET has a strong history • Very popular • Lots of investments • There is more than just Windows • Many more platforms, devices, and clouds • .NET is evolving • .NET needs to learn to run in more places • .NET needs modern tooling

.NET runtimes target a platform: • .NET Framework • Windows-only • .NET Core • Cross-platform runtime • .NET Native (UWP) • Mono • Windows Phone • More…

.NET Core, next gen .NET for server apps: • Cross platform • Windows, Linux, Mac, FreeBSD • Portable • Can be ~/bin deployed • Can be user or machine installed as well • Open source • https://github.com/dotnet/coreclr • Contains core runtime and mscorlib (e.g. GC, JIT, BCL) • Does not contain many frameworks (e.g. WCF, WPF)

Development ecosystem: • SDK • Command-line tooling (dotnet) • Project system • File-system based project system (project.json) • Runtime, libraries, and packaging • NuGet-focused • Editors/IDEs • Any text editor (VS Code, Emacs, Sublime, etc) and OmniSharp (OSS) • Visual Studio (Microsoft) • Project Rider (JetBrains)

Installing .NET SDK: • Use nightly builds (until RC2 is released) • https://github.com/dotnet/cli

dotnet, command line tool: • Create new project • Install NuGet dependencies • Build application • Load .NET and run application • Package library • Publish application

dotnet new: • Creates new project • program.cs • project.json • Console-based application using System; namespace ConsoleApplication

Downloading and Compiling Gambas: Read the Following Recommendations CAREFULLY! You Must Have Privileges to Write the

Third digital edition of MYGNET-MAGAZINE, January 2006. We hope this year brings many opportunities for everyone and that all our dreams and goals come true. We also want to acknowledge the excellent work of the contributors who provided the content for this issue, and we are grateful for the enormous support we have received from contributors and readers alike. It is very encouraging to receive your comments and opinions, which help us continue this effort and produce something of great value for everyone. We once again invite you to take part.

Editors: Martín Roberto Mondragón Sotelo ([email protected]), Gustavo Santiago Lázaro ([email protected]). Write to us at [email protected]. Visit us at http://www.mygnet.com or http://www.mygnet.org

Applications: • Cold backup and recovery • DotGNU Portable.NET • Installing Gambas: important notes • Gambas: compilation and installation • External tables in Oracle 9i

AWS Mobile SDK Xamarin Developer Guide

AWS Mobile SDK: Xamarin Developer Guide. Copyright © Amazon Web Services, Inc. and/or its affiliates. All rights reserved. Amazon's trademarks and trade dress may not be used in connection with any product or service that is not Amazon's, in any manner that is likely to cause confusion among customers, or in any manner that disparages or discredits Amazon. All other trademarks not owned by Amazon are the property of their respective owners, who may or may not be affiliated with, connected to, or sponsored by Amazon.

Table of Contents: What is the AWS Mobile SDK for .NET and Xamarin? • Related guides and topics • Archived reference content • What's included in the AWS Mobile SDK for .NET and Xamarin? • Compatibility • How do I get the AWS Mobile SDK for .NET and Xamarin?

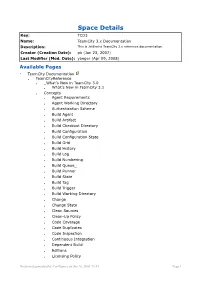

Space Details Key: TCD3 Name: TeamCity 3.x Documentation Description: This Is JetBrains TeamCity 3.x Reference Documentation

Space Details. Key: TCD3. Name: TeamCity 3.x Documentation. Description: This is JetBrains TeamCity 3.x reference documentation. Creator (Creation Date): pk (Jan 23, 2007). Last Modifier (Mod. Date): yaegor (Apr 09, 2008). Document generated by Confluence on Jun 16, 2008 13:43.

Available Pages: • TeamCity Documentation • TeamCityReference • What's New in TeamCity 3.0 • What's New in TeamCity 3.1 • Concepts • Agent Requirements • Agent Working Directory • Authentication Scheme • Build Agent • Build Artifact • Build Checkout Directory • Build Configuration • Build Configuration State • Build Grid • Build History • Build Log • Build Numbering • Build Queue • Build Runner • Build State • Build Tag • Build Trigger • Build Working Directory • Change • Change State • Clean Sources • Clean-Up Policy • Code Coverage • Code Duplicates • Code Inspection • Continuous Integration • Dependent Build • Editions • Licensing Policy • Notification • Notification Conditions • Notification Rules • Notifier • Permission • Personal Build • Pinned Build • Pre-Tested (Delayed) Commit • Project • Remote Run • Responsibility • Role • RSS Feed • Run Configuration Policy • Security • Supported Platforms and Environments • TeamCity Data Directory • Testing Frameworks • User Account • VCS Root • Version Control System • Developing TeamCity Plugins • Agent Side Extensions • Plugin API FAQ • Server Side Extensions • Statistics customization • Typical Plugins • Web UI Extensions • Installation and Upgrade • Installation • Installing Additional Plugins • Installing and

Spring 2021: A Guide to Using CLion

COP 3014: Spring 2021. Sharanya Jayaraman, January 8, 2021.

1 CLion
CLion is a comprehensive C++ Integrated Development Environment (IDE) developed and maintained by JetBrains. It is very intuitive and easy to use. For this class, we will use CLion for all of our work.

1.1 Applying for a Student License
In order to use CLion, you need to apply for a license. Go to https://www.jetbrains.com. From the menu on the top of the screen, choose Solutions and then JetBrains for Education. You should see the following screen:
Figure 1: JetBrains Academic Licences
Choose the tab that says "Free Access to Professional Tools". Under the "For Students and Teachers" tab, scroll down and click on the blue "Apply Now" button. You will now see a form to apply for a Student License, as shown below. Please fill out the form and use your FSU email ID.
Figure 2: JetBrains Academic Licences
Click on "Apply Now". You will get an email from JetBrains in a few minutes, asking you to confirm the request for a student license. Once you confirm the request, they will send you another email, asking you to create a JetBrains Student Account. This takes about 5 minutes. Once you create the account, you should see a page with your Key.

2 Installing CLion
You can now download and install CLion. Download CLion from https://www.jetbrains.com/clion/download. The page will automatically show you the right installer for your Operating System. Download the installer.

About Me, Hobbies, Interests, Education, Skills, Training

Name: Tobias Burger. Birthday: 28.02.1988. Address: Riesenburgstraße 24, 81249 Munich, Germany. E-Mail: [email protected]. Mobile: +49 152 24 04 61 78. Website: www.taijj.net

Structured, reliable, and self-reliant game and application developer with 8+ years of experience developing for the digital division of Ravensburger. Focused on the tasks at hand, while also considering team performance. Flexible in adjusting to different projects, and creative with a multitude of interests and ambitions.

EXPERIENCE
• Ravensburger AG, since 2017: Software Developer. Implementation of game and application logic, primarily with the Unity game engine
• Ravensburger-Digital GmbH, 2012-2017: Software Developer. Implementation and development of game logic, UI/UX, and VFX with the Unity game engine
• Ravensburger-Digital GmbH, 2012: Internship, Game Design + Software Development
• Ravensburger-Digital GmbH, 2011: Internship, Software Development

SKILLS
Unity 3D • C# • Java • Actionscript 3 • HTML, CSS • Javascript, Php • Git • Jira, Confluence • German • English • VFX, Shaders • Blender • Adobe Photoshop • Adobe After Effects • Animation • Pixelart • Game Design • Audio Editing

TRAINING
• Java Programming Masterclass, since 2020: Video course on Udemy.com
• Unity Editor Scripting Masterclass, 2019: Video course on Udemy.com
• Unite Copenhagen, 2019: Attendance at Unity's own developer conference in the Danish capital
• Scrum Jumpstart, 2019: Attendance at a company internal workshop for the basics of Scrum

Comparative Studies of Six Programming Languages

Zakaria Alomari, Oualid El Halimi, Kaushik Sivaprasad, Chitrang Pandit. Concordia University, Montreal, Canada.

Abstract: Comparison of programming languages is a common topic of discussion among software engineers. Multiple programming languages are designed, specified, and implemented every year in order to keep up with the changing programming paradigms, hardware evolution, etc. In this paper we present a comparative study between six programming languages: C++, PHP, C#, Java, Python, VB. These languages are compared under the characteristics of reusability, reliability, portability, availability of compilers and tools, readability, efficiency, familiarity and expressiveness.

1. Introduction: Programming languages are a fascinating and interesting field of study. Computer scientists tend to create new programming languages. Thousands of different languages have been created in the last few years. Some languages enjoy wide popularity and others introduce new features. Each language has its advantages and drawbacks. The present work provides a comparison of various properties, paradigms, and features used by a couple of popular programming languages: C++, PHP, C#, Java, Python, VB. With this variety of languages and their widespread use, software designers and programmers should be aware

TCD65-141111-2205-6028.Pdf

1. TeamCity Documentation
1.1 TeamCityReference
1.1.1 What's New in TeamCity 6.5
1.1.2 Getting Started
1.1.3 Concepts
1.1.3.1 Agent Home Directory
1.1.3.2 Agent Requirements
1.1.3.3 Agent Work Directory
1.1.3.4 Authentication Scheme
1.1.3.5 Build Agent
1.1.3.6 Build Artifact
1.1.3.7 Build Chain
1.1.3.8 Build Checkout Directory
1.1.3.9 Build Configuration
1.1.3.10 Build Configuration Template
1.1.3.11 Build Grid
1.1.3.12 Build History
1.1.3.13 Build Log
1.1.3.14 Build Number
1.1.3.15 Build Queue
1.1.3.16 Build Runner
1.1.3.17 Build State
1.1.3.18 Build Tag
1.1.3.19 Build Working Directory
1.1.3.20 Change
1.1.3.21 Change State
1.1.3.22 Clean Checkout
1.1.3.23 Clean-Up
1.1.3.24 Code Coverage
1.1.3.25 Code Duplicates
1.1.3.26 Code Inspection
1.1.3.27 Continuous Integration
1.1.3.28 Dependent Build
1.1.3.29 Difference Viewer
1.1.3.30 Guest User
1.1.3.31 History Build
1.1.3.32 Notifier
1.1.3.33 Personal Build

Add Markdown Support for Knowledge Base Articles

Collecting Feedback. Doug Schmidt. Forum name: #Feature Request

Deskpro has very inconsistent support for authoring good looking technical content across the various parts of the product:
- Guides: Markdown is supported. Yay!
- Ticket workflows: C+: Markdown isn't supported, but at least the "code block" icon actually uses a mono-spaced font, and the block looks visually different.
- Knowledge Base articles: D-: All authored content looks awful. No mono-spaced fonts are supported, and the "code" style is a weird UI beast that strips out newlines from selected text. WTH?

Using software elicits one of two possible emotions: Joy or rage. I can't get my technical knowledge base articles to look like anything approaching a competent professional document that another coder would say "Yeah, it looks like Doug knows what he is doing". KB-authoring within Deskpro is such a step backward compared to other tech writing that I do for my job that I have stopped trying to use Deskpro. The KB-authoring (and reading) experience is that awful and infuriating. The Deskpro KB authoring does not let me do my job of effectively demonstrating technical content (code snippets and API examples) to my technical customers. All the workarounds we have to go through just make me sad and cause inconvenience to my customers.

Please make code blocks work properly in KB articles. Please add a monospaced font so that code will look like code! JetBrains Mono is an excellent and free choice: https://www.jetbrains.com/lp/mono/ And please support Markdown content like the rest of the internet.