Cloud-Ready Hypervisor-Based Security

Recommended publications

Test-Beds and Guidelines for Securing IoT Products and for Secure Set-Up Production Environments

IoT4CPS – Trustworthy IoT for CPS. FFG - ICT of the Future, Project No. 863129. Deliverable D7.4: Test-beds and guidelines for securing IoT products and for secure set-up production environments.

The IoT4CPS Consortium: AIT – Austrian Institute of Technology GmbH; AVL – AVL List GmbH; DUK – Donau-Universität Krems; IFAT – Infineon Technologies Austria AG; JKU – JK Universität Linz / Institute for Pervasive Computing; JR – Joanneum Research Forschungsgesellschaft mbH; NOKIA – Nokia Solutions and Networks Österreich GmbH; NXP – NXP Semiconductors Austria GmbH; SBA – SBA Research GmbH; SRFG – Salzburg Research Forschungsgesellschaft; SCCH – Software Competence Center Hagenberg GmbH; SAGÖ – Siemens AG Österreich; TTTech – TTTech Computertechnik AG; IAIK – TU Graz / Institute for Applied Information Processing and Communications; ITI – TU Graz / Institute for Technical Informatics; TUW – TU Wien / Institute of Computer Engineering; XNET – X-Net Services GmbH.

© Copyright 2020, the Members of the IoT4CPS Consortium. For more information on this document or the IoT4CPS project, please contact: Mario Drobics, AIT Austrian Institute of Technology, mario.drobics@ait.ac.at

Dissemination level: PUBLIC

Document Control. Title: Test-beds and guidelines for securing IoT products and for secure set-up production environments. Type: Public. Editor(s): Katharina Kloiber. E-mail: kk@x-net.at. Author(s): Katharina Kloiber, Nikolaus Dürk, Silvio Stern. Reviewer(s): Stephanie von Rüden, Violeta Damjanovic, Leo Happ-Botler. Doc ID: D7.4

Amendment History (Version, Date, Author, Description/Comments):
V0.1, 13.01.2020, Silvio Stern, Technology Analysis
V0.2, 10.03.2020, Silvio Stern, Possible Research Fields for the SBI-System
V0.3, 31.08.2020, Katharina Kloiber, Initial version prepared

FLOSS != GitHub: A Case Study of Linux/BSD Perceptions from Microsoft's Acquisition of GitHub

FLOSS != GitHub: A Case Study of Linux/BSD Perceptions from Microsoft’s Acquisition of GitHub. Raula Gaikovina Kula, Hideki Hata, Kenichi Matsumoto. Nara Institute of Science and Technology, Japan. {raula-k, hata, matumoto}@is.naist.jp

Abstract: In 2018, the software industry giants Microsoft made a move into the Open Source world by completing the acquisition of mega Open Source platform, GitHub. This acquisition was not without controversy, as it is well-known that the free software communities includes not only the ability to use software freely, but also the libre nature in Open Source Software. In this study, our aim is to explore these perceptions in FLOSS developers. We conducted a survey that covered traditional FLOSS source Linux, and BSD communities and received 246 developer responses. The results of the survey confirm that the free community did trigger some communities to move away from GitHub and raised discussions into free and open software on the GitHub platform. The study reminds us that although GitHub is influential and trendy, it does not representative all FLOSS communities.

[...] has had its share of disagreements with Microsoft [6], [7], [8], [9], the only reported negative opinion of free software community has different attitudes towards GitHub is the idea of ‘forking’ so far, as it is considered as a danger to FLOSS development [10]. In this paper, we report on how external events such as acquisition of the open source platform by a closed source organization triggers a FLOSS developers such the Linux/BSD Free Software communities.

II. TARGET SUBJECTS AND SURVEY DESIGN

Institutionalizing FreeBSD Isolated and Virtualized Hosts Using bsdinstall(8), zfs(8) and nfsd(8)

Institutionalizing FreeBSD Isolated and Virtualized Hosts Using bsdinstall(8), zfs(8) and nfsd(8). [email protected] @MichaelDexter, BSDCan 2018.

Jails and bhyve… FreeBSD’s had Isolation since 2000 and Virtualization since 2014. Why are they still strangers? Integrating as first-class features. This example but this is not FreeBSD-exclusive. jail(8) and bhyve(8) “guests”: Application Binary Interface vs. Instructions Set Architecture. The FreeBSD installer; the best file system/volume manager available; the Network File System.

Broad Motivations: Virtualization! Containers! Docker! Zones! Droplets! More more more!

My Motivations: 2003: Jails to mitigate “RPM Hell”. 2011: “bhyve sounds interesting...”. 2017: Mitigating Regression Hell. 2018: OpenZFS EVERYWHERE.

A Tale of Two Regressions. Listen up. Regression One: FreeBSD Commit r324161, “MFV r323796: fix memory leak in [ZFS] g_bio zone introduced in r320452”. Bug: r320452: June 28th, 2017. Fix: r324162: October 1st, 2017. 3,710 Commits and 3 Months Later: June 28th through October 1st. BUT: July 27th, FreeNAS MFC. Slips into FreeNAS 11.1, released December 13th. Fixed in FreeNAS January 18th. 3 Months in FreeBSD HEAD, 36 Days

Thread Scheduling in Multi-Core Operating Systems Redha Gouicem

Thread Scheduling in Multi-core Operating Systems. Redha Gouicem.

To cite this version: Redha Gouicem. Thread Scheduling in Multi-core Operating Systems. Computer Science [cs]. Sorbonne Université, 2020. English. tel-02977242. HAL Id: tel-02977242, https://hal.archives-ouvertes.fr/tel-02977242. Submitted on 24 Oct 2020.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Ph.D. thesis in Computer Science: Thread Scheduling in Multi-core Operating Systems. How to Understand, Improve and Fix your Scheduler. Redha Gouicem. Sorbonne Université, Laboratoire d’Informatique de Paris 6, Inria Whisper Team. Ph.D. defense: 23 October 2020, Paris, France.

Jury members: Mr. Pascal Felber, Full Professor, Université de Neuchâtel (Reviewer); Mr. Vivien Quéma, Full Professor, Grenoble INP (ENSIMAG) (Reviewer); Mr. Rachid Guerraoui, Full Professor, École Polytechnique Fédérale de Lausanne (Examiner); Ms. Karine Heydemann, Associate Professor, Sorbonne Université (Examiner); Mr. Etienne Rivière, Full Professor, University of Louvain (Examiner); Mr. Gilles Muller, Senior Research Scientist, Inria (Advisor); Mr. Julien Sopena, Associate Professor, Sorbonne Université (Advisor).

Abstract: In this thesis, we address the problem of schedulers for multi-core architectures from several perspectives: design (simplicity and correctness), performance improvement and the development of application-specific schedulers.

Competence Catalogue Summary 2 (Sintesi Catalogo Competenze 2)

Internet of Things

Competences:
• Design and development of firmware for low-power and ultra-low-power microcontrollers such as Arduino, Microchip, NXP, Texas Instruments and Freescale
• Development on embedded PCs based on ARM processors and the Linux operating system, such as Portux, Odroid, RaspberryPI and Nvidia Jetson
• Design and development of wired and wireless sensor networks based on standards such as ZigBee, SimpliciTI, 6LoWPAN, 802.15.4 and Modbus
• Design and development of self-powered systems and energy harvesting solutions
• Optimization of software and wireless protocols for efficient energy use within self-powered nodes
• Design and prototyping (with CAD tools, 3D printers, etc.) of electronic circuits for [...]

Fields of application:
• Meteorological environmental monitoring of climate and air-quality parameters, including on the move
• Distributed environmental monitoring for precision agriculture
• Monitoring of water quality and environmental risk parameters (floods, landslides, etc.)
• Monitoring of indoor environments (schools, libraries, public offices, etc.)
• Smart building: energy efficiency, environmental comfort and safety
• Use of microUAV platforms for distributed measurements, for photogrammetry, telemetry and cartography applications, for automatic navigation systems based on sensors and image processing, and for mission planning and management
• Local Smart Grid for the optimization [...]

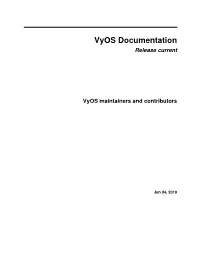

VyOS Documentation Release Current

VyOS Documentation, Release current. VyOS maintainers and contributors. Jun 04, 2019.

Contents:
1 Installation
1.1 Verify digital signatures
2 Command-Line Interface
3 Quick Start Guide
3.1 Basic QoS
4 Configuration Overview
5 Network Interfaces
5.1 Interface Addresses
5.2 Dummy Interfaces
5.3 Ethernet Interfaces
5.4 L2TPv3 Interfaces
5.5 PPPoE
5.6 Wireless Interfaces
5.7 Bridging
5.8 Bonding
5.9 Tunnel Interfaces
5.10 VLAN Sub-Interfaces (802.1Q)
5.11 QinQ
5.12 VXLAN
5.13 WireGuard VPN Interface
6 Routing
6.1 Static
6.2 RIP
6.3 OSPF
6.4 BGP
6.5 ARP
7

Deploying IBM Spectrum Accelerate on Cloud

Deploying IBM Spectrum Accelerate on Cloud. Bert Dufrasne, Nancy Kinney, Donald Mathisen, Christopher Moore, Markus Oscheka, Ralf Wohlfarth, Eric Zhang. Redpaper. International Technical Support Organization. December 2015. REDP-5261-00.

Note: Before using this information and the product it supports, read the information in “Notices” on page v. First Edition (December 2015). This edition applies to IBM Spectrum Accelerate Version 11.5. © Copyright International Business Machines Corporation 2015. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Contents:
Notices
Trademarks
IBM Redbooks promotions
Preface
Authors
Now you can become a published author, too
Comments welcome
Stay connected to IBM Redbooks
Chapter 1. Introducing IBM SoftLayer and IBM Spectrum Accelerate
1.1 IBM Cloud computing overview
1.2 IBM SoftLayer Cloud overview
1.3 IBM Spectrum Accelerate
1.3.1 IBM Spectrum Accelerate on Cloud
Chapter 2. IBM Spectrum Accelerate on Cloud
2.1 Description of service
2.2 Customer responsibilities
2.3 Configuration types
2.4 Hardware in SoftLayer data centers
2.5 Ordering process
2.5.1 Order process flow
2.6 Changes to the existing configuration
2.6.1 Increasing capacity and performance
2.6.2 Capacity and performance reduction
2.6.3 Termination of service
2.7 Restrictions
2.7.1 Ordering for use in customer SoftLayer account
2.8 Connectivity

Building a Virtualisation Appliance with FreeBSD/bhyve/OpenZFS Jason Tubnor ICT Senior Security Lead Introduction

Building a virtualisation appliance with FreeBSD/bhyve/OpenZFS. Jason Tubnor, ICT Senior Security Lead.

Introduction: Building a virtualisation appliance for use within an NGO/NFP in the Australian health sector. Outline: About Me; Latrobe Community Health Service (LCHS); Background; Problem; Concept; Production; Reiteration.

About Me: 26 years of IT experience. Introduced to Open Source in the mid 90s. Discovered OpenBSD in 2000. A user and advocate of OpenBSD and FreeBSD. Life outside of computers: ultra endurance gravel cycling.

Latrobe Community Health Service (LCHS): Originally a Gippsland-based NFP/NGO health service. ICT manages 900+ users, servicing 51 sites across Victoria, Australia, covering ~230,000 km² (roughly the size of Laos in Asia or Minnesota in the USA). “Better health, Better lifestyles, Stronger communities”.

Background: In the first half of 2016, awarded a contract to provide NDIS services. Mid 2016: deployment of initial infrastructure (MPLS connection, L3 switch gear, ESXi host running a Windows Server 2016 for printing services).

Background, cont.: Staff numbers grew. We hit capacity constraints on the managed MPLS network. An offloading guest was added to the ESXi host so that VPN traffic could be offloaded from the main network, using a cheaply available ISP internet connection.

Problem: Taking stock of the lessons learned in the first phase, we needed to come up with a reproducible device. The device was required to be durable in harsh conditions. Budget constraints/cost savings. Licensing model. Phase 2 was already being negotiated, so a solution was required quickly.

Concept: bhyve [FreeBSD] was working extremely well in testing. Excellent hardware support. Liberally licensed. OpenZFS. Simplistic. Small footprint for a type 2 hypervisor. Hardware discovery phase: FreeBSD; required virtualisation components in CPU. Concept, cont.

Bhyve - Improvements to Virtual Machine State Save and Restore

bhyve - Improvements to Virtual Machine State Save and Restore. Darius Mihai and Mihai Carabas, University POLITEHNICA of Bucharest, Splaiul Independenței 313, Bucharest, Romania, 060042. Email: [email protected] Email: [email protected]

Abstract: As more complex tasks are delegated to distributed servers, virtual machine hypervisors need to adapt and provide features that allow redundancy and load balancing. One such mechanism is the virtual machine save and restore through system snapshots. A snapshot should allow the complete restoration of the state that the virtual machine was in when the snapshot was created. Since the snapshot should encapsulate the entire state of the virtualized system, the guest system should not be able to differentiate between the moment a snapshot was created and the moment when the system was restored, regardless of how much real time has passed between the two events. This paper will present how the time management and block devices are saved and restored for bhyve, FreeBSD’s virtual machine hypervisor.

[...] Regardless of their exact function, a periodic task is a routine that will have to be called at (or as close as possible) a set interval. For example, the basic Unix command sleep can be used to perform an operation every N seconds if sleep $N is called in a script loop. Since it is safe to assume that all modern processors have hardware timekeeping components implemented, sleep will request from the operating system that a software timer (i.e., one that is implemented by the operating system as an abstraction [1]) to be set for N seconds in the future and will yield the processor, without [...]
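The periodic-task idea in the excerpt above (do some work, then sleep for N seconds, in a loop) can be sketched in a few lines of Python. This is only an illustration and is not taken from the paper: the function name `run_periodically` and its parameters are hypothetical, and the loop sleeps until a fixed deadline rather than for a flat N seconds, so the time spent in the task itself does not stretch the interval. The `sleep` and `clock` arguments default to `time.sleep` and `time.monotonic` and can be replaced to drive the loop with a simulated clock.

```python
import time
from typing import Callable, List


def run_periodically(
    task: Callable[[], None],
    interval_s: float,
    iterations: int,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> List[float]:
    """Call task() `iterations` times, aiming for one call per interval_s.

    Returns the clock reading taken just before each call. All names here
    are hypothetical and chosen for illustration only.
    """
    start_times: List[float] = []
    next_deadline = clock()
    for _ in range(iterations):
        start_times.append(clock())
        task()
        # Schedule relative to the previous deadline, not to "now", so the
        # duration of task() does not accumulate as drift.
        next_deadline += interval_s
        remaining = next_deadline - clock()
        if remaining > 0:
            # Ask the OS for a software timer and yield the processor.
            sleep(remaining)
    return start_times
```

Calling `run_periodically(lambda: print("tick"), 1.0, 3)` would print three ticks roughly one second apart. A plain `sleep $N` shell loop, as mentioned in the excerpt, instead waits N seconds after each run, so its period is N plus the task's run time.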

Virtual Router Performance

SOFTWARE DEFINED NETWORKING: VIRTUAL ROUTER PERFORMANCE. Bachelor Degree Project in Network and System Administration. Level ECTS. Spring term 2016. Björn Svantesson. Supervisor: Jianguo Ding. Examiner: Manfred Jeusfeld.

Table of Contents:
1 Introduction
2 Background
2.1 Virtualization
2.2 Hypervisors
2.3 VMware ESXi
2.4 Software defined networking
2.5 The split of the data and control plane
2.6 Centralization of network control
2.7 Network virtualization
2.8 Software routers

In PDF Format

Arranging Your Virtual Network on FreeBSD. Michael Gmelin ([email protected]). January 2020. 2020-01-08 (final), CC BY 4.0.

Contents:
Introduction: Document Conventions; License
Plain Jails: Plain Jails Using Inherited IP Configuration; Plain Jails Using a Dedicated IP Address; Plain Jails Using a VLAN IP Address; Plain Jails Using a Loopback IP Address; Adding Outbound NAT for Public Traffic; Running a Service and Redirecting Traffic to It
VNET Jails and bhyve VMs: VNET Jails Using sysutils/pot; VNET Jails Using sysutils/iocage; Managing Bridges; Adding bhyve VMs and DHCP to the Mix; Preventing Traffic Between VNET Jails/VMs; Firewalling Inside VNET Jails/VMs
VXLAN: VXLAN Example Overview; Gateway Configuration; Jailhost-a (Network Configuration, VM Configuration, Jail Configuration); Jailhost-b (Network Configuration, Plain Jail Configuration, Network Switch Setup, VNET Jail Configuration, VM Configuration); VXLAN Multicast Troubleshooting
Conclusion and Further Reading

Introduction: Modern FreeBSD offers a range of virtualization options, from the traditional jail environment sharing the network stack with the host operating system, over vnet jails, which allow each jail to have its own network stack, to bhyve virtual machines running their own kernels/operating systems. Depending on individual requirements, there are different ways to configure the virtual network. Jail and VM management tools can ease the process by abstracting away (at least some of) the underlying complexities.

Course 8: Native Virtualization (Curs 8, Virtualizare nativă)

Course 8: Native Virtualization. Advanced Services for ISPs (Servicii avansate pentru ISP), 24 April 2017. SAISP, Course 8.

Outline: Introduction; KVM; KVM and libvirt; Conclusions; Questions.

Virtualization:
• abstraction of the resources of a computing system
• virtual memory
• virtual machines
• emulation
• storage virtualization
• system virtual machine
• process virtual machine

Types of virtualization:
• full virtualization
• hardware-assisted virtualization
• paravirtualization
• operating-system-level virtualization
• How does virtualization differ from emulation? With virtualization, most instructions execute on the physical system; emulation can target an architecture different from that of the physical system.
• hypervisor: hosted virtualization (type 2 hypervisor); bare metal virtualization (type 1 hypervisor)