Network Programming Guide



Recommended publications


Solaris Advanced User's Guide

Solaris Advanced User’s Guide Sun Microsystems, Inc. 4150 Network Circle Santa Clara, CA 95054 U.S.A. Part No: 806–7612–10 May 2002 Copyright 2002 Sun Microsystems, Inc. 4150 Network Circle, Santa Clara, CA 95054 U.S.A. All rights reserved. This product or document is protected by copyright and distributed under licenses restricting its use, copying, distribution, and decompilation. No part of this product or document may be reproduced in any form by any means without prior written authorization of Sun and its licensors, if any. Third-party software, including font technology, is copyrighted and licensed from Sun suppliers. Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and other countries, exclusively licensed through X/Open Company, Ltd. Sun, Sun Microsystems, the Sun logo, docs.sun.com, AnswerBook, AnswerBook2, SunOS, and Solaris are trademarks, registered trademarks, or service marks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc. The OPEN LOOK and Sun™ Graphical User Interface was developed by Sun Microsystems, Inc. for its users and licensees. Sun acknowledges the pioneering efforts of Xerox in researching and developing the concept of visual or graphical user interfaces for the computer industry. Sun holds a non-exclusive license from Xerox to the Xerox Graphical User Interface, which license also covers Sun’s licensees who implement OPEN LOOK GUIs and otherwise comply with Sun’s written license agreements. -

Introduction to UNIX What Is UNIX? Why UNIX? Brief History of UNIX Early UNIX History UNIX Variants

Introduction to UNIX
CS 2204, Class meeting 1
*Notes by Doug Bowman and other members of the CS faculty at Virginia Tech. Copyright 2001-2003.
(C) Doug Bowman, Virginia Tech, 2001-

What is UNIX?
• A modern computer operating system
• Operating system: “a program that acts as an intermediary between a user of the computer and the computer hardware”
• Software that manages your computer’s resources (files, programs, disks, network, …), e.g. Windows, MacOS
• Modern: features for stability, flexibility, multiple users and programs, configurability, etc.

Why UNIX?
• Used in many scientific and industrial settings
• Huge number of free and well-written software programs
• Open-source OS
• Internet servers and services run on UNIX
• Largely hardware-independent
• Based on standards

Brief history of UNIX
• Ken Thompson & Dennis Ritchie originally developed the earliest versions of UNIX at Bell Labs for internal use in 1970s
• Borrowed best ideas from other OSs
• Meant for programmers and computer experts
• Meant to run on “mini computers”

Early UNIX History
• Thompson also rewrote the operating system in high level language of his own design which he called B.
• The B language lacked many features and Ritchie decided to design a successor to B which he called C.
• They then rewrote UNIX in the C programming language to aid in portability.

UNIX variants
• Two main threads of development: Berkeley software distribution (BSD) and Unix System Laboratories System V
• Sun: SunOS, Solaris
• GNU: Linux (many flavors)
• SGI: Irix
• FreeBSD
• Hewlett-Packard: HP-UX
• Apple: OS X (Darwin)
• …

Layers in the UNIX System (diagram; recoverable labels: Users, User Interface, Library Interface, System Interface calls, Standard Utility Programs (shell, editors, compilers, etc.), User Mode)

UNIX Structure
• The kernel is the core of the UNIX system, controlling the system hardware and performing various low-level functions.

Managing Network File Systems in Oracle® Solaris 11.4

Managing Network File Systems in Oracle® Solaris 11.4 Part No: E61004 August 2021 Copyright © 2002, 2021, Oracle and/or its affiliates. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs) and Oracle computer documentation or other Oracle data delivered to or accessed by U.S. Government end users are "commercial computer software" or "commercial computer software documentation" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations.
As such, the use, reproduction, duplication, release, display, disclosure, modification, preparation of derivative works, and/or adaptation of i) Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs), ii) Oracle computer documentation and/or iii) other Oracle data, is subject to the rights and limitations specified in the license contained in the applicable contract. -

The Rise & Development of Illumos

Fork Yeah! The Rise & Development of illumos
Bryan Cantrill, VP, Engineering, [email protected], @bcantrill

WTF is illumos?
• An open source descendant of OpenSolaris
• ...which itself was a branch of Solaris Nevada
• ...which was the name of the release after Solaris 10
• ...and was open but is now closed
• ...and is itself a descendant of Solaris 2.x
• ...but it can all be called “SunOS 5.x”
• ...but not “SunOS 4.x” -- that's different
• Let's start at (or rather, near) the beginning...

SunOS: A people's history
• In the early 1990s, after a painful transition to Solaris, much of the SunOS 4.x engineering talent had left
• Problems compounded by the adoption of an immature SCM, the Network Software Environment (NSE)
• The engineers revolted: Larry McVoy developed a much simpler variant of NSE called NSElite (ancestor to git)
• Using NSElite (and later, TeamWare), Roger Faulkner, Tim Marsland, Joe Kowalski and Jeff Bonwick led a sufficiently parallelized development effort to produce Solaris 2.3, “the first version that worked”
• ...but with Solaris 2.4, management took over day-to-day operations of the release, and quality slipped again

Solaris 2.5: Do or die
• Solaris 2.5 absolutely had to get it right -- Sun had new hardware, the UltraSPARC-I, that depended on it
• To assure quality, the engineers “took over,” with Bonwick installed as the gatekeeper
• Bonwick granted authority to “rip it out if it's broken” -- an early BDFL model, and a template for later generations of engineering leadership
• Solaris 2.5 shipped on schedule and at quality

OpenAFS: BSD Clients 2009

OpenAFS: BSD Clients 2009
Matt Benjamin <[email protected]>

Who am I?
● OpenAFS developer interested in various new-code development topics
● for the last while, “portmaster” for BSD clients except DARWIN/MacOS X
● involves evolving the ports, interfacing with users and port maintainers in the BSD communities

Former Maintainers
● Tom Maher, MIT
● Jim Rees, University of Michigan (former Gatekeeper and Elder)
● Garret Wollman, MIT

Other Active
● Ben Kaduk (FreeBSD)
● Tony Jago (FreeBSD)
● Jamie Fournier (NetBSD)

Historical Remarks
● AFS originated in a BSD 4.2 environment
● extend UFS with coherence across a group of machines
● Terminology in common with BSD, SunOS, etc, e.g., vnodes
● Followed SunOS and Ultrix to Solaris and Digital Unix in Transarc period
● 386BSD released at 4.3 level in the Transarc period, some client development never publicly released (or independent of Transarc)

BSD Clients Today
● Descendants of 386BSD distribution and successors, not including DARWIN/MacOS X
● DARWIN separately maintained, though of course there are similarities

Today
● FreeBSD
● OpenBSD
Soon
● NetBSD
● OpenBSD
Not yet supported (as a client):
● Dragonfly BSD

BSD Port History I
● First 386BSD port probably that of John Kohl (MIT), for NetBSD
● First to appear in OpenAFS is FreeBSD, by Tom Maher
● Next to appear in OpenAFS is OpenBSD, by Jim Rees
● Significant evolution

UNIX History Page 1 Tuesday, December 10, 2002 7:02 PM

UNIX History Page 1 Tuesday, December 10, 2002 7:02 PM

CHAPTER 1 UNIX Evolution and Standardization

This chapter introduces UNIX from a historical perspective, showing how the various UNIX versions have evolved over the years since the very first implementation in 1969 to the present day. The chapter also traces the history of the different attempts at standardization that have produced widely adopted standards such as POSIX and the Single UNIX Specification. The material presented here is not intended to document all of the UNIX variants, but rather describes the early UNIX implementations along with those companies and bodies that have had a major impact on the direction and evolution of UNIX.

A Brief Walk through Time

There are numerous events in the computer industry that have occurred since UNIX started life as a small project in Bell Labs in 1969. UNIX history has been largely influenced by Bell Labs’ Research Editions of UNIX, AT&T’s System V UNIX, Berkeley’s Software Distribution (BSD), and Sun Microsystems’ SunOS and Solaris operating systems. The following list shows the major events that have happened throughout the history of UNIX. Later sections describe some of these events in more detail.

UNIX Filesystems: Evolution, Design, and Implementation

1969. Development on UNIX starts in AT&T’s Bell Labs.
1971. 1st Edition UNIX is released.
1973. 4th Edition UNIX is released. This is the first version of UNIX that had the kernel written in C.
1974. Ken Thompson and Dennis Ritchie publish their classic paper, “The UNIX Timesharing System” [RITC74].

Transitioning from Oracle® Solaris 10 to Oracle® Solaris 11.4 2 ■ Installation and Upgrade: ■ Instead of Jumpstart, Use Automated Installer

Transitioning From Oracle® Solaris 10 to Oracle® Solaris 11.4
August 2019 Part No: E89347

This document provides information to assist you to transition to Oracle Solaris 11 from Oracle Solaris 10.

Key Differences between Oracle Solaris 10 and Oracle Solaris 11

Upgrading from Oracle Solaris 10 to Oracle Solaris 11 requires a fresh installation of Oracle Solaris 11. Tools to help you make the transition include the following:
■ Oracle Solaris 10 branded zones. Migrate Oracle Solaris 10 instances to Oracle Solaris 10 zones on Oracle Solaris 11 systems.
■ ZFS shadow migration. Migrate UFS data from an existing file system, either local or NFS, to a new local ZFS file system. Do not mix UFS directories and ZFS file systems in the same file system hierarchy. You can also remotely mount UFS file systems from an Oracle Solaris 10 system onto an Oracle Solaris 11 system, or use the ufsrestore command on an Oracle Solaris 10 system to restore UFS data (ufsdump) into an Oracle Solaris 11 ZFS file system.
■ ZFS pool import. Export and disconnect storage devices that contain ZFS storage pools on your Oracle Solaris 10 systems and then import them into your Oracle Solaris 11 systems.
■ NFS file sharing. Share files from an Oracle Solaris 10 system to an Oracle Solaris 11 system. Do not mix NFS legacy shared ZFS file systems and ZFS NFS shared file systems. Use only ZFS NFS shared file systems.

For the main Oracle Solaris documentation, see Oracle Solaris Documentation. For additional documentation and examples, select a technology on the Oracle Solaris 11 Technology Spotlights page.

Jack F. Vogel Hillsboro, OR 97124 ‖ (503) 3303395 [email protected] ‖ Linkedin: Jack F

Jack F. Vogel
Hillsboro, OR 97124 ‖ (503) 3303395 [email protected] ‖ LinkedIn: Jack F. Vogel

Senior Software Engineer
New/Emerging Technology ‖ Software Development Lifecycle (SDLC) ‖ Cross-Functional Team Leadership

Seasoned Software Engineer with more than 20 years of demonstrated experience across diverse hardware and software development environments in the semiconductor and IT services industries. Expert in core kernel systems and network stack and drivers. Broad-based FreeBSD and Linux expertise. Adept at spearheading large-scale programs, streamlining processes, and developing innovative solutions for Fortune 500 employers and client companies based on next-generation technologies. Track record of accomplishments across progressively responsible leadership roles; designing, developing, testing, troubleshooting, debugging, and implementing various critical decision-making applications. Collaborating with C-suite executives and key stakeholders to ensure optimized ROI, and efficiently resolve enterprise-wide system issues.

CORE COMPETENCIES
Strategic Planning & Execution ‖ Process Reengineering Initiatives ‖ Systems Design/Development ‖ Quality Assurance
Product Development ‖ Software Debugging Techniques ‖ Project Management ‖ Cross-Functional Team Leadership
Client Needs Fulfillment ‖ Software Development Life Cycle ‖ Software Automation ‖ Emerging Technologies

Technical Summary: C, C++, JAVA, Assembler, Networking, TCP/IP, Drivers, Virtualization, SR-IOV, Kernel, Device Drivers, UNIX, Linux, FreeBSD, BSD, Solaris, SunOS, AIX,

Sun Microsystems, Inc. 2550 Garcia Avenue, Mountain View, CA 94043 News (415) 960-1300, FAX: (415) 969-9131

Sun Microsystems, Inc. 2550 Garcia Avenue, Mountain View, CA 94043 News (415) 960-1300, FAX: (415) 969-9131

FOR IMMEDIATE RELEASE
FOR MORE INFORMATION: Sun Microsystems, Inc., Erica Vener, (415) 336-3566

SUN ENHANCES COMPILERS
New SPARCompiler Optimization Technology Boosts Hardware Performance

MOUNTAIN VIEW, Calif. -- April 18, 1989 -- Sun Microsystems has enhanced its family of programming language compilers, significantly improving the run-time performance of Sun's SPARC™-based family of workstations and servers. Sun's compilers already are among the most robust in the industry, meaning they generate correct code. There are more than 1,850 SPARCware applications available from Sun Catalyst vendors developed using Sun compilers.

Now called SPARCompilers™, Sun's language products are available for all Sun platforms. They utilize new optimization technology that improves the performance of SPARC systems -- without changes to hardware -- by up to five percent according to the most recent industry benchmarks published by the Systems Performance Evaluation Cooperative (SPEC). In the case of many FORTRAN applications, performance can be boosted 15 percent or more.

Besides improved performance, SPARCompilers have been enhanced by new language features, ease-of-use improvements and expanded user documentation. SPARCompiler products are available today for the most recent versions of Sun FORTRAN, Pascal, Modula-2 and C++.

Sun Offers New Unbundled C Compiler

In addition, Sun introduced a new product, Sun C 1.0 -- its first C compiler sold separately from SunOS, Sun's UNIX operating system. By unbundling the compiler, Sun can provide more frequent updates and enhancements independent of operating system releases.

UBC: An Efficient Unified I/O and Memory Caching Subsystem for NetBSD

Proceedings of FREENIX Track: 2000 USENIX Annual Technical Conference, San Diego, California, USA, June 18–23, 2000

UBC: AN EFFICIENT UNIFIED I/O AND MEMORY CACHING SUBSYSTEM FOR NETBSD
Chuck Silvers

THE ADVANCED COMPUTING SYSTEMS ASSOCIATION
© 2000 by The USENIX Association. All Rights Reserved. For more information about the USENIX Association: Phone: 1 510 528 8649 FAX: 1 510 548 5738 Email: [email protected] WWW: http://www.usenix.org
Rights to individual papers remain with the author or the author's employer. Permission is granted for noncommercial reproduction of the work for educational or research purposes. This copyright notice must be included in the reproduced paper. USENIX acknowledges all trademarks herein.

UBC: An Efficient Unified I/O and Memory Caching Subsystem for NetBSD
Chuck Silvers, The NetBSD Project
[email protected], http://www.netbsd.org/

Abstract

This paper introduces UBC (“Unified Buffer Cache”), a design for unifying the filesystem and virtual memory caches of file data, thereby providing increased system performance. In this paper we discuss both the traditional BSD caching interfaces and new UBC interfaces, concentrating on the design decisions that were made as the design progressed.

• “buffer cache”: A pool of memory allocated during system startup which is dedicated to caching filesystem data and is managed by special-purpose routines. The memory is organized into “buffers,” which are variable-sized chunks of file data that are mapped to kernel virtual addresses as long as they retain their

CVS II: Parallelizing Software Development

CVS II: Parallelizing Software Development
Brian Berliner
Prisma, Inc. 5465 Mark Dabling Blvd. Colorado Springs, CO 80918 [email protected]

ABSTRACT

The program described in this paper fills a need in the UNIX community for a freely available tool to manage software revision and release control in a multi-developer, multi-directory, multi-group environment. This tool also addresses the increasing need for tracking third-party vendor source distributions while trying to maintain local modifications to earlier releases.

1. Background

In large software development projects, it is usually necessary for more than one software developer to be modifying (usually different) modules of the code at the same time. Some of these code modifications are done in an experimental sense, at least until the code functions correctly, and some testing of the entire program is usually necessary. Then, the modifications are returned to a master source repository so that others in the project can enjoy the new bug-fix or functionality. In order to manage such a project, some sort of revision control system is necessary.

Specifically, UNIX kernel development is an excellent example of the problems that an adequate revision control system must address. The SunOS kernel is composed of over a thousand files spread across a hierarchy of dozens of directories. Pieces of the kernel must be edited by many software developers within an organization. While undesirable in theory, it is not uncommon to have two or more people making modifications to the same file within the kernel sources in order to facilitate a desired change.

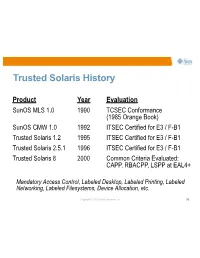

Trusted Solaris History

Trusted Solaris History

Product                 Year  Evaluation
SunOS MLS 1.0           1990  TCSEC Conformance (1985 Orange Book)
SunOS CMW 1.0           1992  ITSEC Certified for E3 / F-B1
Trusted Solaris 1.2     1995  ITSEC Certified for E3 / F-B1
Trusted Solaris 2.5.1   1996  ITSEC Certified for E3 / F-B1
Trusted Solaris 8       2000  Common Criteria Evaluated: CAPP, RBACPP, LSPP at EAL4+

Mandatory Access Control, Labeled Desktop, Labeled Printing, Labeled Networking, Labeled Filesystems, Device Allocation, etc.
Copyright © 2009 Sun Microsystems, Inc.

Solaris Trusted Extensions
• A redesign of the Trusted Solaris product using a layered architecture.
• An extension of the Solaris 10 security foundation providing access control policies based on the sensitivity/label of objects.
• A set of label-aware services which implement multilevel security.

Extending Solaris 10 Security Features
• Process Rights Management (Privileges)
  > Fine-grained privileges for X windows
  > Rights management applied to desktop actions
• User Rights Management (RBAC)
  > Labels and clearances
  > Additional desktop policies
• Solaris Containers (Zones)
  > Unique Sensitivity Labels
  > Trusted (label-based) Networking

Trusted Extensions in a Nutshell
• Every object has a label associated with it.