Capture the Flag: Military Simulation Meets Computer Games

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Learning Obstacles in the Capture the Flag Model

Learning Obstacles in the Capture The Flag Model Kevin Chung, NYU Polytechnic School of Engineering Julian Cohen, NYU Polytechnic School of Engineering Abstract game is an IRC channel in which competitors discuss Capture The Flag (CTF) competitions have been used in the competition and interact with the. the computer security community for education and evaluation objectives for over a decade. These competi- At its core, the CTF is a type of “open-book test” re- tions are often regarded as excellent approaches to learn volving around computer security. This lends itself to deeply technical concepts in a fun, non-traditional learn- testing competitors on very obscure types of knowledge. ing environment, but there are many difficulties associ- The technical knowledge that is communicated during a ated with developing and competing in a CTF event that CTF is often only mentioned in passing in documenta- are rarely discussed that counteract these benefits. CTF tion or a classroom. For example, the Python documen- competitions often have issues related to participation, tation briefly, and without much discussion, mentions quality assurance, and confusing challenges. These that the pickle module is “not intended to be secure problems affect the overall quality of a CTF competi- against erroneous or maliciously constructed data.” This tion and describe how effective they are at catalyzing ambiguity was leveraged in the 2012 Zombie Reminder learning and assessing skill. In this paper, we present (Hack.lu CTF) and Minesweeper (29c3 CTF) to create insights and lessons learned from organizing CSAW challenges that involved un-pickling untrusted data. The CTF, one of the largest and most successful CTFs. -

Game AI: the Shrinking Gap Between Computer Games and AI Systems

Game AI: The Shrinking Gap Between Computer Games and AI Systems Alexander Kleiner Post Print N.B.: When citing this work, cite the original article. Original Publication: Alexander Kleiner , Game AI: The Shrinking Gap Between Computer Games and AI Systems, 2005, chapter in Ambient Intelligence;: The evolution of technology, communication and cognition towards the future of human-computer interaction, 143-155. Copyright: IOS Press Postprint available at: Linköping University Electronic Press http://urn.kb.se/resolve?urn=urn:nbn:se:liu:diva-72548 Game AI: The shrinking gap between computer games and AI systems Alexander Kleiner Institut fur¨ Informatik Universitat¨ Freiburg 79110 Freiburg, Germany [email protected] Abstract. The introduction of games for benchmarking intelligent systems has a long tradition in AI (Artificial Intelligence) research. Alan Turing was one of the first to mention that a computer can be considered as intelligent if it is able to play chess. Today AI benchmarks are designed to capture difficulties that humans deal with every day. They are carried out on robots with unreliable sensors and actuators or on agents integrated in digital environments that simulate aspects of the real world. One example is given by the annually held RoboCup competitions, where robots compete in a football game but also fight for the rescue of civilians in a simulated large-scale disaster simulation. Besides these scientific events, another environment, also challenging AI, origi- nates from the commercial computer game market. Computer games are nowa- days known for their impressive graphics and sound effects. However, the latest generation of game engines shows clearly that the trend leads towards more re- alistic physics simulations, agent centered perception, and complex player inter- actions due to the rapidly increasing degrees of freedom that digital characters obtain. -

Online Gaming

American Journal of Applied Sciences 2 (3): 622-625, 2005 ISSN 1546-9239 © Science Publications, 2005 Online Gaming Kevin Curran, Paul Canning, Martin Laughlin, Ciarán McGowan and Rory Carlin Internet Technologies Research Group University of Ulster, Magee Campus, Northland Road, Northern Ireland, UK Abstract: Computer gaming is a medium by which we can entertain ourselves, a medium that has expanded to the online worldwide market as part as globalization. The growth of online gaming has close ties with the use of broadband, as a good online gaming experience requires a broadband connection. Through online gaming, people can play and communicate with each other freely in almost any country, at any given time. This paper examines the phenomenon of online gaming. Key words: Online gaming INTRODUCTION for-all, or lock the entrances to the areas in which they play as simply play amongst a selected group of people. With the advent of the ARPANET and the development Online accounts like MPlayer also adapted their of TELNEX (1971), e-mail gaming became popular. formats to suit other forms of online gaming which The use of e-mail to play games over the network was were now becoming popular. One of their most borrowed from ‘playing-by-post’ which was popular innovative changes came with the arrival of the PC’s before the advent of widespread internet usage [1]. The intergalactic squad based piloting game, ‘Freespace’. It engine that was developed and used was the MUD’s was similar to the older versions in the way players (Multi-User Dungeon). This was the use of a server could set up, open and lock their games from other program that users logged into to play the game based users but now they could do much more. -

Gamebots: a 3D Virtual World Test-Bed for Multi-Agent Research Rogelio Adobbati, Andrew N

Gamebots: A 3D Virtual World Test-Bed For Multi-Agent Research Rogelio Adobbati, Andrew N. Marshall, Gal Kaminka, Steven Schaffer, Chris Sollitto Andrew Scholer, Sheila Tejada Computer Science Department Information Sciences Institute Carnegie Mellon University University of Southern California Pittsburgh, PA 15213 4676 Admiralty Way [email protected] Marina del Rey, CA 90292 {srs3, cs3} @andrew.cmu.edu {rogelio, amarshal, ascholer, tejada} @isi.edu ABSTRACT To address these difficulties, we have been developing a new type This paper describes Gamebots, a multi-agent system of research infrastructure. Gamebots is a project started at the infrastructure derived from an Internet-based multi-player video University of Southern California’s Information Sciences game called Unreal Tournament. The game allows characters to Institute, and jointly developed by Carnegie Mellon University. be controlled over client-server network connections by feeding The project seeks to turn a fast-paced multi-agent interactive sensory information to client players (humans and agents). Unlike computer game into a domain for research in artificial intelligence other standard test-beds, the Gamebots domain allows both human (AI) and multi-agent systems (MAS). It consists of a players and agents, or bots, to play simultaneously; thus providing commercially developed, complex, and dynamic game engine, the opportunity to study human team behavior and to construct which is extended and enhanced to provide the important agents that play collaboratively with humans. The Gamebots capabilities required for research. system provides a built-in scripting language giving interested Gamebots provides several unique opportunities for multi-agent researchers the ability to create their own multi-agent tasks and and artificial intelligence research previously unavailable with environments for the simulation. -

Revolutionizing the Visual Design of Capture the Flag (CTF) Competitions

Revolutionizing the Visual Design of Capture the Flag (CTF) Competitions Jason Kaehler1, Rukman Senanayake2, Phillip Porras2 1 Asylum Labs, 1735 Wharf Road, Suite A, Capitola, CA 95010, USA 2 SRI International, Menlo Park, CA, 95125, USA [email protected], [email protected], [email protected] Abstract. There are a variety of cyber-security challenge tournaments held within the INFOSEC and Hacker communities, which among their benefits help to promote and identify emerging talent. Unfortunately, most of these competi- tions are rather narrow in reach, being of interest primarily to those enthusiasts who are already well versed in cyber security. To attract a broader pool of younger generation participants requires one to make such events more engag- ing and intellectually accessible. The way these tournaments are currently con- ducted and presented to live audiences is rather opaque, if not unintelligible to most who encounter them. This paper presents an ongoing effort to bridge the presentation gap necessary to make cyber security competitions more attractive and accessible to a broader audience. We present the design of a new but famil- iar model for capturing the interplay, individual achievements, and tactical dra- ma that transpires during one form of cyber security competition. The main user interface and presentation paradigm in this research borrows from those of es- tablished e-sports, such as League of Legends and Overwatch. Our motivation is to elevate the current format of cyber security competition events to incorporate design and presentation elements that are informed by techniques that have evolved within the e-sports community. We apply the physics models and bat- tlefield visualizations of virtual world gaming environments in a manner that captures the intellectual challenges, team achievements, and tactical gameplay that occur in a popular form of cyber security tournament, called the Capture The Flag (CTF) competition. -

An Authoring Tool for Location-Based Mobile Games with Augmented Reality Features

SBC – Proceedings of SBGames 2015 | ISSN: 2179-2259 Computing Track – Full Papers An Authoring Tool for Location-based Mobile Games with Augmented Reality features Carleandro Noleto,ˆ Messias Lima, Lu´ıs Fernando Maia, Windson Viana, Fernando Trinta Federal University of Ceara,´ Group of Computer Networks, Software Engineering and Systems (GREat), Brazil Abstract location-based mobile games, where people with no-prior knowl- edge of programing languages or application development skills This paper presents an authoring tool for building location-based can design and execute these applications. This authoring tool uses mobile games, enhanced with augmented reality capabilities. These a visual notation to create games based on a set of quests. Each games are a subclass of pervasive games in which the gameplay quest represents a task (or group of tasks) that a player or a group evolves and progresses according to player’s location. We have con- of players must accomplish to reach the game objective. Each task ducted a literature review on authoring tools and pervasive games is associated with activities such as ”Moving to a specific point”, to (i) collect the common scenarios of current location-based mo- ”Collecting a virtual object”, and so on. In our solution, these bile games, and (ii) features of authoring tools for these games. tasks are known as Game Mechanics. Quests and Game Mechan- Additionally, we have also used the focus groups methodology to ics are concepts in which our authoring tool is based upon, and find new scenarios for location-based mobile games, in particular reflect common activities that most LBMGs require, and that were regarding how augmented reality can be used in these games. -

Doom Expansion Rules

ALERT! ALERT! ALERT! All UAC personnel be advised. A new outbreak of invaders has been detected. These new invaders display powerful abilities, and personnel should be extremely cautious of them. We have compiled the following information about the new types of invaders. Species Designation: Cherub Species Designation: Vagary These small flying invaders are extremely difficult to hit. Although we surmise that this creature is less powerful They move very quickly and marines should aim carefully than the cyberdemon, its abilities are largely a mystery to when attacking them. us. It seems to have telekinetic abilities, however, so be careful! Species Designation: Zombie Commando IMPORTANT ADDENDUM! These invaders are UAC security personnel who have been possessed. They are as slow as ordinary zombies, All UAC personnel be advised. Our scientists have discovered and they are slightly easier to kill. Beware, however, as evidence of a powerful alien device that was used to defeat the they are able to fire the weapons in their possession. invaders thousands of years ago. If you discover the whereabouts of this device, you should capture it by any means necessary! Species Designation: Maggot Although they are easier to kill than demons, marines should avoid letting these invaders get within melee range. Their two heads can attack independently of each other, and each can inflict severe injury. Like demons, maggots are capable of pre-emptively attacking enemies who get too close. Species Designation: Revenant These skeletal invaders can attack from around corners Weapon Designation: Soul Cube with their shoulder-mounted missile launchers. Fortunately, their rockets are not very fast and marines can often avoid This alien weapon appears to be powered by life energy. -

Facebook's Open Source Capture the Flag

https://doi.org/10.48009/1_iis_2020_202-212 Issues in Information Systems Volume 21, Issue 1, pp. 202-212, 2020 A COMPARISON STUDY OF TWO CYBERSECURITY LEARNING SYSTEMS: FACEBOOK’S OPEN SOURCE CAPTURE THE FLAG AND CTFD Rhonda G. Chicone, Purdue University Global, [email protected] Susan Ferebee, Purdue University Global, [email protected] ABSTRACT There is a high demand for innovative and smart technologies to improve cybersecurity education and produce skilled cybersecurity professionals in a faster, more engaging manner. Gamification, in the form of Capture the Flag (CTF) platforms, are increasingly being used by educational institutions and in private and public industry sectors. A previous 2018 study by Chicone and Burton investigated Facebook’s CTF platform as an open source inexpensive learning and assessment tool for undergraduate and graduate IT/cybersecurity adult students for an online university. In this comparative expansion study, the 2018 study was replicated but this time examining and comparing Facebook’s CTF platform with another CTF platform called CTFd. The studies were evaluated on assessment capability. It was found that Facebook’s CTF had very little to aid with assessment while CTFd provided many formative assessment features that were valuable for students as well as for faculty in determining needs for future learning. Keywords Cybersecurity, Information Security, Capture the Flag, Cybergames, Cyber Range, Gamification, Assessment, Learning Outcomes INTRODUCTION Moving toward 2020, demand for cybersecurity professionals continues to increase. Casesa (2019) points to five positions, specifically that are in the highest demand in 2020. These positions are Cybersecurity Manager/Administrator, Cybersecurity Consultant, Network Architect and Engineer, Cybersecurity Analyst, and Cybersecurity Engineer. -

1 Call of Duty World League 2018 Official Handbook Effective Date

Call of Duty World League 2018 Official Handbook Effective Date: May 11th, 2018 1. Introduction This Official Handbook (“Handbook” or “Rules”) of the 2018 Call of Duty World League (“CWL” or “Competition”) applies to all Teams, Team Owners, Team Managers, Team Coaches and Players (each a “Participant” and, collectively the “Participants” or “You”) who are participating in the Competition or any event and/or tournament related to the Competition (each, an “Event” or “Tournament”). This Handbook forms a contract between Participants, on the one hand, and Activision Publishing, Inc., Major League Gaming Corp., and applicable affiliates, and the operators of the Call of Duty World League (collectively, the “Administration), on the other hand. This Handbook governs competitive play of Call of Duty in the Competition. These Rules establish the general rules of tournament play, including rules governing player eligibility, tournament structure, points structure, prize awards, and player conduct. These Rules also contain limitations of liability, license grants, and other legally binding contractual terms. Each Participant is required to read, understand, and agree to these Rules before participating in the Competition. THE HANDBOOK AND ALL DISPUTES RELATED TO OR ARISING OUT OF YOUR PARTICIPATION IN THE COMPETITION ARE GOVERNED BY A BINDING ARBITRATION CLAUSE IN SECTION 15 AND A WAIVER OF CLASS ACTION RIGHTS. THAT CLAUSE AFFECTS YOUR LEGAL RIGHTS AND REMEDIES, AND YOU SHOULD REVIEW IT CAREFULLY BEFORE ACCEPTING THIS HANDBOOK. The Competition is made up of Online Activity (GameBattles Ladders, Weekly Tournaments, National Circuit) and Offline Activity (Global Opens, Sanctioned Events, Pro League, and Championships) across three Regions (North America, Europe, and Asia Pacific). -

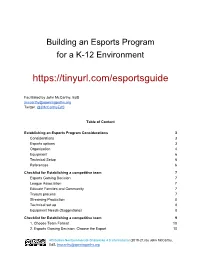

Starting an Esports Program Pdf 109.51 KB

Building an Esports Program for a K-12 Environment https://tinyurl.com/esportsguide Facilitated by John McCarthy, EdS [email protected] Twitter: @JMcCarthyEdS Table of Content Establishing an Esports Program Considerations 3 Considerations 3 Esports options 3 Organization 4 Equipment 6 Technical Setup 6 References 6 Checklist for Establishing a competitive team 7 Esports Gaming Decision 7 League Association 7 Educate Families and Community 7 Tryouts process 7 Streaming Production 8 Technical set up 8 Equipment Needs (Suggestions) 8 Checklist for Establishing a competitive team 9 1. Choose Team Format 10 2. Esports Gaming Decision: Choose the Esport 10 Attribution-NonCommercial-ShareAlike 4.0 International (2019-21) by John McCarthy, EdS, [email protected] League Association (ie. NASEF, HSEL & PlayVS ) 10 Equipment Needs (Suggestions) 11 Streaming Production 11 Educate Families and Community 11 Tryouts process 12 Technical set up 12 References ● NASEF ● 2020 Guide to K-12 Esports ● British Esports: Parents’ Guide ● National Association of Esports Coaches and Directors ● List of games, documentaries, and esports celebrities ● PlayVs Resources Attribution-NonCommercial-ShareAlike 4.0 International (2019-21) by John McCarthy, EdS, [email protected] Establishing an Esports Program Considerations Descriptions Considerations Will we start a school team, a club team, or a recreational club? Esports options ● School Team Sanctioned by the school or district as an official sport. Has access to all related resources and privileges provided to sports teams. Practices are held by a coach who is hired by the district, and matches are held by the school, and elsewhere. Transportation is provided by the school based on school and district guidelines. -

Ka-Boom Licence to Thrill on a Mission LIFTING the LID on VIDEO GAMES

ALL FORMATS LIFTING THE LID ON VIDEO GAMES Licence Ka-boom When games look to thrill like comic books Tiny studios making big licensed games On a mission The secrets of great campaign design Issue 33 £3 wfmag.cc Yacht Club’s armoured hero goes rogue 01_WF#33_Cover V3_RL_DH_VI_HK.indd 2 19/02/2020 16:45 JOIN THE PRO SQUAD! Free GB2560HSU¹ | GB2760HSU¹ | GB2760QSU² 24.5’’ 27’’ Sync Panel TN LED / 1920x1080¹, TN LED / 2560x1440² Response time 1 ms, 144Hz, FreeSync™ Features OverDrive, Black Tuner, Blue Light Reducer, Predefined and Custom Gaming Modes Inputs DVI-D², HDMI, DisplayPort, USB Audio speakers and headphone connector Height adjustment 13 cm Design edge-to-edge, height adjustable stand with PIVOT gmaster.iiyama.com Team Fortress 2: death by a thousand cuts? t may be 13 years old, but Team Fortress 2 is to stop it. Using the in-game reporting tool does still an exceptional game. A distinctive visual nothing. Reporting their Steam profiles does nothing. style with unique characters who are still Kicking them does nothing because another four will I quoted and memed about to this day, an join in their place. Even Valve’s own anti-cheat service open-ended system that lets players differentiate is useless, as it works on a delay to prevent the rapid themselves from others of the same class, well- JOE PARLOCK development of hacks that can circumvent it… at the designed maps, and a skill ceiling that feels sky-high… cost of the matches they ruin in the meantime. Joe Parlock is a even modern heavyweights like Overwatch and So for Valve to drop Team Fortress 2 when it is in freelance games Paladins struggle to stand up to Valve’s classic. -

Gaming Practices, Self-Care, and Thriving Under Neoliberalism

University of Massachusetts Amherst ScholarWorks@UMass Amherst Doctoral Dissertations Dissertations and Theses November 2019 Gaming For Life: Gaming Practices, Self-Care, and Thriving Under Neoliberalism Brian Myers University of Massachusetts Amherst Follow this and additional works at: https://scholarworks.umass.edu/dissertations_2 Part of the Critical and Cultural Studies Commons Recommended Citation Myers, Brian, "Gaming For Life: Gaming Practices, Self-Care, and Thriving Under Neoliberalism" (2019). Doctoral Dissertations. 1801. https://doi.org/10.7275/15065736 https://scholarworks.umass.edu/dissertations_2/1801 This Open Access Dissertation is brought to you for free and open access by the Dissertations and Theses at ScholarWorks@UMass Amherst. It has been accepted for inclusion in Doctoral Dissertations by an authorized administrator of ScholarWorks@UMass Amherst. For more information, please contact [email protected]. Gaming For Life: Gaming Practices, Self-Care, and Thriving Under Neoliberalism A Dissertation Presented by BRIAN H. MYERS Submitted to the Graduate School of the University of Massachusetts Amherst in partial fulfillment of the requirements for the degree of Doctor of Philosophy September 2019 Department of Communication © Copyright by Brian H. Myers 2019 All Rights Reserved Gaming For Life: Gaming Practices, Self-Care, and Thriving Under Neoliberalism A Dissertation Presented By BRIAN H. MYERS Approved as to style and content by: ______________________________________________________________ Emily West, Chair _____________________________________________________________ Lisa Henderson, Member _______________________________________________________________ TreaAndrea Russworm, Member _____________________________________________________________________ Sut Jhally, Department Chair Department of Communication ACKNOWLEDGEMENTS If this project has taught me one thing, it is that the cultural image we have of the individual writer, sitting alone at a desk, spilling out words from his genius is a fiction.