How Languages Affect Design Patterns

Recommended publications

A Design Pattern Detection Tool for Code Reuse

DP-CORE: A Design Pattern Detection Tool for Code Reuse

Themistoklis Diamantopoulos, Antonis Noutsos and Andreas Symeonidis, Electrical and Computer Engineering Dept., Aristotle University of Thessaloniki, Thessaloniki, Greece

Keywords: Design Pattern Detection, Static Code Analysis, Reverse Engineering, Code Reuse.

Abstract: In order to maintain, extend or reuse software projects one has to primarily understand what a system does and how well it does it. And, while in some cases information on system functionality exists, information covering the non-functional aspects is usually unavailable. Thus, one has to infer such knowledge by extracting design patterns directly from the source code. Several tools have been developed to identify design patterns, however most of them are limited to compilable and in most cases executable code, they rely on complex representations, and do not offer the developer any control over the detected patterns. In this paper we present DP-CORE, a design pattern detection tool that defines a highly descriptive representation to detect known and define custom patterns. DP-CORE is flexible, identifying exact and approximate pattern versions even in non-compilable code. Our analysis indicates that DP-CORE provides an efficient alternative to existing design pattern detection tools.

1 INTRODUCTION

Developers need to understand existing projects in order to maintain, extend, or reuse them. However, understanding usually comes down to understanding the source code of a project, which is inherently difficult, especially when the original software architecture and [...]

[...] executable. As a result, developers cannot exploit the source code of other systems without first resolving their dependencies and executing them correctly. Secondly, pattern representations in most tools are not intuitive, thus resulting in black box systems that do not allow the developer any control over the detected patterns.

On the Interaction of Object-Oriented Design Patterns and Programming Languages

On the Interaction of Object-Oriented Design Patterns and Programming Languages

Gerald Baumgartner, Department of Computer and Information Science, The Ohio State University, 395 Dreese Lab., 2015 Neil Ave., Columbus, OH 43210–1277, USA. Konstantin Läufer, Department of Mathematical and Computer Sciences, Loyola University Chicago, 6525 N. Sheridan Rd., Chicago, IL 60626, USA. Vincent F. Russo, Lycos, Inc., 400–2 Totten Pond Rd., Waltham, MA 02154, USA.

February 29, 1996. arXiv:1905.13674v1 [cs.PL] 31 May 2019

Abstract: Design patterns are distilled from many real systems to catalog common programming practice. However, some object-oriented design patterns are distorted or overly complicated because of the lack of supporting programming language constructs or mechanisms. For this paper, we have analyzed several published design patterns looking for idiomatic ways of working around constraints of the implementation language. From this analysis, we lay a groundwork of general-purpose language constructs and mechanisms that, if provided by a statically typed, object-oriented language, would better support the implementation of design patterns and, transitively, benefit the construction of many real systems. In particular, our catalog of language constructs includes subtyping separate from inheritance, lexically scoped closure objects independent of classes, and multimethod dispatch. The proposed constructs and mechanisms are not radically new, but rather are adopted from a variety of languages and programming language research and combined in a new, orthogonal manner. We argue that by describing design patterns in terms of the proposed constructs and mechanisms, pattern descriptions become simpler and, therefore, accessible to a larger number of language communities.

Design Pattern Implementation in Java and AspectJ

Design Pattern Implementation in Java and AspectJ

Jan Hannemann, University of British Columbia, 201-2366 Main Mall, Vancouver B.C. V6T 1Z4, jan [at] cs.ubc.ca. Gregor Kiczales, University of British Columbia, 201-2366 Main Mall, Vancouver B.C. V6T 1Z4, gregor [at] cs.ubc.ca.

ABSTRACT

AspectJ implementations of the GoF design patterns show modularity improvements in 17 of 23 cases. These improvements are manifested in terms of better code locality, reusability, composability, and (un)pluggability.

The degree of improvement in implementation modularity varies, with the greatest improvement coming when the pattern solution structure involves crosscutting of some form, including one object playing multiple roles, many objects playing one role, or an object playing roles in multiple pattern instances.

Categories and Subject Descriptors

D.2.11 [Software Engineering]: Software Architectures – patterns, information hiding, and languages; D.3.3 [Programming Languages]: Language Constructs and Features – [...]

[...] successor in the chain. The event handling mechanism crosscuts the Handlers.

When the GoF patterns were first identified, the sample implementations were geared to the current state of the art in object-oriented languages. Other work [19, 22] has shown that implementation language affects pattern implementation, so it seems natural to explore the effect of aspect-oriented programming techniques [11] on the implementation of the GoF patterns.

As an initial experiment we chose to develop and compare Java [27] and AspectJ [25] implementations of the 23 GoF patterns. AspectJ is a seamless aspect-oriented extension to Java, which means that programming in AspectJ is effectively programming in Java plus aspects.

By focusing on the GoF patterns, we are keeping the purpose, intent, and applicability of 23 well-known patterns, and only allowing the solution structure and solution implementation to change.

A Design Pattern Generation Tool

Project Number: GFP 0801

A DESIGN PATTERN GENERATION TOOL

A MAJOR QUALIFYING PROJECT REPORT SUBMITTED TO THE FACULTY OF WORCESTER POLYTECHNIC INSTITUTE IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF BACHELOR OF SCIENCE BY CAITLIN VANDYKE

APRIL 23, 2009

APPROVED: PROFESSOR GARY POLLICE, ADVISOR

ABSTRACT

This project determines the feasibility of a tool that, given code, can convert it into equivalent code (e.g. code that performs the same task) in the form of a specified design pattern. The goal is to produce an Eclipse plugin that performs this task with minimal input, such as special tags. The final edition of this plugin will be released to the Eclipse community.

ACKNOWLEDGEMENTS

This project was completed by Caitlin Vandyke with gratitude to Gary Pollice for his advice and assistance, as well as reference materials and troubleshooting.

TABLE OF CONTENTS

Abstract
Acknowledgements
Table of Contents
Table of Illustrations

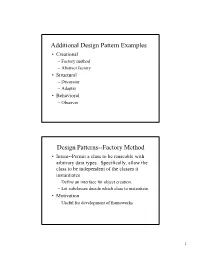

Additional Design Pattern Examples Design Patterns--Factory Method

Additional Design Pattern Examples
• Creational
  – Factory method
  – Abstract factory
• Structural
  – Decorator
  – Adapter
• Behavioral
  – Observer

Design Patterns--Factory Method
• Intent--Permit a class to be reusable with arbitrary data types. Specifically, allow the class to be independent of the classes it instantiates
  – Define an interface for object creation.
  – Let subclasses decide which class to instantiate.
• Motivation
  – Useful for development of frameworks

Factory Method--Continued
• Consider a document-processing framework
  – High-level support for creating, opening, saving documents
  – Consistent method calls for these commands, regardless of document type (word-processor, spreadsheet, etc.)
  – Logic to implement these commands delegated to specific types of document objects.
  – May be some operations common to all document types.

Factory Method--Continued
Document Processing Example-General Framework:
[Class diagram: Document (getTitle( ), newDocument( ), openDocument( ), ...), Application (newDocument( ), openDocument( ), ...), MyDocument (newDocument( ), openDocument( ), ...); association "Edits" between Application (1) and Document (*).]
Problem: How can an Application object create instances of specific document classes without being application-specific itself.

Factory Method--Continued
Use of a document creation “factory”:
[Class diagram: Document, Application and MyDocument as above, plus the interface DocumentFactoryIF (createDocument(type:String):Document) and the class DocumentFactory; association "Requests-creation" between requestor (*) and creator (1).]
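The document-creation factory from the slides can be sketched in Java roughly as follows. This is a minimal sketch, not the slide author's code: `Document`, `Application`, `DocumentFactoryIF`, `DocumentFactory` and `createDocument(type:String):Document` come from the diagram, while `TextDocument`, `SpreadsheetDocument`, the type strings and `documentCount()` are hypothetical names added for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Product role from the slides: the framework only knows this abstraction.
abstract class Document {
    private final String title;

    Document(String title) {
        this.title = title;
    }

    String getTitle() {
        return title;
    }
}

// Hypothetical concrete documents (the slides only name "MyDocument").
class TextDocument extends Document {
    TextDocument() {
        super("Untitled text");
    }
}

class SpreadsheetDocument extends Document {
    SpreadsheetDocument() {
        super("Untitled spreadsheet");
    }
}

// Factory interface as named in the second diagram.
interface DocumentFactoryIF {
    Document createDocument(String type);
}

// The only class that knows the concrete document types.
class DocumentFactory implements DocumentFactoryIF {
    @Override
    public Document createDocument(String type) {
        switch (type) {
            case "text":
                return new TextDocument();
            case "spreadsheet":
                return new SpreadsheetDocument();
            default:
                throw new IllegalArgumentException("Unknown document type: " + type);
        }
    }
}

// Requestor role: creates documents without naming any concrete class.
class Application {
    private final DocumentFactoryIF factory;
    private final List<Document> documents = new ArrayList<>();

    Application(DocumentFactoryIF factory) {
        this.factory = factory;
    }

    Document newDocument(String type) {
        Document document = factory.createDocument(type);
        documents.add(document);
        return document;
    }

    int documentCount() {
        return documents.size();
    }
}

class FactoryMethodDemo {
    public static void main(String[] args) {
        Application app = new Application(new DocumentFactory());
        Document doc = app.newDocument("spreadsheet");
        System.out.println(doc.getTitle() + " (" + doc.getClass().getSimpleName() + ")");
    }
}
```

Because `Application` depends only on `DocumentFactoryIF`, supporting a new document type in this sketch means changing the factory, not the application class.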

Abstract Factory and Singleton Design Patterns to Create Decorator Pattern Objects in Web Application

International Journal of Advanced Information Technology (IJAIT) Vol. 1, No.5, October 2011

ABSTRACT FACTORY AND SINGLETON DESIGN PATTERNS TO CREATE DECORATOR PATTERN OBJECTS IN WEB APPLICATION

Vijay K Kerji, Department of Computer Science and Engineering, PDA College of Engineering, Gulbarga, India

ABSTRACT

Software Design Patterns are reusable designs providing common solutions to the similar kind of problems in software development. Creational patterns are that category of design patterns which aid in how objects are created, composed and represented. They abstract the creation of objects from clients thus making the application more adaptable to future requirements changes. In this work, it has been proposed and implemented the creation of objects involved in Decorator Design Pattern. (Decorator Pattern Adds Additional responsibilities to the individual objects dynamically and transparently without affecting other objects). This enhanced the reusability of the application design, made application more adaptable to future requirement changes and eased the system maintenance. Proposed DMS (Development Management System) web application is implemented using .NET framework, ASP.NET and C#.

KEYWORDS

Design Patterns, Abstract Factory, Singleton, Decorator, Reusability, Web Application

1. INTRODUCTION

Design Patterns refer to reusable or repeatable solutions that aim to solve similar design problems during development process. Various design patterns are available to support development process. Each pattern provides the main solution for particular problem. From developer’s perspectives, design patterns produce a more maintainable design. While from user’s perspectives, the solutions provided by design patterns will enhance the usability of web applications [1] [2]. Normally, developers use their own design for a given software requirement, which may be new each time they design for a similar requirement.
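The combination described in this abstract, singleton factories that hand out decorator objects, might look roughly like the Java sketch below. It is not the paper's code (the paper's application is written in C# on ASP.NET); every name here (`Page`, `PageDecoratorFactory`, `HtmlDecoratorFactory`, and so on) is a hypothetical stand-in chosen for illustration.

```java
// Component role of the Decorator pattern.
interface Page {
    String render();
}

class BasicPage implements Page {
    @Override
    public String render() {
        return "content";
    }
}

// Base decorator: wraps another Page and adds behaviour around it.
abstract class PageDecorator implements Page {
    protected final Page inner;

    PageDecorator(Page inner) {
        this.inner = inner;
    }
}

class PlainHeader extends PageDecorator {
    PlainHeader(Page inner) { super(inner); }
    @Override public String render() { return "Title | " + inner.render(); }
}

class PlainFooter extends PageDecorator {
    PlainFooter(Page inner) { super(inner); }
    @Override public String render() { return inner.render() + " | End"; }
}

class HtmlHeader extends PageDecorator {
    HtmlHeader(Page inner) { super(inner); }
    @Override public String render() { return "<h1>Title</h1>" + inner.render(); }
}

class HtmlFooter extends PageDecorator {
    HtmlFooter(Page inner) { super(inner); }
    @Override public String render() { return inner.render() + "<footer>End</footer>"; }
}

// Abstract factory: one method per member of the decorator family.
interface PageDecoratorFactory {
    Page addHeader(Page page);
    Page addFooter(Page page);
}

// Concrete factories are singletons: private constructor, one shared instance.
final class PlainDecoratorFactory implements PageDecoratorFactory {
    private static final PlainDecoratorFactory INSTANCE = new PlainDecoratorFactory();

    private PlainDecoratorFactory() { }

    static PlainDecoratorFactory getInstance() { return INSTANCE; }

    @Override public Page addHeader(Page page) { return new PlainHeader(page); }
    @Override public Page addFooter(Page page) { return new PlainFooter(page); }
}

final class HtmlDecoratorFactory implements PageDecoratorFactory {
    private static final HtmlDecoratorFactory INSTANCE = new HtmlDecoratorFactory();

    private HtmlDecoratorFactory() { }

    static HtmlDecoratorFactory getInstance() { return INSTANCE; }

    @Override public Page addHeader(Page page) { return new HtmlHeader(page); }
    @Override public Page addFooter(Page page) { return new HtmlFooter(page); }
}

class DecoratorFactoryDemo {
    public static void main(String[] args) {
        PageDecoratorFactory factory = HtmlDecoratorFactory.getInstance();
        Page page = factory.addFooter(factory.addHeader(new BasicPage()));
        System.out.println(page.render());
    }
}
```

Client code in this sketch never names a concrete decorator class, so switching the whole family from HTML to plain text only requires asking a different factory for its instance.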

Java Design Patterns

Java Design Patterns

Contents

1 Introduction to Design Patterns
  1.1 Introduction
  1.2 What are Design Patterns
  1.3 Why use them
  1.4 How to select and use one
  1.5 Categorization of patterns
    1.5.1 Creational patterns
    1.5.2 Structural patterns
    1.5.3 Behavior patterns
2 Adapter Design Pattern
  2.1 Adapter Pattern
  2.2 An Adapter to rescue
  2.3 Solution to the problem
  2.4 Class Adapter
  2.5 When to use Adapter Pattern
  2.6 Download the Source Code
3 Facade Design Pattern
  3.1 Introduction
  3.2 What is the Facade Pattern
  3.3 Solution to the problem
  3.4 Use of the Facade Pattern
  3.5 Download the Source Code

Design Patterns Past and Future

Proceedings of Informing Science & IT Education Conference (InSITE) 2011

Design Patterns Past and Future

Aleksandar Bulajic, Metropolitan University, Belgrade, Serbia

Abstract

A very important part of the software development process is service or component internal design and implementation. Design Patterns (Gamma et al., 1995) provide list of the common patterns used in the object-oriented software design process. The primary goal of the Design Patterns is to reuse good practice in the design of new developed components or applications. Another important reason of using Design Patterns is improving common application design understanding and reducing communication overhead by reusing the same generic names for implemented solution. Patterns are designed to capture best practice in a specific domain. A pattern is supposed to present a problem and a solution that is supported by an example. It is always worth to listen to an expert advice, but keep in mind that common sense should decide about particular implementation, even in case when are used already proven Design Patterns. Critical view and frequent and well designed testing would give an answer about design validity and a quality. Design Patterns are templates and cannot be blindly copied. Each design pattern records design idea and shall be adapted to particular implementation. Using time to research and analyze existing solutions is recommendation supported by large number of experts and authorities and fits very well in the pattern basic philosophy; reuse solution that you know has been successfully implemented in the past.

Sections 2 and 3 are dedicated to the Design Patterns history and theory as well as literature survey.

1. Difference Between Factory Pattern and Abstract Factory Pattern

1. Difference between Factory Pattern and Abstract Factory Pattern

| S.No | Factory Pattern | Abstract Factory Pattern |
|---|---|---|
| 1 | Create object through inheritance | Create object through composition |
| 2 | Produce only one product | Produce families of products |
| 3 | Implements code in the abstract creator that make use of the concrete type that sub class produces | Concrete factories implements factory method to create product |

2. Difference between Abstract Factory Pattern And Builder Pattern

| S.No | Builder Pattern | Abstract Factory Pattern |
|---|---|---|
| 1 | In Builder Pattern, there will be one Director class which will instruct Builder class to build the different parts/properties of our object and finally retrieve the object. | Abstract Factory Pattern will return the instance directly. |
| 2 | It will have reference to the created object. | It does not keep the track of its created object. |

3. Difference between Builder Pattern And Composite Pattern

| S.No | Builder Pattern | Composite Pattern |
|---|---|---|
| 1 | It is used to create group of objects of predefined types. | It creates Parent - Child relations between our objects. |

4. Difference between MVC and MVP

| S.No | MVP | MVC |
|---|---|---|
| 1 | MVP is a bit more complex to implement than MVC. Also, it has additional layer for view interfaces. | MVC is easier to implement than MVP. |
| 2 | The request is always received by the View and delegated to the presenter which in turn gets the data and does the processing | The request is received by the controller which in turn gets the required data and loads up the appropriate view |
| 3 | The presentation and view logic can be [...] | The controller logic can be unit tested. |
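The Builder row of the second comparison (a Director instructs a Builder part by part, then retrieves the finished object the Builder has been holding) can be illustrated with a small Java sketch. All names below (`Meal`, `MealBuilder`, `VeggieMealBuilder`, `Director`) are hypothetical and chosen only for this illustration.

```java
import java.util.ArrayList;
import java.util.List;

// The product that is assembled step by step.
class Meal {
    private final List<String> parts = new ArrayList<>();

    void add(String part) {
        parts.add(part);
    }

    List<String> parts() {
        return parts;
    }
}

// Builder role: one method per part, plus a method to retrieve the result.
interface MealBuilder {
    void buildMain();
    void buildDrink();
    Meal getResult();
}

class VeggieMealBuilder implements MealBuilder {
    // The builder keeps a reference to the object it is creating.
    private final Meal meal = new Meal();

    @Override
    public void buildMain() {
        meal.add("veggie burger");
    }

    @Override
    public void buildDrink() {
        meal.add("water");
    }

    @Override
    public Meal getResult() {
        return meal;
    }
}

// Director role: decides which parts are built and in which order.
class Director {
    Meal construct(MealBuilder builder) {
        builder.buildMain();
        builder.buildDrink();
        return builder.getResult();
    }
}

class BuilderDemo {
    public static void main(String[] args) {
        Meal meal = new Director().construct(new VeggieMealBuilder());
        System.out.println(meal.parts());
    }
}
```

An abstract factory, by contrast, would return a finished object from a single call and would not hold on to it afterwards.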

The Dynamic Factory Pattern

The Dynamic Factory Pattern

León Welicki, ONO (Cableuropa S.A.), Basauri, 7-9, 28023, Madrid, Spain, +34 637 879 258. Joseph W. Yoder, The Refactory, Inc., 7 Florida Drive, Urbana, Illinois USA 61801, 1-217-344-4847. Rebecca Wirfs-Brock, Wirfs-Brock Associates, 24003 S.W. Baker Road, Sherwood, Oregon USA, 1-503-625-9529.

Abstract

The DYNAMIC FACTORY pattern describes a factory that can create product instances based on concrete type definitions stored as external metadata. This facilitates adding new products to a system without having to modify code in the factory class.

Categories and Subject Descriptors

D.1.5 [Programming Techniques]: Object-oriented Programming; D.2.2 [Design Tools and Techniques]: Object-oriented design methods; D.2.11 [Software Architectures]: Patterns

General Terms

Design

Keywords

Factory Objects, Adaptive Object-Models, Creational Patterns

[...] to incorporate these new implementations without coding changes to core framework classes.

3. Example

A workflow system has a rule evaluation module. Each rule implements a well-defined interface and is injected into a container that evaluates it. The rules can be simple or composite (using the COMPOSITE [7] and INTERPRETER [7] patterns) allowing for the creation of complex expressions by composing finer-grained elements.

Creation of rules is delegated to a factory class that has a standard interface. Clients of the rules request an instance of the rule and the factory provides it.

The workflow system vendor supplies a fixed set of rules. New rules can be added by simply providing an implementation of the rule interface. The problem comes at rule instantiation, since any factory that contains the logic for creating rule instances may need [...]
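The idea in this abstract, a factory that looks up the concrete type in metadata rather than in its own code, can be sketched in Java with reflection. This is a minimal sketch under stated assumptions, not the authors' implementation: the `Rule` interface, the two rule classes and `DynamicRuleFactory` are hypothetical, and an in-memory `Map` stands in for the external metadata (which in practice might be a configuration file or database table).

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical rule interface for the workflow example.
interface Rule {
    boolean evaluate(int value);
}

class PositiveRule implements Rule {
    @Override
    public boolean evaluate(int value) {
        return value > 0;
    }
}

class EvenRule implements Rule {
    @Override
    public boolean evaluate(int value) {
        return value % 2 == 0;
    }
}

// The factory holds no knowledge of concrete rule classes; it only
// maps a rule name to a class name supplied as metadata.
class DynamicRuleFactory {
    private final Map<String, String> classNameByRuleName;

    DynamicRuleFactory(Map<String, String> metadata) {
        this.classNameByRuleName = new HashMap<>(metadata);
    }

    Rule create(String ruleName) {
        String className = classNameByRuleName.get(ruleName);
        if (className == null) {
            throw new IllegalArgumentException("No rule registered as: " + ruleName);
        }
        try {
            // Instantiate the configured class through its no-argument constructor.
            return (Rule) Class.forName(className).getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot instantiate " + className, e);
        }
    }
}

class DynamicFactoryDemo {
    public static void main(String[] args) {
        // Stand-in for metadata that would normally be loaded from outside the program.
        Map<String, String> metadata = new HashMap<>();
        metadata.put("positive", PositiveRule.class.getName());
        metadata.put("even", EvenRule.class.getName());

        DynamicRuleFactory factory = new DynamicRuleFactory(metadata);
        System.out.println(factory.create("even").evaluate(4));
    }
}
```

In this sketch, registering a new rule means adding a metadata entry and supplying a class that implements `Rule`; `DynamicRuleFactory` itself does not change.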

Factory Pattern: Motivation

Factory Pattern: Motivation
• Consider the following scenario:
  – You have a superclass (Product) and a set of subclasses that inherit from it (ProductSubclass1, ProductSubclass2, ...)
  – As usual, there are a number of methods that the subclasses share in common, that they inherit from the superclass
  – You have client code that needs to be able to create objects of the various subclasses
    ∗ We'll call this the orderProduct method
    ∗ We'll refer to the created objects as products
    ∗ To specify to which subclass a product should belong, orderProduct will have a parameter which specifies the type

Factory Pattern: Motivation (2)
  – For example:

```java
public class Product {
    //constructor
    ...
    ... step1(...) { //Methods that apply to all subclasses in common
        ...
    }
    ... step2(...) {
        ...
    }
    ...
}

public class ProductSubclass1 extends Product {
    ...
}

public class ProductSubclass2 extends Product {
    ...
}

public class ProductClient {
    ...
    Product orderProduct (String type) {
        Product product;
        if (type.equals("subclass1"))
            product = new ProductSubclass1();
        else if (type.equals("subclass2"))
            product = new ProductSubclass2();
        ...
        product.step1();
        product.step2();
        ...
        return product;
    }
    ...
}
```

• The problem with this approach - as usual - is limited flexibility
  – If we want to add new subclasses - types of products - (or remove existing ones), the client's code must be altered
  – The above design violates the Open/Closed Principle, among others

Factory Pattern: The Simple Factory Model
• We want to encapsulate what varies
  – The part that varies is the product creation - We want to encapsulate the code that creates new products
  – To accomplish this, we'll create a new class that we'll call a factory
  – Its purpose is to create new products of a desired type
  – The client will have an instance variable for the factory
    ∗ The factory will be used from within the orderProduct method of the client to create products

Factory Pattern: The Simple Factory Model (2)
• Sample code: //Superclass and subclasses as before public class ProductSubclass1 extends Product { ..
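Since the slides' own sample code is cut off, here is one way the Simple Factory model they describe could look in Java. This is a sketch, not the slide author's code: `Product`, `ProductSubclass1`, `ProductSubclass2`, `ProductClient`, `orderProduct`, `step1` and `step2` follow the slides, while `ProductFactory`, `createProduct` and the `completedSteps` log are hypothetical additions for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Superclass with the steps shared by all products.
abstract class Product {
    private final List<String> completedSteps = new ArrayList<>();

    void step1() {
        completedSteps.add("step1");
    }

    void step2() {
        completedSteps.add("step2");
    }

    // Hypothetical accessor so the demo can show what was done.
    List<String> completedSteps() {
        return completedSteps;
    }
}

class ProductSubclass1 extends Product { }

class ProductSubclass2 extends Product { }

// The factory encapsulates the part that varies: which subclass to create.
class ProductFactory {
    Product createProduct(String type) {
        if (type.equals("subclass1")) {
            return new ProductSubclass1();
        } else if (type.equals("subclass2")) {
            return new ProductSubclass2();
        }
        throw new IllegalArgumentException("Unknown product type: " + type);
    }
}

// The client holds the factory in an instance variable and no longer
// mentions any concrete product class.
class ProductClient {
    private final ProductFactory factory;

    ProductClient(ProductFactory factory) {
        this.factory = factory;
    }

    Product orderProduct(String type) {
        Product product = factory.createProduct(type);
        product.step1();
        product.step2();
        return product;
    }
}

class SimpleFactoryDemo {
    public static void main(String[] args) {
        ProductClient client = new ProductClient(new ProductFactory());
        Product product = client.orderProduct("subclass2");
        System.out.println(product.getClass().getSimpleName() + " " + product.completedSteps());
    }
}
```

Adding or removing a product type now touches only `ProductFactory`, which is the flexibility the slides say the original `orderProduct` lacked.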

Design Patterns Chapter 3

A Brief Introduction to Design Patterns

Jerod Weinman, Department of Computer Science, Grinnell College

Contents

1 Introduction
2 Creational Design Patterns
  2.1 Introduction
  2.2 Factory
  2.3 Abstract Factory
  2.4 Singleton
  2.5 Builder
3 Structural Design Patterns
  3.1 Introduction
  3.2 Adapter Pattern
  3.3 Façade
  3.4 Flyweight
  3.5 Proxy
  3.6 Decorator
4 Behavioral Design Patterns
  4.1 Introduction
  4.2 Chain of Responsibility
  4.3 Observer
  4.4 Visitor
  4.5 State

Chapter 1 Introduction

As you have probably experienced by now, writing correct computer programs can sometimes be quite a challenge. Writing the programs is fairly easy, it can be the “correct” part that maybe is a bit hard. One of the prime culprits is the challenge in understanding a great number of complex interactions. We all have probably experienced the crash of some application program