Velociraptor Hunting Evil with Open Source!

Recommended publications

PETER STEPHENS Current Technical Pursuits: Agile Practices, Cloud

PETER STEPHENS
512.778.6322 / cell 865.567.7173
1955 County Road 202, Liberty Hill, TX 78642
www.diligentsoftware.com/resume
[email protected]

Current Technical Pursuits: Agile Practices, Cloud Computing and Amazon Web Services, Cloud Automation, ASP.NET MVC, REST based web services, JavaScript and jQuery

CURRENT SKILLS
• Microsoft .NET Framework versions 1.0 – 4.5
» C#, LINQ, XML, Networking, Regular Expressions, Multithreading, Task Parallel Library, Encryption, etc.
» Websites: ASP.NET WebForms and MVC
» Thick Client: Windows Presentation Foundation (WPF)
» Web Services: WCF, Web API (REST and RPC), and OData
• Cloud Computing
» Amazon Web Services: EC2, S3, RDS, SQS, SNS, Cloud Formation, Route 53, etc.
» Rackspace Cloud
• Automation: Powershell, MSBuild, and T4 templating
• Agile Practices: Continuous Integration with Team City and CruiseControl.NET, Test Driven Development (TDD), NUnit, Rhino Mocks, MOQ, Autofac, and ReSharper
• Microsoft SQL Server versions 7 – 2008. Extensive use of T-SQL, C# stored procedures, C# functions, management and database optimization
• HTML, CSS, JavaScript, jQuery, HTTP, Web Design, SEO, and Microsoft Expression Studio, Report Viewer Control based RDLC reports
• Domain Specific Languages: Irony
• Deployment: WIX, InstallShield, and WebDeploy
• Source Control: Git and Mercurial, Beyond Compare, KDIFF

OPEN SOURCE AND SOCIAL
• Stack Overflow: http://stackoverflow.com/users/72860/peter-stephens
• Twitter: https://twitter.com/#!/peterastephens
• Bitbucket: https://bitbucket.org/pstephens/

Software License Agreement (EULA)

Third-party Computer Software

AutoVu™ ALPR cameras
• angular-animate (https://docs.angularjs.org/api/ngAnimate) licensed under the terms of the MIT License (https://github.com/angular/angular.js/blob/master/LICENSE). © 2010-2016 Google, Inc. http://angularjs.org
• angular-base64 (https://github.com/ninjatronic/angular-base64) licensed under the terms of the MIT License (https://github.com/ninjatronic/angular-base64/blob/master/LICENSE). © 2010 Nick Galbreath © 2013 Pete Martin
• angular-translate (https://github.com/angular-translate/angular-translate) licensed under the terms of the MIT License (https://github.com/angular-translate/angular-translate/blob/master/LICENSE). © 2014 [email protected]
• angular-translate-handler-log (https://github.com/angular-translate/bower-angular-translate-handler-log) licensed under the terms of the MIT License (https://github.com/angular-translate/angular-translate/blob/master/LICENSE). © 2014 [email protected]
• angular-translate-loader-static-files (https://github.com/angular-translate/bower-angular-translate-loader-static-files) licensed under the terms of the MIT License (https://github.com/angular-translate/angular-translate/blob/master/LICENSE). © 2014 [email protected]
• Angular Google Maps (http://angular-ui.github.io/angular-google-maps/#!/) licensed under the terms of the MIT License (https://opensource.org/licenses/MIT). © 2013-2016 angular-google-maps
• AngularJS (http://angularjs.org/) licensed under the terms of the MIT License (https://github.com/angular/angular.js/blob/master/LICENSE). © 2010-2016 Google, Inc. http://angularjs.org
• AngularUI Bootstrap (http://angular-ui.github.io/bootstrap/) licensed under the terms of the MIT License (https://github.com/angular-ui/bootstrap/blob/master/LICENSE).

Windows Installer XML: Käyttöönotto Asennusohjelman Tekemisessä

Windows Installer XML: Adoption in Building an Installer (original title: Windows Installer XML: käyttöönotto asennusohjelman tekemisessä)
Ekaterina Koskina
Laurea University of Applied Sciences (Laurea-ammattikorkeakoulu), Kerava
Degree Programme in Data Processing (Tietojenkäsittelyn koulutusohjelma)
Thesis
March 2012

Contents
1 Introduction
2 Installers on Windows operating systems
2.1 Windows Installer
2.1.1 Origin, development, and current state
2.1.2 Structure of an MSI installation file
2.2 The WiX toolset and its competitors
3 Adopting WiX
4 WiX technology
4.1 Skeleton elements
4.1.1 Product element
4.1.2 Package element
4.1.3 Media element
4.2 Directories
4.3 Component elements

Snare for Windows Custom MSI V2.0

© Intersect Alliance International Pty Ltd. All rights reserved worldwide. Intersect Alliance Pty Ltd shall not be liable for errors contained herein or for direct, or indirect damages in connection with the use of this material. No part of this work may be reproduced or transmitted in any form or by any means except as expressly permitted by Intersect Alliance International Pty Ltd. This does not include those documents and software developed under the terms of the open source General Public Licence, which covers the Snare agents and some other software. The Intersect Alliance logo and Snare logo are registered trademarks of Intersect Alliance International Pty Ltd. Other trademarks and trade names are marks and names of their owners as may or may not be indicated. All trademarks are the property of their respective owners and are used here in an editorial context without intent of infringement. Specifications and content are subject to change without notice.

Table of Contents
1. Guide Overview
2. Introduction to MSI
3. Requirements
4. Installing WIX
5. Creating the MSI package
6. Installing the MSI package using Group Policy
7. About InterSect Alliance

1. Guide Overview

About this Guide
This guide provides administrators with the instructions to create a Windows MSI file, based on the required organizational security settings, using the freely available Windows Installer XML (WiX) toolset. This will allow you to remotely deploy Snare Enterprise Agents for Windows with a customized configuration, using the Microsoft Installer (MSI).

F/LOSS Is Commercial Software, David A. Wheeler

Open Source Business Resource, February 2009: Commercialization

"The software created by open source communities became so powerful that commercial interests embraced those communities, supported them, learned from them and now are using the mechanisms of open source to make their businesses run better. This embrace has extended so long that commercial open source and open source are virtually synonymous." Dan Woods

Many people mistakenly use the term "commercial software" as if it was the opposite of Free/Libre Open Source Software (F/LOSS). This is in spite of: i) the rise in commercial development and support for F/LOSS; ii) the goal of most F/LOSS projects to incorporate improvements, which is actually a form of financial gain; iii) official definitions of "commercial item" that include F/LOSS; and iv) F/LOSS licenses and projects that clearly approve of commercial support. In reality, there are two types of commercial software: proprietary software and F/LOSS. This paper provides examples for each of the four points mentioned above, briefly notes some alternative terms, and ends with some conclusions, explaining why it is important to understand that F/LOSS software is almost always commercial.

Defining Key Terms
Let's first define our key terms:
F/LOSS: can be briefly defined as software with a license that gives users the freedom to run the program for any purpose, to study and modify the program, and to redistribute copies of either the original or modified program without having to pay royalties to previous developers.

Release Notes

Intel® SDK for OpenCL™ Applications 2019 Update 2
Release Notes
14 February 2019

Version History/Revision History
These are the main releases of Intel® SDK for OpenCL™ Applications (also known as Intel® System Studio: OpenCL™ Tools component):

| Date | Revision | Description |
| --- | --- | --- |
| February 2019 | 2019 U2 | Added support of Eclipse* 2018-12 R. Support of Eclipse* Oxygen and Visual Studio 2013 were deprecated and removed. Qt* was updated to 5.12 version. Protobuf* was updated to 3.6.1 version. |
| November 2018 | 2019 U1 | Experimental version of the CPU Runtime supporting OpenCL™ 2.1 has been deprecated and removed. Added support for Intel® VTune™ Amplifier, added support for Ubuntu* 18.04 |
| August 2018 | 2019 | Coffee Lake support, support of Intel® CPU Runtime for OpenCL™ Applications 18.1 |
| December 2017 | 2017 U2 | Eclipse Oxygen (4.7) support, bug fixes, and improvements |
| July 2017 | 2017 U1 | Visual Studio 2017 support, new OSes and platforms support |
| October 2016 | 2016 U3 | 7th Generation Intel® Core™ Processor support, Windows* 10 Anniversary Update, OpenCL 2.1 experimental CPU only runtime for Linux* |
| July 2016 | 2016 U2 (Linux) | GPU Kernel debugger Beta and kernel level analysis for Linux |
| June 2016 | 2016 U2 (Windows) | OpenCL 2.1 support, new features in Kernel Development Framework |
| April 2016 | 2016 | GPU Kernel debugger Beta and standalone release for Linux |
| Nov. 17, 2016 | 2015 U3 | Standalone release for Microsoft Windows* |
| April 28, 2015 | 2015 U2 | Visual Studio 2015 support |
| Jan 22, 2015 | 2015 U1 | Released as part of Intel® Integrated Native Developer Experience (Intel® INDE) |

Customer Support
For technical support, including answers to questions not addressed in this product, visit the technical support forum, FAQs, and other support information at Intel OpenCL Support or http://www.intel.com/software/products/support/.

Wix Database XML Schema Table of Contents

WiX Database XML Schema
Table of Contents
WiX Database XML Schema
Elements
AdminExecuteSequence
AdminUISequence
AdvertiseExecuteSequence
AllocateRegistrySpace
AppData
AppId
AppSearch
AssemblyName

Cake Documentation Release 0.1.21

Cake Documentation
Release 0.1.21
Patrik Svensson
January 27, 2015

Contents
1 User documentation
1.1 Introduction
1.2 How does it work?
1.3 Getting started
1.4 Extensibility
1.5 Contributors
1.6 Contribution Guidelines
1.7 AppVeyor integration
2 API documentation
2.1 API documentation

Cake (C# Make) is a build automation system with a C# DSL for tasks such as compiling code, copying files and folders, running unit tests, compressing files, and building NuGet packages.
• GitHub: https://github.com/cake-build/cake
• NuGet: https://www.nuget.org/packages/Cake

CHAPTER 1 User documentation

1.1 Introduction

1.1.1 What is Cake?
Cake is a build automation system with a C# DSL for tasks such as compiling code, copying files and folders, running unit tests, compressing files, and building NuGet packages.

1.1.2 Goals
The goal of Cake is to be a first-class alternative for those who want to write their build scripts in C# instead of Ruby, F# or PowerShell.

1.2 How does it work?
Cake uses a dependency-based programming model, just like Rake, FAKE, and similar build automation systems, in which you declare tasks and the dependencies between them.

1.2.1 Tasks
To define a new task, use the Task method.

    Task("A")
        .Does(() => { });
    RunTarget("A");

1.2.2 Dependencies
To add a dependency on another task, use the IsDependentOn method.

    Task("A")
        .Does(() => { });
    Task("B")
        .IsDependentOn("A")
        .Does(() => { });
    RunTarget("B");

This will first execute target A and then B, as expected.
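The dependency-based model described in the Cake excerpt can be illustrated outside Cake with a small Python sketch. This is not Cake's implementation or API: the `TaskRunner` class and its `task` and `run_target` methods are hypothetical names chosen for illustration. The sketch assumes a depth-first walk in which each task's dependencies run before the task itself and each task runs at most once.

```python
from typing import Callable, Dict, List, Optional, Set


class TaskRunner:
    """Hypothetical mini task runner; illustrates the idea only, not Cake's API."""

    def __init__(self) -> None:
        self._actions: Dict[str, Callable[[], None]] = {}
        self._deps: Dict[str, List[str]] = {}

    def task(self, name: str, action: Callable[[], None],
             depends_on: Optional[List[str]] = None) -> None:
        # Register a task together with the names of the tasks it depends on.
        self._actions[name] = action
        self._deps[name] = list(depends_on or [])

    def run_target(self, name: str) -> List[str]:
        # Run `name` after all of its dependencies; return the execution order.
        executed: List[str] = []
        done: Set[str] = set()
        in_progress: Set[str] = set()

        def visit(task_name: str) -> None:
            if task_name in done:
                return
            if task_name not in self._actions:
                raise KeyError(f"unknown task: {task_name}")
            if task_name in in_progress:
                raise ValueError(f"circular dependency at: {task_name}")
            in_progress.add(task_name)
            for dep in self._deps[task_name]:
                visit(dep)
            in_progress.discard(task_name)
            self._actions[task_name]()
            done.add(task_name)
            executed.append(task_name)

        visit(name)
        return executed


runner = TaskRunner()
runner.task("A", lambda: None)
runner.task("B", lambda: None, depends_on=["A"])
order = runner.run_target("B")
print(order)  # ['A', 'B']
```

Requesting target B runs A first because B declares a dependency on it, which mirrors the ordering the excerpt describes for `RunTarget("B")`.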

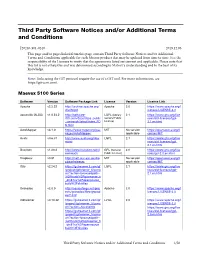

Third Party Software Notices And/Or Additional Terms and Conditions

Third Party Software Notices and/or Additional Terms and Conditions
F20240-501-0240
2019.12.06

This page and/or pages linked from this page contain Third Party Software Notices and/or Additional Terms and Conditions applicable for each Matrox product that may be updated from time to time. It is the responsibility of the Licensee to verify that the agreements listed are current and applicable. Please note that this list is not exhaustive and was determined according to Matrox’s understanding and to the best of its knowledge.

Note: Links using the GIT protocol require the use of a GIT tool. For more information, see https://git-scm.com/.

Maevex 5100 Series

| Software Package | Software Version | Link | License | License Version | License Link |
| --- | --- | --- | --- | --- | --- |
| Apache | v2.2.22 | http://archive.apache.org/dist/httpd | Apache | 2.0 | https://www.apache.org/licenses/LICENSE-2.0 |
| asoundlib (ALSA) | v1.0.24.2 | http://software-dl.ti.com/dsps/dsps_public_sw/ezsdk/latest/index_FDS.html | LGPL (Library General Public License) | 2.1 | https://www.gnu.org/licenses/old-licenses/lgpl-2.1.en.html |
| AutoMapper | v3.1.0 | https://www.nuget.org/packages/AutoMapper/ | MIT | No version applicable | https://opensource.org/licenses/MIT |
| Avahi | v0.6.31 | http://www.avahi.org/download | LGPL | 2.1 | https://www.gnu.org/licenses/old-licenses/lgpl-2.1.en.html |
| Busybox | v1.20.2 | http://www.busybox.net/downloads | GPL (General Public License) | 2.0 | https://www.gnu.org/licenses/gpl-2.0.en.html |
| Dropbear | v0.51 | http://matt.ucc.asn.au/dropbear/releases | MIT | No version applicable | https://opensource.org/licenses/MIT |
| Glib | v2.24.2 | https://gstreamer.ti.com/gf | | | |

Open Source Basics for Management

Open Source 101 for Managers
Speakers:
• Greg Pryzby, Senior Solutions Architect, Red Hat
• David Wheeler, Researcher and Author, Institute for Defense Analyses

Open Source Software (OSS or FLOSS) and the U.S. Department of Defense (DoD)
David A. Wheeler
November 4, 2009
http://www.dwheeler.com/
This presentation contains the views of the author and does not indicate endorsement by IDA, the U.S. government, or the U.S. Department of Defense.

What is Open Source Software (OSS)?
• OSS: software licensed to users with these freedoms:
– to run the program for any purpose,
– to study and modify the program, and
– to freely redistribute copies of either the original or modified program (without royalties, etc.)
• Original term: “Free software” (confused with no-price)
• Other synonyms: libre sw, free-libre sw, FOSS, FLOSS
– OSS most common in DoD (I often use “FLOSS” to non-DoD)
• Antonyms: proprietary software, closed software
• Widely used; OSS #1 or #2 in many markets
– “… plays a more critical role in the DoD than has generally been recognized.” [MITRE 2003]
• Not non-commercial; OSS almost always commercial
[For details see “Free Software Definition” & “Open Source Definition”]

Why would governments use or create OSS (value for government)?
Reasons follow from the definition
• Can evaluate in detail, lowering risk
– Can see if meets needs (security, etc.)
– Mass

Why would contractors use/develop OSS for supply to others?
• Same list as previous, plus...
• OSS use: similar advantages to use of proprietary

1-Wire® Drivers Installation Guide for Windows

APPLICATION NOTE 1740
White Paper 6: 1-Wire® Drivers Installation Guide for Windows
Sep 24, 2002

Keywords: 1-Wire, 1-Wire Drivers, OneWire, OneWire Drivers, 1-Wire Drivers Installation, Driver Installation, iButton, API, software, software distribution, examples, TMEX, RTE, iButton TMEX RTE, 1wire

Abstract: The software application developer has two options for installing 1-Wire device drivers on Microsoft® Windows® 32- and 64-bit platforms (Windows 7, Windows Server 2008, Windows Vista®, and Windows XP® service pack 2 or greater). The first option uses Maxim's 1-Wire Drivers installation program; the second option is to develop a custom installation program. This application note discusses the installation process for 1-Wire drivers. It explains which drivers and dlls go with which 1-Wire port adapter (USB, serial port, and parallel port). It also lists the subdirectory to which each dll and driver must be copied and the appropriate registry keys that need to be created. This white paper also covers the installation of both the TMEX API and the 1-Wire API for .NET.

Introduction
This white paper discusses the installation process of the 1-Wire drivers for the following Microsoft Windows 32-bit and 64-bit operating systems: Windows 7, Windows Server 2008, Windows Vista, and Windows XP (service pack 2 or greater). There are two ways to accomplish a 1-Wire drivers installation. The first option uses Maxim's 1-Wire drivers installation program, and the second option is to develop a custom installation program.

1-Wire Drivers Installation Program
The easiest option for a 1-Wire software developer is to use the installation program created by Maxim.

Examine .NET Core As a Development Platform for Monitor ERP System AB

Viktor Rosvall
Computer Engineering BA (C), Final Project
Main field of study: Computer Engineering
Credits: 15 hp
Semester, year: Spring, 2020
Supervisor: Martin Kjellqvist, [email protected]
Examiner: Dr. Ulf Jennehag, [email protected]
Degree programme: Datateknik, 180 credits
2020–06–12

Abstract
The objective of this study has been to examine the portability of Monitor ERP System AB’s existing .NET Framework codebase to .NET Core. The study has been conducted by examining the portability of Monitor’s projects using Microsoft’s .NET Portability Analyzer and by visualizing the dependencies between projects using AsmSpy, to gain an understanding of the order in which projects need to be ported. The third-party libraries in use have also been examined to see which are not compatible with .NET Core. Finally, an attempt to port Monitor’s root solution has been made as a proof of concept and to compare test and build durations between the ported solution and the old solution. The .NET Portability Analyzer reported that 19 projects aren’t 100% compatible with .NET Core (+ Windows Compatibility Pack); some projects have one missing API call while others have many more. The third-party library examination showed that two libraries are not compatible with .NET Core: the first is Dynamsoft Dynamic .NET TWAIN SDK, which can probably be replaced with VintaSoft Twain .NET SDK, and the second is SAP SQL Anywhere, which doesn’t have a direct replacement.
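The abstract mentions using the project dependency graph to understand the order in which projects need to be ported. One common way to derive such an order is a topological sort, sketched below with Python's standard `graphlib` module (Python 3.9 or later). This is an illustration only: the project names are hypothetical, the graph is not AsmSpy output, and nothing here is taken from the thesis's own method.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency graph: each project maps to the set of projects
# it references. These names are invented for illustration.
dependencies = {
    "ProjectCore": set(),
    "ProjectData": {"ProjectCore"},
    "ProjectServices": {"ProjectCore", "ProjectData"},
    "ProjectApp": {"ProjectServices"},
}

# static_order() yields every project after all of the projects it depends on,
# so porting in this order never touches a project before its dependencies.
port_order = list(TopologicalSorter(dependencies).static_order())
print(port_order)
```

If the graph contains a cycle, `static_order()` raises `graphlib.CycleError`, which in this setting would indicate projects that have to be ported together.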