Rugby-Bot: Utilizing Multi-Task Learning & Fine-Grained Features

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Round 8 2021 Row Volume 2 · Issue 8

The FRONT ROW ROUND 8 2021 VOLUME 2 · ISSUE 8 Stand by your Mann Newcastle's five-eighth on his side's season defining run of games ahead Two into one? Why the mooted two-conference system for the NRL is a bad call. STATS NOT GOOD WE ANALYSE EXACTLY HOW THE COVID-19 PANDEMIC HAS INFLUENCED THE GAME INSIDE: NRL Round 8 program with squad lists, previews & head to head stats, Round 7 reviewed LEAGUEUNLIMITED.COM AUSTRALIA’S LEADING INDEPENDENT RUGBY LEAGUE WEBSITE THERE IS NO OFF-SEASON 2 | LEAGUEUNLIMITED.COM | THE FRONT ROW | VOL 2 ISSUE 8 What’s inside THE FRONT ROW - VOL 2 ISSUE 8 From the editor 3 Feature What's (with) the point(s)? 4-5 Feature Kurt Mann 6-7 Opinion Why the conference idea is bad 8-9 NRL Ladder, Stats Leaders, Player Birthdays 10 GAME DAY · NRL Round 8 11-27 LU Team Tips 11 THU Canberra v South Sydney 12-13 From the editor Tim Costello Last week, long-serving former player and referee Henry Perenara was forced into medical retirement from on-field duties. While former player-turned-official will remain as part of the NRL Bunker operations, a heart condition means he'll be doing so without a whistle or flag. All of us at LeagueUnlimited.com wish Henry all the best - see Pg 33 for more from the PRLMO. Meanwhile - the game rolls on. We no longer have a winless team with Canterbury getting up over Cronulla on Saturday, while Penrith remain the high-flyers, unbeaten through seven rounds. -

NBA MLB NFL NHL MLS WNBA American Athletic

Facilities That Have the AlterG® ® Anti-Gravity Treadmill Texas Rangers LA Galaxy NBA Toronto Blue Jays (2) Minnesota United Atlanta Hawks (2) Washington Nationals (2) New York City FC Brooklyn Nets New York Red Bulls Boston Celtics Orlando City SC Charlotte Hornets (2) NFL Real Salt Lake Chicago Bulls Atlanta Falcons San Jose Earthquakes Cleveland Cavaliers Sporting KC Denver Nuggets Arizona Cardinals (2) Detroit Pistons Baltimore Ravens Golden State Warriors Buffalo Bills WNBA Houston Rockets Carolina Panthers Indiana Pacers Chicago Bears New York Liberty Los Angeles Lakers Cincinnati Bengals Los Angeles Clippers Cleveland Browns COLLEGE/UNIVERSITY Memphis Grizzlies Dallas Cowboys PHYSICAL THERAPY (3) PROGRAMS Miami Heat Denver Broncos Milwaukee Bucks (2) Detroit Lions Florida Gulf Coast University Minnesota Timberwolves Green Bay Packers Chapman University (2) New York Knicks Houston Texans Northern Arizona University New Orleans Pelicans Indianapolis Colts Marquette University Oklahoma City Thunder Jacksonville Jaguars University of Southern California Orlando Magic Kansas City Chiefs (2) University of Delaware Philadelphia 76ers Los Angeles Rams Samuel Merritt University Phoenix Suns (2) Miami Dolphins Georgia Regents University Hardin- Portland Trailblazers Sacramento Minnesota Vikings Simmons University Kings New England Patriots High Point University San Antonio Spurs New Orleans Saints Long Beach State University Utah Jazz New York Giants Chapman University (2) Washington Wizards New York Jets University of Texas at Arlington- -

Harold Matthews Cup Draw 2021

HAROLD MATTHEWS CUP

| Round Number | Round Type (Regular or Final) | Home Team | Away Team | Venue | Day | Date (dd/mm/yyyy) | Time (hh:mm am/pm) | BYE (*If Bye: Yes) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | Regular | Canterbury Bankstown Bulldogs | Sydney Roosters | Belmore Sports Ground | Saturday | 06/02/2021 | 11:30 AM | |
| 1 | Regular | Canberra Raiders | Cronulla-Sutherland Sharks | Raiders Club Belconnen | Saturday | 06/02/2021 | 1:30 PM | |
| 1 | Regular | Penrith Panthers | South Sydney Rabbitohs | Panthers Stadium | Saturday | 06/02/2021 | 12:00 PM | |
| 1 | Regular | St George Dragons | Newcastle Knights | Mascot Oval | Saturday | 06/02/2021 | 11:30 AM | |
| 1 | Regular | Western Suburbs Magpies | North Sydney Bears | Campbelltown Stadium | Saturday | 06/02/2021 | 10:30 AM | |
| 1 | Regular | Parramatta Eels | Manly Warringah Sea Eagles | New Era Stadium | Saturday | 06/02/2021 | 10:00 AM | |
| 1 | Regular | Balmain Tigers | Central Coast Roosters | Leichhardt Oval | Saturday | 06/02/2021 | 12:30 PM | |
| 1 | Regular | Illawarra Steelers | NIL | | | | | Yes |
| 2 | Regular | North Sydney Bears | Parramatta Eels | Macquarie University Sports Fields | Saturday | 13/02/2021 | 10:30 AM | |
| 2 | Regular | Penrith Panthers | St George Dragons | Panthers Stadium | Saturday | 13/02/2021 | 12:00 PM | |
| 2 | Regular | Western Suburbs Magpies | Canberra Raiders | Campbelltown Stadium | Saturday | 13/02/2021 | 12:30 PM | |
| 2 | Regular | South Sydney Rabbitohs | Manly Warringah Sea Eagles | Metricon HP Centre (Redfern) | Saturday | 13/02/2021 | 10:00 AM | |
| 2 | Regular | Sydney Roosters | Illawarra Steelers | Mascot Oval | Saturday | 13/02/2021 | 11:30 AM | |
| 2 | Regular | Balmain Tigers | Newcastle Knights | Leichhardt Oval | Saturday | 13/02/2021 | 12:00 PM | |

2 Regular Central Coast Roosters Canterbury Bankstown Bulldogs Morry -

National Rugby League Lawn Bowls National Rugby League

SELECTIONS ~UNIQUE COFFINS ~ Celebrating through a beautiful funeral life 1 EXPRESSIONS COFFINS - $2290 EXPRESSIONS COFFINS - $2290 Gerbera Flowers Frangipani Flowers Gerbera Flowers Frangipani Flowers Pink Blossom Pink Blossom Succulents of Colour ashtonmanufacturing.com.au ashtonmanufacturing.com.au Pink Roses Mixed Flowers Pink Roses ashtonmanufacturing.com.au 2 Expressions Coffins Expressions Coffins 3 ashtonmanufacturing.com.au EXPRESSIONS COFFINS - $2290 EXPRESSIONS COFFINS - $2290 White Rose Golden Sunflower White Roses Golden Sunflower Pink & Purple Roses Sunflowers Blooming ashtonmanufacturing.com.au ashtonmanufacturing.com.au Red Roses Red Roses Rainbow Lorikeets 4 Expressions Coffins Expressions Coffins 5 ashtonmanufacturing.com.au EXPRESSIONS COFFINS - $2290 EXPRESSIONS COFFINS - $2290 Doves Released Leopard Print Doves Released Leopard Print Butterfly Migration Love Hearts Butterfly Migration Love Hearts ashtonmanufacturing.com.au ashtonmanufacturing.com.au Cloudy Sky Jelly Beans Cloudy Sky Jelly Beans ashtonmanufacturing.com.au ashtonmanufacturing.com.au 6 Expressions Coffins Expressions Coffins 7 ashtonmanufacturing.com.au ashtonmanufacturing.com.au EXPRESSIONS COFFINS - $2290 EXPRESSIONS COFFINS - $2290 Red Wood Green Tractor Red Wood Green Tractor Checker Plate Red Tractor Checker Plate Red Tractor ashtonmanufacturing.com.au ashtonmanufacturing.com.au Wheat Harvest Corrugated Iron Wheat Harvest ashtonmanufacturing.com.au ashtonmanufacturing.com.au 8 Expressions Coffins Expressions Coffins 9 ashtonmanufacturing.com.au -

Sir Peter Leitch Club at MT SMART STADIUM, HOME of the MIGHTY VODAFONE WARRIORS

Sir Peter Leitch Club AT MT SMART STADIUM, HOME OF THE MIGHTY VODAFONE WARRIORS 21st September 2016 Newsletter #140 By David Kemeys Former Sunday Star-Times Editor, Former Editor-in-Chief Suburban Newspapers, Long Suffering Warriors Fan CRIKEY DID the Vodafone Warriors get hammered at the weekend. The constant theme was that we need a player clearout. That is hardly groundbreaking stuff, but what was, was that players were named. Hugh McGahan singled out Manu Vatuvei and Ben Matulino, arguing both had failed to live up to their status as two of our highest paid players. The former Kiwi captain said Warriors coach Stephen Kearney could make a mark by showing the pair the door, and proving to the others that poor performances won't be tolerated. “Irrespective of his standing, Manu Vatuvei has got to go,” McGahan told Tony Veitch. “And again, irrespective of his standing, Ben Matulino has got to go. They have underperformed. If you're going to make an impact I'd say that's probably the two players that you would look at.” Bold stuff, and fair play to the man, he told it like he saw it. Kearney, on the other hand, clearly doesn’t see it the same way, since he named both in the Kiwis train-on squad, and while he acknowledged they had struggled this year, he backed himself to get the best out of them. In fact he went further, he said it was his job. “That's my responsibility as the coach, to get the individuals in a position so they can go out and play their best. -

A Former Townsville Bulletin Sports Editor Who Played a Key Role in The

A former Townsville Bulletin sports editor who played a key role in the establishment of the North Queensland Cowboys has called for major changes at board level to get the struggling club back on track. Doug Kingston, who floated the idea that North Queensland should have a Winfield Cup (now NRL) team in a story in the Townsville Bulletin back in 1989, called and chaired the first meeting, and worked on a voluntary basis to help get the team into the national competition, believes the current Cowboys board appears to have lost sight of the core reason the club was established. "Unless major changes are made in the composition of the Cowboys board the club faces a bleak future," Kingston said. "The secrecy surrounding the board of directors gives rise to suspicion that it is a closed shop, which has lost sight of the core reason the club was established. "During the past few weeks I have tried, unsuccessfully, to find out just who is on the NQ Cowboys board. My quest to identify the current board members included numerous Google searches and an email to NQ Cowboys chairman, Lewis Ramsay, requesting details of board members and the procedure for appointment of board members. I also asked Mr Ramsay if any of the current board members were elected by a vote of club members. "Mr Ramsay replied that these matters were ‘confidential’. Kingston then wrote back to Mr Ramsay saying: "In the absence of your advice to the contrary, I will assume that the Cowboys Leagues Club currently owns the North Queensland Cowboys football club/team, having acquired it in 2015 from News Limited. -

6110B10ee091a31e4bc115b0

ARENAS In December 2016 HG Sports Turf installed its Eclipse Stabilised Turf system at Westpac Stadium in Wellington. The new surface had its first outing on New Year’s Day with an A-League clash between the Wellington Phoenix and Adelaide United Icing on the Cake Tin PHOTOS COURTESY OF HG SPORTS TURF AND WESTPAC STADIUM Affectionately dubbed the ‘Cake Tin’ by the locals, Westpac Stadium in New Zealand’s capital Wellington Westpac Stadium, or Wellington Regional Stadium, is a major sporting venue in Wellington, New Zealand which was officially opened in early 2000. Residing one kilometre north of the Wellington CBD on reclaimed railway land, it was constructed to replace Athletic Park, the city’s long-standing rugby union venue. adequate to cater for international events due to its age and location. A new stadium was also needed to provide a larger-capacity venue for One Day International cricket matches, due to the city’s Basin Reserve ground losing such matches to larger stadia in other parts of the country. In addition to sporting events, Westpac Stadium regularly hosts major events and concerts. Shortly after opening in 2000, it hosted the Edinburgh Military Tattoo, the first time the event was held outside of Edinburgh, Scotland, while in 2006 it hosted WWE’s first ever New Zealand show in front stabilisation or reinforcement. This combined with an ever-increasing events strategy, and the need for the stadium to be a multi-functional events space for sports and non-sports events, meant it needed a turf system that would be up to the challenge. HG Sports Turf (HGST), which had previously -

Triple Bottom Line (TBL)

Triple Bottom Line (TBL) Assessment Summary The Triple Bottom Line Assessment is required to be published in accordance with Part 4, section 23 (1)(b) of the Freedom of Information Act 2016 19/514 – Canberra Raiders Performance Alliance Agreement Summary of impacts: The submission seeks Government agreement to the Minister for Sport and Recreation finalising an agreement (the Agreement) with the Canberra Raiders upon which National Rugby League (NRL) content, and Canberra Raiders home games, will be played in Canberra up to and including the 2025 NRL season. The Agreement has particularly positive social impacts, with a positive assessment made in the areas of gender equality (raising the profile of women in sport), health (encouraging community participation in sport and recreation activities), and access to participation in community activities (social and community-building benefits of major events). The Agreement also has positive economic outcomes in relation to economic growth thanks to the expenditure, visitation and destination marketing activity associated with hosting major sporting events. Negative impacts have been identified in relation to the ACT Budget (merely because funding will need to be appropriated, although the submission notes the worth of the investment) and waste generation. On balance, the TBL assessment supports the submission and the Agreement. Level of impact Positive Negative Neutral Social Level of impact Impact Summary Positive Gender equality The Canberra Raiders, together with Canberra Region Rugby League, play an active role in the promotion and development of Women’s rugby league in the Territory. The Katrina Fanning Shield (Open Women’s Tackle competition) is contested by seven teams from ACT and surrounding region. -

Cowboys Vs Dragons Facebook Competition Ts and Cs

‘Cowboys Tickets Social Media Giveaway’ - Terms and Conditions Home Game Cowboys Vs Dragons Saturday 20 March 2021, kick-off 6.35PM Instructions on how to enter and prizes form part of these terms and conditions. Section 1: General Information 1. Promoter: Queensland Country Bank Limited (ABN 77 087 651 027), 333 Ross River Road, Aitkenvale QLD 4814. The Promoter can be contacted on 1800 075 078. 2. Promotion Period: The Promotion will run between 9.00am on Tuesday 16 March, 2021 until 11:00am on Thursday 18 March 2021 (AEST). 3. Eligibility: To be eligible to enter the draw, entrants must satisfy the following criteria: (a) Be a current Member of the Promoter; (b) ‘Like’ the Facebook post found via @QueenslandCountry; (c) be aged 18 years and over and a resident of Queensland during the Promotion period; (d) Not be a director, employee or an immediate family member of a director or employee, of the Promoter or its related entities, or the Cowboys Rugby League Football Limited (ABN 28 060 382 961) ‘the North Queensland Cowboys’) or its related entities, and (i) Immediate family member - includes your spouse or partner, your (or your spouse’s) children, parents or other relatives, provided these live permanently with you; (e) Agree to the terms and conditions of this Promotion. 4. Entry conditions: Entrants may enter the Promotion by satisfying all eligibility criteria. Each prize winner must be able to provide an email address to be sent the tickets by 1pm on Friday 19 March 2021. An inability to do so will trigger the re-draw clause detailed at item 8 of these Terms & Conditions. -

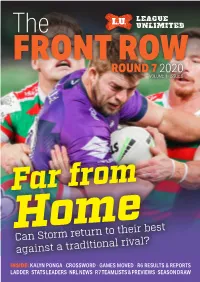

Round 7 2020 Volume 1 · Issue 5

The FRONT ROW ROUND 7 2020 VOLUME 1 · ISSUE 5 Far from Home Can Storm return to their best against a traditional rival? INSIDE: KALYN PONGA · CROSSWORD · GAMES MOVED · R6 RESULTS & REPORTS LADDER · STATS LEADERS · NRL NEWS · R7 TEAMLISTS & PREVIEWS · SEASON DRAW LEAGUEUNLIMITED.COM | THE FRONT ROW | ROUND 7, 2020 | 1 THE FRONT ROW FORUMS AUSTRALIA’S BIGGEST RUGBY LEAGUE DISCUSSION FORUMS forums.leagueunlimited.com THERE IS NO OFF-SEASON 2 | LEAGUEUNLIMITED.COM | THE FRONT ROW | ROUND 7, 2020 What’s inside THE FRONT ROW - ISSUE 5 From the editor 3 THE WRAP · Round 6 Match reports 4-7 The scoresheet 8 LU Player of the Year standings 9 NRL Match Review & Judiciary 9 Premiership Ladder, Stats Leaders 10 From the NRL, Player Birthdays 11 Feature: Kalyn Ponga 12-13 Feature: Christian Welch 14-15 Fun & Games: Crossword & Jumbles 16-17 From the editor Tim Costello It had to happen eventually - a coach was sacked. While many were expecting Bulldogs boss Dean Pay or Dragon Paul McGregor to be given their marching orders, the Warriors were the first to move, with Stephen Kearney given the flick following the NZ side's loss to South Sydney on Friday. Todd Payten takes over in the meantime as his side looks to bounce back in this Friday's belated ANZAC clash with the Storm - relocated to Sydney's Netstrata Jubilee Stadium. That's two matches for the coming weekend which were abruptly moved to the Kogarah venue - Thursday's Penrith v South Sydney match will be played there following overuse concerns at Campbelltown. The Storm v Warriors clash moving there on Friday follows a spike in new coronavirus cases in the Victorian capital. -

Blackbird Case Study NRL Copy

CASE STUDY Australian National Rugby League turns to Blackbird to drive brand reach, engagement and monetization Australia’s National Rugby League (NRL) is the most viewed and attended rugby league club competition in the world. 16 professional men’s rugby teams, including the enormously popular Sydney Roosters, Melbourne Storm and Brisbane Broncos, compete annually for the prestigious Telstra Premiership title. With the phasing out of SnappyTV, the NRL sought an alternative cloud video editing platform that could continue to significantly build the sport’s brand and reach by delivering engaging content to multiple platforms faster than any other solution on the market. After extensive research into available systems, the NRL chose Blackbird. Every weekend, live streams of the 8 games are run through the Microsoft Azure cloud from the NRL’s content partners’ production locations. Based in Sydney, the NRL’s digital team use Blackbird to rapidly clip, edit and publish highlights during and post-match to Twitter, Facebook and YouTube. Clips can be delivered to social platforms within 30 seconds – with emojis added and players and sponsors tagged for further engagement and reach. Idents, overlaid stills and animations and sponsor logos are easily added with geo and playback restrictions implemented to support international rights control. www.blackbird.video 800,000 fans globally with an NRL Watch account can enjoy premium video on-demand (VOD) content consisting of longer form match highlights, player interviews and behind the scenes content – all edited in Blackbird. With a cumulative TV audience of 116 million and over 2.9 million fans regularly engaging with the sport on social media, the NRL exceeded Australian Rules Football in popularity last year for the first time since 2010, boasting revenues of over $500m. <30 Seconds clip from live game to social -

Encyclopedia of Australian Football Clubs

Full Points Footy ENCYCLOPEDIA OF AUSTRALIAN FOOTBALL CLUBS Volume One by John Devaney Published in Great Britain by Full Points Publications © John Devaney and Full Points Publications 2008 This book is copyright. Apart from any fair dealing for the purposes of private study, research, criticism or review as permitted under the Copyright Act, no part may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording or otherwise without prior written permission. Every effort has been made to ensure that this book is free from error or omissions. However, the Publisher and Author, or their respective employees or agents, shall not accept responsibility for injury, loss or damage occasioned to any person acting or refraining from action as a result of material in this book whether or not such injury, loss or damage is in any way due to any negligent act or omission, breach of duty or default on the part of the Publisher, Author or their respective employees or agents. Cataloguing-in-Publication data: The Full Points Footy Encyclopedia Of Australian Football Clubs Volume One ISBN 978-0-9556897-0-3 1. Australian football—Encyclopedias. 2. Australian football—Clubs. 3. Sports—Australian football—History. I. Devaney, John. Full Points Footy http://www.fullpointsfooty.net Introduction For most football devotees, clubs are the lenses through which they view the game, colouring and shaping their perception of it more than all other factors combined. To use another overblown metaphor, clubs are also the essential fabric out of which the rich, variegated tapestry of the game’s history has been woven.