The Semantic Web Is a Vision About the Future of the World Wide Web Brought Forward by the Inventor of the Web, Tim Berners-Lee

Recommended publications

RDF Query Languages Need Support for Graph Properties

RDF Query Languages Need Support for Graph Properties Renzo Angles1, Claudio Gutierrez1, and Jonathan Hayes1,2 1 Dept. of Computer Science, Universidad de Chile 2 Dept. of Computer Science, Technische Universität Darmstadt, Germany {rangles,cgutierr,jhayes}@dcc.uchile.cl Abstract. This short paper discusses the need to include into RDF query languages the ability to directly query graph properties from RDF data. We study the support that current RDF query languages give to these features, to conclude that they are currently not supported. We propose a set of basic graph properties that should be added to RDF query languages and provide evidence for this view. 1 Introduction One of the main features of the Resource Description Framework (RDF) is its ability to interconnect information resources, resulting in a graph-like structure for which connectivity is a central notion [GLMB98]. As we will argue, basic concepts of graph theory such as degree, path, and diameter play an important role for applications that involve RDF querying. Considering the fact that the data model influences the set of operations that should be provided by a query language [HBEV04], it follows the need for graph operations support in RDF query languages. For example, the query “all relatives of degree 1 of Alice”, submitted to a genealogy database, amounts to retrieving the nodes adjacent to a resource. The query “are suspects A and B related?”, submitted to a police database, asks for any path connecting these resources in the (RDF) graph that is stored in this database. The query “what is the Erdős number of Alberto Mendelzon”, submitted to (a RDF version of) DBLP, asks simply for the length of the shortest path between the nodes representing Erdős and Mendelzon.
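
The three example queries in this excerpt (adjacent nodes, path existence, shortest path length) can be sketched over a plain list of triples. This is a minimal illustration in Python, not an RDF query language feature and not the authors' proposal: the `ex:` names, the toy triples, and the helper functions are all hypothetical, and edges are treated as undirected on the assumption that "related" ignores edge direction.

```python
from collections import deque

# Hypothetical toy data: (subject, predicate, object) triples.
TRIPLES = [
    ("ex:alice", "ex:parentOf", "ex:bob"),
    ("ex:bob", "ex:parentOf", "ex:carol"),
    ("ex:carol", "ex:siblingOf", "ex:dave"),
    ("ex:erin", "ex:knows", "ex:frank"),
]


def build_adjacency(triples):
    """Map each node to its neighbours, ignoring edge direction (an assumption)."""
    adjacency = {}
    for subject, _predicate, obj in triples:
        adjacency.setdefault(subject, set()).add(obj)
        adjacency.setdefault(obj, set()).add(subject)
    return adjacency


def neighbors(triples, node):
    """Nodes adjacent to `node`, as in the 'relatives of degree 1' query."""
    return build_adjacency(triples).get(node, set())


def shortest_path_length(triples, start, goal):
    """Breadth-first search; returns the edge count, or None if unconnected."""
    adjacency = build_adjacency(triples)
    if start not in adjacency or goal not in adjacency:
        return None
    if start == goal:
        return 0
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, distance = queue.popleft()
        for nxt in adjacency[node]:
            if nxt == goal:
                return distance + 1
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, distance + 1))
    return None
```

A path-existence query such as "are A and B related?" then reduces to checking whether `shortest_path_length(...)` returns something other than `None`.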

HTML5 for .NET Developers by Jim Jackson II Ian Gilman

SAMPLE CHAPTER Single page web apps, JavaScript, and semantic markup Jim Jackson II Ian Gilman FOREWORD BY Scott Hanselman MANNING HTML5 for .NET Developers by Jim Jackson II Ian Gilman Chapter 1 Copyright 2013 Manning Publications brief contents 1 ■ HTML5 and .NET 2 ■ A markup primer: classic HTML, semantic HTML, and CSS 3 ■ Audio and video controls 4 ■ Canvas 5 ■ The History API: Changing the game for MVC sites 6 ■ Geolocation and web mapping 7 ■ Web workers and drag and drop 8 ■ Websockets 9 ■ Local storage and state management 10 ■ Offline web applications HTML5 and .NET This chapter covers ■ Understanding the scope of HTML5 ■ Touring the new features in HTML5 ■ Assessing where HTML5 fits in software projects ■ Learning what an HTML application is ■ Getting started with HTML applications in Visual Studio You’re really going to love HTML5. It’s like having a box of brand new toys in front of you when you have nothing else to do but play. Forget pushing the envelope; using HTML5 on the client and .NET on the server gives you the ability to create entirely new envelopes for executing applications inside browsers that just a few years ago would have been difficult to build even as desktop applications. The ability to use the skills you already have to build robust and fault-tolerant .NET solutions for any browser anywhere gives you an advantage in the market that we hope to prove throughout this book. For instance, with HTML5, you can ■ Tap the new Geolocation API to locate your users anywhere

Weaving a Web of Linked Resources

Semantic Web 8 (2017) 767–772 DOI 10.3233/SW-170284 IOS Press Editorial Weaving a Web of linked resources Fabien Gandon a,*, Marta Sabou b and Harald Sack c a Wimmics, Université Côte d’Azur, Inria, CNRS, I3S, Sophia Antipolis, France E-mail: [email protected] b CDL-Flex, Vienna University of Technology, Austria E-mail: [email protected] c FIZ Karlsruhe, Leibniz Institute for Information Infrastructure, KIT Karlsruhe, Germany E-mail: Harald.Sack@fiz-Karlsruhe.de Abstract. This editorial introduces the special issue based on the best papers from ESWC 2015. And since ESWC’15 marked 15 years of Semantic Web research, we extended this editorial to a position paper that reflects the path that we, as a community, traveled so far with the goal of transforming the Web of Pages to a Web of Resources. We discuss some of the key challenges, research topics and trends addressed by the Semantic Web community in its journey. We conclude that the symbiotic relation of our community with the Web requires a truly multidisciplinary research approach to support the Web’s diversity. Keywords: Semantic Web, trends, challenges 1. From a Web of pages to a Web of resources As we write this article Tim Berners-Lee just received the Turing Award “for inventing the World Wide Web, the first Web browser, and the fundamental [...] a first wave of deployment of the Semantic Web with the Linked Data principles and the Linked Open Data 5-Star rules [3] leading to the publication and growth of linked open datasets towards the Web of Data, as depicted in Fig.

Common Sense Reasoning with the Semantic Web

Common Sense Reasoning with the Semantic Web Christopher C. Johnson and Push Singh MIT Summer Research Program Massachusetts Institute of Technology, Cambridge, MA 02139 [email protected], [email protected] http://groups.csail.mit.edu/dig/2005/08/Johnson-CommonSense.pdf Abstract Current HTML content on the World Wide Web has no real meaning to the computers that display the content. Rather, the content is just fodder for the human eye. This is unfortunate as in fact Web documents describe real objects and concepts, and give particular relationships between them. The goal of the World Wide Web Consortium’s (W3C) Semantic Web initiative is to formalize web content into Resource Description Framework (RDF) ontologies so that computers may reason and make decisions about content across the Web. Current W3C work has so far been concerned with creating languages in which to express formal Web ontologies and tools, but has overlooked the value and importance of implementing common sense reasoning within the Semantic Web. As Web blogging and news postings become more prominent across the Web, there will be a vast source of natural language text not represented as RDF metadata. Common sense reasoning will be needed to take full advantage of this content. In this paper we will first describe our work in converting the common sense knowledge base, ConceptNet, to RDF format and running N3 rules through the forward chaining reasoner, CWM, to further produce new concepts in ConceptNet. We will then describe an example in using ConceptNet to recommend gift ideas by analyzing the contents of a weblog. -
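
The idea of running rules through a forward-chaining reasoner to produce new concepts can be sketched in a few lines. The example below is a plain Python stand-in, not CWM and not N3 syntax; the facts, the `IsA` and `CapableOf` relation names, and the single rule are hypothetical and chosen only to show rules being applied repeatedly until no new triples appear.

```python
# Hypothetical common-sense facts as (subject, relation, object) triples.
FACTS = {
    ("puppy", "IsA", "dog"),
    ("dog", "IsA", "pet"),
    ("pet", "CapableOf", "play"),
}


def inherit_capability(facts):
    """Rule: if X IsA Y and Y CapableOf Z, then X CapableOf Z."""
    derived = set()
    for x, relation, y in facts:
        if relation != "IsA":
            continue
        for y2, relation2, z in facts:
            if relation2 == "CapableOf" and y2 == y:
                derived.add((x, "CapableOf", z))
    return derived


def forward_chain(facts, rules):
    """Apply every rule until a full pass adds no new triple (a fixed point)."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            new_facts = rule(known) - known
            if new_facts:
                known |= new_facts
                changed = True
    return known


INFERRED = forward_chain(FACTS, [inherit_capability])
```

Here `("puppy", "CapableOf", "play")` only becomes derivable after `("dog", "CapableOf", "play")` has been added, which is why the loop repeats until nothing changes.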

Microformats: Empowering Your Markup for Web 2.0

Microformats: Empowering Your Markup for Web 2.0 John Allsopp Microformats: Empowering Your Markup for Web 2.0 Copyright © 2007 by John Allsopp All rights reserved. No part of this work may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage or retrieval system, without the prior written permission of the copyright owner and the publisher. ISBN-13 (pbk): 978-1-59059-814-6 ISBN-10 (pbk): 1-59059-814-8 Printed and bound in the United States of America 9 8 7 6 5 4 3 2 1 Trademarked names may appear in this book. Rather than use a trademark symbol with every occurrence of a trademarked name, we use the names only in an editorial fashion and to the benefit of the trademark owner, with no intention of infringement of the trademark. Distributed to the book trade worldwide by Springer-Verlag New York, Inc., 233 Spring Street, 6th Floor, New York, NY 10013. Phone 1-800-SPRINGER, fax 201-348-4505, e-mail [email protected], or visit www.springeronline.com. For information on translations, please contact Apress directly at 2560 Ninth Street, Suite 219, Berkeley, CA 94710. Phone 510-549-5930, fax 510-549-5939, e-mail [email protected], or visit www.apress.com. The information in this book is distributed on an “as is” basis, without warranty. Although every precaution has been taken in the preparation of this work, neither the author(s) nor Apress shall have any liability to any person or entity with respect to any loss or damage caused or alleged to be caused directly or indirectly by the information contained in this work.

Enhanced Semantic Web Layered Architecture Model

NEW ASPECTS of APPLIED INFORMATICS, BIOMEDICAL ELECTRONICS & INFORMATICS and COMMUNICATIONS Enhanced Semantic Web Layered Architecture Model Islam H. Harb (Comp. Sys. Engineer, Al-Azhar Univ., Egypt), Salah Abdel-Mageid (Comp. Sys. Engineer, Al-Azhar Univ., Egypt), Hassan Farahat (Comp. Sys. Engineer, Al-Azhar Univ., Egypt), M. S. Farag (Math. Dept., Al-Azhar Univ., Egypt) [email protected], [email protected] [email protected] Abstract: - This paper introduces the traditional web and its limitations, and how these limitations can be overcome by putting the lights on a new interesting approach for the web which is the Semantic Web. The most common technologies that can be used to construct such smart web are discussed briefly, and then its current layered architecture models as proposed by Tim Berners-Lee and others are evaluated to alleviate discrepancies and weak points. An enhanced architecture obeying layered architecture evaluation criteria and standard principles is proposed. This enhanced model is evaluated and contrasted against other models. Key-Words: - Semantic web, ontology, XML, RDF, URI and Functional Layers. 1 Introduction The Semantic Web may be considered as an evolution to this WWW which aims to make all the information and application data on the internet universally shared and machine processable in a very efficient way. It is an intelligent web which can understand the information semantics and services [...] the system engineering description and the layers integration. This paper introduces an Enhanced Semantic Web Layered Architecture Model. This enhanced model introduces a new architecture which follows the layered architecture as [12], [18]. This enhanced proposed architecture alleviates the previous work weak points.

Website Development and Web Standards in the Ubiquitous World: Where Are We Going?

WSEAS TRANSACTIONS on COMPUTERS Serena Pastore Website development and web standards in the ubiquitous world: Where are we going? SERENA PASTORE INAF-Astronomical Observatory of Padova National Institute for Astrophysics (INAF) Vicolo Osservatorio 5 – 35122 - Padova ITALY [email protected] Abstract: - A website is actually the first indispensable tool for information dissemination, but its development embraces various aspects: the choice of Content Management System (CMS) to implement production and the publishing process, the provision of relationships and interconnections with social networks and public web services, and the reuse of information from other sources. Meanwhile, the context where web content is displayed has dramatically changed with the advent of mobile devices that are very different in terms of size and features, which have led to a ubiquitous world made of mobile Internet-connected devices. Users’ behavior on mobile web devices means that developers need new strategies for web design. The paper analyzes technologies, methods, and solutions that should be adopted to provide a good user experience regardless of the devices used to visualize the content in its various forms (i.e., web pages, web applications, widgets, social applications, and so on), assuring that open standards are adhered to, and thus providing wider accessibility to users. The strategies for website development cover responsive technology vs. mobile apps, the interconnection with social networks and web services in the Google world, the adoption

Use Style: Paper Title

IJRECE VOL. 6 ISSUE 2 APR.-JUNE 2018 ISSN: 2393-9028 (PRINT) | ISSN: 2348-2281 (ONLINE) A Review on Reasoning System, Types and Tools and Need for Hybrid Reasoning Goutami M.K1, Mrs. Rashmi S R2, Bharath V3 1M.Tech, Computer Network Engineering, Dept. of Computer Science and Engineering, Dayananda Sagar college of Engineering, Bangalore. (Email: [email protected]) 2Asst. Professor, Dept. of Computer Science and Engineering, Dayananda Sagar college of Engineering, Bangalore. (Email: [email protected]) 3Dept. of Computer Science and Engineering, Dayananda Sagar college of Engineering, Bangalore Abstract— Expert system is a programming system which utilizes the information of expert knowledge of the specific domain to make decisions. These frameworks are intended to tackle complex issues by thinking through the learning process called as reasoning. The knowledge used for reasoning is represented mainly as if-then rules. Expert system forms a significant part in day-to-day life nowadays as it emulates the expert’s behavior in analyzing the information and concludes decision. This paper primarily centers on the reasoning system which plays the fundamental part in field of artificial [...] knowledge base then we require a programming system that will facilitate us to access the knowledge for reasoning purpose and helps in decision making and problem solving. Inference engine is an element of the system that applies logical rules to the knowledge base to deduce new information. User interface is one through which the user can communicate with the expert system. “A reasoning system is a software system that uses available knowledge, applies logical techniques to generate conclusions”. It uses logical techniques such as deduction approach and induction approach.

Representing Knowledge in the Semantic Web Oreste Signore W3C Office in Italy at CNR - Via G

Representing Knowledge in the Semantic Web Oreste Signore W3C Office in Italy at CNR - via G. Moruzzi, 1 - 56124 Pisa - (Italy) Phone: +39 050 315 2995 (office) e.mail: [email protected] home page: http://www.weblab.isti.cnr.it/people/oreste/ Abstract – The Web is an immense repository of data and knowledge. Semantic web technologies support semantic interoperability and machine-to-machine interaction. A significant role is played by ontologies, which can support reasoning. Generally much emphasis is given to retrieval, while users tend to browse by association. If data are semantically annotated, an appropriate intelligent user agent aware of the mental model and interests of the user can support her/him in finding the desired information. The whole process must be supported by a core ontology. 1. Introduction W3C leads the evolution of the Web. Universal Access and Semantic Web show a significant impact upon interoperability, technological as well as semantic. In this paper we will discuss the role played by XML to support interoperability within applications, while RDF helps in cross applications interoperability. Subsequently, the paper considers the metadata issue, with a brief description of RDF and the Semantic Web stack. Finally, we discuss an example in the area of cultural heritage, which is very rich in variety of possible associations, presenting an architecture where intelligent agents make use of core ontology to help users in finding the appropriate information. 2. The World Wide Web Consortium (W3C) The World Wide Web Consortium (W3C) was created in October 1994 to lead the World Wide Web to its full potential by developing common protocols that promote its evolution and ensure its interoperability.

The Semantic

The Fate of the Semantic Web Technology experts and stakeholders who participated in a recent survey believe online information will continue to be organized and made accessible in smarter and more useful ways in coming years, but there is stark dispute about whether the improvements will match the visionary ideals of those who are working to build the semantic web. Janna Quitney Anderson, Elon University Lee Rainie, Pew Research Center’s Internet & American Life Project May 4, 2010 Pew Research Center’s Internet & American Life Project An initiative of the Pew Research Center 1615 L St., NW – Suite 700 Washington, D.C. 20036 202‐419‐4500 | pewInternet.org This publication is part of a Pew Research Center series that captures people’s expectations for the future of the Internet, in the process presenting a snapshot of current attitudes. Find out more at: http://www.pewInternet.org/topics/Future‐of‐the‐Internet.aspx and http://www.imaginingtheinternet.org. 1 Overview Sir Tim Berners‐Lee, the inventor of the World Wide Web, has worked along with many others in the Internet community for more than a decade to achieve his next big dream: the semantic web. His vision is a web that allows software agents to carry out sophisticated tasks for users, making meaningful connections between bits of information so “computers can perform more of the tedious work involved in finding, combining, and acting upon information on the web.”1 The concept of the semantic web has been fluid and evolving and never quite found a concrete expression and easily‐understood application that could be grasped readily by ordinary Internet users. -

Motivation, Possibilities, and Difficulties for Participating in the Semantic

Motivation, Possibilities, and Difficulties for Participating in the Semantic Web Aning Rothe Professor: Prof. Dr. rer. nat. habil. Dr. h. c. Alexander Schill Supervisor: Dipl.-Inf. habil Marius Feldmann Department of Computer Science Institute for System Architecture TECHNISCHE UNIVERSITÄT DRESDEN July, 2008 APPROVAL Author: Aning Rothe Matrikel-Nr.: 2810396 Title: Motivation, Possibilities, and Difficulties for Participating in the Semantic Web Degree: Master of Science Examining Committee: Dated: A THESIS SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF MASTER OF SCIENCE IN INSTITUTE FOR SYSTEM ARCHITECTURE TECHNISCHE UNIVERSITÄT DRESDEN The author reserves all rights. This work may not be reproduced in whole or in part without the author’s written permission. Permission is granted to Technische Universität Dresden to make copies for non-commercial purposes. The author certifies that all works during this research has been completed by myself. Any copyrighted material that appeared in this work has been cited, and all external helps that I received in this research work have been clearly acknowledged. Signature of Author Dated: Acknowledgements I would like to express my heartfelt thanks to my supervisor Mr. Marius Feldmann, who gave me the chance to do this thesis. Without his advice, encouragement, enduring patience and constant support, the work presented in this thesis would not have been possible. I also wish to thank Prof. Dr. Alexander Schill who provided direction and all ideas along the way as I expected. I appreciate his guidance and additional helpful proposal I needed in order to accomplish this thesis. Additionally, I am greatly indebted to Liu, Wu, Deng and Wason for sharing and providing me their knowledge, and to Xu for giving her advice.

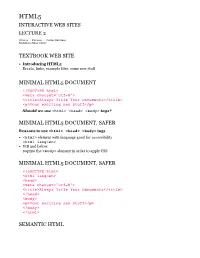

Textbook Web Site Minimal Html5 Document Minimal

HTML5 INTERACTIVE WEB SITES LECTURE 2
CS 50.12 :: Fall 2013 :: Corrine Haverinen, Santa Rosa Junior College

TEXTBOOK WEB SITE
Introducing HTML5
Errata, links, example files, some new stuff

MINIMAL HTML5 DOCUMENT
<!DOCTYPE html> <meta charset="utf-8"> <title>Always Title Your Documents</title> <p>Your exciting new stuff</p>
Should we use <html> <head> <body> tags?

MINIMAL HTML5 DOCUMENT, SAFER
Reasons to use <html> <head> <body> tags
<html> element with language good for accessibility <html lang=en>
IE8 and below: require the <body> element in order to apply CSS

MINIMAL HTML5 DOCUMENT, SAFER
<!DOCTYPE html> <html lang=en> <head> <meta charset="utf-8"> <title>Always Title Your Documents</title> </head> <body> <p>Your exciting new stuff</p> </body> </html>

SEMANTIC HTML
conveying the meaning behind Web pages
understanding how things are related to each other
embed semantics in Web docs with: HTML elements, body, #d headings, lists, blockquote
use new HTML5 sectioning elements
use the correct element or tag to describe the content
semantic tags used for their purpose, not for styling
ARIA enhancement
Microdata such as Microformats, RDFa

REASONS TO USE SEMANTIC HTML
lighter, cleaner code
accessibility
search engine optimization
if your important content is not in the HTML (JS, CSS, etc) search engines will not see it
easier for search engines to get what your site is about
semantically correct the right way as opposed to such practices as hidden text used to increase relevance
Hold meaning even though repurposed (RSS feeds, redesign of layout)
Easier for developers to work with

OLD METHOD DIV TAGS
<div> based layouts standard way to layout and style content
<div> is flow content element, does not stand apart in the structure of the document
<div> tags have no meaning semantically, no impact on the computable structure of the page or document outline

HTML5 NEW SEMANTIC ELEMENTS
<header> <nav> <hgroup> <main> NEW!! <section> <article> <aside> <footer>

HTML5 ELEMENTS OLD BROWSERS
New tags stylable in all modern browsers..
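
The contrast between `<div>`-based layouts and the HTML5 semantic elements listed above can be checked mechanically. The sketch below uses Python's standard `html.parser` module to count how many of those semantic elements a page uses compared with plain `<div>` tags. The `TagCounter` class, the `summarize` helper, and the sample page are hypothetical illustrations, not part of the lecture material.

```python
from html.parser import HTMLParser

# The semantic elements named in the slide text above.
SEMANTIC_TAGS = {
    "header", "nav", "hgroup", "main",
    "section", "article", "aside", "footer",
}


class TagCounter(HTMLParser):
    """Counts every start tag seen; HTMLParser reports tag names in lowercase."""

    def __init__(self):
        super().__init__()
        self.counts = {}

    def handle_starttag(self, tag, attrs):
        self.counts[tag] = self.counts.get(tag, 0) + 1


def summarize(html_text):
    """Return (counts of semantic tags, number of <div> tags)."""
    parser = TagCounter()
    parser.feed(html_text)
    parser.close()
    semantic = {t: n for t, n in parser.counts.items() if t in SEMANTIC_TAGS}
    return semantic, parser.counts.get("div", 0)


# Hypothetical sample page built on the "safer" minimal document.
SAMPLE = """<!DOCTYPE html>
<html lang="en">
<head><meta charset="utf-8"><title>Always Title Your Documents</title></head>
<body>
<header><nav><a href="#content">Skip to content</a></nav></header>
<main id="content"><article><p>Your exciting new stuff</p></article></main>
<div class="wrapper"></div>
<footer></footer>
</body>
</html>"""

SEMANTIC_COUNTS, DIV_COUNT = summarize(SAMPLE)
```

A page whose structure is carried mostly by `<div>` would show a high `DIV_COUNT` and few entries in `SEMANTIC_COUNTS`, which is one rough signal that the markup says little about what the content means.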