Autonomous Identification and Goal-Directed Invocation of Event-Predictive Behavioral Primitives

Total pages: 16

File type: PDF, Size: 1020 Kb

Recommended publications

-

It Goes Without Saying

Adam Peterson It Goes Without Saying Winner, Camera Obscura Award for Outstanding Fiction e was nothing if not the consummate Hlocal. If a people were boorish he was boorish, if courteous then courteous. In Bei- jing he was anonymous, in Tokyo he was se- rious, and in Doolin he was a baritone. And, heavy and drunk at a picnic table in Munich, he was in a state of permanent appetite. A fl y sentried the golden, salty chicken from which he tore a leg as the Germans around him licked the grease from their fi ngers and dried them on their shorts. So he too let the juices run down his chin then chased them with ce- tacean gulps of beer until his face shone and his pants looked as if he’d passed the aft er- noon crying rather than drinking stein aft er stein of the helles to hunt his thirst. 7 summer-2011-issue.indd 7 5/26/2011 11:22:54 AM He’d been to the Augustiner beer garden twice before, once as a young man on his fi rst trip to Europe and then some twenty years later on assignment for a magazine. On both occasions he’d lolled an aft ernoon exactly as this one. Beer. Chicken. Sunlight com- ing through the leaves and making a dirty marble of the earthen path to the beer tent and back. Every Sunday aft ernoon in Munich is the same. He wondered if he’d writt en that before. Still, there were always new discoveries though his eyesight was failing and the sun was sett ing and the only way he’d ever get home was if someone he loved and who loved him led him through his drunk to a foreign bed made domestic. -

Songs by Title

Songs by Title Title Artist Title Artist #1 Goldfrapp (Medley) Can't Help Falling Elvis Presley John Legend In Love Nelly (Medley) It's Now Or Never Elvis Presley Pharrell Ft Kanye West (Medley) One Night Elvis Presley Skye Sweetnam (Medley) Rock & Roll Mike Denver Skye Sweetnam Christmas Tinchy Stryder Ft N Dubz (Medley) Such A Night Elvis Presley #1 Crush Garbage (Medley) Surrender Elvis Presley #1 Enemy Chipmunks Ft Daisy Dares (Medley) Suspicion Elvis Presley You (Medley) Teddy Bear Elvis Presley Daisy Dares You & (Olivia) Lost And Turned Whispers Chipmunk Out #1 Spot (TH) Ludacris (You Gotta) Fight For Your Richard Cheese #9 Dream John Lennon Right (To Party) & All That Jazz Catherine Zeta Jones +1 (Workout Mix) Martin Solveig & Sam White & Get Away Esquires 007 (Shanty Town) Desmond Dekker & I Ciara 03 Bonnie & Clyde Jay Z Ft Beyonce & I Am Telling You Im Not Jennifer Hudson Going 1 3 Dog Night & I Love Her Beatles Backstreet Boys & I Love You So Elvis Presley Chorus Line Hirley Bassey Creed Perry Como Faith Hill & If I Had Teddy Pendergrass HearSay & It Stoned Me Van Morrison Mary J Blige Ft U2 & Our Feelings Babyface Metallica & She Said Lucas Prata Tammy Wynette Ft George Jones & She Was Talking Heads Tyrese & So It Goes Billy Joel U2 & Still Reba McEntire U2 Ft Mary J Blige & The Angels Sing Barry Manilow 1 & 1 Robert Miles & The Beat Goes On Whispers 1 000 Times A Day Patty Loveless & The Cradle Will Rock Van Halen 1 2 I Love You Clay Walker & The Crowd Goes Wild Mark Wills 1 2 Step Ciara Ft Missy Elliott & The Grass Wont Pay -

Tinie Tempah Junk Food Torrent Download ALBUM: Tinie Tempah – Junk Food

tinie tempah junk food torrent download ALBUM: Tinie Tempah – Junk Food. Stream And “Listen to ALBUM: Tinie Tempah – Junk Food” “Fakaza Mp3“ 320kbps flexyjams cdq Fakaza download datafilehost torrent download Song Below. Release Date: December 14, 2015. Copyright: ℗ 2015 Disturbing London Records. Tracklist 1. Junk Food 2. Been the Man (feat. Jme, Stormzy & Ms Banks) 3. Autogas (feat. Big Narstie & Mostack) 4. We Don’t Play No Games (feat. Sneakbo & Mostack) 5. All You (feat. G Frsh & Wretch 32) 6. Mileage (feat. J Avalanche & M Dargg) 7. I Could Do This Every Night (feat. Yungen, Bonkaz & LIV) 8. Might Flip (feat. Cadet & Youngs Teflon) 9. Look at Me (feat. Giggs) 10. 100 Friends (feat. J Hus) Best Tinie Tempah Songs. Written in the stars. Million miles away. Message to the main ooh. Season come and go but I will never change. And I am on my way. This song is awe some and famous and hit. I like this meaning. Tinie tempah voice is good. Eric turner sound is beautiful. My favourite singer is eric turner and tinie. Just awesome. Listening 50 times a day. I like this singer. And Obviously Erik Turner. Both of their chemistry. Was excellent. I loved this song very much. Please. Request to. Compose this type of song. I think this song probably has the most meaning and makes the most sense. Maybe people don't know that this is the original so that's why they voted for the other. So now you know, vote for this one. Why is the remix version of this song in the number one spot I rekon that this is a lot better than the remix version but I think Pass Out should be number one because that is an epic song. -

The Uk's Top 200 Most Requested Songs in 2014

The Uk’s top 200 most requested songs in 2014 1 Killers Mr. Brightside 2 Kings Of Leon Sex On Fire 3 Black Eyed Peas I Gotta Feeling 4 Pharrell Williams Happy 5 Bon Jovi Livin' On A Prayer 6 Robin Thicke Blurred Lines 7 Whitney Houston I Wanna Dance With Somebody 8 Daft Punk Get Lucky 9 Journey Don't Stop Believin' 10 Bryan Adams Summer Of '69 11 Maroon 5 MovesLike Jagger 12 Beyonce Single Ladies (Put A Ring On It) 13 Bruno Mars Marry You 14 Psy Gangam Style 15 ABBA Dancing Queen 16 Queen Don't Stop Me Now 17 Rihanna We Found Love 18 Foundations Build Me Up Buttercup 19 Dexys Midnight Runners Come On Eileen 20 LMFAO Sexy And I Know It 21 Van Morrison Brown Eyed Girl 22 B-52's Love Shack 23 Beyonce Crazy In Love 24 Michael Jackson Billie Jean 25 LMFAO Party Rock Anthem 26 Amy Winehouse Valerie 27 Avicii Wake Me Up! 28 Katy Perry Firework 29 Arctic Monkeys I Bet You Look Good On The Dancefloor 30 John Travolta & Olivia Newton-John Grease Megamix 31 Guns N' Roses Sweet Child O' Mine 32 Kenny Loggins Footloose 33 Olly Murs Dance With Me Tonight 34 OutKast Hey Ya! 35 Beatles Twist And Shout 36 One Direction What Makes You Beautiful 37 DJ Casper Cha Cha Slide 38 Clean Bandit Rather Be 39 Proclaimers I'm Gonna Be (500 Miles) 40 Stevie Wonder Superstition 41 Bill Medley & Jennifer Warnes (I've Had) The Time Of My Life 42 Swedish House Mafia Don't You Worry Child 43 House Of Pain Jump Around 44 Oasis Wonderwall 45 Wham! Wake Me Up Before You Go-go 46 Cyndi Lauper Girls Just Want To Have Fun 47 David Guetta Titanium 48 Village People Y.M.C.A. -

Song Catalogue February 2020 Artist Title 2 States Mast Magan 2 States Locha E Ulfat 2 Unlimited No Limit 2Pac Dear Mama 2Pac Changes 2Pac & Notorious B.I.G

Song Catalogue February 2020 Artist Title 2 States Mast Magan 2 States Locha_E_Ulfat 2 Unlimited No Limit 2Pac Dear Mama 2Pac Changes 2Pac & Notorious B.I.G. Runnin' (Trying To Live) 2Pac Feat. Dr. Dre California Love 3 Doors Down Kryptonite 3Oh!3 Feat. Katy Perry Starstrukk 3T Anything 4 Non Blondes What's Up 5 Seconds of Summer Youngblood 5 Seconds of Summer She's Kinda Hot 5 Seconds of Summer She Looks So Perfect 5 Seconds of Summer Hey Everybody 5 Seconds of Summer Good Girls 5 Seconds of Summer Girls Talk Boys 5 Seconds of Summer Don't Stop 5 Seconds of Summer Amnesia 5 Seconds of Summer (Feat. Julia Michaels) Lie to Me 5ive When The Lights Go Out 5ive We Will Rock You 5ive Let's Dance 5ive Keep On Movin' 5ive If Ya Getting Down 5ive Got The Feelin' 5ive Everybody Get Up 6LACK Feat. J Cole Pretty Little Fears 7Б Молодые ветра 10cc The Things We Do For Love 10cc Rubber Bullets 10cc I'm Not In Love 10cc I'm Mandy Fly Me 10cc Dreadlock Holiday 10cc Donna 30 Seconds To Mars The Kill 30 Seconds To Mars Rescue Me 30 Seconds To Mars Kings And Queens 30 Seconds To Mars From Yesterday 50 Cent Just A Lil Bit 50 Cent In Da Club 50 Cent Candy Shop 50 Cent Feat. Eminem & Adam Levine My Life 50 Cent Feat. Snoop Dogg and Young Jeezy Major Distribution 101 Dalmatians (Disney) Cruella De Vil 883 Nord Sud Ovest Est 911 A Little Bit More 1910 Fruitgum Company Simon Says 1927 If I Could "Weird Al" Yankovic Men In Brown "Weird Al" Yankovic Ebay "Weird Al" Yankovic Canadian Idiot A Bugs Life The Time Of Your Life A Chorus Line (Musical) What I Did For Love A Chorus Line (Musical) One A Chorus Line (Musical) Nothing A Goofy Movie After Today A Great Big World Feat. -

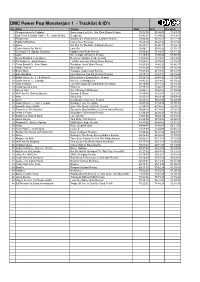

Power Pop Monsterjam 1

DMC Power Pop Monsterjam 1 - Tracklist & ID's No.Artist Tracks Start End Duration 1 Chainsmokers & Coldplay Something Just Like This (Don Diablo Remix) 00:02:00 01:45:03 01:43:03 2 Luis Fonsi & Daddy Yankee Fe. Justin Bieber Despacito 01:45:03 03:04:06 01:19:03 3 Ed Sheeran Galway Girl (Danny Dove & Offset Remix) 03:04:06 05:26:10 02:22:19 4 Sigala & Ella Eyre Came Here For Love 05:26:10 06:43:44 01:17:34 5 Adele Set Fire To The Rain (Cazzette Remix) 06:43:44 08:06:11 01:22:42 6 Calvin Harris Fe. Ne Yo Let's Go 08:06:11 09:38:22 01:32:11 7 DJ Snake Fe. Bipolar Sunshine Middle (Alex Ross Remix) 09:38:22 11:10:01 01:31:39 8 Lorde Green Light (Chromeo Remix) 11:10:01 13:00:22 01:50:36 9 Clean Bandit & Jess Glynne Real Love (Danny Verde Remix) 13:00:22 15:39:31 02:39:09 10 DJ Snake Fe. Justin Bieber Let Me Love You (Danny Dove Remix) 15:39:31 16:55:03 01:15:47 11 Clean Bandit Fe. Anne Marie Rockabye (Jack Wins Remix) 16:55:03 18:41:20 01:46:17 12 Martin Jensen Solo Dance 18:41:20 19:57:29 01:16:09 13 Bruno Mars Treasure (Cash Cash Remix) 19:57:29 21:10:13 01:12:44 14 Ellie Goulding Love Me Like You Do (Cosmic Remix) 21:10:13 23:28:18 02:18:20 15 Robin Thicke Fe. -

Adjudication No: 14 /A/2013

ADJUDICATION NO: 14 /A/2013 PROGRAMME: MUSIC VIDEO: "DRINKING FROM THE BOTTLE" BY CALVIN HARRIS ON 19 MARCH 2013 AT 12:40 BROADCASTER: DSTV TRACE CHANNEL COMPLAINANT: ALET DE VOS ___________________________________________________________________________ COMPLAINT That music video contains blasphemy, promotes promiscuity and encourages the youth to live for the moment. ___________________________________________________________________________ APPLICABLE CLAUSE OF THE CODE OF CONDUCT FOR SUBSCRIPTION BROADCASTING SERVICE LICENSEES 10. A subscription broadcasting service licensee may not knowingly broadcast material which, judged within context – 10.1 - 10.2 - 10.3 advocates hatred that is based on race, ethnicity, gender or religion and which constitutes incitement to cause harm. ___________________________________________________________________________ ADJUDICATION [1] The following complaint has been received by the Broadcasting Complaints Commission of South Africa by the Complainant mentioned above: "I herewith wish to lodge a complaint regarding a music video. The video was aired on the DSTV Trace channel on Tuesday 19 March 2013 at 12:40. The title of the song is: 'Drinking from the bottle' by Calvin Harris. My biggest objection against the video is the blasphemy contained in the video. Especially the part where the 'devil' character comments that God waits for people to come to his place, but he (the devil character) is willing to travel. This is highly insulting to me as a Christian, who believes that God walks with us and is actively involved in our lives as a loving God. The notion that He is a passive god, who just sits and waits, is sadly misrepresented. My objection to this video and by implication, the song, is that it promotes promiscuity. -

Inside Official Singles & Albums • Airplay Charts

ChartPack cover_v3_News and Playlists 08/04/13 13:56 Page 35 CHARTPACK Duke Dumont is at No.1 in the Official Singles Chart this week with Need U (100%) feat A*M*E & MNEK - as Justin Timberlake returns to the albums summit INSIDE OFFICIAL SINGLES & ALBUMS • AIRPLAY CHARTS • COMPILATIONS & INDIE CHARTS 52-53 Singles-Albums_v1_News and Playlists 08/04/13 11:33 Page 28 CHARTS UK SINGLES WEEK 14 For all charts and credits queries email [email protected]. Any changes to credits, etc, must be notified to us by Monday morning to ensure correction in that week’s printed issue The Official UK Singles and Albums Charts are produced by the Official Charts Company, based on a sample of more than 4,000 record outlets. They are compiled from actual sales last Sunday to Saturday, THE OFFICIAL UK SINGLES CHART THIS LAST WKS ON ARTIST / TITLE / LABEL CATALOGUE NUMBER (DISTRIBUTOR) THIS LAST WKS ON ARTIST / TITLE / LABEL CATALOGUE NUMBER (DISTRIBUTOR) WK WK CHRT (PRODUCER) PUBLISHER (WRITER) WK WK CHRT (PRODUCER) PUBLISHER (WRITER) 1 DUKE DUMONT FEAT. A*M*E & MNEK Need U (100%) MoS/Blas? Boys Club GBCEN1300001 (ARV) 39 29 8 BAAUER Harlem Shake Mad Decent USZ4V1200043 (C) N (Duke Dumont/Forrest) EMI/Kobalt/San Remo Live/CC (Dyment/Kabba/Emenike) (Baauer) CC (Rodrigues) 2 29 PINK FEAT. NATE RUESS Just Give Me A Reason RCA USRC11200786 (ARV) 40 IMAGINE DRAGONS It’s Time Interscope USUM71200987 (ARV) (Bhasker) Sony ATV/EMI Blackwood/Pink Inside/Way Above (Pink/Bhasker/Ruess) N (tbc) tbc (tbc) 3 48 JUSTIN TIMBERLAKE Mirrors RCA USRC11300059 (ARV) 41 35 27 RIHANNA Diamonds Def Jam USUM71211793 (ARV) 1# (Timbaland/Timberlake/Harmon) Universal/Warner Chappell/Tennman Tune/Z Tunes/J.Harmon/J.Fauntleroy/Almo (Timberlake/Mosley/Harmon/Godbey/Fauntleroy) (B.Blanco/StarGate) EMI/Kobalt/Matza Ball/Where Da Kasz At (Furler/Eriksen/Hermansen/Levine) 4 33 THE SATURDAYS FEAT. -

Sweet 16 Hot List

Sweet 16 Hot List Song Artist Happy Pharrell Best Day of My Life American Authors Run Run Run Talk Dirty to Me Jason Derulo Timber Pitbull Demons & Radioactive Imagine Dragons Dark Horse Katy Perry Find You Zedd Pumping Blood NoNoNo Animals Martin Garrix Empire State of Mind Jay Z The Monster Eminem Blurred Lines We found Love Rihanna/Calvin Love Me Again John Newman Dare You Hardwell Don't Say Goodnight Hot Chella Rae All Night Icona Pop Wild Heart The Vamps Tennis Court & Royals Lorde Songs by Coldplay Counting Stars One Republic Get Lucky Daft punk Sexy Back Justin Timberland Ain't it Fun Paramore City of Angels 30 Seconds Walking on a Dream Empire of the Sun If I loose Myself One Republic (w/Allesso mix) Every Teardrop is a Waterfall mix Coldplay & Swedish Mafia Hey Ho The Lumineers Turbulence Laidback Luke Steve Aoki Lil Jon Pursuit of Happiness Steve Aoki Heads will roll Yeah yeah yeah's A-trak remix Mercy Kanye West Crazy in love Beyonce and Jay-z Pop that Rick Ross, Lil Wayne, Drake Reason Nervo & Hook N Sling All night longer Sammy Adams Timber Ke$ha, Pitbull Alive Krewella Teach me how to dougie Cali Swag District Aye ladies Travis Porter #GETITRIGHT Miley Cyrus We can't stop Miley Cyrus Lip gloss Lil mama Turn down for what Laidback Luke Get low Lil Jon Shots LMFAO We found love Rihanna Hypnotize Biggie Smalls Scream and Shout Cupid Shuffle Wobble Hips Don’t Lie Sexy and I know it International Love Whistle Best Love Song Chris Brown Single Ladies Danza Kuduro Can’t Hold Us Kiss You One direction Don’t You worry Child Don’t -

Songs by Artist

Andromeda II DJ Entertainment Songs by Artist www.adj2.com Title Title Title 10,000 Maniacs 50 Cent AC DC Because The Night Disco Inferno Stiff Upper Lip Trouble Me Just A Lil Bit You Shook Me All Night Long 10Cc P.I.M.P. Ace Of Base I'm Not In Love Straight To The Bank All That She Wants 112 50 Cent & Eminen Beautiful Life Dance With Me Patiently Waiting Cruel Summer 112 & Ludacris 50 Cent & The Game Don't Turn Around Hot & Wet Hate It Or Love It Living In Danger 112 & Supercat 50 Cent Feat. Eminem And Adam Levine Sign, The Na Na Na My Life (Clean) Adam Gregory 1975 50 Cent Feat. Snoop Dogg And Young Crazy Days City Jeezy Adam Lambert Love Me Major Distribution (Clean) Never Close Our Eyes Robbers 69 Boyz Adam Levine The Sound Tootsee Roll Lost Stars UGH 702 Adam Sandler 2 Pac Where My Girls At What The Hell Happened To Me California Love 8 Ball & MJG Adams Family 2 Unlimited You Don't Want Drama The Addams Family Theme Song No Limits 98 Degrees Addams Family 20 Fingers Because Of You The Addams Family Theme Short Dick Man Give Me Just One Night Adele 21 Savage Hardest Thing Chasing Pavements Bank Account I Do Cherish You Cold Shoulder 3 Degrees, The My Everything Hello Woman In Love A Chorus Line Make You Feel My Love 3 Doors Down What I Did For Love One And Only Here Without You a ha Promise This Its Not My Time Take On Me Rolling In The Deep Kryptonite A Taste Of Honey Rumour Has It Loser Boogie Oogie Oogie Set Fire To The Rain 30 Seconds To Mars Sukiyaki Skyfall Kill, The (Bury Me) Aah Someone Like You Kings & Queens Kho Meh Terri -

Et Cetera English Student Research

Marshall University Marshall Digital Scholar Et Cetera English Student Research Spring 1954 et cetera Marshall University Follow this and additional works at: https://mds.marshall.edu/english_etc Part of the Appalachian Studies Commons, Children's and Young Adult Literature Commons, Feminist, Gender, and Sexuality Studies Commons, Fiction Commons, Nonfiction Commons, and the Poetry Commons Recommended Citation Marshall University, "et cetera" (1954). Et Cetera. 25. https://mds.marshall.edu/english_etc/25 This Article is brought to you for free and open access by the English Student Research at Marshall Digital Scholar. It has been accepted for inclusion in Et Cetera by an authorized administrator of Marshall Digital Scholar. For more information, please contact [email protected], [email protected]. I • . "-... .t Gtc • • • Conlenb Buddy ------------------------------------------- 5 Editor ___________________________ Marilyn Putz There Was Time ----------------------------------- 7 Managing Editor_ ______________ Boice Daugherty Objects ---------------------------------------____ 7 Man: Peninsula ----------------------------------- 8 Art Editor _____________________ Adele Thornton Thirty Year Man ---------------------------------- 9 Business Manager_______________ David Pilkenton Minor Losses ------------------------------------- 18 Production StafL ___ Carolyn Copen, Lola Caster, Come to This Garden ------------------------------ 19 Peggy Groves Leftover Ilotu,e ----------------------------------- 20 Editorial Board__________ Nick -

Songs by Artist

TOTALLY TWISTED KARAOKE Songs by Artist 37 SONGS ADDED IN SEP 2021 Title Title (HED) PLANET EARTH 2 CHAINZ, DRAKE & QUAVO (DUET) BARTENDER BIGGER THAN YOU (EXPLICIT) 10 YEARS 2 CHAINZ, KENDRICK LAMAR, A$AP, ROCKY & BEAUTIFUL DRAKE THROUGH THE IRIS FUCKIN PROBLEMS (EXPLICIT) WASTELAND 2 EVISA 10,000 MANIACS OH LA LA LA BECAUSE THE NIGHT 2 LIVE CREW CANDY EVERYBODY WANTS ME SO HORNY LIKE THE WEATHER WE WANT SOME PUSSY MORE THAN THIS 2 PAC THESE ARE THE DAYS CALIFORNIA LOVE (ORIGINAL VERSION) TROUBLE ME CHANGES 10CC DEAR MAMA DREADLOCK HOLIDAY HOW DO U WANT IT I'M NOT IN LOVE I GET AROUND RUBBER BULLETS SO MANY TEARS THINGS WE DO FOR LOVE, THE UNTIL THE END OF TIME (RADIO VERSION) WALL STREET SHUFFLE 2 PAC & ELTON JOHN 112 GHETTO GOSPEL DANCE WITH ME (RADIO VERSION) 2 PAC & EMINEM PEACHES AND CREAM ONE DAY AT A TIME PEACHES AND CREAM (RADIO VERSION) 2 PAC & ERIC WILLIAMS (DUET) 112 & LUDACRIS DO FOR LOVE HOT & WET 2 PAC, DR DRE & ROGER TROUTMAN (DUET) 12 GAUGE CALIFORNIA LOVE DUNKIE BUTT CALIFORNIA LOVE (REMIX) 12 STONES 2 PISTOLS & RAY J CRASH YOU KNOW ME FAR AWAY 2 UNLIMITED WAY I FEEL NO LIMITS WE ARE ONE 20 FINGERS 1910 FRUITGUM CO SHORT 1, 2, 3 RED LIGHT 21 SAVAGE, OFFSET, METRO BOOMIN & TRAVIS SIMON SAYS SCOTT (DUET) 1975, THE GHOSTFACE KILLERS (EXPLICIT) SOUND, THE 21ST CENTURY GIRLS TOOTIMETOOTIMETOOTIME 21ST CENTURY GIRLS 1999 MAN UNITED SQUAD 220 KID X BILLEN TED REMIX LIFT IT HIGH (ALL ABOUT BELIEF) WELLERMAN (SEA SHANTY) 2 24KGOLDN & IANN DIOR (DUET) WHERE MY GIRLS AT MOOD (EXPLICIT) 2 BROTHERS ON 4TH 2AM CLUB COME TAKE MY HAND