A Retargetable Usability Testbed for Website Authentication Technologies

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Verified Implementations of the Information Card Federated Identity

Verified Implementations of the Information Card Federated Identity-Management Protocol. Karthikeyan Bhargavan∗, Cédric Fournet∗, Andrew D. Gordon∗, Nikhil Swamy† (∗Microsoft Research, †University of Maryland, College Park). ABSTRACT: We describe reference implementations for selected configurations of the user authentication protocol defined by the Information Card Profile V1.0. Our code can interoperate with existing implementations of the roles of the protocol (client, identity provider, and relying party). We derive formal proofs of security properties for our code using an automated theorem prover. Hence, we obtain the most substantial examples of verified implementations of cryptographic protocols to date, and the first for any federated identity-management protocols. Moreover, we present a tool that downloads security policies from services and identity providers and compiles them to a verifiably secure client proxy. Figure 1: InfoCard: Card-based User Authentication. Categories and Subject Descriptors: D.2.4 [Software Engineering]: Software/Program Verification. General Terms: Security, Verification. Keywords: Cryptographic protocol verification, Verified implementations, Web Services Security, Federated identity management, CardSpace. 1. INTRODUCTION. Verified Reference Implementations of Protocols. … and (2) to check consistency between the reference implementation and the informal specification in the same way as for any implementation, via interoperability testing with other implementations. This paper reports the techniques underpinning the most complex examples to date of such verified implementations. Information Card Profile V1.0 (InfoCard). We have built and verified reference implementations of various configurations of the card-based user authentication protocol defined by the Information Card Profile V1.0 [InfoCard Guide, Nanda, 2006]. We refer to this protocol as InfoCard. InfoCard is the core of a federated identity-management frame-

Cardspace Web Integration

A Guide to Supporting Information Cards within Web Applications and Browsers as of the Information Card Profile V1.0. December 2006. Author: Michael B. Jones, Microsoft Corporation. Copyright Notice: © 2006 Microsoft Corporation. All rights reserved. Abstract: The Identity Metasystem allows users to manage their digital identities from various identity providers and employ them in different contexts where they are accepted to access online services. In the Identity Metasystem, identities are represented to users as “Information Cards” (a.k.a. “InfoCards”). One important class of applications where Information Card-based authentication can be used is applications hosted on web sites and accessed through web browsers. This paper documents the web interfaces utilized by browsers and web applications that support the Identity Metasystem. The information in this document is not specific to any one browser or platform. This document supplements the information provided in two other Information Card Profile references: the Guide to the Information Card Profile V1.0 [InfoCard-Guide], which provides a non-normative description of the overall Information Card model, and the Technical Reference for the Information Card Profile V1.0 [InfoCard-Ref], which provides the normative schema definitions and behaviors referenced by the Guide to the Information Card Profile V1.0. Status: The Information Card Profile V1.0 was used to implement Windows CardSpace V1.0, which is part of Microsoft .NET Framework 3.0 [.NET3.0], and Internet Explorer 7. Other implementations following these specifications should be able to interoperate with the Microsoft implementation. Version 1.0. Table of Contents: 1. Introduction 2.

Security Policy Page 1 of 20

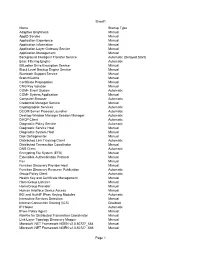

Security Policy

This security policy contains data to configure services and network security based on the server’s role, as well as data to configure registry and auditing settings.

Server: VENGWIN207

Services

| Service Name | Startup Mode | Description |
| --- | --- | --- |
| Active Directory Certificate Services | Disabled | Issues, manages, and removes X.509 certificates for such applications such as S/MIME and SSL. If the service is stopped, certificates will not be issued. If this service is disabled, any services that explicitly depend on it will fail to start. |
| Active Directory Domain Services | Disabled | AD DS Domain Controller service. If this service is stopped, users will be unable to log on to the network. If this service is disabled, any services that explicitly depend on it will fail to start. |
| AD FS Web Agent Authentication Service | Disabled | The AD FS Web Agent Authentication Service validates incoming tokens and cookies. |
| Adobe Acrobat Update Service | Automatic | Adobe Acrobat Updater keeps your Adobe software up to date. |
| AdRmsLoggingService | Disabled | Sends logging messages to the logging database when logging is enabled for the Active Directory Rights Management Services role. If this service is disabled or stopped when logging is enabled, logging messages will be stored in local message queues and sent to the logging database when the service is started. |
| Application Experience | Disabled | Processes application compatibility cache requests for applications as they are launched |
| Application Host Helper Service | Disabled | Provides administrative services for IIS, for example configuration history and Application Pool account mapping. If this service is stopped, configuration history and locking down files or directories with Application Pool specific Access Control Entries will not work. |

A One-Page Introduction to Windows CardSpace

A One-Page Introduction to Windows CardSpace. Michael B. Jones, Microsoft Corporation, January 2007. © 2007 Microsoft Corporation. All rights reserved. Many of the problems facing the Internet today stem from the lack of a widely deployed, easily understood, secure identity solution. Microsoft’s Windows CardSpace software and the “Identity Metasystem” protocols underlying it are aimed at filling this gap using technology all can adopt and solutions all can endorse, putting people in control of their identity interactions on the Internet. One problem people face is knowing whether they’re at a legitimate web site or a malicious site. This is an identity problem: a problem with reliably identifying authentic sites to their users. People also face numerous problems in identifying themselves to the sites they use. Username/password authentication is the prevailing paradigm, but its weaknesses are all too evident on today’s Internet. Password reuse, insecure passwords, forgotten passwords, and poor password management practices open a world of attacks by themselves. Combine that with the password theft attacks enabled by counterfeit web sites and man-in-the-middle attacks and today’s Internet is an attacker’s paradise. CardSpace is part of the solution to all of these problems. The Windows CardSpace software enables people to maintain a set of personal digital identities that are shown to them as visual “Information Cards”. These cards are easier to use than passwords. Furthermore, they employ strong cryptography, making them significantly more secure than passwords and other information typed into web forms. There are three participants in any digital identity interaction using CardSpace: Identity Providers issue digital identities for you.

Tweakhound, Windows 7 Beta Default Services

| Name | Startup Type |
| --- | --- |
| Adaptive Brightness | Manual |
| AppID Service | Manual |
| Application Experience | Manual |
| Application Information | Manual |
| Application Layer Gateway Service | Manual |
| Application Management | Manual |
| Background Intelligent Transfer Service | Automatic (Delayed Start) |
| Base Filtering Engine | Automatic |
| BitLocker Drive Encryption Service | Manual |
| Block Level Backup Engine Service | Manual |
| Bluetooth Support Service | Manual |
| BranchCache | Manual |
| Certificate Propagation | Manual |
| CNG Key Isolation | Manual |
| COM+ Event System | Automatic |
| COM+ System Application | Manual |
| Computer Browser | Automatic |
| Credential Manager Service | Manual |
| Cryptographic Services | Automatic |
| DCOM Server Process Launcher | Automatic |
| Desktop Window Manager Session Manager | Automatic |
| DHCP Client | Automatic |
| Diagnostic Policy Service | Automatic |
| Diagnostic Service Host | Manual |
| Diagnostic System Host | Manual |
| Disk Defragmenter | Manual |
| Distributed Link Tracking Client | Automatic |
| Distributed Transaction Coordinator | Manual |
| DNS Client | Automatic |
| Encrypting File System (EFS) | Manual |
| Extensible Authentication Protocol | Manual |
| Fax | Manual |
| Function Discovery Provider Host | Manual |
| Function Discovery Resource Publication | Automatic |
| Group Policy Client | Automatic |
| Health Key and Certificate Management | Manual |
| HomeGroup Listener | Manual |
| HomeGroup Provider | Manual |
| Human Interface Device Access | Manual |
| IKE and AuthIP IPsec Keying Modules | Automatic |
| Interactive Services Detection | Manual |
| Internet Connection Sharing (ICS) | Disabled |
| IP Helper | Automatic |
| IPsec Policy Agent | Manual |
| KtmRm for Distributed Transaction Coordinator | Manual |
| Link-Layer | |

Next Generation Shopping and Windows Cardspace at Otto Group

Next Generation Shopping and Windows CardSpace at Otto Group: Next Generation Shopping needs next generation Identity Management

About me

- .NET Architect since 2001
- VB6 -> Migration from client server to SOA
- Implemented a Security Token Service
- Employed by ATE Software GmbH CIO Connected Identity & Directory Group
- Technology, Technology, Technology
- ATE is specialized for .NET 3.0 and architecture
- Contact: http://www.windowscardspace.de, [email protected]

Agenda

- The OTTO Group
- Microsoft & Otto Group Strategic Partnership Digital Shopping Experience
- Vista OTTO-Store: Next Generation Online Shopping
- Demo
- Why we decided to use Windows CardSpace
- System Architecture
- What's next

The Vista OTTO-Store overcomes traditional boundaries to pioneer a new shopping world

Why we decided to use Windows CardSpace as the first retail company worldwide?

- We want to map to the common shopping manner
- Otto backend is based on Intershop, so we talk about WebServices
- We need a way to talk to these web services in a secure manner

Microsoft Windows Identity Foundation (WIF) Whitepaper for Developers

Microsoft Windows Identity Foundation (WIF) Whitepaper for Developers. Keith Brown, Pluralsight, LLC; Sesha Mani, Microsoft Corporation. Legal Information: The information contained in this document represents the current view of Microsoft Corporation on the issues discussed as of the date of publication. Because Microsoft must respond to changing market conditions, it should not be interpreted to be a commitment on the part of Microsoft, and Microsoft cannot guarantee the accuracy of any information presented after the date of publication. This White Paper is for informational purposes only. MICROSOFT MAKES NO WARRANTIES, EXPRESS, IMPLIED OR STATUTORY, AS TO THE INFORMATION IN THIS DOCUMENT. Complying with all applicable copyright laws is the responsibility of the user. Without limiting the rights under copyright, no part of this document may be reproduced, stored in or introduced into a retrieval system, or transmitted in any form or by any means (electronic, mechanical, photocopying, recording, or otherwise), or for any purpose, without the express written permission of Microsoft Corporation. Microsoft may have patents, patent applications, trademarks, copyrights, or other intellectual property rights covering subject matter in this document. Except as expressly provided in any written license agreement from Microsoft, the furnishing of this document does not give you any license to these patents, trademarks, copyrights, or other intellectual property. Unless otherwise noted, the companies, organizations, products, domain names, e-mail addresses, logos, people, places, and events depicted in examples herein are fictitious. No association with any real company, organization, product, domain name, e-mail address, logo, person, place, or event is intended or should be inferred.

An Efficient Windows Cardspace Identity Management Technique in Cloud Computing

IOSR Journal of Computer Engineering (IOSR-JCE), e-ISSN: 2278-0661, p-ISSN: 2278-8727, Volume 16, Issue 3, Ver. VII (May-Jun. 2014), PP 61-66, www.iosrjournals.org. An Efficient Windows Cardspace Identity Management Technique in Cloud Computing. Smita Saini1, Deep Mann2. 1(Dept. of Computer Science Engineering, Lovely Professional University, India) 2(Dept. of Computer Science Engineering, Lovely Professional University, India). Abstract: Cloud computing provides shared infrastructure to users, which can lead to privacy and security violations. Privacy concerns the user’s sensitive information that resides in the cloud and the unauthorized secondary usage of that data. Windows CardSpace is a digital identity management system that addresses privacy. It manages digital identities in the form of security tokens. This paper describes the shortcomings of Windows CardSpace that lead to privacy violations. The first issue is reliance on single-layer authentication, the second is reliance on the service provider's judgment, and the third is token storage in the cloud. The proposed work aims to overcome these limitations of the Windows CardSpace identity management solution. Keyword: Windows CardSpace. I. Introduction: Web applications are moving toward shared infrastructure, which raises privacy and security issues for user data. Cloud computing provides large storage capacity and a platform to the user (where users can deploy services without installation). Users store their sensitive data on cloud servers but are not aware of how the data are used by the administrator (lack of user control), and this leads to privacy violations. To access services, users send a request to the service provider, and the service provider asks for claims. These claims can be in the form of name, email-id, date of birth, or sensitive information (credit card information), and this can lead to phishing attacks, identity theft, and pharming attacks.

Release Notes for Cisco CTI OS, Release 7.5(1)

Release Notes for Cisco CTI OS, Release 7.5(1)

April 13, 2011

Contents

- Introduction, page 2
- System Requirements, page 2
- Related Documentation, page 2
- New and Changed Information, page 3
- Important Notes, page 17
- Resolved Caveats in the CTI OS 7.5(1) Release, page 17
- Open Caveats in CTI OS 7.5(1) Release, page 20
- Obtaining Documentation, Obtaining Support, and Security Guidelines, page 20
- Documentation Feedback, page 20

Americas Headquarters: Cisco Systems, Inc., 170 West Tasman Drive, San Jose, CA 95134-1706 USA. © 2008-2011 Cisco Systems, Inc. All rights reserved.

Introduction

These release notes describe the new features and caveats for Cisco CTI OS release 7.5(1).

Note: To view the release notes for previous versions of Cisco CTI OS, go to: http://www.cisco.com/univercd/cc/td/doc/product/icm/icmentpr/

Before you install Cisco CTI OS, Cisco recommends that you review the section Important Notes, page 17 for information about issues that may affect your system. For a list of the open and resolved caveats for Cisco CTI OS Release 7.5(1), see the Resolved Caveats in the CTI OS 7.5(1) Release, page 17 and the Open Caveats in CTI OS 7.5(1) Release, page 20. Updates for these release notes occur with every maintenance release and major release.

Note: While CTI OS Release 7.5(1) supports updates from previous releases, Release 7.5(1) requires a full installation/setup. No rollback is available.

The CTI OS software Release 7.5(1) supports:

- ICM Enterprise Edition [Unified Intelligent Contact Management Enterprise (ICME)]
- ICM Hosted Edition [Unified Intelligent Contact Management Hosted (ICMH)]
- IPCC Enterprise Edition [Unified Contact Center Enterprise (CCE)]
  - System IPCC Enterprise Edition [Unified System Contact Center Enterprise (SCCE)]
- IPCC Hosted Edition [Unified Contact Center Hosted (CCH)]

Additional information on new features, and on many of the product changes, is available in the relevant end-user documentation.

Managing the Windows 7 Desktop Environment

Chapter 5: Managing the Windows 7 Desktop Environment

INFORMATION IN THIS CHAPTER

- Local Management Tools
- Managing Hardware Devices and Drivers
- Managing Disks and File Systems
- Summary

Windows 7 comes with a variety of tools for managing your system. There are tools for managing the local system, managing hardware and devices, and managing disks and file systems. Some of these tools are new, and some have been around for a while. Windows 7 can be a quite complex operating system. So you need to make sure that you understand all the tools that have been created to make the job of managing systems easier.

LOCAL MANAGEMENT TOOLS

Windows 7 includes many management tools to help manage your system. We will review a few of the most commonly used ones. These are as follows:

- Control Panel
- Microsoft Management Console 3.0
- Computer Management Console
- Local Group Policy Editor
- Windows Registry

Each of these management tools serves a different purpose and provides different functionality. It’s important that you have a good understanding of all these tools in order to properly manage your system.

© 2010 Elsevier Inc. All rights reserved. DOI: 10.1016/B978-1-59749-561-5.00005-X

Control Panel

The Control Panel has long been a central place to go to configure your Windows system. The look has changed over the years, but tools have stayed pretty similar, with a few additions. The Windows 7 Control Panel is broken down into several categories and subcategories. Control Panel is accessed from the Start menu.

Windows Cardspace Information Cards and Secure2trust

Windows CardSpace Information Cards and secure2trust | [email protected] US: +1 415 839 9433 International: +44 207 851 6070 Avoco Secure Ltd www.avocosecure.com © Avoco Secure 2009 - All rights reserved.

Windows CardSpace provides a type of information card, in the form of a software token, that is used as a method of digital identity. The Windows CardSpace information card is a client application that comes pre-installed with the Windows .NET 3.x Framework. The information card runs on the desktop as an identity selector for CardSpace cards created or issued. The Windows CardSpace information card is based on an identity metasystem architecture consisting of:

1. Identity providers (companies who issue cards to their customers, employees, etc.)
2. Users (individuals who have a card issued that they can use as their identity to log onto websites, etc.)
3. Relying parties (applications, services and similar that use an identity to authenticate a person and then authorise an action such as logging onto a website, accessing a document, etc.)

A card is either issued by the identity provider to a user, or a user can self-issue a card. Each card holds a number of ‘Claims’ associated with that user, which are subsequently used to identify them when the card is used. These claims include identifiers such as name, email address, etc. When a user needs to use a card with a supporting application (relying party) to access a resource (such as a secure2trust protected document or a website that supports logon through information cards), the CardSpace desktop interface opens and the user can choose a card to identify themselves.

Windows Server 2008 Security Resource Kit.Pdf

PUBLISHED BY Microsoft Press, A Division of Microsoft Corporation, One Microsoft Way, Redmond, Washington 98052-6399. Copyright © 2008 by Jesper M. Johansson. All rights reserved. No part of the contents of this book may be reproduced or transmitted in any form or by any means without the written permission of the publisher. Library of Congress Control Number: 2008920563. Printed and bound in the United States of America. 1 2 3 4 5 6 7 8 9 QWT 3 2 1 0 9 8. Distributed in Canada by H.B. Fenn and Company Ltd. A CIP catalogue record for this book is available from the British Library. Microsoft Press books are available through booksellers and distributors worldwide. For further information about international editions, contact your local Microsoft Corporation office or contact Microsoft Press International directly at fax (425) 936-7329. Visit our Web site at www.microsoft.com/mspress. Send comments to [email protected]. Microsoft, Microsoft Press, Active Directory, ActiveX, Authenticode, bCentral, BitLocker, DirectX, Excel, ForeFront, Hotmail, Internet Explorer, MSDN, MSN, Outlook, PowerPoint, SharePoint, SQL Server, Visio, Visual Basic, Visual Studio, Windows, Windows CardSpace, Windows Live, Windows Media, Windows Mobile, Windows NT, Windows PowerShell, Windows Server, Windows Server System, Windows Vista, Xbox, and Xbox Live are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries. Other product and company names mentioned herein may be the trademarks of their respective owners. The example companies, organizations, products, domain names, e-mail addresses, logos, people, places, and events depicted herein are fictitious. No association with any real company, organization, product, domain name, e-mail address, logo, person, place, or event is intended or should be inferred.