Fast Generation of Provable Primes Using Search in Arithmetic Progressions

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


X9.80–2005 Prime Number Generation, Primality Testing, and Primality Certificates

This is a preview of "ANSI X9.80:2005". American National Standard for Financial Services X9.80–2005 Prime Number Generation, Primality Testing, and Primality Certificates Accredited Standards Committee X9, Incorporated Financial Industry Standards Date Approved: August 15, 2005 American National Standards Institute American National Standards, Technical Reports and Guides developed through the Accredited Standards Committee X9, Inc., are copyrighted. Copying these documents for personal or commercial use outside X9 membership agreements is prohibited without express written permission of the Accredited Standards Committee X9, Inc. For additional information please contact ASC X9, Inc., P.O. Box 4035, Annapolis, Maryland 21403. ANS X9.80–2005 Foreword Approval of an American National Standard requires verification by ANSI that the requirements for due process, consensus, and other criteria for approval have been met by the standards developer. Consensus is established when, in the judgment of the ANSI Board of Standards Review, substantial agreement has been reached by directly and materially affected interests. Substantial agreement means much more than a simple majority, but not necessarily unanimity. Consensus requires that all views and objections be considered, and that a concerted effort be made toward their resolution. The use of American National Standards is completely voluntary; their existence does not in any respect preclude anyone, whether he has approved the standards or not, from manufacturing, marketing, purchasing, or using products, processes, or procedures not conforming to the standards.

An Analysis of Primality Testing and Its Use in Cryptographic Applications

An Analysis of Primality Testing and Its Use in Cryptographic Applications Jake Massimo Thesis submitted to the University of London for the degree of Doctor of Philosophy Information Security Group Department of Information Security Royal Holloway, University of London 2020 Declaration These doctoral studies were conducted under the supervision of Prof. Kenneth G. Paterson. The work presented in this thesis is the result of original research carried out by myself, in collaboration with others, whilst enrolled in the Department of Mathematics as a candidate for the degree of Doctor of Philosophy. This work has not been submitted for any other degree or award in any other university or educational establishment. Jake Massimo April, 2020 Abstract Due to their fundamental utility within cryptography, prime numbers must be easy to both recognise and generate. For this, we depend upon primality testing. Both used as a tool to validate prime parameters, or as part of the algorithm used to generate random prime numbers, primality tests are found near universally within a cryptographer's tool-kit. In this thesis, we study in depth primality tests and their use in cryptographic applications. We first provide a systematic analysis of the implementation landscape of primality testing within cryptographic libraries and mathematical software. We then demonstrate how these tests perform under adversarial conditions, where the numbers being tested are not generated randomly, but instead by a possibly malicious party. We show that many of the libraries studied provide primality tests that are not prepared for testing on adversarial input, and therefore can declare composite numbers as being prime with a high probability.

Sequences of Numbers Obtained by Digit and Iterative Digit Sums of Sophie Germain Primes and Its Variants

Global Journal of Pure and Applied Mathematics. ISSN 0973-1768 Volume 12, Number 2 (2016), pp. 1473-1480 © Research India Publications http://www.ripublication.com Sequences of numbers obtained by digit and iterative digit sums of Sophie Germain primes and its variants Sheila A. Bishop (1), Hilary I. Okagbue (1, corresponding author), Muminu O. Adamu (2) and Funminiyi A. Olajide (3). (1) Department of Mathematics, Covenant University, Canaanland, Ota, Nigeria. (2) Department of Mathematics, University of Lagos, Akoka, Lagos, Nigeria. (3) Department of Computer and Information Sciences, Covenant University, Canaanland, Ota, Nigeria. Abstract Sequences were generated by the digit and iterative digit sums of Sophie Germain and Safe primes and their variants. The results of the digit and iterative digit sum of Sophie Germain and Safe primes were almost the same. The same applied to the square and cube of the respective primes. Also, the results of the digit and iterative digit sum of primes that are not Sophie Germain are the same with the primes that are not Safe. The results of the digit and iterative digit sum of prime that are either Sophie Germain or Safe are like the combination of the results of the respective primes when considered separately. Keywords: Sophie Germain prime, Safe prime, digit sum, iterative digit sum. Introduction In number theory, many types of primes exist; one of such is the Sophie Germain prime. A prime number p is said to be a Sophie Germain prime if 2p + 1 is also prime. A prime number q = 2p + 1 is known as a safe prime. Sophie Germain primes were named after the famous French mathematician, physicist and philosopher Sophie Germain (1776-1831).
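The definitions in this excerpt can be illustrated with a short Python sketch. It is only an illustration, not code from the paper: the function names (`is_prime`, `is_sophie_germain`, `digit_sum`, `iterative_digit_sum`) are hypothetical, and the primality check is plain trial division, which is adequate only for small inputs.

```python
def is_prime(n: int) -> bool:
    """Trial-division primality check (fine for small n only)."""
    if n < 2:
        return False
    if n < 4:
        return True  # 2 and 3
    if n % 2 == 0 or n % 3 == 0:
        return False
    d = 5
    while d * d <= n:
        if n % d == 0 or n % (d + 2) == 0:
            return False
        d += 6
    return True


def is_sophie_germain(p: int) -> bool:
    """p is a Sophie Germain prime if p and 2p + 1 are both prime."""
    return is_prime(p) and is_prime(2 * p + 1)


def digit_sum(n: int) -> int:
    """Sum of the decimal digits of n."""
    return sum(int(ch) for ch in str(n))


def iterative_digit_sum(n: int) -> int:
    """Apply digit_sum repeatedly until a single digit remains."""
    while n >= 10:
        n = digit_sum(n)
    return n


if __name__ == "__main__":
    for p in range(2, 100):
        if is_sophie_germain(p):
            q = 2 * p + 1  # the matching safe prime
            print(p, q, digit_sum(p), iterative_digit_sum(p))
```

For each Sophie Germain prime p below 100, the loop prints p, the corresponding safe prime q = 2p + 1, and the two digit sums of p.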

Cryptanalysis of Public Key Cryptosystems Abderrahmane Nitaj

Cryptanalysis of Public Key Cryptosystems Abderrahmane Nitaj To cite this version: Abderrahmane Nitaj. Cryptanalysis of Public Key Cryptosystems. Cryptography and Security [cs.CR]. Université de Caen Normandie, 2016. tel-02321087 HAL Id: tel-02321087 https://hal-normandie-univ.archives-ouvertes.fr/tel-02321087 Submitted on 20 Oct 2019 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Habilitation thesis (Mémoire d'habilitation à diriger des recherches). Specialty: Mathematics. Prepared at the Université de Caen Normandie. Cryptanalysis of Public Key Cryptosystems. Presented and defended by Abderrahmane NITAJ. Publicly defended on 1 December 2016 before a jury composed of: Mr Thierry BERGER, Professor, Université de Limoges (Reviewer); Mr Marc GIRAULT, Researcher (HDR), Orange Labs, Caen (Examiner); Mr Marc JOYE, Researcher (HDR), NXP Semiconductors (Reviewer); Mr Fabien LAGUILLAUMIE, Professor, Université de Lyon 1 (Reviewer); Mr Denis SIMON, Professor, Université de Caen (Examiner); Mme Brigitte VALLEE, Research Director, CNRS (Examiner). Thesis prepared at the Laboratoire de Mathématiques Nicolas Oresme (LMNO). Contents: Acknowledgements; List of Publications; 1 Introduction; 2 Cryptanalysis of RSA; 2.1 Introduction; 2.2 Continued Fractions

Primality Testing

Syracuse University SURFACE Electrical Engineering and Computer Science - Technical Reports College of Engineering and Computer Science 6-1992 Primality Testing Per Brinch Hansen Syracuse University, School of Computer and Information Science, [email protected] Follow this and additional works at: https://surface.syr.edu/eecs_techreports Part of the Computer Sciences Commons Recommended Citation Hansen, Per Brinch, "Primality Testing" (1992). Electrical Engineering and Computer Science - Technical Reports. 169. https://surface.syr.edu/eecs_techreports/169 This Report is brought to you for free and open access by the College of Engineering and Computer Science at SURFACE. It has been accepted for inclusion in Electrical Engineering and Computer Science - Technical Reports by an authorized administrator of SURFACE. For more information, please contact [email protected]. SU-CIS-92-13 Primality Testing Per Brinch Hansen June 1992 School of Computer and Information Science Syracuse University Suite 4-116, Center for Science and Technology Syracuse, NY 13244-4100 Primality Testing PER BRINCH HANSEN Syracuse University, Syracuse, New York 13244 June 1992 Copyright © 1992 Per Brinch Hansen This tutorial describes the Miller-Rabin method for testing the primality of large integers. The method is illustrated by a Pascal algorithm. The performance of the algorithm was measured on a Computing Surface. Categories and Subject Descriptors: G.3 [Probability and Statistics]: Probabilistic algorithms (Monte Carlo) General Terms: Algorithms Additional Key Words and Phrases: Primality testing CONTENTS INTRODUCTION 1. FERMAT'S THEOREM 2. THE FERMAT TEST 3. QUADRATIC REMAINDERS 4. THE MILLER-RABIN TEST 5. A PROBABILISTIC ALGORITHM 6. COMPLEXITY 7. EXPERIMENTS 8. SUMMARY ACKNOWLEDGEMENTS REFERENCES INTRODUCTION This tutorial describes a probabilistic method for testing the primality of large integers.

Detecting a Regularity in the Generation and Utilization of Primes in the Multiplicative Number Theory

http://www.scirp.org/journal/ns Natural Science, 2019, Vol. 11, (No. 6), pp: 187-196 Detecting a Regularity in the Generation and Utilization of Primes in the Multiplicative Number Theory Silviu Guiasu Department of Mathematics and Statistics, York University, Toronto, Canada Correspondence to: Silviu Guiasu. Keywords: Goldbach’s Conjecture, Symmetric Prime Cousins, Systemic Approach in Number Theory, Parallel System Covering Integers with Primes, Euler’s Formula for the Product of Reciprocals of Primes, Formula for the Exact Number of Primes Less than or Equal to an Arbitrary Bound Received: May 15, 2019 Accepted: June 22, 2019 Published: June 25, 2019 Copyright © 2019 by author(s) and Scientific Research Publishing Inc. This work is licensed under the Creative Commons Attribution International License (CC BY 4.0). http://creativecommons.org/licenses/by/4.0/ Open Access ABSTRACT If Goldbach’s conjecture is true, then for each prime number p there is at least one pair of primes symmetric with respect to p and whose sum is 2p. In the multiplicative number theory, covering the positive integers with primes, during the prime factorization, may be viewed as being the outcome of a parallel system which functions properly if and only if Euler’s formula of the product of the reciprocals of the primes is true. An exact formula for the number of primes less than or equal to an arbitrary bound is given. This formula may be implemented using Wolfram’s computer package Mathematica. 1. INTRODUCTION The prime numbers are the positive integers that can be divided only by 1 and by themselves.

An Efficient Variant of Boneh-Gentry-Hamburg's Identity-Based Encryption Without Pairing

University of Wollongong Research Online Faculty of Engineering and Information Sciences - Faculty of Engineering and Information Sciences Papers: Part A 2015 An efficient variant of Boneh-Gentry-Hamburg's identity-based encryption without pairing Ibrahim Elashry University of Wollongong, [email protected] Yi Mu University of Wollongong, [email protected] Willy Susilo University of Wollongong, [email protected] Publication Details Elashry, I., Mu, Y. & Susilo, W. (2015). An efficient variant of Boneh-Gentry-Hamburg's identity-based encryption without pairing. Lecture Notes in Computer Science, 8909 257-268. Research Online is the open access institutional repository for the University of Wollongong. For further information contact the UOW Library: [email protected] Keywords boneh, variant, efficient, gentry, hamburg, pairing, identity, encryption, without Disciplines Engineering | Science and Technology Studies This journal article is available at Research Online: http://ro.uow.edu.au/eispapers/4210 An Efficient Variant of Boneh-Gentry-Hamburg's Identity-based Encryption without Pairing Ibrahim Elashry, Yi Mu and Willy Susilo Centre for Computer and Information Security Research School of Computer Science and Software Engineering University of Wollongong, Australia Email: [email protected]; fymu, [email protected] Abstract. Boneh, Gentry and Hamburg presented an encryption system known as BasicIBE without incorporating pairings. This system has short ciphertext size but this comes at the cost of less time-efficient encryption / decryption algorithms in which their processing time increases drastically with the message length.

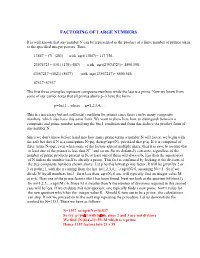

Factoring of Large Numbers

FACTORING OF LARGE NUMBERS It is well known that any number N can be represented as the product of a finite number of primes taken to the specified integer powers. Thus: 13867 = 7^2 × 283 with sqrt(13867) = 117.756.. 23974723 = 151 × 179 × 887 with sqrt(23974723) = 4896.398.. 43567217 = 5021 × 8677 with sqrt(43567217) = 6600.546.. 67537 = 67537 The first three examples represent composite numbers while the last is a prime. Now we know from some of our earlier notes that all primes above p = 3 have the form p = 6n ± 1, where n = 1, 2, 3, 4, … This is a necessary but not sufficient condition for primes since there can be many composite numbers which also have this same form. We want to show here how to distinguish between a composite and prime number satisfying the 6n ± 1 condition and from this deduce the product form of any number N. Since we don’t know beforehand into how many prime terms a number N will factor, we begin with the safe bet that if N is a semi-prime N = pq, then p < sqrt(N), provided that p < q. If it is composed of three terms N = pqr, even when some of the factors appear multiple times, then it is safe to assume that at least one of the primes is less than N^(1/3) and so on. So we definitely can state, regardless of the number of prime products present in N, at least one of them will always be less than the square-root of N unless the number itself is already a prime.
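The two observations in this excerpt (every prime above 3 has the form 6n ± 1, and a composite N always has a prime factor no larger than sqrt(N)) are enough for a simple trial-division factoriser. The Python sketch below is an illustration under those assumptions, not the method of the excerpted note; the function name `factor` is hypothetical.

```python
def factor(n: int) -> list[int]:
    """Prime factors of n with multiplicity, by trial division.

    After removing 2 and 3, only candidates of the form 6k - 1 and
    6k + 1 are tried, and the search stops once d * d exceeds what is
    left of n, because any remaining cofactor must then be prime.
    """
    factors = []
    for p in (2, 3):
        while n % p == 0:
            factors.append(p)
            n //= p
    d = 5  # 6*1 - 1; the paired candidate is d + 2 = 6*1 + 1
    while d * d <= n:
        for c in (d, d + 2):
            while n % c == 0:
                factors.append(c)
                n //= c
        d += 6
    if n > 1:
        factors.append(n)  # leftover cofactor is prime
    return factors


if __name__ == "__main__":
    for n in (13867, 23974723, 43567217, 67537):
        print(n, factor(n))
```

Run on the four numbers quoted in the excerpt, the sketch reproduces the stated factorisations and returns 67537 unchanged, consistent with it being prime. Composite candidates of the form 6k ± 1 (such as 25 or 35) are harmless here because their prime factors have already been divided out by the time they are tried.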

Algorithms in Quantum Computation

Quantum Factoring and Search Algorithms Quantum Information Seminar Friday, Sep. 17, 1999 Ashok Muthukrishnan Rochester Center for Quantum Information (RCQI) § 1. Quantum Algorithm for Factorisation (1994): A. Factorising and cryptography; B. Relation of factorisation to period-finding; C. Quantum algorithm for period-finding; D. Efficiency of the period-finding algorithm. § 2. Quantum Algorithm for Database Search (1997): A. Database search and its uses; B. The quantum search algorithm; C. Related problems and algorithms. § 1. Quantum Algorithm for Factorisation In 1994, at the 35th Annual Symposium on the Foundations of Computer Science in Los Alamitos, California, Peter Shor of Bell Laboratories announced his discovery of an algorithm for factorising numbers efficiently – one that would factorise a log N (= K) -digit number in O[poly(K)] steps, O[T(K)] meaning asymptotically less than T(K). However, the execution of the algorithm would require the coherent state evolution and measurement collapse of quantum mechanics. In classical complexity theory, the factorising problem is not at present known to be NP-hard, which means that an efficient algorithm for factorising does not imply the same for all problems in the NP class [see Gy79 for a discussion of complexity classes]. Nonetheless, Shor’s algorithm has been hailed as a big achievement for two reasons. One is that his finding is the first clear demonstration of a problem for which a quantum computer has a distinct advantage over a classical one. The second reason is the factorising problem itself, an efficient algorithm for which has rather disconcerting implications for present-day cryptography: the extreme difficulty of factorising large numbers (the best computer today would take about 428,000 years to factorise a 200-digit number) is precisely what secures the secrecy of most encoded messages transmitted today.

Reported As Prime 4

Generation of Titanic Prime Numbers through High Performance Computing Infrastructure Mentor: Mr. Je’aime Powell (ECSU) Elizabeth City State University Elizabeth City, NC 27909 Authors: John Bell (MVSU), Matravia Seymore (ECSU), Joseph Jackson (MVSU) Abstract - The focus of the project was to generate a titanic prime number by using high performance computing resources. What makes prime numbers significant is their use in modern computers for the encryption of data. The generation of primes are particularly computing intensive the larger the prime. This makes titanic primes (thousand digit prime numbers) a perfect candidate for distribution through grid infrastructure. In order for the demands of the project to be met, a prime number generator had to be created. Multiple computer languages such as Javascript, Java, and C++ were tested for use in an attempt to develop a generator. Functionality was verified first through the terminal and then by job submission to Elizabeth City State University’s VikeGrid (a Condor based computer cluster). Overall, the results indicated the successes of the project and the improvements needed for [...] The process of seeking and discovering new prime numbers became very complicated as the numbers grew larger. Unique patterns found in smaller primes were harder to detect as well. This complication led to the development of numerous theories, algorithms, and formulas proposed that could verify the primality of a number. In order to discover an unknown prime number, some used visualization techniques. There are many visualization resources available such as Benford’s Law, Prime Number Theorem, Euclid of Alexandria, Pierre de Fermat, and Eratosthenes. Currently, there is no official formula for all prime numbers.

Notices of the American Mathematical Society

NOTICES OF THE AMERICAN MATHEMATICAL SOCIETY VOLUME 12, NUMBER 8 ISSUE NO. 86 DECEMBER, 1965 SPECIAL ISSUE: ASSISTANTSHIPS AND FELLOWSHIPS IN MATHEMATICS IN 1966-1967 Edited by John W. Green and Gordon L. Walker CONTENTS: Assistantships and Fellowships in Mathematics in 1966-1967; Selected List of Stipends; Tax Status of Grants; Index of Abstracts, Volume 12; Index, Volume 12. The Notices of the American Mathematical Society is published by the Society in January, February, April, June, August, October, November and December. Price per annual volume is $7.00. Price per copy $2.00. Special price for copies sold at registration desks of meetings of the Society, $1.00 per copy. Subscriptions, orders for back numbers (back issues of the last two years only are available) and inquiries should be addressed to the American Mathematical Society, P.O. Box 6248, Providence, Rhode Island 02904. Second-class postage paid at Providence, Rhode Island, and additional mailing offices. Authorization is granted under the authority of the act of August 24, 1912, as amended by the act of August 4, 1947 (Sec. 34.21, P. L. and R.). Accepted for mailing at the special rate of Postage provided for in section 34.40, paragraph (d). Copyright © 1965 by the American Mathematical Society. Printed in the United States of America. ASSISTANTSHIPS AND FELLOWSHIPS IN MATHEMATICS IN 1966-1967 This seventh annual Special Issue of the Notices contains listings of assistantships and fellowships available in mathematics for 1966-1967 in 256 departments of mathematics, statistics, computer sciences and related mathematical disciplines.

Efficient Non-Malleable Commitment Schemes

Efficient Non-Malleable Commitment Schemes Marc Fischlin and Roger Fischlin Fachbereich Mathematik (AG 7.2) Johann Wolfgang Goethe-Universität Frankfurt am Main Postfach 111932 D-60054 Frankfurt/Main, Germany {marc,fischlin}@mi.informatik.uni-frankfurt.de http://www.mi.informatik.uni-frankfurt.de/ Abstract. We present efficient non-malleable commitment schemes based on standard assumptions such as RSA and Discrete-Log, and under the condition that the network provides publicly available RSA or Discrete-Log parameters generated by a trusted party. Our protocols require only three rounds and a few modular exponentiations. We also discuss the difference between the notion of non-malleable commitment schemes used by Dolev, Dwork and Naor [DDN00] and the one given by Di Crescenzo, Ishai and Ostrovsky [DIO98]. 1 Introduction Loosely speaking, a commitment scheme is non-malleable if one cannot transform the commitment of another person's secret into one of a related secret. Such non-malleable schemes are for example important for auctions over the Internet: it is necessary that one cannot generate a valid commitment of a bid b + 1 after seeing the commitment of an unknown bid b of another participant. Unfortunately, this property is not achieved by commitment schemes in general, because ordinary schemes are only designated to hide the secret. Even worse, most known commitment schemes are in fact provably malleable. The concept of non-malleability has been introduced by Dolev et al. [DDN00]. They present a non-malleable public-key encryption scheme (based on any trapdoor permutation) and a non-malleable commitment scheme with logarithmically many rounds based on any one-way function.