A Learning Algorithm Based on Λ-Policy Iteration and Its Application

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Task: TET Tetris Attack Stage II

Task: TET Tetris Attack Stage II. Day 2. Source file tet.* 08.02.2007 Available memory: 32 MB. A puzzle called “Tetris Attack” has lately become a very popular game in Byteotia. The game itself is highly sophisticated, so we shall only introduce its simplified rules: the player is givena stack of 2n elements, placed one on another and marked with n different symbols. Exactly two elements of the stack are marked with each symbol. A player’s move consists in swapping two neighbouring elements, ie. interchanging them. If, as a result of the swap, there are some two neighbouring elements marked with the same symbol, they are both removed from the stack. Then all the elements that have been above them fall down in consequence and may very well cause another removals. The player’s goal is emptying the stack in the least possible number of moves. Task Write a programme that: • reads the description of the initial stack content from the standard input, • finds a solution with the minimum number of moves possible, • writes out the outcome to the standard output. Input In the first line of the standard input there is one integer n,1 ≤ n ≤ 50000. The following 2n lines describe the th th initial content of the stack. The (i + 1) line contains one integer ai — the symbol which the i (1 ≤ ai ≤ n) element from the bottom is marked with. Each symbol appears in the stack exactly twice. Moreover, no two identical symbols neighbour initially. The test data is well chosen so that a solution with no more than 1000000 moves exists. -

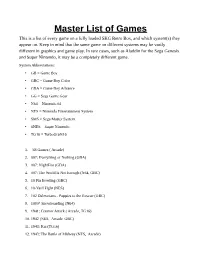

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game. System Abbreviations: • GB = Game Boy • GBC = Game Boy Color • GBA = Game Boy Advance • GG = Sega Game Gear • N64 = Nintendo 64 • NES = Nintendo Entertainment System • SMS = Sega Master System • SNES = Super Nintendo • TG16 = TurboGrafx16 1. '88 Games ( Arcade) 2. 007: Everything or Nothing (GBA) 3. 007: NightFire (GBA) 4. 007: The World Is Not Enough (N64, GBC) 5. 10 Pin Bowling (GBC) 6. 10-Yard Fight (NES) 7. 102 Dalmatians - Puppies to the Rescue (GBC) 8. 1080° Snowboarding (N64) 9. 1941: Counter Attack ( Arcade, TG16) 10. 1942 (NES, Arcade, GBC) 11. 1943: Kai (TG16) 12. 1943: The Battle of Midway (NES, Arcade) 13. 1944: The Loop Master ( Arcade) 14. 1999: Hore, Mitakotoka! Seikimatsu (NES) 15. 19XX: The War Against Destiny ( Arcade) 16. 2 on 2 Open Ice Challenge ( Arcade) 17. 2010: The Graphic Action Game (Colecovision) 18. 2020 Super Baseball ( Arcade, SNES) 19. 21-Emon (TG16) 20. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB) 21. 3 Count Bout ( Arcade) 22. 3 Ninjas Kick Back (SNES, Genesis, Sega CD) 23. 3-D Tic-Tac-Toe (Atari 2600) 24. 3-D Ultra Pinball: Thrillride (GBC) 25. 3-D WorldRunner (NES) 26. 3D Asteroids (Atari 7800) 27. -

Video Game Archive: Nintendo 64

Video Game Archive: Nintendo 64 An Interactive Qualifying Project submitted to the Faculty of WORCESTER POLYTECHNIC INSTITUTE in partial fulfilment of the requirements for the degree of Bachelor of Science by James R. McAleese Janelle Knight Edward Matava Matthew Hurlbut-Coke Date: 22nd March 2021 Report Submitted to: Professor Dean O’Donnell Worcester Polytechnic Institute This report represents work of one or more WPI undergraduate students submitted to the faculty as evidence of a degree requirement. WPI routinely publishes these reports on its web site without editorial or peer review. Abstract This project was an attempt to expand and document the Gordon Library’s Video Game Archive more specifically, the Nintendo 64 (N64) collection. We made the N64 and related accessories and games more accessible to the WPI community and created an exhibition on The History of 3D Games and Twitch Plays Paper Mario, featuring the N64. 2 Table of Contents Abstract…………………………………………………………………………………………………… 2 Table of Contents…………………………………………………………………………………………. 3 Table of Figures……………………………………………………………………………………………5 Acknowledgements……………………………………………………………………………………….. 7 Executive Summary………………………………………………………………………………………. 8 1-Introduction…………………………………………………………………………………………….. 9 2-Background………………………………………………………………………………………… . 11 2.1 - A Brief of History of Nintendo Co., Ltd. Prior to the Release of the N64 in 1996:……………. 11 2.2 - The Console and its Competitors:………………………………………………………………. 16 Development of the Console……………………………………………………………………...16 -

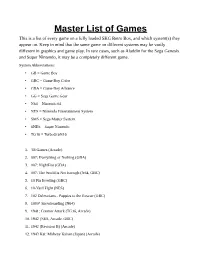

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game. System Abbreviations: • GB = Game Boy • GBC = Game Boy Color • GBA = Game Boy Advance • GG = Sega Game Gear • N64 = Nintendo 64 • NES = Nintendo Entertainment System • SMS = Sega Master System • SNES = Super Nintendo • TG16 = TurboGrafx16 1. '88 Games (Arcade) 2. 007: Everything or Nothing (GBA) 3. 007: NightFire (GBA) 4. 007: The World Is Not Enough (N64, GBC) 5. 10 Pin Bowling (GBC) 6. 10-Yard Fight (NES) 7. 102 Dalmatians - Puppies to the Rescue (GBC) 8. 1080° Snowboarding (N64) 9. 1941: Counter Attack (TG16, Arcade) 10. 1942 (NES, Arcade, GBC) 11. 1942 (Revision B) (Arcade) 12. 1943 Kai: Midway Kaisen (Japan) (Arcade) 13. 1943: Kai (TG16) 14. 1943: The Battle of Midway (NES, Arcade) 15. 1944: The Loop Master (Arcade) 16. 1999: Hore, Mitakotoka! Seikimatsu (NES) 17. 19XX: The War Against Destiny (Arcade) 18. 2 on 2 Open Ice Challenge (Arcade) 19. 2010: The Graphic Action Game (Colecovision) 20. 2020 Super Baseball (SNES, Arcade) 21. 21-Emon (TG16) 22. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB) 23. 3 Count Bout (Arcade) 24. 3 Ninjas Kick Back (SNES, Genesis, Sega CD) 25. 3-D Tic-Tac-Toe (Atari 2600) 26. 3-D Ultra Pinball: Thrillride (GBC) 27. -

Full Games List Pandora DX 3000 in 1

www.myarcade.com.au Ph 0412227168 Full Games List Pandora DX 3000 in 1 1. 10-YARD FIGHT – (1/2P) – (1464) 38. ACTION 52 – (1/2P) – (2414) 2. 16 ZHANG MA JIANG – (1/2P) – 39. ACTION FIGHTER – (1/2P) – (1093) (2391) 40. ACTION HOLLYWOOOD – (1/2P) – 3. 1941 : COUNTER ATTACK – (1/2P) – (362) (860) 41. ADDAMS FAMILY VALUES – (1/2P) – 4. 1942 – (1/2P) – (861) (2431) 5. 1942 – AIR BATTLE – (1/2P) – (1140) 42. ADVENTUROUS BOY-MAO XIAN 6. 1943 : THE BATTLE OF MIDWAY – XIAO ZI – (1/2P) – (2266) (1/2P) – (862) 43. AERO FIGHTERS – (1/2P) – (927) 7. 1943 KAI : MIDWAY KAISEN – (1/2P) 44. AERO FIGHTERS 2 – (1/2P) – (810) – (863) 45. AERO FIGHTERS 3 – (1/2P) – (811) 8. 1943: THE BATTLE OF MIDWAY – 46. AERO FIGHTERS 3 BOSS – (1/2P) – (1/2P) – (1141) (812) 9. 1944 : THE LOOP MASTER – (1/2P) 47. AERO FIGHTERS- UNLIMITED LIFE – (780) – (1/2P) – (2685) 10. 1945KIII – (1/2P) – (856) 48. AERO THE ACRO-BAT – (1/2P) – 11. 19XX : THE WAR AGAINST DESTINY (2222) – (1/2P) – (864) 49. AERO THE ACRO-BAT 2 – (1/2P) – 12. 2 ON 2 OPEN ICE CHALLENGE – (2624) (1/2P) – (1415) 50. AERO THE ACRO-BAT 2 (USA) – 13. 2020 SUPER BASEBALL – (1/2P) – (1/2P) – (2221) (1215) 51. AFTER BURNER II – (1/2P) – (1142) 14. 3 COUNT BOUT – (1/2P) – (1312) 52. AGENT SUPER BOND – (1/2P) – 15. 4 EN RAYA – (1/2P) – (1612) (2022) 16. 4 FUN IN 1 – (1/2P) – (965) 53. AGGRESSORS OF DARK KOMBAT – 17. 46 OKUNEN MONOGATARI – (1/2P) (1/2P) – (106) – (2711) 54. -

Download 80 PLUS 4983 Horizontal Game List

4 player + 4983 Horizontal 10-Yard Fight (Japan) advmame 2P 10-Yard Fight (USA, Europe) nintendo 1941 - Counter Attack (Japan) supergrafx 1941: Counter Attack (World 900227) mame172 2P sim 1942 (Japan, USA) nintendo 1942 (set 1) advmame 2P alt 1943 Kai (Japan) pcengine 1943 Kai: Midway Kaisen (Japan) mame172 2P sim 1943: The Battle of Midway (Euro) mame172 2P sim 1943 - The Battle of Midway (USA) nintendo 1944: The Loop Master (USA 000620) mame172 2P sim 1945k III advmame 2P sim 19XX: The War Against Destiny (USA 951207) mame172 2P sim 2010 - The Graphic Action Game (USA, Europe) colecovision 2020 Super Baseball (set 1) fba 2P sim 2 On 2 Open Ice Challenge (rev 1.21) mame078 4P sim 36 Great Holes Starring Fred Couples (JU) (32X) [!] sega32x 3 Count Bout / Fire Suplex (NGM-043)(NGH-043) fba 2P sim 3D Crazy Coaster vectrex 3D Mine Storm vectrex 3D Narrow Escape vectrex 3-D WorldRunner (USA) nintendo 3 Ninjas Kick Back (U) [!] megadrive 3 Ninjas Kick Back (U) supernintendo 4-D Warriors advmame 2P alt 4 Fun in 1 advmame 2P alt 4 Player Bowling Alley advmame 4P alt 600 advmame 2P alt 64th. Street - A Detective Story (World) advmame 2P sim 688 Attack Sub (UE) [!] megadrive 720 Degrees (rev 4) advmame 2P alt 720 Degrees (USA) nintendo 7th Saga supernintendo 800 Fathoms mame172 2P alt '88 Games mame172 4P alt / 2P sim 8 Eyes (USA) nintendo '99: The Last War advmame 2P alt AAAHH!!! Real Monsters (E) [!] supernintendo AAAHH!!! Real Monsters (UE) [!] megadrive Abadox - The Deadly Inner War (USA) nintendo A.B. -

Juuma Houkan Accele Brid Ace Wo Nerae! Acrobat Mission

3X3 EYES - JUUMA HOUKAN ACCELE BRID ACE WO NERAE! ACROBAT MISSION ACTRAISER HOURAI GAKUEN NO BOUKEN! - TENKOUSEI SCRAMBLE AIM FOR THE ACE! ALCAHEST THE AMAZING SPIDER-MAN - LETHAL FOES ANGELIQUE ARABIAN NIGHTS - SABAKU NO SEIREI-O ASHITA NO JOE CYBERNATOR BAHAMUT LAGOON BALL BULLET GUN BASTARD!! BATTLE SOCCER - FIELD NO HASHA ANCIENT MAGIC - BAZOO! MAHOU SEKAI BING BING! BINGO BISHOUJO SENSHI SAILOR MOON - ANOTHER STORY SAILOR MOON R BISHOUJO SENSHI SAILOR MOON SUPER S - FUWA FUWA PANIC BRANDISH 2 - THE PLANET BUSTER BREATH OF FIRE II - SHIMEI NO KO BS CHRONO TRIGGER - MUSIC LIBRARY CAPTAIN TSUBASA III - KOUTEI NO CHOUSEN CAPTAIN TSUBASA V - HASH NO SHOUGOU CAMPIONE CARAVAN SHOOTING COLLECTION CHAOS SEED - FUUSUI KAIROKI CHOU MAHOU TAIRIKU WOZZ CHRONO TRIGGER CLOCK TOWER CLOCKWERX CRYSTAL BEANS FROM DUNGEON EXPLORER CU-ON-PA SFC CYBER KNIGHT CYBER KNIGHT II - CHIKYUU TEIKOKU NO YABOU CYBORG 009 DAI 3 JI SUPER ROBOT WARS DAI 4 JI SUPER ROBOT WARS DAIKAIJ MONOGATARI DARK HALF DARK LAW - THE MEANING OF DEATH DER LANGRISSER DIGITAL DEVIL STORY 2 - SHIN MEGAMI TENSEI II DONALD DUCK NO MAHOU NO BOUSHI DORAEMON 4 DO RE MI FANTASY - MILON NO DOKIDOKI DAIBOUKEN DOSSUN! GANSEKI BATTLE DR. MARIO DRAGON BALL Z - HYPER DIMENSION DRAGON BALL Z - CHOU SAIYA DENSETSU DRAGON BALL Z - SUPER BUTOUDEN DRAGON BALL Z - SUPER BUTOUDEN 3 DRAGON BALL Z - SUPER GOKUDEN - TOTSUGEKI HEN DRAGON BALL Z - SUPER GOKUDEN - KAKUSEI HEN DRAGON BALL Z - SUPER SAIYA DENSETSU DRAGON QUEST I AND II DRAGON QUEST III - SOSHITE DENSETU E... DRAGON QUEST V - TENKUU NO HANAYOME -

Full Arcade List OVER 2700 ARCADE CLASSICS 1

Full Arcade List OVER 2700 ARCADE CLASSICS 1. 005 54. Air Inferno 111. Arm Wrestling 2. 1 on 1 Government 55. Air Rescue 112. Armed Formation 3. 1000 Miglia: Great 1000 Miles 56. Airwolf 113. Armed Police Batrider Rally 57. Ajax 114. Armor Attack 4. 10-Yard Fight 58. Aladdin 115. Armored Car 5. 18 Holes Pro Golf 59. Alcon/SlaP Fight 116. Armored Warriors 6. 1941: Counter Attack 60. Alex Kidd: The Lost Stars 117. Art of Fighting / Ryuuko no 7. 1942 61. Ali Baba and 40 Thieves Ken 8. 1943 Kai: Midway Kaisen 62. Alien Arena 118. Art of Fighting 2 / Ryuuko no 9. 1943: The Battle of Midway 63. Alien Challenge Ken 2 10. 1944: The LooP Master 64. Alien Crush 119. Art of Fighting 3 - The Path of 11. 1945k III 65. Alien Invaders the Warrior / Art of Fighting - 12. 19XX: The War Against Destiny 66. Alien Sector Ryuuko no Ken Gaiden 13. 2 On 2 OPen Ice Challenge 67. Alien Storm 120. Ashura Blaster 14. 2020 SuPer Baseball 68. Alien Syndrome 121. ASO - Armored Scrum Object 15. 280-ZZZAP 69. Alien vs. Predator 122. Assault 16. 3 Count Bout / Fire SuPlex 70. Alien3: The Gun 123. Asterix 17. 30 Test 71. Aliens 124. Asteroids 18. 3-D Bowling 72. All American Football 125. Asteroids Deluxe 19. 4 En Raya 73. Alley Master 126. Astra SuPerStars 20. 4 Fun in 1 74. Alligator Hunt 127. Astro Blaster 21. 4-D Warriors 75. AlPha Fighter / Head On 128. Astro Chase 22. 64th. Street - A Detective Story 76. -

Arcade 4. 1943 Kai: Midway Kaisen (Japan) Arcade 5

1. 10-Yard Fight (Japan) arcade 2. 1941: Counter Attack (World 900227) arcade 3. 1942 (set 1) arcade 4. 1943 Kai: Midway Kaisen (Japan) arcade 5. 1943: The Battle of Midway (Euro) arcade 6. 1944: The Loop Master (USA 000620) arcade 7. 1945k III arcade 8. 19XX: The War Against Destiny (USA 951207) arcade 9. 2020 Super Baseball (set 1) arcade 10. 2 On 2 Open Ice Challenge (rev 1.21) arcade 11. 3 Count Bout / Fire Suplex (NGM-043)(NGH-043) arcade 12. 3D Crazy Coaster vectrex 13. 3D Mine Storm vectrex 14. 3D Narrow Escape vectrex 15. 3 Ninjas Kick Back (U) supernintend 16. 4-D Warriors arcade 17. 4 Fun in 1 arcade 18. 4 Player Bowling Alley arcade 19. 600 arcade 20. 64th. Street - A Detective Story (World) arcade 21. 720 Degrees (rev 4) arcade 22. 7th Saga supernintend 23. 7-Up's Spot - Spot Goes to Hollywood # SMD megadrive 24. 800 Fathoms arcade 25. '88 Games arcade 26. '99: The Last War arcade 27. AAAHH!!! Real Monsters (E) [!] supernintend 28. Abadox nintendo 29. A.B. Cop (World) (FD1094 317-0169b) arcade 30. A Boy and His Blob nintendo 31. Abscam arcade 32. Acrobatic Dog-Fight arcade 33. Acrobat Mission arcade 34. Act-Fancer Cybernetick Hyper Weapon (World revision 2) arcade 35. Action Fighter (, FD1089A 317-0018) arcade 36. Action Hollywood arcade 37. ActRaiser 2 (E) supernintend 38. ActRaiser supernintend 39. Addams Family - Pugsley's Scavenger Hunt supernintend 40. Addams Family, The (U) supernintend 41. Addams Family Values supernintend 42. Adventures of Batman & Robin megadrive 43. -

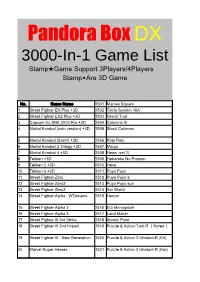

Pandora Box DX 3000-In-1 Games List

Pandora Box DX 3000-In-1 Game List Stamp★Game Support 3Players/4Players Stamp▲Are 3D Game No. Game Name 1501 Maniac Square 1 Street Fighter EX Plus ▲3D 1502 Tetris-System 16A 2 Street Fighter EX2 Plus ▲3D 1503 Grand Tour 3 Capcom Vs.SNK 2000 Pro ▲3D 1504 Columns III 4 Mortal Kombat (coin version) ▲3D 1505 Stack Columns 5 Mortal Kombat 2(set1) ▲3D 1506 Poto Poto 6 Mortal Kombat 3 Trilogy ▲3D 1507 Mouja 7 Mortal Kombat 4 ▲3D 1508 News (set 1) 8 Tekken ▲3D 1509 Hebereke No Popoon 9 Tekken 2 ▲3D 1510 Hexa 10 Tekken 3 ▲3D 1511 Puyo Puyo 11 Street Fighter Zero 1512 Puyo Puyo 2 12 Street Fighter Zero2 1513 Puyo Puyo sun 13 Street Fighter Zero3 1514 Xor World 14 Street Fighter Alpha : W'Dreams 1515 Hexion 15 Street Fighter Alpha 2 1516 Eto Monogatari 16 Street Fighter Alpha 3 1517 Land Maker 17 Street Fighter III 3rd Strike 1518 Atomic Point 18 Street Fighter III 2nd Impact 1519 Puzzle & Action:Tant-R (Korea) 19 Street Fighter III : New Generation 1520 Puzzle & Action 2 Ichidant-R (En) 20 Marvel Super Heroes 1521 Puzzle & Action 2 Ichidant-R (Kor) 21 Marvel Super Heroes Vs. St Fighter 1522 The Newzealand Story 22 Marvel Vs. Capcom : Super Heroes 1523 Puzzle Bobble 23 X-Men : Children oF the Atom 1524 Puzzle Bobble 2 24 X-Men Vs. Street Fighter 1525 Puzzle Bobble 3 25 Hyper Street Fighter II : AE 1526 Puzzle Bobble 4 26 Super Street Fighter II : New C 1527 Bust-A-Move Again 27 Super Street Fighter II Turbo 1528 Puzzle De Pon! 28 Super Street Fighter II X : GMC 1529 Dolmen 29 Street Fighter II : The World Warrior 1530 Magical Drop II 30 Street -

Nintendo Game Boy

Nintendo Game Boy Last Updated on September 26, 2021 Title Publisher Qty Box Man Comments 4 in 1: #1 Sachen 4-in-1 Fun Pak Interplay Productions 4-in-1 Funpak: Volume II Interplay Productions Addams Family, The Ocean Addams Family, The: Pugsley's Scavenger Hunt Ocean Adventure Island II: Aliens in Paradise, Hudson's Hudson Soft Adventure Island II: Aliens in Paradise, Hudson's: Electro Brain Rerelease Electro Brain Adventure Island, Hudson's Hudson Soft Adventure Island, Hudson's: Electro Brain Rerelease Electro Brain Adventures of Rocky and Bullwinkle and Friends, The THQ Adventures of Star Saver, The Taito Aerostar VIC Tokai Aerostar: Sunsoft Rerelease Sunsoft Aladdin, Disney's Virgin Interactive Aladdin, Disney's: THQ Rerelease THQ Alfred Chicken Mindscape Alien vs Predator: The Last of His Clan Activision Alien³ LJN All-Star Baseball '99 Acclaim Alleyway Nintendo Altered Space Sony Imagesoft Amazing Penguin Natsume Amazing Spider-Man 3, The: Invasion of the Spider-Slayers LJN Amazing Spider-Man, The LJN Amazing Tater Atlus Animaniacs Konami Arcade Classic #1: Asteroids & Missile Command Nintendo Arcade Classic #2: Centipede & Millipede Nintendo Arcade Classic No. 3: Galaga & Galaxian Nintendo Arcade Classic No. 4: Defender / Joust Nintendo Asteroids Accolade Atomic Punk Hudson Soft Attack of the Killer Tomatoes THQ Avenging Spirit Jaleco Balloon Kid Nintendo Barbie Game Girl Hi-Tech Express Bart Simpson's Escape From Camp Deadly Acclaim Baseball Nintendo Bases Loaded Jaleco Batman Sunsoft Batman Forever Acclaim Batman: Return of the -

5794 Games.Numbers

Table 1 Nintendo Super Nintendo Sega Genesis/ Master System Entertainment Sega 32X (33 Sega SG-1000 (68 Entertainment TurboGrafx-16/PC MAME Arcade (2959 Games) Mega Drive (782 (281 Games) System/NES (791 Games) Games) System/SNES (786 Engine (94 Games) Games) Games) Games) After Burner Ace of Aces 3 Ninjas Kick Back 10-Yard Fight (USA, Complete ~ After 2020 Super 005 1942 1942 Bank Panic (Japan) Aero Blasters (USA) (Europe) (USA) Europe) Burner (Japan, Baseball (USA) USA) Action Fighter Amazing Spider- Black Onyx, The 3 Ninjas Kick Back 1000 Miglia: Great 10-Yard Fight (USA, Europe) 6-Pak (USA) 1942 (Japan, USA) Man, The - Web of Air Zonk (USA) 1 on 1 Government (Japan) (USA) 1000 Miles Rally (World, set 1) (v1.2) Fire (USA) 1941: Counter 1943 Kai: Midway Addams Family, 688 Attack Sub 1943 - The Battle of 7th Saga, The 18 Holes Pro Golf BC Racers (USA) Bomb Jack (Japan) Alien Crush (USA) Attack Kaisen The (Europe) (USA, Europe) Midway (USA) (USA) 90 Minutes - 1943: The Battle of 1944: The Loop 3 Ninjas Kick Back 3-D WorldRunner Borderline (Japan, 1943mii Aerial Assault (USA) Blackthorne (USA) European Prime Ballistix (USA) Midway Master (USA) (USA) Europe) Goal (Europe) 19XX: The War Brutal Unleashed - 2 On 2 Open Ice A.S.P. - Air Strike 1945k III Against Destiny After Burner (World) 6-Pak (USA) 720 Degrees (USA) Above the Claw Castle, The (Japan) Battle Royale (USA) Challenge Patrol (USA) (USA 951207) (USA) Chaotix ~ 688 Attack Sub Chack'n Pop Aaahh!!! Real Blazing Lazers 3 Count Bout / Fire 39 in 1 MAME Air Rescue (Europe) 8 Eyes (USA) Knuckles' Chaotix 2020 Super Baseball (USA, Europe) (Japan) Monsters (USA) (USA) Suplex bootleg (Japan, USA) Abadox - The Cyber Brawl ~ AAAHH!!! Real Champion Baseball ABC Monday Night 3ds 4 En Raya 4 Fun in 1 Aladdin (Europe) Deadly Inner War Cosmic Carnage Bloody Wolf (USA) Monsters (USA) (Japan) Football (USA) (USA) (Japan, USA) 64th.