Toxtrac: a Fast and Robust Software for Tracking Organisms

Recommended publications

-

Cisco SCA BB Protocol Reference Guide

Cisco Service Control Application for Broadband Protocol Reference Guide Protocol Pack #60 August 02, 2018 Cisco Systems, Inc. www.cisco.com Cisco has more than 200 offices worldwide. Addresses, phone numbers, and fax numbers are listed on the Cisco website at www.cisco.com/go/offices. THE SPECIFICATIONS AND INFORMATION REGARDING THE PRODUCTS IN THIS MANUAL ARE SUBJECT TO CHANGE WITHOUT NOTICE. ALL STATEMENTS, INFORMATION, AND RECOMMENDATIONS IN THIS MANUAL ARE BELIEVED TO BE ACCURATE BUT ARE PRESENTED WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED. USERS MUST TAKE FULL RESPONSIBILITY FOR THEIR APPLICATION OF ANY PRODUCTS. THE SOFTWARE LICENSE AND LIMITED WARRANTY FOR THE ACCOMPANYING PRODUCT ARE SET FORTH IN THE INFORMATION PACKET THAT SHIPPED WITH THE PRODUCT AND ARE INCORPORATED HEREIN BY THIS REFERENCE. IF YOU ARE UNABLE TO LOCATE THE SOFTWARE LICENSE OR LIMITED WARRANTY, CONTACT YOUR CISCO REPRESENTATIVE FOR A COPY. The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB’s public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California. NOTWITHSTANDING ANY OTHER WARRANTY HEREIN, ALL DOCUMENT FILES AND SOFTWARE OF THESE SUPPLIERS ARE PROVIDED “AS IS” WITH ALL FAULTS. CISCO AND THE ABOVE-NAMED SUPPLIERS DISCLAIM ALL WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING, WITHOUT LIMITATION, THOSE OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THIS MANUAL, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES. -

CCIA Comments in ITU CWG-Internet OTT Open Consultation.Pdf

CCIA Response to the Open Consultation of the ITU Council Working Group on International Internet-related Public Policy Issues (CWG-Internet) on the “Public Policy considerations for OTTs” Summary. The Computer & Communications Industry Association welcomes this opportunity to present the views of the tech sector to the ITU’s Open Consultation of the CWG-Internet on the “Public Policy considerations for OTTs”.1 CCIA acknowledges the ITU’s expertise in the areas of international, technical standards development and spectrum coordination and its ambition to help improve access to ICTs to underserved communities worldwide. We remain supporters of the ITU’s important work within its current mandate and remit; however, we strongly oppose expanding the ITU’s work program to include Internet and content-related issues and Internet-enabled applications that are well beyond its mandate and core competencies. Furthermore, such an expansion would regrettably divert the ITU’s resources away from its globally-recognized core competencies. The Internet is an unparalleled engine of economic growth enabling commerce, social development and freedom of expression. Recent research notes the vast economic and societal benefits from Rich Interaction Applications (RIAs), a term that refers to applications that facilitate “rich interaction” such as photo/video sharing, money transferring, in-app gaming, location sharing, translation, and chat among individuals, groups and enterprises.2 Global GDP has increased US$5.6 trillion for every ten percent increase in the usage of RIAs across 164 countries over 16 years (2000 to 2015).3 However, these economic and societal benefits are at risk if RIAs are subjected to sweeping regulations. -

UNITED STATES BANKRUPTCY COURT DISTRICT of NEW JERSEY Caption in Compliance with D.N.J

Case 19-30256-VFP Doc 169 Filed 12/31/19 Entered 12/31/19 09:20:50 Desc Main Document Page 1 of 16 UNITED STATES BANKRUPTCY COURT DISTRICT OF NEW JERSEY Caption in Compliance with D.N.J. LBR 9004-19(b) OMNI AGENT SOLUTIONS, LLC 5955 De Soto Ave, Ste 100 Woodland Hills, CA 91367 (818) 906-8300 (818) 783-2737 Facsimile Scott M. Ewing ([email protected]) Case No.: 19-30256-VFP In Re: Chapter: 11 CTE 1 LLC, Judge: Vincent F. Papalia Debtor(s) CERTIFICATION OF SERVICE 1. I, Scott M. Ewing : X represent the Claims and Noticing Agent, in the above-captioned matters am the secretary/paralegal for __________________, who represents in this matter. am the in the above case and am representing myself. I caused the following pleadings and/or documents to be 2. On December 24, 2019 served on the parties listed in the chart below: Notice of Bid Deadline, Auction Date, and Sale Hearing for the Approval of the Sale of Certain Assets of the Debtor Free and Clear of Liens, Claims, and Interests1 Order Approving Sales Procedure Notice and Bidding Procedures [Docket No. 156] 3. I hereby certify under penalty of perjury that the above documents were sent using the mode of service indicated. Dated: December 26, 2019 /s/ Scott M. Ewing Signature: Scott M. Ewing 1 A copy of the Notice is attached as Exhibit D. 3952037 Case 19-30256-VFP Doc 169 Filed 12/31/19 Entered 12/31/19 09:20:50 Desc Main Document Page 2 of 16 Name And Address of Party Served Relationship Of Mode Of Service Party To The Case SEE EXHIBIT A SEE EXHIBIT A Hand-Delivered Regular mail Certified mail/RRR X Other Electronic mail (As authorized by the Court or by rule. -

QUESTION 20-1/2 Examination of Access Technologies for Broadband Communications

International Telecommunication Union QUESTION 20-1/2 Examination of access technologies for broadband communications ITU-D STUDY GROUP 2 3rd STUDY PERIOD (2002-2006) Report on broadband access technologies eport on broadband access technologies QUESTION 20-1/2 R International Telecommunication Union ITU-D THE STUDY GROUPS OF ITU-D The ITU-D Study Groups were set up in accordance with Resolutions 2 of the World Tele- communication Development Conference (WTDC) held in Buenos Aires, Argentina, in 1994. For the period 2002-2006, Study Group 1 is entrusted with the study of seven Questions in the field of telecommunication development strategies and policies. Study Group 2 is entrusted with the study of eleven Questions in the field of development and management of telecommunication services and networks. For this period, in order to respond as quickly as possible to the concerns of developing countries, instead of being approved during the WTDC, the output of each Question is published as and when it is ready. For further information: Please contact Ms Alessandra PILERI Telecommunication Development Bureau (BDT) ITU Place des Nations CH-1211 GENEVA 20 Switzerland Telephone: +41 22 730 6698 Fax: +41 22 730 5484 E-mail: [email protected] Free download: www.itu.int/ITU-D/study_groups/index.html Electronic Bookshop of ITU: www.itu.int/publications © ITU 2006 All rights reserved. No part of this publication may be reproduced, by any means whatsoever, without the prior written permission of ITU. International Telecommunication Union QUESTION 20-1/2 Examination of access technologies for broadband communications ITU-D STUDY GROUP 2 3rd STUDY PERIOD (2002-2006) Report on broadband access technologies DISCLAIMER This report has been prepared by many volunteers from different Administrations and companies. -

Wide. Wider. Widest

WIDE. WIDER. WIDEST. Logitech Webcam C930e Experience video calls that are the next best Raise meeting productivity with remarkably With advanced business-grade certifications thing to being there in person. Logitech® clear video at all times – even when band- and enhanced integration with Logitech C930e Webcam delivers clear video and sound width is limited. The C930e Webcam supports Collaboration Program (LCP) members3, you in virtually any environment, even low-light H.264 UVC 1.5 with Scalable Video Coding can launch your next presentation or video conditions. With HD 1080p, a wide 90-degree to minimize its dependence on computer meeting with complete confidence using any field of view, pan, tilt, and 4x digital zoom, C930e and network resources. Plus, USB plug-and- video conferencing application. offers advanced webcam capabilities for superior play connectivity makes the C930e webcam video conferencing. simple to start and even easier to operate. Logitech Webcam C930e FEATURE SPOTLIGHT HD 1080p video quality at 30 frames-per-second Logitech RightLightTM 2 technology and autofocus Premium glass lens Brings life-like HD video to conference calls, enabling Webcam intelligently adjusts to improve visual Enjoy razor-sharp images even when showing expressions, non-verbal cues and movements to be quality in low light at multiple distances. documents up close, a whiteboard drawing or a seen clearly. product demo. 4X digital zoom in Full HD Widest-ever business webcam field-of-view 4X zoom at 1080p provides the highest level of Convenient privacy shutter Enjoy an extended view – 90 degrees – perfect for detail for your calls, visuals and presentations. -

VOIP-108 G2 / Connect Ipx8 – Intercom-Over-IP Solution

VOIP-108 G2 / Connect IPx8 – Intercom-over-IP Solution Intelligent and seamless interfacing to the outside world is the key to success in many intercom applications. Connect IPx8 is a specialised Audio-over-IP interface to connect your intercom system to IP-based networks. The VOIP-108 G2 Client Card and the Connect IPx8 are Connect IPx8 8-Port Panel Interface designed to transport quality audio with low bandwidth The Connect IPx8 is a 19”/1RU unit which converts eight over digital IP-based networks. Designed for mission- AES or analogue signals into IP data and vice versa. The critical applications like the broadcast industries the device is available in three versions, offering different devices provide several failsafe options, which ensure interface options on the rear of the unit. The CAT5 and audio is available even under extreme circumstances. COAX versions are for connecting panels and other AES signals, while the AIO version is perfect for the connection There are three major applications for the devices: of 4-wires and other analogue sources. The Connect IPx8 • multi-port matrix-to-matrix connections (Trunking) can flawlessly connect up to eight standard Artist 1000, • multi-port matrix-to-control panel connections 2000 or 3000 series control panels with full functionality (Remote panels) to an Artist matrix via an IP-network. • multi-channel distribution of audio lines Easy Configuration VOIP-108 G2 8-Port Matrix Client Card The VOIP-108 G2 client card and the Connect IPx8 The new VOIP-108 G2 card converts eight Artist matrix panel interface can be configured directly in the Director ports into a compressed IP-stream and vice versa. -

![A Glimpse of the Matrix (Extended Version) Arxiv:1910.06295V2 [Cs.NI]](https://docslib.b-cdn.net/cover/3393/a-glimpse-of-the-matrix-extended-version-arxiv-1910-06295v2-cs-ni-1223393.webp)

A Glimpse of the Matrix (Extended Version) Arxiv:1910.06295V2 [Cs.NI]

A Glimpse of the Matrix (Extended Version) Scalability Issues of a New Message-Oriented Data Synchronization Middleware Florian Jacob Jan Grashöfer Hannes Hartenstein Karlsruhe Institute of Technology Karlsruhe Institute of Technology Karlsruhe Institute of Technology Institute of Telematics Institute of Telematics Institute of Telematics fl[email protected] [email protected] [email protected] Abstract partial order in the replicated per-room data structure from which the current state is derived. Matrix is a new message-oriented data synchroniza- At present, the public federation is centered around tion middleware, used as a federated platform for near one large server with about 50 000 daily active users as real-time decentralized applications. It features a novel of January 2019. However, Matrix is growing fast and approach for inter-server communication based on syn- is intended to be more decentralized. Consequently, the chronizing message history by using a replicated data structure of the network might change drastically in structure. We measured the structure of public parts in the future when more servers join the federation and the Matrix federation as a basis to analyze the middle- users are distributed more evenly across them. This ware’s scalability. We confirm that users are currently will challenge the Matrix protocol in terms of scalability. cumulated on a single large server, but find more small In addition to the public federation, at least one large, servers than expected. We then analyze network load independent private federation exists: In April 2019, distribution in the measured structure and identify scala- the French government announced the beta release of bility issues of Matrix’ group communication mechanism its own, self-sovereign communication system based in structurally diverse federations. -

Burst-By-Burst Adaptive Joint-Detection CDMA/H.26L Based Wireless Video Telephony Using TTCM and LDPC Codes J

Burst-by-burst Adaptive Joint-Detection CDMA/H.26L Based Wireless Video Telephony using TTCM and LDPC Codes J. Y. Chung, F. Guo, S. X. Ng and 1L. Hanzo Dept. of ECS, University of Southampton, SO17 1BJ, UK. Tel: +44-23-8059 3125, Fax: +44-23-8059 4508 Email: [email protected], http://www-mobile.ecs.soton.ac.uk Abstract—A low bit-rate video coding techniques using the H.26L stan- a LDPC-based Block Coded Modulation (LDPC-BCM) scheme was dard codec for robust transmission in mobile multimedia environments are proposed in [10]. When employing a long codeword length of 3000 presented. For the sake of achieving error resilience, the source codec modulated symbols, regular LDPC-BCM was found to slightly out- has to make provisions for error detection, resynchronization and error concealment. Thus a packetization technique invoking adaptive bit-rate perform TTCM in the context of non-dispersive uncorrelated Rayleigh control was used in conjuction with the various modulation scheme em- fading channels at a similar coding rate and a comparable decoding ployed. In this contribution, we propose a Burst-by-Burst Adaptive Coded complexity [11]. Modulation-Aided Joint Detection-Based CDMA (ACM-JD-CDMA) sche- In order to counteract the time varying nature of mobile radio chan- me for wireless video telephony and characterise its performance when nels, in this study Adaptive Coded Modulation (ACM) was employed- communicating over the UTRA wideband vehicular fading channels. The coded modulation schemes invoked in our fixed modulation mode based [12]. Explicitly, a higher order modulation mode is employed, when systems are Low Density Parity Check code based Block Coded Modula- the instantaneous estimated channel quality is high, in order to in- tion (LDPC-BCM) and Turbo Trellis Coded Modulation (TTCM). -

Language Learning in Tandem Via Skype

269 The Reading Matrix Vol. 6, No. 3, December 2006 5th Anniversary Special Issue — CALL Technologies and the Digital Learner LANGUAGE LEARNING IN TANDEM VIA SKYPE Antonella Elia University of Naples “L’Orientale” Abstract ________________ Skype is the largest of the new companies offering Voice over Internet Protocol (VoIP). It allows users to call anyone in the world for free, while offering a precious opportunity to practice foreign languages with native speakers on the Internet. ‘Mixxer’ is an educational site created to help students and teachers find partners for language exchanges. Through this site, for example, native Italian speakers studying English can find English speaking students worldwide studying Italian with whom a Skype tandem exchange can take place. The next frontier is Skype Casting: by merging Skype and Podcasting it is possible to run personal mini-radio stations, which can be accessed by any Skype user. New technologies continue to offer challenging solutions to language learning. ________________ Introduction Language teachers have always embraced the world of opportunities offered through new technologies. The Internet continues to provide challenging and intriguing possibilities for language learning. Nowadays many tools, such as e-mail, mailing lists, discussion forums and chat are familiar to many language teachers. Although recent innovations such as blogs, wikis, and VoIP may be less familiar, they are powerful media for online language learning. In the new millennium the first-generation of synchronous tools have not disappeared from the Internet landscape. E-mail continues to be a useful tool for tandem learning and classroom exchanges and many teachers are using forums more and more as the principal tools for written communication and are starting to use wikis for collaborative projects. -

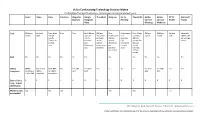

Video Conferencing Technology Decision Matrix

Video Conferencing Technology Decision Matrix For Providers/Practices/Organizations ready to begin conducting telehealth visits Zoom Vidyo VSee Facetime Skype for Google TheraNest doxy.me Go To Thera LINK Adobe Adobe OTTO Microsoft Business Hangouts Meeting Connect Connect Health Teams Meet Meetings Webinars Cost $200 per Contact Free, Basic Free Free Basic $6 per $38 per Free, Professional Free 15 day $50 per $130 per Contact Microsoft month Sales $49 per user per month for Professional $12 trial Basic month month sales 365 E5 $57 month, month up to 30 $35 per Business $30 per per user per Enterprise Business active month, $16 month Plus month contact $12 clients, Clinic $50 Enterprise $45 per sales Enterprise other plans per Contact month $25 available provider sales Ultimate per month $65 per month BAA Yes Yes Yes No No Yes Yes Yes Yes Yes No HIPAA HIPAA SOC 2 and Basic BAA No Yes with Yes with Yes Yes Yes Yes Yes with Yes with Yes Yes Compliant Complianc HIPAA for HIPAA BAA BAA BAA BAA e with BAA compliant compliance Ease of Use 1 2 2 2 1 1 3 2 2 3 2 3 3 2 3 easy - 3 more challenging Works on low Yes Yes Yes Yes Yes Yes bandwidth? 3513 Brighton Blvd, Ste 484, Denver, CO 80216 | primehealthco.com Prime Health does not endorse the use of or the security capabilities of any particular communications product. Video Conferencing Technology Decision Matrix For Providers/Practices/Organizations ready to begin conducting telehealth visits Zoom Vidyo VSee Facetime Skype for Google TheraNest doxy.me Go To Thera LINK Adobe Adobe OTTO Microsoft -

SVD-Aided Unequal-Protection Spatial Multiplexing for Wireless Video Telephony

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by e-Prints Soton SVD-aided Unequal-Protection Spatial Multiplexing for Wireless Video Telephony Du Yang, Nasruminallah, Lie-Liang Yang and Lajos Hanzo School of ECS, University of Southampton, SO17 1BJ, UK Tel: +44-23-8059 3364; Fax: +44-23-8059 4508 E-mail: dy05r,n06r,lly,[email protected]; http://www-mobile.ecs.soton.ac.uk Abstract—1 The proposed Singular Value Decomposition (SVD) using Trellis Coded Modulation (TCM) for error protection aided Spatial Multiplexing (SM) based Multiple-Input-Multiple- without extending the bandwidth requirements, which was Output (MIMO) system is capable of achieving a high bandwidth also combined with Multi-level Coding (MLC) to provide efficiency. The SVD operation effectively splits the MIMO chan- nel into orthogonal eigen-beams having unequal Bit-error-ratios UEP, for the sake of improving the MEPG-4 video scheme’s (BERs). This property may be exploited for the transmission of performance. speech, audio, video and other multimedia source signals, where In this paper, we propose a novel Singular Value De- the source-coded bits have different error sensitivity. composition (SVD) aided Spatial Multiplexing (SM) based Index Terms—SVD,MIMO,H.264,UEP MIMO transmission scheme for video communications, which decomposes the MIMO channel into orthogonal eigen-beams I. INTRODUCTION exhibiting unequal channel gains characterized by their cor- Video communication over wireless channel has attract responding eigen-values. Consequently, the data transmitted substantial research interest in recent years because of the via the different eigen-beams exhibits a different Bit-error- popularity of diverse video applications, such as for exam- ratio (BER), which provides a novel form of UEP instead ple video-phones, as well as YouTube- and BBC iplayer- of using different-rate FEC schemes. -

Automated Malware Analysis Report For

ID: 402013 Cookbook: browseurl.jbs Time: 11:41:48 Date: 01/05/2021 Version: 32.0.0 Black Diamond Table of Contents Table of Contents 2 Analysis Report https://managebooking.reservanto.cz/Account/SecretKey? type=NewAccount&secretKey=3d428529-925c-4bc2-8634-dfe869521dd8 4 Overview 4 General Information 4 Detection 4 Signatures 4 Classification 4 Startup 4 Malware Configuration 4 Yara Overview 4 Sigma Overview 4 Signature Overview 4 Mitre Att&ck Matrix 5 Behavior Graph 5 Screenshots 6 Thumbnails 6 Antivirus, Machine Learning and Genetic Malware Detection 7 Initial Sample 7 Dropped Files 7 Unpacked PE Files 7 Domains 7 URLs 7 Domains and IPs 8 Contacted Domains 8 Contacted URLs 8 URLs from Memory and Binaries 9 Contacted IPs 9 Public 10 General Information 10 Simulations 11 Behavior and APIs 11 Joe Sandbox View / Context 11 IPs 11 Domains 11 ASN 12 JA3 Fingerprints 12 Dropped Files 12 Created / dropped Files 12 Static File Info 43 No static file info 43 Network Behavior 43 Network Port Distribution 43 TCP Packets 44 UDP Packets 45 DNS Queries 47 DNS Answers 48 HTTPS Packets 49 Code Manipulations 55 Statistics 55 Behavior 55 System Behavior 55 Analysis Process: iexplore.exe PID: 3728 Parent PID: 792 55 General 55 Copyright Joe Security LLC 2021 Page 2 of 56 File Activities 55 Registry Activities 56 Analysis Process: iexplore.exe PID: 68 Parent PID: 3728 56 General 56 File Activities 56 Registry Activities 56 Disassembly 56 Copyright Joe Security LLC 2021 Page 3 of 56 Analysis Report https://managebooking.reservanto.cz/A…ccount/SecretKey?type=NewAccount&secretKey=3d428529-925c-4bc2-8634-dfe869521dd8