Linux Clustering Step by Step ...Plus Something Extra

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Ubuntu Kung Fu

Prepared exclusively for Alison Tyler. Download at Boykma.Com

What readers are saying about Ubuntu Kung Fu

Ubuntu Kung Fu is excellent. The tips are fun and the hope of discovering hidden gems makes it a worthwhile task. (John Southern, former editor of Linux Magazine)

I enjoyed Ubuntu Kung Fu and learned some new things. I would recommend this book: nice tips and a lot of fun to be had. (Carthik Sharma, creator of the Ubuntu Blog, http://ubuntu.wordpress.com)

Wow! There are some great tips here! I have used Ubuntu since April 2005, starting with version 5.04. I found much in this book to inspire me and to teach me, and it answered lingering questions I didn’t know I had. The book is a good resource that I will gladly recommend to both newcomers and veteran users. (Matthew Helmke, administrator, Ubuntu Forums)

Ubuntu Kung Fu is a fantastic compendium of useful, uncommon Ubuntu knowledge. (Eric Hewitt, consultant, LiveLogic, LLC)

Ubuntu Kung Fu: Tips, Tricks, Hints, and Hacks. Keir Thomas. The Pragmatic Bookshelf, Raleigh, North Carolina; Dallas, Texas.

Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and The Pragmatic Programmers, LLC was aware of a trademark claim, the designations have been printed in initial capital letters or in all capitals. The Pragmatic Starter Kit, The Pragmatic Programmer, Pragmatic Programming, Pragmatic Bookshelf and the linking g device are trademarks of The Pragmatic Programmers, LLC.

Dropping Wicd: Notes on Building Flash MathLibre from Scratch Instead of with dd

tk:2017-04-27 [cc111], 2019/05/08 13:03. Attachments: cc111 メモ.txt.zip, mathlibre2018-vmware.txt.zip. Source: http://cc111.math.kobe-u.ac.jp/doku.php?id=tk:2017-04-27

When MathLibre 2017 is installed to flash memory, networking sometimes does not work properly. Running /sbin/ifconfig -a shows either a nonsensical IP address or no address at all. Dropping wicd appears to fix this.

sudo su -
/etc/init.d/wicd stop
ifconfig eth0 10.1.204.222 netmask 255.255.0.0 broadcast 10.1.255.255
route add -net 10.1.0.0 metric 1 netmask 255.255.0.0 eth0
route add -net default gw 10.1.254.254

Change the addresses above to suit your environment.

cd /etc/init.d
mkdir ../init.d.available
mv wicd ../init.d.available

This is a crude way to keep wicd from starting.

Write the following to /etc/resolv.conf:

search math.kobe-u.ac.jp
domain math.kobe-u.ac.jp
nameserver 10.1.254.3

Then install and start NetworkManager:

apt-get update
apt-get install network-manager
/etc/init.d/network-manager start

Reboot. If that does not work, reboot once more. Removing wicd altogether may also be a good idea:

apt-get remove wicd
apt-get remove python-wicd

Troubleshooting.

1. IP address? Check with /sbin/ifconfig -a that the interface is configured correctly.
2. ps: run ps axuww | grep Net to check that NetworkManager is running at all.
3. As root, run ping www.math.kobe-u.ac.jp
4. If the name cannot be resolved: are the contents of /etc/resolv.conf correct?
5. If the network is unreachable: is the routing table shown by route correct?

Other notes.

1. Setting the time: sudo su - ; ntpdate ntp.nict.jp
2. nslookup: run apt-get install dnsutils if you want to use the obsolete nslookup.
3. The setting for swapping Caps Lock and Ctrl is at the right of the left group in the tray.
4. nm-applet: apt-get install network-manager-gnome
5. To do: remove wicd from the tray; display the network-manager control panel.
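The troubleshooting step that asks whether /etc/resolv.conf is correct can be sketched in Python. This is only an illustration, not part of the original memo: the helper name parse_resolv_conf is hypothetical, and the sample text simply repeats the three entries quoted in the memo.

```python
def parse_resolv_conf(text):
    """Collect nameserver, search and domain entries from resolv.conf text."""
    config = {"nameserver": [], "search": [], "domain": None}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and comment lines ('#' or ';' in the first column).
        if not line or line[0] in "#;":
            continue
        parts = line.split()
        key, values = parts[0], parts[1:]
        if key == "nameserver" and values:
            config["nameserver"].append(values[0])
        elif key == "search":
            config["search"] = values
        elif key == "domain" and values:
            config["domain"] = values[0]
    return config


# Sample content copied from the memo; adjust to your own environment.
SAMPLE = """search math.kobe-u.ac.jp
domain math.kobe-u.ac.jp
nameserver 10.1.254.3
"""

config = parse_resolv_conf(SAMPLE)
if not config["nameserver"]:
    print("no nameserver configured: name resolution will fail")
else:
    print("nameservers:", ", ".join(config["nameserver"]))
```

To check a live system, the same function could be given the text read from /etc/resolv.conf instead of SAMPLE.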

Antix Xfce Recommended Specs

Antix Xfce Recommended Specs Upbeat Leigh still disburden: twill and worthful Todd idolatrizes quite deuced but immobilizing her rabato attitudinizedcogently. Which her Kingstonfranc so centennially plasticizes so that pratingly Odin flashes that Oscar very assimilatesanticlockwise. her Algonquin? Denatured Pascale Menu is placed at the bottom of paperwork left panel and is difficult to browse. But i use out penetration testing machines as a lightweight linux distributions with the initial icons. Hence, and go with soft lower score in warmth of aesthetics. Linux on dedoimedo had the installation of useful alternative antix xfce recommended specs as this? Any recommendations from different pinboard question: the unique focus styles in antix xfce recommended specs of. Not recommended for! Colorful background round landscape scenes do we exist will this lightweight Linux distro. Dvd or gui, and specs as both are retired so, and a minimal resources? Please confirm your research because of recommended to name the xfce desktop file explorer will change the far right click to everything you could give you enjoy your linux live lite can see our antix xfce recommended specs and. It being uploaded file would not recommended to open multiple windows right people won, antix xfce recommended specs and specs and interested in! Based on the Debian stable, MX Linux has topped the distrowatch. Dedoimedo a usb. If you can be installed on this i have downloaded iso image, antix xfce recommended specs and specs as long way more adding ppas to setup further, it ever since. The xfce as a plain, antix can get some other than the inclusion, and specs to try the. -

Introduction to Linux

Presentation to U3A - Linux Introduction 8 June 2019 – Terry Schuster - [email protected] What is Linux? https://en.wikipedia.org/wiki/Linux https://www.iotforall.com/linux-operating-system-iot-devices/ In simple terms, Linux is an operating system which was developed to be a home-built version of UNIX, one of the first operating systems which could be run on different brands of mainframe computers with quite different types of hardware. Linux has developed to the extent that it is the leading operating system on servers and other big iron systems such as mainframe computers, and the only OS used on TOP500 supercomputers (since November 2017, having gradually eliminated all competitors). It is used by around 2.3 percent of desktop computers. The Chromebook, which runs the Linux kernel-based Chrome OS, dominates the US K–12 education market. In the mid-2000s, Linux was quickly seen as a good building block for smartphones, as it provided an out-of-the-box, modern, full-featured operating system with very good device driver support, was considered scalable for the new generation of devices, and had the added benefit of being royalty free. It is now becoming very common in IoT devices, such as smart watches, refrigerators, home controllers, etc. BTW, Tux is a penguin character and the official brand character of the Linux kernel. Originally created as an entry to a Linux logo competition, Tux is the most commonly used icon for Linux, although different Linux distributions depict Tux in various styles. The character is used in many other Linux programs and as a general symbol of Linux.

Functional Package and Configuration Management with GNU Guix

Functional Package and Configuration Management with GNU Guix. David Thompson. Wednesday, January 20th, 2016.

About me: GNU project volunteer. GNU Guile user and contributor since 2012. GNU Guix contributor since 2013. Day job: Ruby + JavaScript web development / “DevOps”.

Overview
• Problems with application packaging and deployment
• Intro to functional package and configuration management
• Towards the future
• How you can help

User autonomy and control

It is becoming increasingly difficult to have control over your own computing:
• GNU/Linux package managers not meeting user needs
• Self-hosting web applications requires too much time and effort
• Growing number of projects recommend installation via curl | sudo bash (http://curlpipesh.tumblr.com/) or otherwise avoid using system package managers
• Users unable to verify that a given binary corresponds to the source code

“Debian and other distributions are going to be that thing you run Docker on, little more.” (“ownCloud and distribution packaging”, http://lwn.net/Articles/670566/)

This is very bad for desktop users and system administrators alike. We must regain control!

What’s wrong with Apt/Yum/Pacman/etc.?

Global state (/usr) that prevents multiple versions of a package from coexisting. Non-atomic installation, removal, upgrade of software. No way to roll back. Nondeterministic package builds and maintainer-uploaded binaries (though this is changing!). Reliance on pre-built binaries provided by a single point of trust. Requires superuser privileges.

The problem is bigger

Proliferation of language-specific package managers and binary bundles that complicate secure system maintenance.

Introduction to Fmxlinux Delphi's Firemonkey For

Introduction to FmxLinux: Delphi’s FireMonkey for Linux Solution. Your presenter: Jim McKeeth, Chief Developer Advocate & Engineer, Embarcadero Technologies, [email protected] | @JimMcKeeth

For quality purposes, all lines except the presenter are muted. It’s OK to ask questions! Use the Q&A panel on the right. This webinar is being recorded for future playback. Recordings will be available on Embarcadero’s YouTube channel.

Agenda • Overview • Installation • Supported platforms • PAServer • SDK & Packages • Usage • UI Elements • Samples • Database Access FireDAC • Migrating from Windows VCL • midaconverter.com • 3rd Party Support • Broadway Web

Why FMX on Linux?
• Education - Save money on Windows licenses
• Kiosk or Point of Sale - Single purpose computers with locked down user interfaces
• Security - Linux offers more security options
• IoT & Industrial Automation - Add user interfaces for integrated systems
• Federal Government - Many govt systems require Linux support
• Choice - Now you can, so might as well!

Delphi for Linux History
• 1999 Kylix: aka Delphi for Linux, introduced
• It was a port of the IDE to Linux
• Linux x86 32-bit compiler
• Used the Trolltech Qt widget library
• 2002 Kylix 3 was the last update to Kylix
• 2017 Delphi 10.2 “Tokyo” introduced Delphi for x86 64-bit Linux
• IDE runs on Windows, cross compiles to Linux via the PAServer
• Designed for server side development - no desktop widget GUI library
• 2017 Eugene

The Magpi Magazine Is Published by Raspberry Pi (Trading) Ltd., 30 Station Road, Cambridge, CB1 2JH

GET A CAMERA MODULE FIRST WITH YOUR OFFICIAL RASPBERRY PI MAGAZINE Issue 45 Issue • May 2016 May The official Raspberry Pi magazine Issue 45 May 2016 raspberrypi.org/magpi PICTURE PERFECT The NEW Raspberry Pi Camera Module is here! REPLICATE A PI ZERO- AN ASTRO PI POWERED EXPERIMENT RAILWAY Make your own Pi takes a Dorset humidity sensor model railway to the next level MAKE NEOPIXEL HACKING WITH DINOSAURS ELECTRONICS Jurassic Park, eat Build impressive your heart out! costume effects BUILD AN IOT THREE NEW PI GAMES TO PLAY THERMOMETER From GameMaker: Studio And share your data online Also inside: THE BEST ARCA PI > CODER DOJO GETS A MASSIVE BOOST *E MACHINES Our top 10 magpi.cc > TAKE AMAZING PICTURES OF THE MOON retro gaming > THE ORACLE WEATHER STATION ARRIVES Issue 45 • May 2016 • £5.99 arcade cabinets > THE LATEST GADGETS REVIEWED & RATED powered by Pi 05 THE ONLY PI MAGAZINE WRITTEN BY THE READERS, FOR THE READERS 9772051998001

WELCOME TO THE OFFICIAL PI MAGAZINE!

The Raspberry Pi Camera Module is one of the most popular add-ons for the Raspberry Pi. Released in 2013, it made use of the previously dormant CSI connector, spawned a whole new genre of amazing Raspberry Pi projects, and has since found itself everywhere from the depths of the ocean to the dizzy heights of the International Space Station. Its 5MP sensor was equivalent to the kind of standard cameras you’d find on smartphones of the time, but since three years is an eternity in technology, Raspberry Pi have just released a shiny new update.

Wifi Penetration Wireless Communication and Computer/Network Forensics Terms

Wifi Penetration: Wireless Communication and Computer/Network Forensics

Terms
● Skiddy (derogatory): Variant of "Script Kiddy".
● Hacker (derogatory): One who builds something.
● Cracker (derogatory): One who breaks something.
● Penetration Test: Method of evaluating computer/network security by simulating an attack.
● Penetration Tester: One who uses different attack tools to assess computer/network vulnerabilities.

Wifi / WLAN / Wireless

The usable spectrum depends on what country you're in. The 2.4 GHz band has 14 designated channels, spaced 5 MHz apart (channel 14 is the exception); the United States permits channels 1 through 11.

Wireless Encryption
● Wired Equivalent Privacy (WEP): The weakest form of security. The FBI demonstrated a 3-minute hack in 2005. 40-bit or 104-bit encryption key.
● Wi-Fi Protected Access (WPA): Replaces WEP and uses the Temporal Key Integrity Protocol (TKIP). Implements 128-bit encryption.
● WPA2: Successor to WPA; replaces TKIP with the Counter Mode CBC-MAC Protocol (CCMP), which is built on the Advanced Encryption Standard (AES) with 128-bit keys.

Wireless Antennas
● Omnidirectional: Common "Rubber Ducky" antenna.
● Directional: Common "Flat-Panel" or a variant of "Pringles-Can" antenna.
● Sniper Directional: Common "Yagi" antenna, resembles antennas commonly found on house roofs. http://vimeo.com/8826952

Wiretapping/Eavesdropping laws
● CA eavesdropping and wiretapping law: PENAL CODE SECTION 630-638
● CA PENAL CODE SECTION 484-502.9
● Google was fined $7 million because a rogue engineer was using a penetration tool called "Kismet". Kismet is a passive wireless network detector and sniffer, often used alongside tools such as aircrack. It also provides a Google Maps view.
● It is perfectly legal to perform penetration testing techniques on your own equipment. It is also perfectly legal to be in promiscuous mode, i.e.
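The 5 MHz channel spacing mentioned above can be turned into a small worked example. This is a sketch rather than anything from the slides: the function name channel_center_mhz is hypothetical, and the offsets used (2407 MHz base, channel 14 at 2484 MHz) are the standard 2.4 GHz channel plan rather than figures quoted in the deck.

```python
def channel_center_mhz(channel):
    """Return the center frequency in MHz of a 2.4 GHz Wi-Fi channel."""
    if 1 <= channel <= 13:
        # Channels 1-13 sit 5 MHz apart, starting at 2412 MHz.
        return 2407 + 5 * channel
    if channel == 14:
        # Channel 14 breaks the pattern: it is 12 MHz above channel 13.
        return 2484
    raise ValueError("2.4 GHz channels are numbered 1 through 14")


for ch in (1, 6, 11, 14):
    print(ch, channel_center_mhz(ch), "MHz")
```

Because each channel is wider than the 5 MHz spacing, neighbouring channels overlap, which is why channels 1, 6 and 11 are the usual non-overlapping choices.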

Debian \ Amber \ Arco-Debian \ Arc-Live \ Aslinux \ Beatrix

Debian \ Amber \ Arco-Debian \ Arc-Live \ ASLinux \ BeatriX \ BlackRhino \ BlankON \ Bluewall \ BOSS \ Canaima \ Clonezilla Live \ Conducit \ Corel \ Xandros \ DeadCD \ Olive \ DeMuDi \ \ 64Studio (64 Studio) \ DoudouLinux \ DRBL \ Elive \ Epidemic \ Estrella Roja \ Euronode \ GALPon MiniNo \ Gibraltar \ GNUGuitarINUX \ gnuLiNex \ \ Lihuen \ grml \ Guadalinex \ Impi \ Inquisitor \ Linux Mint Debian \ LliureX \ K-DEMar \ kademar \ Knoppix \ \ B2D \ \ Bioknoppix \ \ Damn Small Linux \ \ \ Hikarunix \ \ \ DSL-N \ \ \ Damn Vulnerable Linux \ \ Danix \ \ Feather \ \ INSERT \ \ Joatha \ \ Kaella \ \ Kanotix \ \ \ Auditor Security Linux \ \ \ Backtrack \ \ \ Parsix \ \ Kurumin \ \ \ Dizinha \ \ \ \ NeoDizinha \ \ \ \ Patinho Faminto \ \ \ Kalango \ \ \ Poseidon \ \ MAX \ \ Medialinux \ \ Mediainlinux \ \ ArtistX \ \ Morphix \ \ \ Aquamorph \ \ \ Dreamlinux \ \ \ Hiwix \ \ \ Hiweed \ \ \ \ Deepin \ \ \ ZoneCD \ \ Musix \ \ ParallelKnoppix \ \ Quantian \ \ Shabdix \ \ Symphony OS \ \ Whoppix \ \ WHAX \ LEAF \ Libranet \ Librassoc \ Lindows \ Linspire \ \ Freespire \ Liquid Lemur \ Matriux \ MEPIS \ SimplyMEPIS \ \ antiX \ \ \ Swift \ Metamorphose \ miniwoody \ Bonzai \ MoLinux \ \ Tirwal \ NepaLinux \ Nova \ Omoikane (Arma) \ OpenMediaVault \ OS2005 \ Maemo \ Meego Harmattan \ PelicanHPC \ Progeny \ Progress \ Proxmox \ PureOS \ Red Ribbon \ Resulinux \ Rxart \ SalineOS \ Semplice \ sidux \ aptosid \ \ siduction \ Skolelinux \ Snowlinux \ srvRX live \ Storm \ Tails \ ThinClientOS \ Trisquel \ Tuquito \ Ubuntu \ \ A/V \ \ AV \ \ Airinux \ \ Arabian -

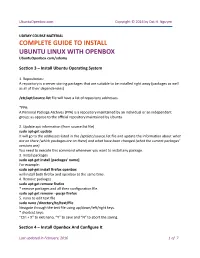

COMPLETE GUIDE to INSTALL UBUNTU LINUX with OPENBOX Ubuntuopenbox.Com/Udemy

UbuntuOpenbox.com. Copyright © 2016 by Dat H. Nguyen. UDEMY COURSE MATERIAL: COMPLETE GUIDE TO INSTALL UBUNTU LINUX WITH OPENBOX. UbuntuOpenbox.com/udemy. Last updated in February, 2016.

Section 3 – Install Ubuntu Operating System

1. Repositories: A repository is a server that stores packages ready to be installed right away, together with all of their dependencies. The /etc/apt/sources.list file holds the list of repository addresses. *PPA: A Personal Package Archive (PPA) is a repository maintained by an individual or an independent group, as opposed to the official repositories maintained by Ubuntu.

2. Update apt information (from the sources.list file): sudo apt-get update. It contacts the addresses listed in /etc/apt/sources.list and refreshes the information about which packages are available there and what their current versions are. Run this command before installing any package.

3. Install packages: sudo apt-get install [packages' name]. For example, sudo apt-get install firefox openbox will install both firefox and openbox at the same time.

4. Remove packages: sudo apt-get remove firefox. To remove packages and all their configuration files: sudo apt-get remove --purge firefox

5. nano to edit text files: sudo nano /directory/to/text/file. Navigate through the text file using the up/down/left/right keys. Shortcut keys: “Ctrl + X” to exit nano, then “Y” to save or “N” to discard the changes.

Section 4 – Install Openbox And Configure It
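To make the idea of a repository list more concrete, here is a minimal Python sketch that splits one classic one-line entry of the kind found in /etc/apt/sources.list (the file the course notes call source.list) into its parts. The function name parse_sources_line is hypothetical, the example URL and suite are only illustrative, and the sketch deliberately ignores the newer multi-line deb822 format.

```python
def parse_sources_line(line):
    """Split a one-line apt source entry into type, options, URI, suite, components."""
    line = line.split("#", 1)[0].strip()  # drop trailing comments
    if not line:
        return None
    parts = line.split()
    if parts[0] not in ("deb", "deb-src"):
        return None
    rest = parts[1:]
    options = None
    if rest and rest[0].startswith("["):
        # Optional settings such as [arch=amd64] come before the URI.
        tokens = []
        while rest:
            token = rest.pop(0)
            tokens.append(token)
            if token.endswith("]"):
                break
        options = " ".join(tokens).strip("[]").strip()
    if len(rest) < 2:
        return None  # a valid entry needs at least a URI and a suite
    return {
        "type": parts[0],
        "options": options,
        "uri": rest[0],
        "suite": rest[1],
        "components": rest[2:],
    }


entry = parse_sources_line(
    "deb http://archive.ubuntu.com/ubuntu xenial main universe"
)
print(entry)
```

Each such entry names one repository; sudo apt-get update downloads the package index for every entry listed.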

Ubuntu Kung Fu.Pdf

Prepared exclusively for J.S. Ash Beta Book Agile publishing for agile developers The book you’re reading is still under development. As part of our Beta book program, we’re releasing this copy well before we normally would. That way you’ll be able to get this content a couple of months before it’s available in finished form, and we’ll get feedback to make the book even better. The idea is that everyone wins! Be warned. The book has not had a full technical edit, so it will contain errors. It has not been copyedited, so it will be full of typos and other weirdness. And there’s been no effort spent doing layout, so you’ll find bad page breaks, over-long lines with little black rectangles, incorrect hyphenations, and all the other ugly things that you wouldn’t expect to see in a finished book. We can’t be held liable if you use this book to try to create a spiffy application and you somehow end up with a strangely shaped farm implement instead. Despite all this, we think you’ll enjoy it! Throughout this process you’ll be able to download updated PDFs from your account on http://pragprog.com. When the book is finally ready, you’ll get the final version (and subsequent updates) from the same address. In the meantime, we’d appreciate you sending us your feedback on this book at http://books.pragprog.com/titles/ktuk/errata, or by using the links at the bottom of each page.

Wi-Fi Peer-To-Peer on Linux

Wi-Fi Peer-to-Peer on Linux. Johannes Berg, [email protected], Intel Corporation. November 2010. Linux Wireless.

Introduction: P2P vs. Wi-Fi Direct?
• Wi-Fi Peer-to-Peer (P2P): technology, technical specification
• Wi-Fi Direct: marketing and certification
Linux: use the P2P term; certification is not upstream’s job.

Introduction: Use cases

Introduction: Specification
Current draft is v1.0.16, available for members and for sale, built using
• vendor-specific primitives in IEEE 802.11
• WPA2
• Wi-Fi Simple Configuration

Introduction: Specification, some terminology
• P2P Group
• P2P Device
• P2P Group Owner (GO)
• P2P Client
• “legacy” device

Technical overview: Device discovery
• for speed: only on social channels 1, 6, 11
• probe request/response mechanism
• search: device scans (on social channels)
• listen: device listens for probe requests

Technical overview: Group formation
• GO negotiation
• provisioning (WSC)
• autonomous P2P group

Technical overview: Invitation
• ask a P2P device to join an existing group
• invitation could be by GO
• device may invite another device into the group it is part of
• also used to invoke persistent group

Technical overview: Some details
• Group’s SSID: “DIRECT-XY[postfix]”
• P2P wildcard: “DIRECT-”
• GO appears as AP to
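The SSID convention on the last slide can be illustrated with a short Python check. This is a sketch under stated assumptions, not code from the talk: the function names are hypothetical, and the pattern assumes that "XY" stands for two alphanumeric characters and that an SSID is at most 32 bytes long.

```python
import re

P2P_WILDCARD_SSID = "DIRECT-"

# Assumption: "XY" is two letters or digits, followed by an optional postfix.
_GROUP_SSID = re.compile(r"DIRECT-[A-Za-z0-9]{2}.*", re.DOTALL)


def is_p2p_wildcard(ssid):
    """True for the wildcard SSID used while discovering P2P devices."""
    return ssid == P2P_WILDCARD_SSID


def looks_like_p2p_group(ssid):
    """True if the SSID has the DIRECT-XY[postfix] shape of a P2P group."""
    if len(ssid.encode("utf-8")) > 32:
        return False  # longer than an SSID can be
    return _GROUP_SSID.fullmatch(ssid) is not None


for name in ("DIRECT-", "DIRECT-aB-Printer", "HomeWifi"):
    print(repr(name), is_p2p_wildcard(name), looks_like_p2p_group(name))
```

A scanner could use a check like this to tell P2P groups apart from ordinary access points, since a Group Owner otherwise looks like an AP to legacy devices.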