High Quality Linked Data Generation from Heterogeneous Data

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Applications

Applications. CSE 595 – Semantic Web. Instructor: Dr. Paul Fodor, Stony Brook University. http://www3.cs.stonybrook.edu/~pfodor/courses/cse595.html Lecture Outline: GoodRelations, BBC Artists, BBC World Cup Website, Government Data, New York Times, Sig.ma and Sindice, Swoogle, OpenCalais, Schema.org, data.world, Elsevier, Audi, Data Integration, Swiss Life, EnerSearch, E-Learning, Web Services, Multimedia Collection Indexing at Scotland Yard, Online Procurement at Daimler, Device Interoperability at Nokia, Publication Management. @ Semantic Web Primer. GoodRelations: E-commerce, and in particular Business-to-Consumer (B2C) e-commerce, has been one of the main drivers behind the rapid adoption of the World Wide Web in everyday life. It is now commonplace to see URLs listed on storefronts and goods vehicles. Taking the UK as an example, the B2C market has grown from £87 million in April 2000 to £68.4 billion by the end of 2009, a thousand-fold increase over a single decade. The USA 2017 B2C market was $660 billion, but the growth is decreasing (statista.com). GoodRelations: The e-commerce marketplace is suffering from all the deficits of the traditional web: e-commerce websites are typically generated from structured information systems, listing price, availability, type of product, delivery options, etc., but by the time this information reaches the company’s web pages, it has been turned into HTML and all machine-interpretable structure has disappeared, with the result that machines can no longer distinguish a price from a product-code

Validating RDF Data

Series ISSN: 2160-4711. Series Editors: Ying Ding, Indiana University; Paul Groth, Elsevier Labs. Validating RDF Data. Jose Emilio Labra Gayo, University of Oviedo; Eric Prud’hommeaux, W3C/MIT and Micelio; Iovka Boneva, University of Lille; Dimitris Kontokostas, University of Leipzig. This book describes two technologies for RDF validation: Shape Expressions (ShEx) and Shapes Constraint Language (SHACL), the rationales for their designs, a comparison of the two, and some example applications. RDF and Linked Data have broad applicability across many fields, from aircraft manufacturing to zoology. Requirements for detecting bad data differ across communities, fields, and tasks, but nearly all involve some form of data validation. This book introduces data validation and describes its practical use in day-to-day data exchange. The Semantic Web offers a bold, new take on how to organize, distribute, index, and share data. Using Web addresses (URIs) as identifiers for data elements enables the construction of distributed databases on a global scale. Like the Web, the Semantic Web is heralded as an information revolution, and also like the Web, it is encumbered by data quality issues. The quality of Semantic Web data is compromised by the lack of resources for data curation, for maintenance, and for developing globally applicable data models. At the enterprise scale, these problems have conventional solutions. Master data management provides an enterprise-wide vocabulary, while constraint languages capture and enforce data structures. Filling a need long recognized by Semantic Web users, shapes languages provide models and vocabularies for expressing such structural constraints.

Product Schema, SEO, Structured Data | Caliber Media Group & Schema.Org Products | Product Schema | SEO

ProductCamp: Product Schema, SEO, Structured Data | Caliber Media Group & Schema.org Products | Product Schema | SEO Caliber Media Group presented at Product Camp Southern California 2014, and Caliber also volunteered its services towards this conference’s success. Caliber Media Group ProductCamp: Product Schema, SEO, Structured Data | Caliber Media Group & Schema.org Products | Schema | Examples Resources | Tools | Readings 2 ProductCamp SoCal 2014 & Schema: Google, after Schema ProductCamp SoCal 2014 & Schema: Google via Disconnect & Yahoo, after Schema , ProductCamp SoCal 2014 & Schema: How did Caliber help ProductCamp beat all of the other events? ProductCamp SoCal 2014 & Schema: So what was inside the pages? ProductCamp SoCal 2014 & Schema: So what was inside the pages? ProductCamp SoCal 2014 & Schema: So what was inside the pages? Search => Schema, with JSON-LD Search & Schema: When & Who? Very short version… Martin Hepp http://www.heppnetz.de/projects/goodrelations/ ProductCamp SoCal 2014 & Schema: When & Who? Short version…here they come… ProductCamp SoCal 2014 & Schema: GoodRelations added… Search & Schema: Why, and… What does Schema do for me? http://www.schema.org ProductCamp SoCal 2014 & Schema: Product since this is ProductCamp http://schema.org/Product ProductCamp SoCal 2014 & Schema: An actual Product, since this is ProductCamp… ProductCamp SoCal 2014 & Schema: Recall, Products as Structured Data Structure -- Hierarchy -- Structured Data Search & Schema: Show me Schema examples… Schema: Product ontology | microdata | Knowledge Graph ProductCamp SoCal 2014 & Schema: Product ProductCamp SoCal 2014 & Schema: Google ProductCamp SoCal 2014 & Schema: Product Schema locally ProductCamp SoCal 2014 & Schema: Product locally ProductCamp SoCal 2014 & Schema: Local Schema gone wild… Search & Product Schema | Knowledge Graph from Google Knowledge Vault – where? Search & Product Schema | Knowledge Graph – where? Google Tables. -

Deciding SHACL Shape Containment Through Description Logics Reasoning

Deciding SHACL Shape Containment through Description Logics Reasoning. Martin Leinberger¹, Philipp Seifer², Tjitze Rienstra¹, Ralf Lämmel², and Steffen Staab³,⁴. ¹ Inst. for Web Science and Technologies, University of Koblenz-Landau, Germany. ² The Software Languages Team, University of Koblenz-Landau, Germany. ³ Institute for Parallel and Distributed Systems, University of Stuttgart, Germany. ⁴ Web and Internet Science Research Group, University of Southampton, England. Abstract. The Shapes Constraint Language (SHACL) allows for formalizing constraints over RDF data graphs. A shape groups a set of constraints that may be fulfilled by nodes in the RDF graph. We investigate the problem of containment between SHACL shapes. One shape is contained in a second shape if every graph node meeting the constraints of the first shape also meets the constraints of the second. To decide shape containment, we map SHACL shape graphs into description logic axioms such that shape containment can be answered by description logic reasoning. We identify several, increasingly tight syntactic restrictions of SHACL for which this approach becomes sound and complete. 1 Introduction. RDF has been designed as a flexible, semi-structured data format. To ensure data quality and to allow for restricting its large flexibility in specific domains, the W3C has standardized the Shapes Constraint Language (SHACL). A set of SHACL shapes are represented in a shape graph. A shape graph represents constraints that only a subset of all possible RDF data graphs conform to. A SHACL processor may validate whether a given RDF data graph conforms to a given SHACL shape graph. A shape graph and a data graph that act as a running example are presented in Fig.

RDFa in XHTML: Syntax and Processing

RDFa in XHTML: Syntax and Processing. A collection of attributes and processing rules for extending XHTML to support RDF. W3C Recommendation 14 October 2008. This version: http://www.w3.org/TR/2008/REC-rdfa-syntax-20081014 Latest version: http://www.w3.org/TR/rdfa-syntax Previous version: http://www.w3.org/TR/2008/PR-rdfa-syntax-20080904 Diff from previous version: rdfa-syntax-diff.html Editors: Ben Adida, Creative Commons; Mark Birbeck, webBackplane; Shane McCarron, Applied Testing and Technology, Inc.; Steven Pemberton, CWI. Please refer to the errata for this document, which may include some normative corrections. This document is also available in these non-normative formats: PostScript version, PDF version, ZIP archive, and Gzip’d TAR archive. The English version of this specification is the only normative version. Non-normative translations may also be available. Copyright © 2007-2008 W3C® (MIT, ERCIM, Keio), All Rights Reserved. W3C liability, trademark and document use rules apply. Abstract. The current Web is primarily made up of an enormous number of documents that have been created using HTML. These documents contain significant amounts of structured data, which is largely unavailable to tools and applications.
When publishers can express this data more completely, and when tools can read it, a new world of user functionality becomes available, letting users transfer structured data between applications and web sites, and allowing browsing applications to improve the user experience: an event on a web page can be directly imported into a user’s desktop calendar; a license on a document can be detected so that users can be informed of their rights automatically; a photo’s creator, camera setting information, resolution, location and topic can be published as easily as the original photo itself, enabling structured search and sharing.

Using Rule-Based Reasoning for RDF Validation

Using Rule-Based Reasoning for RDF Validation. Dörthe Arndt, Ben De Meester, Anastasia Dimou, Ruben Verborgh, and Erik Mannens. Ghent University - imec - IDLab, Sint-Pietersnieuwstraat 41, B-9000 Ghent, Belgium. Abstract. The success of the Semantic Web highly depends on its ingredients. If we want to fully realize the vision of a machine-readable Web, it is crucial that Linked Data are actually useful for machines consuming them. On this background it is not surprising that (Linked) Data validation is an ongoing research topic in the community. However, most approaches so far either do not consider reasoning, and thereby miss the chance of detecting implicit constraint violations, or they base themselves on a combination of different formalisms, e.g. Description Logics combined with SPARQL. In this paper, we propose using Rule-Based Web Logics for RDF validation focusing on the concepts needed to support the most common validation constraints, such as Scoped Negation As Failure (SNAF), and the predicates defined in the Rule Interchange Format (RIF). We prove the feasibility of the approach by providing an implementation in Notation3 Logic. As such, we show that rule logic can cover both validation and reasoning if it is expressive enough. Keywords: N3, RDF Validation, Rule-Based Reasoning. 1 Introduction. The amount of publicly available Linked Open Data (LOD) sets is constantly growing; however, the diversity of the data employed in applications is mostly very limited: only a handful of RDF data is used frequently [27]. One of the reasons for this is that the datasets' quality and consistency varies significantly, ranging from expensively curated to relatively low quality data [33], and thus need to be validated carefully before use.
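
To make the point about implicit constraint violations concrete, here is a minimal sketch in plain Python. It is not the authors' Notation3 implementation: the triples, the `ex:` names, and the "every person needs a name" constraint are all hypothetical, chosen only to show how a violation can stay hidden until a reasoning step (here, the RDFS subclass rule) is applied, and how "no name" is judged only against the given graph, in the spirit of scoped negation as failure.

```python
# Hypothetical mini-graph of (subject, predicate, object) triples.
# All identifiers are illustrative assumptions, not taken from the paper.
TRIPLES = {
    ("ex:Researcher", "rdfs:subClassOf", "ex:Person"),
    ("ex:alice", "rdf:type", "ex:Researcher"),
    ("ex:bob", "rdf:type", "ex:Person"),
    ("ex:bob", "ex:name", "Bob"),
}


def infer_types(triples):
    """Apply the RDFS subclass rule until no new rdf:type triples appear."""
    closed = set(triples)
    changed = True
    while changed:
        changed = False
        for (s, p, o) in list(closed):
            if p != "rdf:type":
                continue
            for (sub, q, sup) in list(closed):
                is_superclass = q == "rdfs:subClassOf" and sub == o
                if is_superclass and (s, "rdf:type", sup) not in closed:
                    closed.add((s, "rdf:type", sup))
                    changed = True
    return closed


def missing_name(triples):
    """Return persons with no ex:name triple in *this* graph only.

    The absence check is scoped to the graph passed in, which is the
    idea behind scoped negation as failure.
    """
    persons = {s for (s, p, o) in triples if p == "rdf:type" and o == "ex:Person"}
    named = {s for (s, p, o) in triples if p == "ex:name"}
    return sorted(persons - named)


# Without reasoning, ex:alice is not known to be a person, so nothing is flagged.
violations_plain = missing_name(TRIPLES)
# After the subclass rule is applied, the implicit violation becomes visible.
violations_reasoned = missing_name(infer_types(TRIPLES))
```

In this sketch the same constraint check runs twice; only the second run, over the inferred graph, reports `ex:alice`, which is the kind of implicit violation a validator without reasoning would miss.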

The Application of Semantic Web Technologies to Content Analysis in Sociology

THE APPLICATION OF SEMANTIC WEB TECHNOLOGIES TO CONTENT ANALYSIS IN SOCIOLOGY. MASTER THESIS. Tabea Tietz. Matriculation number: 749153. Faculty of Economics and Social Science, University of Potsdam. First reviewer: Alexander Knoth, M.A. Second reviewer: Prof. Dr. rer. nat. Harald Sack. Potsdam, August 2018. Tabea Tietz: The Application of Semantic Web Technologies to Content Analysis in Sociology, © August 2018. ABSTRACT. In sociology, texts are understood as social phenomena and provide means to analyze social reality. Throughout the years, a broad range of techniques evolved to perform such analysis, qualitative and quantitative approaches as well as completely manual analyses and computer-assisted methods. The development of the World Wide Web and social media as well as technical developments like optical character recognition and automated speech recognition contributed to the enormous increase of text available for analysis. This also led sociologists to rely more on computer-assisted approaches for their text analysis and included statistical Natural Language Processing (NLP) techniques. A variety of techniques, tools and use cases developed, which lack an overall uniform way of standardizing these approaches. Furthermore, this problem is coupled with a lack of standards for reporting studies with regard to text analysis in sociology. Semantic Web and Linked Data provide a variety of standards to represent information and knowledge. Numerous applications make use of these standards, including possibilities to publish data and to perform Named Entity Linking, a specific branch of NLP. This thesis attempts to discuss the question to what extent the standards and tools provided by the Semantic Web and Linked Data community may support computer-assisted text analysis in sociology. First, these tools and standards will be briefly introduced and then applied to the use case of constitutional texts of the Netherlands from 1884 to 2016.

Semantic Description of Web Services

Semantic Description of Web Services. Thabet Slimani, CS Department, Taif University, P.O.Box 888, 21974, KSA. Abstract. The tasks of semantic web service (discovery, selection, composition, and execution) are supposed to enable seamless interoperation between systems, whereby human intervention is kept at a minimum. In the field of Web service description research, the exploitation of descriptions of services through semantics is a better support for the life-cycle of Web services. The large number of developed ontologies, languages of representations, and integrated frameworks supporting the discovery, composition and invocation of services is a good indicator that research in the field of Semantic Web Services (SWS) has been considerably active. We provide in this paper a detailed classification of the approaches and solutions, indicating their core characteristics and objectives required and provide indicators for the interested reader to follow up further insights and details about these solutions and related software. Keywords: SWS, SWS description, top-down approaches, bottom-up approaches, RESTful services. syntaxes) and in terms of the paradigms proposed for employing these in practice. This paper is dedicated to provide an overview of these approaches, expressing their classification in terms of commonalities and differences. It provides an understanding of the technical foundation on which they are built. These techniques are classified from a range of research areas including Top-down, Bottom-up and Restful Approaches. This paper does also provide some grounding that could help the reader perform a more detailed analysis of the different approaches which relies on the required objectives. We provide a little detailed comparison between some approaches because this would require addressing them from the perspective of some tasks

Mapping Between Digital Identity Ontologies Through SISM

Mapping between Digital Identity Ontologies through SISM. Matthew Rowe, The OAK Group, Department of Computer Science, University of Sheffield, Regent Court, 211 Portobello Street, Sheffield S1 4DP, UK. Abstract. Various ontologies are available defining the semantics of digital identity information. Due to the rise in use of lowercase semantics, such ontologies are now used to add metadata to digital identity information within web pages. However, concepts exist in these ontologies which are related and must be mapped together in order to enhance machine-readability of identity information on the web. This paper presents the Social identity Schema Mapping (SISM) vocabulary which contains a set of mappings between related concepts in distinct digital identity ontologies using OWL and SKOS mapping constructs. Key words: Semantic Web, Social Web, SKOS, OWL, FOAF, SIOC, PIMO, NCO, Microformats. 1 Introduction. The semantic web provides a web of machine-readable data. Ontologies form a vital component of the semantic web by providing conceptualisations of domains of knowledge which can then be used to provide a common understanding of some domain. A basic ontology contains a vocabulary of concepts and definitions of the relationships between those concepts. An agent reading a concept from an ontology can look up the concept and discover its properties and characteristics, therefore interpreting how it fits into that particular domain. Due to the great number of ontologies it is common for related concepts to be defined in separate ontologies; these concepts must be identified and mapped together. Web technologies such as Microformats, eRDF and RDFa have allowed web developers to encode lowercase semantics within XHTML pages.

D1.2.1 WSMO Grounding in SAWSDL

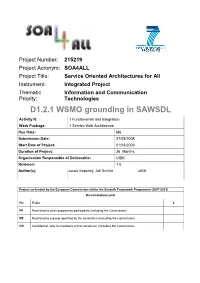

Project Number: 215219. Project Acronym: SOA4ALL. Project Title: Service Oriented Architectures for All. Instrument: Integrated Project. Thematic Priority: Information and Communication Technologies. D1.2.1 WSMO grounding in SAWSDL. Activity N: 1 Fundamental and Integration. Work Package: 1 Service Web Architecture. Due Date: M6. Submission Date: 27/08/2008. Start Date of Project: 01/03/2006. Duration of Project: 36 Months. Organisation Responsible of Deliverable: UIBK. Revision: 1.0. Author(s): Jacek Kopecký, Adi Schütz (UIBK). Project co-funded by the European Commission within the Seventh Framework Programme (2007-2013). Dissemination Level: PU Public (X); PP Restricted to other programme participants (including the Commission); RE Restricted to a group specified by the consortium (including the Commission); CO Confidential, only for members of the consortium (including the Commission). SOA4All –FP7 – 215219 – D1.2.1 WSMO grounding in SAWSDL. Version History (Version, Date, Comments/Changes/Status, Authors/contributors/reviewers): 0.1, 2008/07/16, Initial version, Jacek Kopecký; 0.2, 2008/07/24, Filling in more, Jacek Kopecký, Adi Schütz; 0.9, 2008/07/25, A complete draft, ready for review, Jacek Kopecký, Adi Schütz; 0.95, 2008/07/19, Review comments incorporated, Elisabetta Di Nitto, Marin Dimitrov, Jacek Kopecký; 1.0, 2008/07/23, Version to be submitted, Jacek Kopecký. Table of Contents: Executive Summary; 1. Introduction

A Framework for Semantic Publishing of Modular Content Objects

A Framework for Semantic Publishing of Modular Content Objects. Catalin David, Deyan Ginev, Michael Kohlhase, Bogdan Matican, Stefan Mirea. Computer Science, Jacobs University, Germany; http://kwarc.info/ Abstract. We present the Active Documents approach to semantic publishing (semantically annotated documents associated with a content commons that holds the background ontologies) and the Planetary system (as an active document player). In this paper we explore the interaction of content object reuse and context sensitivity in the presentation process that transforms content modules to active documents. We propose a "separate compilation and dynamic linking" regime that makes semantic publishing of highly structured content representations into active documents tractable and show how this is realized in the Planetary system. 1 Introduction. Semantic publication can range from merely equipping published documents with RDFa annotations, expressing metadata or inter-paper links, to frameworks that support the provisioning of user-adapted documents from content representations and instrumenting them with interactions based on the semantic information embedded in the content forms. We want to propose an entry to the latter category in this paper. Our framework is based on semantically annotated documents together with semantic background ontologies (which we call the content commons). This information can then be used by user-visible, semantic services like program (fragment) execution, computation, visualization, navigation, information aggregation and information retrieval (see Figure 5). Finally a document player application can embed these services to make documents executable. We call this framework the Active Documents Paradigm (ADP), since documents can also actively adapt to user preferences and environment rather than only executing services upon user request.

Linked Data Schemata: Fixing Unsound Foundations

Linked data schemata: fixing unsound foundations. Kevin Feeney, Gavin Mendel Gleason, Rob Brennan. Knowledge and Data Engineering Group & ADAPT Centre, School of Computer Science & Statistics, Trinity College Dublin, Ireland. Abstract. This paper describes an analysis, and the tools and methods used to produce it, of the practical and logical implications of unifying common linked data vocabularies into a single logical model. In order to support any type of reasoning or even just simple type-checking, the vocabularies that are referenced by linked data statements need to be unified into a complete model wherever they reference or reuse terms that have been defined in other linked data vocabularies. Strong interdependencies between vocabularies are common and a large number of logical and practical problems make this unification inconsistent and messy. However, the situation is far from hopeless. We identify a minimal set of necessary fixes that can be carried out to make a large number of widely-deployed vocabularies mutually compatible, and a set of wider-ranging recommendations for linked data ontology design best practice to help alleviate the problem in future. Finally we make some suggestions for improving OWL’s support for distributed authoring and ontology reuse in the wild. Keywords: Linked Data, Reasoning, Data Quality. 1. Introduction. One of the central tenets of the Linked Data movement is the reuse of terms from existing well-known vocabularies [Bizer09] when developing new schemata or datasets. The semantic web infrastructure, and the RDF, RDFS and OWL languages, support this with their inherently distributed and modular nature. In practice, through vocabulary reuse, linked data schemata adopt knowledge models that are based on multiple, independently devised ontologies that often exhibit varying definitional semantics [Hogan12].