An Overview of Remote 3D Visualisation VDI Technologies

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

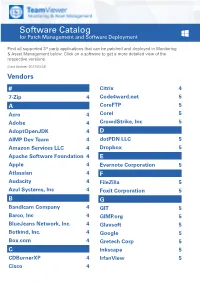

Software Catalog for Patch Management and Software Deployment

Find all supported 3rd party applications that can be patched and deployed in Monitoring & Asset Management below. Click on a software to get a more detailed view of the respective versions. (Last Update: 2021/03/23) Vendors: 7-Zip; Acro; Adobe; AdoptOpenJDK; AIMP Dev Team; Amazon Services LLC; Apache Software Foundation; Apple; Atlassian; Audacity; Azul Systems, Inc; Bandicam Company; Barco, Inc; BlueJeans Network, Inc.; Botkind, Inc.; Box.com; CDBurnerXP; Cisco; Citrix; Code4ward.net; CoreFTP; Corel; CrowdStrike, Inc; dotPDN LLC; Dropbox; Evernote Corporation; FileZilla; Foxit Corporation; GIT; GIMP.org; Glavsoft; Google; Gretech Corp; Inkscape; IrfanView; Jabra; JAM Software; Juraj Simlovic; KeePass; LibreOffice; Lightning UK; LogMeIn, Inc.; Malwarebytes Corporation; Microsoft; MIT; Morphisec; Mozilla Foundation; Neevia Technology; NextCloud GmbH; Nitro Software, Inc.; Nmap Project; Node.js Foundation; Notepad++; PeaZip; Pidgin; Piriform; Plantronics, Inc.; Plex, Inc; Prezi Inc; Programmer's Notepad; PSPad; QSR International; Quest Software, Inc; R Foundation; RarLab; Real; RealVNC; RingCentral, Inc.; Scooter Software, Inc; Siber Systems; Simon Tatham; Skype Technologies S.A. -

Using Remote Desktop Services with iFIX

Proficy iFIX 6.5: Using Remote Desktop Services. GE Digital. Proprietary Notice: The information contained in this publication is believed to be accurate and reliable. However, General Electric Company assumes no responsibilities for any errors, omissions or inaccuracies. Information contained in the publication is subject to change without notice. No part of this publication may be reproduced in any form, or stored in a database or retrieval system, or transmitted or distributed in any form by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior written permission of General Electric Company. © 2021, General Electric Company. All rights reserved. Trademark Notices: GE, the GE Monogram, and Predix are either registered trademarks or trademarks of General Electric Company. Microsoft® is a registered trademark of Microsoft Corporation, in the United States and/or other countries. All other trademarks are the property of their respective owners. We want to hear from you. If you have any comments, questions, or suggestions about our documentation, send them to the following email address: [email protected] Table of Contents: Using Remote Desktop Services with iFIX; Reference Documents; Introduction to Remote Desktop Services; Using iClientTS; Understanding the iFIX and Remote Desktop Services; File System Support; Where to Find More Information on Remote Desktop Services; Getting -

RealVNC @ LC August 2018 LC User Meeting

RealVNC @ LC, August 2018 LC User Meeting. Cameron Harr, Aug. 21, 2018. LLNL-PRES-XXXXXX. This work was performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under contract DE-AC52-07NA27344. Lawrence Livermore National Security, LLC. RealVNC @ LC: What is RealVNC? What did we have? What do we have now? What’s coming in the future? How do you use it? Cloud versus direct with VNC Connect: VNC Connect is unique among remote access software in its ability to offer both cloud and direct connectivity methods within a single product. At first glance, knowing whether to connect directly or via our cloud service can seem confusing. However, there are clear benefits to each connection method. The trick is knowing how to maximize these benefits. This brief product guide provides an overview of the differences between cloud and direct connectivity, and offers some advice on how each method can be used to your greatest advantage. Key terminology: Throughout this guide, we refer to certain RealVNC-specific terminology. VNC Connect is comprised of two separate apps: VNC Server and VNC Viewer. You must install and license VNC Server on the computer you want to control. This is known as your VNC Server computer. You must then install VNC Viewer on the computer or device you want to take control from, which is known as your VNC Viewer device. You do not need to license this device, meaning you can freely connect to your VNC Server computer from as many devices as you wish. -

Accelerometer Sensor Emulation!!!! by Columbia University NYC Thesis Raghavan Santhanam Benchmark

Enabling the Virtual Phones to remotely sense the Real Phones in real-time ~ A Sensor Emulation initiative for virtualized Android-x86 ~ Raghavan Santhanam Submitted in partial fulfillment of the requirements for the degree of Master of Science in Computer Science in the School of Engineering and Applied Science 2014 © 2014 Raghavan Santhanam All Rights Reserved ABSTRACT Enabling the Virtual Phones to remotely sense the Real Phones in real-time ~ A Sensor Emulation initiative for virtualized Android-x86 ~ Smartphones nowadays have the ground-breaking features that were only a figment of one’s imagination. For the ever-demanding cellphone users, the exhaustive list of features that a smartphone supports just keeps getting more exhaustive with time. These features aid one’s personal and professional uses as well. Extrapolating into the future the features of a present-day smartphone, the lives of us humans using smartphones are going to be unimaginably agile. With the above said emphasis on the current and future potential of a smartphone, the ability to virtualize smartphones with all their real-world features into a virtual platform, is a boon for those who want to rigorously experiment and customize the virtualized smartphone hardware without spending an extra penny. Once virtualizable independently on a larger scale, the idea of virtualized smartphones with all the virtualized pieces of hardware takes an interesting turn with the sensors being virtualized in a way that’s closer to the real-world behavior. When accessible remotely with the real-time responsiveness, the above mentioned real-world behavior will be a real dealmaker in many real-world systems, namely, the life-saving systems like the ones that instantaneously get alerts about harmful magnetic radiations in the deep mining areas, etc. -

A Java Implementation of a Portable Desktop Manager, Scott J. Griswold, University of North Florida

UNF Digital Commons, UNF Graduate Theses and Dissertations, Student Scholarship, 1998. A Java Implementation of a Portable Desktop Manager. Scott J. Griswold, University of North Florida. Suggested Citation: Griswold, Scott J., "A Java Implementation of a Portable Desktop Manager" (1998). UNF Graduate Theses and Dissertations. 95. https://digitalcommons.unf.edu/etd/95 This Master's Thesis is brought to you for free and open access by the Student Scholarship at UNF Digital Commons. It has been accepted for inclusion in UNF Graduate Theses and Dissertations by an authorized administrator of UNF Digital Commons. For more information, please contact Digital Projects. © 1998 All Rights Reserved. A JAVA IMPLEMENTATION OF A PORTABLE DESKTOP MANAGER by Scott J. Griswold. A thesis submitted to the Department of Computer and Information Sciences in partial fulfillment of the requirements for the degree of Master of Science in Computer and Information Sciences. UNIVERSITY OF NORTH FLORIDA, DEPARTMENT OF COMPUTER AND INFORMATION SCIENCES, April, 1998. The thesis "A Java Implementation of a Portable Desktop Manager" submitted by Scott J. Griswold in partial fulfillment of the requirements for the degree of Master of Science in Computer and Information Sciences has been approved by: Dr. Ralph Butler, Thesis Advisor and Committee Chairperson; Dr. Yap S. Chua. Accepted for the Department of Computer and Information Sciences: Dr. Charles N. Winton, Chairperson of the Department. Accepted for the College of Computing Sciences and E: Dr. Charles N. Winton, Acting Dean of the College. Accepted for the University: Dr. -

VNC Connect Security Whitepaper

VNC Connect security whitepaper, Version 1.3. Contents: Introduction; Security architecture; Cloud infrastructure; Client security; Development procedures; Summary. Introduction: Customer security is of paramount importance to RealVNC. As such, our security strategy is ingrained in all aspects of our VNC Connect software. We have invested extensively in our security, and take great pride in our successful -

Organizing Screens with Mission Control

7 Organizing Screens with Mission Control. If you’re like a lot of Mac users, you like to do a lot of things at once. No matter how big your screen may be, it can still feel crowded as you open and arrange multiple windows on the desktop. The solution to the problem? Mission Control. The idea behind Mission Control is to show what you’re running all at once. It allows you to quickly swap programs. In addition, Mission Control lets you create multiple virtual desktops (called Spaces) that you can display one at a time. By storing one or more program windows in a single space, you can keep open windows organized without cluttering up a single screen. When you want to view another window, just switch to a different virtual desktop. Project goal: Learn to use Mission Control to create and manage virtual desktops (Spaces). My New Mac, Lion Edition © 2011 by Wallace Wang. What You’ll Be Using: To learn how to switch through multiple virtual desktops (Spaces) on your Macintosh using Mission Control, you’ll use the following: Mission Control; the Safari web browser; the Finder program. Starting Mission Control: Initially, your Macintosh displays a single desktop, which is what you see when you start up your Macintosh. When you want to create additional virtual desktops, or Spaces, you’ll need to start Mission Control. There are three ways to start Mission Control: Start Mission Control from the Applications folder or Dock. Press F9. -

VMware Horizon 7 Datasheet

DATASHEET: VMWARE HORIZON 7. AT A GLANCE: VMware Horizon® 7 delivers virtualized or hosted desktops and applications through a single platform to end users. These desktop and application services (including Remote Desktop Services (RDS) hosted apps, packaged apps with VMware ThinApp®, software-as-a-service (SaaS) apps, and even virtualized apps from Citrix) can all be accessed from one digital workspace across devices, locations, media, and connections without compromising quality and user experience. Leveraging complete workspace environment management and optimized for the software-defined data center, Horizon 7 helps IT control, manage, and protect all of the Windows resources end users want, at the speed they expect, with the efficiency business demands. End-User Computing Today: Today, end users are leveraging new types of devices for work, accessing Windows and Linux applications alongside iOS or Android applications, and are more mobile than ever. In this new mobile cloud world, managing and delivering services to end users with traditional PC-centric tools have become increasingly difficult. Data loss and image drift are real security and compliance concerns. And organizations are struggling to contain costs. Horizon 7 provides IT with a new streamlined approach to deliver, protect, and manage Windows and Linux desktops and applications while containing costs and ensuring that end users can work anytime, anywhere, on any device. Horizon 7: Delivering Desktops and Applications as a Service: Horizon 7 enables IT to centrally manage images to streamline management, reduce costs, and maintain compliance. With Horizon 7, virtualized or hosted desktops and applications can be delivered through a single platform to end -

AFRC Desktop Anywhere Windows 10 Installation Guide

Updated: 2/28/2020. AFRC DESKTOP ANYWHERE WINDOWS 10 INSTALLATION GUIDE. AFRC's Desktop-as-a-Service, aka Desktop Anywhere (DA), utilizes a user's personal computer (Mac/Windows), valid AF CAC, and additional applications (described below). Once launched on the computer, the DA session is a separate, containerized session completely isolated from the user's data, settings, hard drive, browser history or information of all kinds. There is no intermingling of anything between the secure, DA government session and the personal side of the computer. Essentially the DA session is a 'dumb' terminal that displays and accepts input; nothing is actually downloaded or uploaded to the user's computer. CONTENTS: Quick Links; DoD Certificates; Download InstallRoot; Install InstallRoot -

Using a Remote Desktop Connection with FileMaker Pro 12

FileMaker® Pro 12: Using a Remote Desktop Connection with FileMaker Pro 12. © 2007–2012 FileMaker, Inc. All Rights Reserved. FileMaker, Inc. 5201 Patrick Henry Drive Santa Clara, California 95054. FileMaker and Bento are trademarks of FileMaker, Inc. registered in the U.S. and other countries. The file folder logo and the Bento logo are trademarks of FileMaker, Inc. All other trademarks are the property of their respective owners. FileMaker documentation is copyrighted. You are not authorized to make additional copies or distribute this documentation without written permission from FileMaker. You may use this documentation solely with a valid licensed copy of FileMaker software. All persons, companies, email addresses, and URLs listed in the examples are purely fictitious and any resemblance to existing persons, companies, email addresses, or URLs is purely coincidental. Credits are listed in the Acknowledgements documents provided with this software. Mention of third-party products and URLs is for informational purposes only and constitutes neither an endorsement nor a recommendation. FileMaker, Inc. assumes no responsibility with regard to the performance of these products. For more information, visit our website at http://www.filemaker.com. Edition: 01. Contents: Chapter 1, Introduction to Remote Desktop Services and Citrix XenApp: About Remote Desktop Services; Remote Desktop Services server; Remote Desktop Services client (Remote Desktop Connection); Remote Desktop Protocol (RDP); Benefits of using Remote Desktop Services; System -

Accessing Remote Desktop Using VNC on Dell PowerEdge Servers

Accessing Remote Desktop using VNC on Dell PowerEdge Servers and MX7000 Modular Infrastructure. This technical white paper provides information about establishing secure remote desktop connections to server host operating systems (OS) by using the standard VNC clients. Abstract: Dell EMC PowerEdge servers support efficient and secure remote management tools. Virtual Network Computing (VNC) technology is incorporated in the iDRAC Enterprise firmware to support open and easy-to-use remote desktop functionality. This functionality is in addition to the browser-based remote console support accessible on the iDRAC GUI. September 2018. Dell EMC Technical White Paper. Revisions: Sep 2018, Initial release. Acknowledgements: This paper was produced by the following members of the Dell EMC Server and Infrastructure Systems team. Authors: Saurabh Kishore, Software Principal Engineer; Alex Rote, Software Senior Engineer. The information in this publication is provided “as is.” Dell Inc. makes no representations or warranties of any kind with respect to the information in this publication, and specifically disclaims implied warranties of merchantability or fitness for a particular purpose. Use, copying, and distribution of any software described in this publication requires an applicable software license. © Sep/12/2018 Dell Inc. or its subsidiaries. All Rights Reserved. Dell, EMC, Dell EMC and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other trademarks may be trademarks of their respective owners. Dell believes the information in this document is accurate as of its publication date. The information is subject to change without notice. Contents: Revisions -

Virtualization with Limited Hardware Support

Virtualization with Limited Hardware Support by Bi Wu, Department of Computer Science, Duke University. Approved: Landon Cox, Supervisor; Jeffrey Chase; Alvin Lebeck; Harvey Tuch. Dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Department of Computer Science in the Graduate School of Duke University, 2013. Copyright © 2013 by Bi Wu. All rights reserved except the rights granted by the Creative Commons Attribution-Noncommercial Licence. Abstract: Over the past 15 years, virtualization has become a standard way to migrate old software to new hardware, isolate untrusted code, and encapsulate application state. However, many of the techniques for implementing common virtualization functionality, such as transparent isolation and full-system record and replay, rely on hardware features provided by the x86 architecture. As the mainstream computing landscape diversifies to include less feature-rich mobile and embedded systems, it is critical to rethink how to efficiently provide core virtualization functionality with limited hardware support. As a result, this dissertation explores the feasibility of providing common virtualization functionality with limited hardware support. We propose three approaches to virtualization using limited hardware support. First, we describe a way to transparently virtualize a CPU using hardware breakpoints and on-demand control-flow analysis.