PERL Workbook

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Learning Perl Through Examples Part 2 L1110@BUMC 2/22/2017

Learning Perl Through Examples Part 2, L1110@BUMC, 2/22/2017. Yun Shen, Programmer Analyst [email protected] IS&T Research Computing Services, Spring 2017. www.perl.org. Tutorial Resource: Before we start, please note that all the code and supporting documents are accessible through http://rcs.bu.edu/examples/perl/tutorials/. Sign-In Sheet: We prepared a sign-in sheet for everyone to sign. We do this for internal management and quality control, so please sign in if you haven’t done so. Evaluation: One last piece of information before we start: don’t forget to go to http://rcs.bu.edu/survey/tutorial_evaluation.html and leave your feedback for this tutorial (both good and bad are welcome, as long as it is honest; thank you). Today’s Topic • Basics on creating your code • About Today’s Example • Learn Through Example 1 – fanconi_example_io.pl • Learn Through Example 2 – fanconi_example_str_process.pl • Learn Through Example 3 – fanconi_example_gene_anno.pl • Extra Examples (if time permits). Basics on creating your code: How to combine specs, tools, modules and knowledge.

Coleman-Coding-Freedom.Pdf

Coding Freedom: The Ethics and Aesthetics of Hacking. E. Gabriella Coleman. Princeton University Press, Princeton and Oxford. Copyright © 2013 by Princeton University Press. Creative Commons Attribution-NonCommercial-NoDerivs (CC BY-NC-ND). Requests for permission to modify material from this work should be sent to Permissions, Princeton University Press. Published by Princeton University Press, 41 William Street, Princeton, New Jersey 08540. In the United Kingdom: Princeton University Press, 6 Oxford Street, Woodstock, Oxfordshire OX20 1TW. press.princeton.edu. All Rights Reserved. At the time of writing of this book, the references to Internet Web sites (URLs) were accurate. Neither the author nor Princeton University Press is responsible for URLs that may have expired or changed since the manuscript was prepared. Library of Congress Cataloging-in-Publication Data: Coleman, E. Gabriella, 1973– Coding freedom : the ethics and aesthetics of hacking / E. Gabriella Coleman. p. cm. Includes bibliographical references and index. ISBN 978-0-691-14460-3 (hbk. : alk. paper), ISBN 978-0-691-14461-0 (pbk. : alk. paper) 1. Computer hackers. 2. Computer programmers. 3. Computer programming—Moral and ethical aspects. 4. Computer programming—Social aspects. 5. Intellectual freedom. I. Title. HD8039.D37C65 2012 174’.90051--dc23 2012031422. British Library Cataloging-in-Publication Data is available. This book has been composed in Sabon. Printed on acid-free paper. ∞ Printed in the United States of America. 1 3 5 7 9 10 8 6 4 2. This book is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. We must be free not because we claim freedom, but because we practice it. -

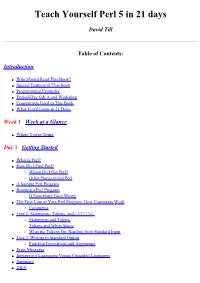

Teach Yourself Perl 5 in 21 Days

Teach Yourself Perl 5 in 21 days David Till Table of Contents: Introduction ● Who Should Read This Book? ● Special Features of This Book ● Programming Examples ● End-of-Day Q&A and Workshop ● Conventions Used in This Book ● What You'll Learn in 21 Days Week 1 Week at a Glance ● Where You're Going Day 1 Getting Started ● What Is Perl? ● How Do I Find Perl? ❍ Where Do I Get Perl? ❍ Other Places to Get Perl ● A Sample Perl Program ● Running a Perl Program ❍ If Something Goes Wrong ● The First Line of Your Perl Program: How Comments Work ❍ Comments ● Line 2: Statements, Tokens, and <STDIN> ❍ Statements and Tokens ❍ Tokens and White Space ❍ What the Tokens Do: Reading from Standard Input ● Line 3: Writing to Standard Output ❍ Function Invocations and Arguments ● Error Messages ● Interpretive Languages Versus Compiled Languages ● Summary ● Q&A ● Workshop ❍ Quiz ❍ Exercises Day 2 Basic Operators and Control Flow ● Storing in Scalar Variables Assignment ❍ The Definition of a Scalar Variable ❍ Scalar Variable Syntax ❍ Assigning a Value to a Scalar Variable ● Performing Arithmetic ❍ Example of Miles-to-Kilometers Conversion ❍ The chop Library Function ● Expressions ❍ Assignments and Expressions ● Other Perl Operators ● Introduction to Conditional Statements ● The if Statement ❍ The Conditional Expression ❍ The Statement Block ❍ Testing for Equality Using == ❍ Other Comparison Operators ● Two-Way Branching Using if and else ● Multi-Way Branching Using elsif ● Writing Loops Using the while Statement ● Nesting Conditional Statements ● Looping Using -

Perl for Windows NT Administrators

Perl for Windows NT Administrators, by Robert Mangold. As the author demonstrates, scripting in Perl can save Windows NT administrators time when performing a variety of tasks. Has this ever happened to you? The phone rings at your NT support desk. You are the person on duty, and the security enforcement officer tells you that the screen saver should start after 10 minutes of idle time, not 15 minutes. You’re faced with the agonizing task of changing the screen saver start time on the 1,000 Windows NT workstations that you just deployed. What can you do? In another scenario, say you are in a meeting with your boss, and he tells you to rename the server that holds the roaming profiles. You will need to change the profile path on roughly 700 user accounts. Your boss asks you how long it will take to accomplish this task, and you know that you will have to make the changes after hours, but it’s your son’s second birthday party tonight. WHY PERL? What is Perl? Perl stands for “Practical Extraction and Report Language,” or “Pathologically Eclectic Rubbish Lister”; both definitions are sanctioned by the Perl community (seriously!). Perl is a programming language, like C or Java. Wait! Before you mutter in disgust and hastily flip to the next article, bear with me. You may be thinking, “I’m not a programmer, nor do I want to be a programmer. I will not ‘go gentle into that good night.’” (I could not resist using a quote from Dylan Thomas.) So, why should you become familiar with Perl? Simple. It can save you time, win you friends, allow you to have a more -

Programming Perl (Nutshell Handbooks) Randal L. Schwartz

Programming Perl (Nutshell Handbooks) by Randal L. Schwartz, Larry Wall. Description: About the Author. Randal L. Schwartz is a two-decade veteran of the software industry. He is skilled in software design, system administration, security, technical writing, and training. Randal has coauthored the "must-have" standards: Programming Perl, Learning Perl, Learning Perl for Win32 Systems, and Effective Perl Programming, and is a regular columnist for WebTechniques, PerformanceComputing, SysAdmin, and Linux magazines. He is also a frequent contributor to the Perl newsgroups, and has moderated comp.lang.perl.announce since its inception.
His offbeat humor and technical mastery have reached legendary proportions worldwide (but he probably started some of those legends himself). -

Intermediate Perl

SECOND EDITION Intermediate Perl Randal L. Schwartz, brian d foy, and Tom Phoenix Beijing • Cambridge • Farnham • Köln • Sebastopol • Tokyo Intermediate Perl, Second Edition by Randal L. Schwartz, brian d foy, and Tom Phoenix Copyright © 2012 Randal Schwartz, brian d foy, Tom Phoenix. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://my.safaribooksonline.com). For more information, contact our corporate/institutional sales department: 800-998-9938 or [email protected]. Editors: Simon St. Laurent and Shawn Wallace Indexer: Lucie Haskins Production Editor: Kristen Borg Cover Designer: Karen Montgomery Copyeditor: Absolute Service, Inc. Interior Designer: David Futato Proofreader: Absolute Service, Inc. Illustrator: Rebecca Demarest March 2006: First Edition. August 2012: Second Edition. Revision History for the Second Edition: 2012-07-20 First release See http://oreilly.com/catalog/errata.csp?isbn=9781449393090 for release details. Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly Media, Inc. Intermediate Perl, the image of an alpaca, and related trade dress are trademarks of O’Reilly Media, Inc. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly Media, Inc., was aware of a trademark claim, the designations have been printed in caps or initial caps. While every precaution has been taken in the preparation of this book, the publisher and authors assume no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein. -

Perl 6 Essentials by Allison Randal, Dan Sugalski, Leopold Tötsch

Perl 6 Essentials By Allison Randal, Dan Sugalski, Leopold Tötsch. Publisher: O'Reilly. Pub Date: June 2003. ISBN: 0-596-00499-0. Pages: 208. Slots: 1. Perl 6 Essentials is the first book that offers a peek into the next major version of the Perl language. Written by members of the Perl 6 core development team, the book covers the development not only of Perl 6 syntax but also Parrot, the language-independent interpreter developed as part of the Perl 6 design strategy. This book is essential reading for anyone interested in the future of Perl. It will satisfy their curiosity and show how changes in the language will make it more powerful and easier to use. Contents: Copyright. Preface: How This Book Is Organized; Font Conventions; We'd Like to Hear from You; Acknowledgments. Chapter 1. Project Overview: Section 1.1. The Birth of Perl 6; Section 1.2. In the Beginning . . .; Section 1.3. The Continuing Mission. Chapter 2. Project Development: Section 2.1. Language Development; Section 2.2. Parrot Development. Chapter 3. Design Philosophy: Section 3.1. Linguistic and Cognitive Considerations; Section 3.2. Architectural Considerations. Chapter 4. Syntax: Section 4.1. Variables; Section 4.2. Operators; Section 4.3. Control Structures; Section 4.4. Subroutines; Section 4.5. Classes and Objects; Section 4.6. -


Learning Perl, 5th Edition [PDF]

Learning Perl. Other Perl resources from O’Reilly. Related titles: Advanced Perl Programming; Intermediate Perl; Mastering Perl; Perl 6 and Parrot Essentials; Perl Best Practices; Perl Cookbook; Perl Debugger Pocket Reference; Perl in a Nutshell; Perl Testing: A Developer’s Notebook; Practical mod_perl. Perl Books Resource Center: perl.oreilly.com is a complete catalog of O’Reilly’s books on Perl and related technologies, including sample chapters and code examples. Perl.com is the central web site for the Perl community. It is the perfect starting place for finding out everything there is to know about Perl. Conferences: O’Reilly brings diverse innovators together to nurture the ideas that spark revolutionary industries. We specialize in documenting the latest tools and systems, translating the innovator’s knowledge into useful skills for those in the trenches. Visit conferences.oreilly.com for our upcoming events. Safari Bookshelf (safari.oreilly.com) is the premier online reference library for programmers and IT professionals. Conduct searches across more than 1,000 books. Subscribers can zero in on answers to time-critical questions in a matter of seconds. Read the books on your Bookshelf from cover to cover or simply flip to the page you need. Try it today with a free trial. FIFTH EDITION Learning Perl™ Randal L. Schwartz, Tom Phoenix, and brian d foy Beijing • Cambridge • Farnham • Köln • Sebastopol • Taipei • Tokyo Learning Perl, Fifth Edition by Randal L. -

Pragmaticperl-Interviews-A4.Pdf

Pragmatic Perl Interviews, pragmaticperl.com, 2013-2015. Editor and interviewer: Viacheslav Tykhanovskyi. Covers: Marko Ivanyk. Revision: 2018-03-02 11:22. © Pragmatic Perl.
Contents
1 Preface
2 Alexis Sukrieh (April 2013)
3 Sawyer X (May 2013)
4 Stevan Little (September 2013)
5 chromatic (October 2013)
6 Marc Lehmann (November 2013)
7 Tokuhiro Matsuno (January 2014)
8 Randal Schwartz (February 2014)
9 Christian Walde (May 2014)
10 Florian Ragwitz (rafl) (June 2014)
11 Curtis “Ovid” Poe (September 2014)
12 Leon Timmermans (October 2014)
13 Olaf Alders (December 2014)
14 Ricardo Signes (January 2015)
15 Neil Bowers (February 2015)
16 Renée Bäcker (June 2015)
17 David Golden (July 2015)
18 Philippe Bruhat (Book) (August 2015)
19 Author
1 Preface: Hello there! You have downloaded a compilation of interviews done with Perl programmers in Pragmatic Perl journal from 2013 to 2015. Since the journal itself is in Russian -

Effective Perl Programming

Effective Perl Programming, Second Edition. The Effective Software Development Series, Scott Meyers, Consulting Editor. Visit informit.com/esds for a complete list of available publications. The Effective Software Development Series provides expert advice on all aspects of modern software development. Books in the series are well written, technically sound, and of lasting value. Each describes the critical things experts always do, or always avoid, to produce outstanding software. Scott Meyers, author of the best-selling books Effective C++ (now in its third edition), More Effective C++, and Effective STL (all available in both print and electronic versions), conceived of the series and acts as its consulting editor. Authors in the series work with Meyers to create essential reading in a format that is familiar and accessible for software developers of every stripe. Effective Perl Programming: Ways to Write Better, More Idiomatic Perl, Second Edition. Joseph N. Hall, Joshua A. McAdams, brian d foy. Upper Saddle River, NJ • Boston • Indianapolis • San Francisco • New York • Toronto • Montreal • London • Munich • Paris • Madrid • Capetown • Sydney • Tokyo • Singapore • Mexico City. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals. The authors and publisher have taken care in the preparation of this book, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. -

Perl by Example, Third Edition—This Book Is a Superb, Well-Written Programming Book

Praise for Ellie Quigley’s Books. “I picked up a copy of JavaScript by Example over the weekend and wanted to thank you for putting out a book that makes JavaScript easy to understand. I’ve been a developer for several years now and JS has always been the ‘monster under the bed,’ so to speak. Your book has answered a lot of questions I’ve had about the inner workings of JS but was afraid to ask. Now all I need is a book that covers Ajax and Coldfusion. Thanks again for putting together an outstanding book.” - Chris Gomez, Web services manager, Zunch Worldwide, Inc. “I have been reading your UNIX® Shells by Example book, and I must say, it is brilliant. Most other books do not cover all the shells, and when you have to constantly work in an organization that uses tcsh, bash, and korn, it can become very difficult. However, your book has been indispensable to me in learning the various shells and the differences between them…so I thought I’d email you, just to let you know what a great job you have done!” - Farogh-Ahmed Usmani, B.Sc. (Honors), M.Sc., DIC, project consultant (Billing Solutions), Comverse. “I have been learning Perl for about two months now; I have a little shell scripting experience but that is it. I first started with Learning Perl by O’Reilly. Good book but lacking on the examples. I then went to Programming Perl by Larry Wall, a great book for intermediate to advanced, didn’t help me much beginning Perl. -

Programming Perl, 3rd Edition

Programming Perl, Third Edition. Larry Wall, Tom Christiansen & Jon Orwant. Beijing • Cambridge • Farnham • Köln • Paris • Sebastopol • Taipei • Tokyo. Programming Perl, Third Edition by Larry Wall, Tom Christiansen, and Jon Orwant. Copyright © 2000, 1996, 1991 O’Reilly & Associates, Inc. All rights reserved. Printed in the United States of America. Published by O’Reilly & Associates, Inc., 101 Morris Street, Sebastopol, CA 95472. Editor, First Edition: Tim O’Reilly. Editor, Second Edition: Steve Talbott. Editor, Third Edition: Linda Mui. Technical Editor: Nathan Torkington. Production Editor: Melanie Wang. Cover Designer: Edie Freedman. Printing History: January 1991: First Edition. September 1996: Second Edition. July 2000: Third Edition. Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly & Associates, Inc. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly & Associates, Inc. was aware of a trademark claim, the designations have been printed in caps or initial caps. The association between the image of a camel and the Perl language is a trademark of O’Reilly & Associates, Inc. Permission may be granted for non-commercial use; please inquire by sending mail to [email protected]. While every precaution has been taken in the preparation of this book, the publisher assumes no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein. Library of Congress Cataloging-in-Publication Data: Wall, Larry. Programming Perl/Larry Wall, Tom Christiansen & Jon Orwant.--3rd ed. p. cm. ISBN 0-596-00027-8 1. -