SC505 STOCHASTIC PROCESSES Class Notes

Recommended publications

-

Scalable Nonparametric Bayesian Inference on Point Processes with Gaussian Processes

Scalable Nonparametric Bayesian Inference on Point Processes with Gaussian Processes Yves-Laurent Kom Samo [email protected] Stephen Roberts [email protected] Deparment of Engineering Science and Oxford-Man Institute, University of Oxford Abstract 2. Related Work In this paper we propose an efficient, scalable Non-parametric inference on point processes has been ex- non-parametric Gaussian process model for in- tensively studied in the literature. Rathbum & Cressie ference on Poisson point processes. Our model (1994) and Moeller et al. (1998) used a finite-dimensional does not resort to gridding the domain or to intro- piecewise constant log-Gaussian for the intensity function. ducing latent thinning points. Unlike competing Such approximations are limited in that the choice of the 3 models that scale as O(n ) over n data points, grid on which to represent the intensity function is arbitrary 2 our model has a complexity O(nk ) where k and one has to trade-off precision with computational com- n. We propose a MCMC sampler and show that plexity and numerical accuracy, with the complexity being the model obtained is faster, more accurate and cubic in the precision and exponential in the dimension of generates less correlated samples than competing the input space. Kottas (2006) and Kottas & Sanso (2007) approaches on both synthetic and real-life data. used a Dirichlet process mixture of Beta distributions as Finally, we show that our model easily handles prior for the normalised intensity function of a Poisson data sizes not considered thus far by alternate ap- process. Cunningham et al. -

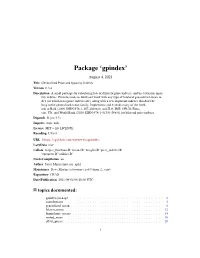

Gpindex: Generalized Price and Quantity Indexes

Package ‘gpindex’ August 4, 2021 Title Generalized Price and Quantity Indexes Version 0.3.4 Description A small package for calculating lots of different price indexes, and by extension quan- tity indexes. Provides tools to build and work with any type of bilateral generalized-mean in- dex (of which most price indexes are), along with a few important indexes that don't be- long to the generalized-mean family. Implements and extends many of the meth- ods in Balk (2008, ISBN:978-1-107-40496-0) and ILO, IMF, OECD, Euro- stat, UN, and World Bank (2020, ISBN:978-1-51354-298-0) for bilateral price indexes. Depends R (>= 3.5) Imports stats, utils License MIT + file LICENSE Encoding UTF-8 URL https://github.com/marberts/gpindex LazyData true Collate 'helper_functions.R' 'means.R' 'weights.R' 'price_indexes.R' 'operators.R' 'utilities.R' NeedsCompilation no Author Steve Martin [aut, cre, cph] Maintainer Steve Martin <[email protected]> Repository CRAN Date/Publication 2021-08-04 06:10:06 UTC R topics documented: gpindex-package . .2 contributions . .3 generalized_mean . .8 lehmer_mean . 12 logarithmic_means . 15 nested_mean . 18 offset_prices . 20 1 2 gpindex-package operators . 22 outliers . 23 price_data . 25 price_index . 26 transform_weights . 33 Index 37 gpindex-package Generalized Price and Quantity Indexes Description A small package for calculating lots of different price indexes, and by extension quantity indexes. Provides tools to build and work with any type of bilateral generalized-mean index (of which most price indexes are), along with a few important indexes that don’t belong to the generalized-mean family. Implements and extends many of the methods in Balk (2008, ISBN:978-1-107-40496-0) and ILO, IMF, OECD, Eurostat, UN, and World Bank (2020, ISBN:978-1-51354-298-0) for bilateral price indexes. -

Deep Neural Networks As Gaussian Processes

Published as a conference paper at ICLR 2018 DEEP NEURAL NETWORKS AS GAUSSIAN PROCESSES Jaehoon Lee∗y, Yasaman Bahri∗y, Roman Novak , Samuel S. Schoenholz, Jeffrey Pennington, Jascha Sohl-Dickstein Google Brain fjaehlee, yasamanb, romann, schsam, jpennin, [email protected] ABSTRACT It has long been known that a single-layer fully-connected neural network with an i.i.d. prior over its parameters is equivalent to a Gaussian process (GP), in the limit of infinite network width. This correspondence enables exact Bayesian inference for infinite width neural networks on regression tasks by means of evaluating the corresponding GP. Recently, kernel functions which mimic multi-layer random neural networks have been developed, but only outside of a Bayesian framework. As such, previous work has not identified that these kernels can be used as co- variance functions for GPs and allow fully Bayesian prediction with a deep neural network. In this work, we derive the exact equivalence between infinitely wide deep net- works and GPs. We further develop a computationally efficient pipeline to com- pute the covariance function for these GPs. We then use the resulting GPs to per- form Bayesian inference for wide deep neural networks on MNIST and CIFAR- 10. We observe that trained neural network accuracy approaches that of the corre- sponding GP with increasing layer width, and that the GP uncertainty is strongly correlated with trained network prediction error. We further find that test perfor- mance increases as finite-width trained networks are made wider and more similar to a GP, and thus that GP predictions typically outperform those of finite-width networks. -

Vector Calculus and Multiple Integrals Rob Fender, HT 2018

Vector Calculus and Multiple Integrals Rob Fender, HT 2018 COURSE SYNOPSIS, RECOMMENDED BOOKS Course syllabus (on which exams are based): Double integrals and their evaluation by repeated integration in Cartesian, plane polar and other specified coordinate systems. Jacobians. Line, surface and volume integrals, evaluation by change of variables (Cartesian, plane polar, spherical polar coordinates and cylindrical coordinates only unless the transformation to be used is specified). Integrals around closed curves and exact differentials. Scalar and vector fields. The operations of grad, div and curl and understanding and use of identities involving these. The statements of the theorems of Gauss and Stokes with simple applications. Conservative fields. Recommended Books: Mathematical Methods for Physics and Engineering (Riley, Hobson and Bence) This book is lazily referred to as “Riley” throughout these notes (sorry, Drs H and B) You will all have this book, and it covers all of the maths of this course. However it is rather terse at times and you will benefit from looking at one or both of these: Introduction to Electrodynamics (Griffiths) You will buy this next year if you haven’t already, and the chapter on vector calculus is very clear Div grad curl and all that (Schey) A nice discussion of the subject, although topics are ordered differently to most courses NB: the latest version of this book uses the opposite convention to polar coordinates to this course (and indeed most of physics), but older versions can often be found in libraries 1 Week One A review of vectors, rotation of coordinate systems, vector vs scalar fields, integrals in more than one variable, first steps in vector differentiation, the Frenet-Serret coordinate system Lecture 1 Vectors A vector has direction and magnitude and is written in these notes in bold e.g. -

A Brief Tour of Vector Calculus

A BRIEF TOUR OF VECTOR CALCULUS A. HAVENS Contents 0 Prelude ii 1 Directional Derivatives, the Gradient and the Del Operator 1 1.1 Conceptual Review: Directional Derivatives and the Gradient........... 1 1.2 The Gradient as a Vector Field............................ 5 1.3 The Gradient Flow and Critical Points ....................... 10 1.4 The Del Operator and the Gradient in Other Coordinates*............ 17 1.5 Problems........................................ 21 2 Vector Fields in Low Dimensions 26 2 3 2.1 General Vector Fields in Domains of R and R . 26 2.2 Flows and Integral Curves .............................. 31 2.3 Conservative Vector Fields and Potentials...................... 32 2.4 Vector Fields from Frames*.............................. 37 2.5 Divergence, Curl, Jacobians, and the Laplacian................... 41 2.6 Parametrized Surfaces and Coordinate Vector Fields*............... 48 2.7 Tangent Vectors, Normal Vectors, and Orientations*................ 52 2.8 Problems........................................ 58 3 Line Integrals 66 3.1 Defining Scalar Line Integrals............................. 66 3.2 Line Integrals in Vector Fields ............................ 75 3.3 Work in a Force Field................................. 78 3.4 The Fundamental Theorem of Line Integrals .................... 79 3.5 Motion in Conservative Force Fields Conserves Energy .............. 81 3.6 Path Independence and Corollaries of the Fundamental Theorem......... 82 3.7 Green's Theorem.................................... 84 3.8 Problems........................................ 89 4 Surface Integrals, Flux, and Fundamental Theorems 93 4.1 Surface Integrals of Scalar Fields........................... 93 4.2 Flux........................................... 96 4.3 The Gradient, Divergence, and Curl Operators Via Limits* . 
103 4.4 The Stokes-Kelvin Theorem..............................108 4.5 The Divergence Theorem ...............................112 4.6 Problems........................................114 List of Figures 117 i 11/14/19 Multivariate Calculus: Vector Calculus Havens 0. -

Gaussian Process Dynamical Models for Human Motion

IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE, VOL. 30, NO. 2, FEBRUARY 2008 283 Gaussian Process Dynamical Models for Human Motion Jack M. Wang, David J. Fleet, Senior Member, IEEE, and Aaron Hertzmann, Member, IEEE Abstract—We introduce Gaussian process dynamical models (GPDMs) for nonlinear time series analysis, with applications to learning models of human pose and motion from high-dimensional motion capture data. A GPDM is a latent variable model. It comprises a low- dimensional latent space with associated dynamics, as well as a map from the latent space to an observation space. We marginalize out the model parameters in closed form by using Gaussian process priors for both the dynamical and the observation mappings. This results in a nonparametric model for dynamical systems that accounts for uncertainty in the model. We demonstrate the approach and compare four learning algorithms on human motion capture data, in which each pose is 50-dimensional. Despite the use of small data sets, the GPDM learns an effective representation of the nonlinear dynamics in these spaces. Index Terms—Machine learning, motion, tracking, animation, stochastic processes, time series analysis. Ç 1INTRODUCTION OOD statistical models for human motion are important models such as hidden Markov model (HMM) and linear Gfor many applications in vision and graphics, notably dynamical systems (LDS) are efficient and easily learned visual tracking, activity recognition, and computer anima- but limited in their expressiveness for complex motions. tion. It is well known in computer vision that the estimation More expressive models such as switching linear dynamical of 3D human motion from a monocular video sequence is systems (SLDS) and nonlinear dynamical systems (NLDS), highly ambiguous. -

Multivariable and Vector Calculus

Multivariable and Vector Calculus Lecture Notes for MATH 0200 (Spring 2015) Frederick Tsz-Ho Fong Department of Mathematics Brown University Contents 1 Three-Dimensional Space ....................................5 1.1 Rectangular Coordinates in R3 5 1.2 Dot Product7 1.3 Cross Product9 1.4 Lines and Planes 11 1.5 Parametric Curves 13 2 Partial Differentiations ....................................... 19 2.1 Functions of Several Variables 19 2.2 Partial Derivatives 22 2.3 Chain Rule 26 2.4 Directional Derivatives 30 2.5 Tangent Planes 34 2.6 Local Extrema 36 2.7 Lagrange’s Multiplier 41 2.8 Optimizations 46 3 Multiple Integrations ........................................ 49 3.1 Double Integrals in Rectangular Coordinates 49 3.2 Fubini’s Theorem for General Regions 53 3.3 Double Integrals in Polar Coordinates 57 3.4 Triple Integrals in Rectangular Coordinates 62 3.5 Triple Integrals in Cylindrical Coordinates 67 3.6 Triple Integrals in Spherical Coordinates 70 4 Vector Calculus ............................................ 75 4.1 Vector Fields on R2 and R3 75 4.2 Line Integrals of Vector Fields 83 4.3 Conservative Vector Fields 88 4.4 Green’s Theorem 98 4.5 Parametric Surfaces 105 4.6 Stokes’ Theorem 120 4.7 Divergence Theorem 127 5 Topics in Physics and Engineering .......................... 133 5.1 Coulomb’s Law 133 5.2 Introduction to Maxwell’s Equations 137 5.3 Heat Diffusion 141 5.4 Dirac Delta Functions 144 1 — Three-Dimensional Space 1.1 Rectangular Coordinates in R3 Throughout the course, we will use an ordered triple (x, y, z) to represent a point in the three dimensional space. -

Modelling Multi-Object Activity by Gaussian Processes 1

LOY et al.: MODELLING MULTI-OBJECT ACTIVITY BY GAUSSIAN PROCESSES 1 Modelling Multi-object Activity by Gaussian Processes Chen Change Loy School of EECS [email protected] Queen Mary University of London Tao Xiang E1 4NS London, UK [email protected] Shaogang Gong [email protected] Abstract We present a new approach for activity modelling and anomaly detection based on non-parametric Gaussian Process (GP) models. Specifically, GP regression models are formulated to learn non-linear relationships between multi-object activity patterns ob- served from semantically decomposed regions in complex scenes. Predictive distribu- tions are inferred from the regression models to compare with the actual observations for real-time anomaly detection. The use of a flexible, non-parametric model alleviates the difficult problem of selecting appropriate model complexity encountered in parametric models such as Dynamic Bayesian Networks (DBNs). Crucially, our GP models need fewer parameters; they are thus less likely to overfit given sparse data. In addition, our approach is robust to the inevitable noise in activity representation as noise is modelled explicitly in the GP models. Experimental results on a public traffic scene show that our models outperform DBNs in terms of anomaly sensitivity, noise robustness, and flexibil- ity in modelling complex activity. 1 Introduction Activity modelling and automatic anomaly detection in video have received increasing at- tention due to the recent large-scale deployments of surveillance cameras. These tasks are non-trivial because complex activity patterns in a busy public space involve multiple objects interacting with each other over space and time, whilst anomalies are often rare, ambigu- ous and can be easily confused with noise caused by low image quality, unstable lighting condition and occlusion. -

Linear Discriminant Analysis Using a Generalized Mean of Class Covariances and Its Application to Speech Recognition

IEICE TRANS. INF. & SYST., VOL.E91–D, NO.3 MARCH 2008 478 PAPER Special Section on Robust Speech Processing in Realistic Environments Linear Discriminant Analysis Using a Generalized Mean of Class Covariances and Its Application to Speech Recognition Makoto SAKAI†,††a), Norihide KITAOKA††b), Members, and Seiichi NAKAGAWA†††c), Fellow SUMMARY To precisely model the time dependency of features is deal with unequal covariances because the maximum likeli- one of the important issues for speech recognition. Segmental unit input hood estimation was used to estimate parameters for differ- HMM with a dimensionality reduction method has been widely used to ent Gaussians with unequal covariances [9]. Heteroscedas- address this issue. Linear discriminant analysis (LDA) and heteroscedas- tic extensions, e.g., heteroscedastic linear discriminant analysis (HLDA) or tic discriminant analysis (HDA) was proposed as another heteroscedastic discriminant analysis (HDA), are popular approaches to re- objective function which employed individual weighted duce dimensionality. However, it is difficult to find one particular criterion contributions of the classes [10]. The effectiveness of these suitable for any kind of data set in carrying out dimensionality reduction methods for some data sets has been experimentally demon- while preserving discriminative information. In this paper, we propose a ffi new framework which we call power linear discriminant analysis (PLDA). strated. However, it is di cult to find one particular crite- PLDA can be used to describe various criteria including LDA, HLDA, and rion suitable for any kind of data set. HDA with one control parameter. In addition, we provide an efficient selec- In this paper we show that these three methods have tion method using a control parameter without training HMMs nor testing a strong mutual relationship, and provide a new interpreta- recognition performance on a development data set. -

Unsupervised Machine Learning for Pathological Radar Clutter Clustering: the P-Mean-Shift Algorithm Yann Cabanes, Frédéric Barbaresco, Marc Arnaudon, Jérémie Bigot

Unsupervised Machine Learning for Pathological Radar Clutter Clustering: the P-Mean-Shift Algorithm Yann Cabanes, Frédéric Barbaresco, Marc Arnaudon, Jérémie Bigot To cite this version: Yann Cabanes, Frédéric Barbaresco, Marc Arnaudon, Jérémie Bigot. Unsupervised Machine Learning for Pathological Radar Clutter Clustering: the P-Mean-Shift Algorithm. C&ESAR 2019, Nov 2019, Rennes, France. hal-02875430 HAL Id: hal-02875430 https://hal.archives-ouvertes.fr/hal-02875430 Submitted on 19 Jun 2020 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Unsupervised Machine Learning for Pathological Radar Clutter Clustering: the P-Mean-Shift Algorithm Yann Cabanes1;2, Fred´ eric´ Barbaresco1, Marc Arnaudon2, and Jer´ emie´ Bigot2 1 Thales LAS, Advanced Radar Concepts, Limours, FRANCE [email protected]; [email protected] 2 Institut de Mathematiques´ de Bordeaux, Bordeaux, FRANCE [email protected]; [email protected] Abstract. This paper deals with unsupervised radar clutter clustering to char- acterize pathological clutter based on their Doppler fluctuations. Operationally, being able to recognize pathological clutter environments may help to tune radar parameters to regulate the false alarm rate. This request will be more important for new generation radars that will be more mobile and should process data on the move. -

On the Theory of Cyclostationary Signals

See discussions, stats, and author profiles for this publication at: https://www.researchgate.net/publication/35160200 On the Theory of Cyclostationary Signals Article in Signal Processing · October 1989 DOI: 10.1016/0165-1684(89)90055-8 · Source: OAI CITATIONS READS 51 623 1 author: William A Brown 15 PUBLICATIONS 802 CITATIONS SEE PROFILE All content following this page was uploaded by William A Brown on 23 May 2018. The user has requested enhancement of the downloaded file. On the Theory of Cycl ostati on ary S i g n als By William Al exander Brown lll Department of Electrical and Computer Engineering University of California, Davis September 1987 On the Theory of Cyclostationary Signals By Williano Alexander Brown III B.s. (california state university, chico) tsz+ M.S. (lllinois lnstitute of Technology, Chicago) toz s DISSERTATION Submitted in partial satisfaction of the requirements for the degree of DOCTOR OF PHILOSOPFTY ln Engineerin g in the Graduate Division of the UNMERS ITY OII C,\IIFOII\L\ DA\;IS Corn rnittee in Chargc Dcposited in rhe University Library_ September 1987 The pagc numberu of thil aiogle apaced verrion do not corrcspond to pagc numbert of thc originel diarcrtetion. Acknowledgement I wish to thank Professor William A. Gardner for suggesting this fascinating subject of research and for his guidance and criticism during the writing of this dissertation. On The Theory of Cyclostationary Signals William A. Brown Cyclostationary signals are time-series whose statistical (average) behavior varies periodically with time. Closely related are almost cyclostationory (AC) signals with sta- tistical behavior characterized by almost periodic functions of time, i.e., trigonometric series with possibly non-harmonically related frequencies. -

Online Discrimination of Nonlinear Dynamics with Switching Differential Equations

Online Discrimination of Nonlinear Dynamics with Switching Differential Equations Sebastian Bitzer, Izzet B. Yildiz and Stefan J. Kiebel Max Planck Institute for Human Cognitive and Brain Sciences, Leipzig fbitzer,yildiz,[email protected] Abstract How to recognise whether an observed person walks or runs? We consider a dy- namic environment where observations (e.g. the posture of a person) are caused by different dynamic processes (walking or running) which are active one at a time and which may transition from one to another at any time. For this setup, switch- ing dynamic models have been suggested previously, mostly, for linear and non- linear dynamics in discrete time. Motivated by basic principles of computations in the brain (dynamic, internal models) we suggest a model for switching non- linear differential equations. The switching process in the model is implemented by a Hopfield network and we use parametric dynamic movement primitives to represent arbitrary rhythmic motions. The model generates observed dynamics by linearly interpolating the primitives weighted by the switching variables and it is constructed such that standard filtering algorithms can be applied. In two experi- ments with synthetic planar motion and a human motion capture data set we show that inference with the unscented Kalman filter can successfully discriminate sev- eral dynamic processes online. 1 Introduction Humans are extremely accurate when discriminating rapidly between different dynamic processes occurring in their environment. For example, for us it is a simple task to recognise whether an observed person is walking or running and we can use subtle cues in the structural and dynamic pattern of an observed movement to identify emotional state, gender and intent of a person.