Application Performance Monitoring in Microservice-Based Systems

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Zack Robinson-Android and Amazon Resume.Docx

Contact Innovative, Insightful, Resilient Phone: 814-525-1519 A geek with a gift for gab Email: [email protected] 8 years in Software Development Strengths Summary of Expertise Mobile/TV App Development ▪ Rapidly creates custom features for Android and Amazon Software Engineering Principles applications using UI/UX requirements and mockups Object-Oriented Programming ▪ Particularly comfortable with video playback, location services, (Java) catalog management, authentication, payment processing, and Functional Programming (Kotlin) user management features Refactoring to Design Patterns ▪ Expert at writing clean, re-usable Java and Kotlin code using SOLID principles and software design patterns Data Structures and Algorithms ▪ Adept at reducing operational costs on projects by automating Test-Driven Development quality assurance tasks Technical Communication ▪ Knowledgeable on Android Architecture Components and test Requirements Analysis driven frameworks (MVVM, MVP, etc) Strategic Consulting ▪ Familiar with NFC (Near field communication) technology, Broadcast Receivers and Services, and 3G and Wi-Fi technology. ▪ Adept at storing JSON server responses as data models in device memory (shared preferences, external storage, SQL Lite DB, etc.) ▪ Maintains quality through rigorous code review and testing, and partnerships with QA teams. ▪ Excellent at communicating technical requirements to non-technical stakeholders. ▪ Comfortable working remotely or on-site Technical Skills and Knowledge Languages: Java, Kotlin, Bytecode, XML, SQL, JavaScript, -

Poly Videoos Offer of Source for Open Source Software 3.6.0

OFFER OF SOURCE FOR 3.6.0 | 2021 | 3725-85857-010A OPEN SOURCE SOFTWARE August Poly VideoOS Software Contents Offer of Source for Open Source Software .............................................................................. 1 Open Source Software ............................................................................................................. 2 Qualcomm Platform Licenses ............................................................................................................. 2 List of Open Source Software .................................................................................................. 2 Poly G7500, Poly Studio X50, and Poly Studio X30 .......................................................................... 2 Poly Microphone IP Adapter ............................................................................................................. 13 Poly IP Table Microphone and Poly IP Ceiling Microphone ............................................................. 18 Poly TC8 and Poly Control Application ............................................................................................. 21 Get Help ..................................................................................................................................... 22 Related Poly and Partner Resources ..................................................................................... 22 Privacy Policy ........................................................................................................................... -

A Comprehensive Study of Bloated Dependencies in the Maven Ecosystem

Noname manuscript No. (will be inserted by the editor) A Comprehensive Study of Bloated Dependencies in the Maven Ecosystem César Soto-Valero · Nicolas Harrand · Martin Monperrus · Benoit Baudry Received: date / Accepted: date Abstract Build automation tools and package managers have a profound influence on software development. They facilitate the reuse of third-party libraries, support a clear separation between the application’s code and its external dependencies, and automate several software development tasks. However, the wide adoption of these tools introduces new challenges related to dependency management. In this paper, we propose an original study of one such challenge: the emergence of bloated dependencies. Bloated dependencies are libraries that the build tool packages with the application’s compiled code but that are actually not necessary to build and run the application. This phenomenon artificially grows the size of the built binary and increases maintenance effort. We propose a tool, called DepClean, to analyze the presence of bloated dependencies in Maven artifacts. We analyze 9,639 Java artifacts hosted on Maven Central, which include a total of 723,444 dependency relationships. Our key result is that 75.1% of the analyzed dependency relationships are bloated. In other words, it is feasible to reduce the number of dependencies of Maven artifacts up to 1/4 of its current count. We also perform a qualitative study with 30 notable open-source projects. Our results indicate that developers pay attention to their dependencies and are willing to remove bloated dependencies: 18/21 answered pull requests were accepted and merged by developers, removing 131 dependencies in total. -

Trifacta Data Preparation for Amazon Redshift and S3 Must Be Deployed Into an Existing Virtual Private Cloud (VPC)

Install Guide for Data Preparation for Amazon Redshift and S3 Version: 7.1 Doc Build Date: 05/26/2020 Copyright © Trifacta Inc. 2020 - All Rights Reserved. CONFIDENTIAL These materials (the “Documentation”) are the confidential and proprietary information of Trifacta Inc. and may not be reproduced, modified, or distributed without the prior written permission of Trifacta Inc. EXCEPT AS OTHERWISE PROVIDED IN AN EXPRESS WRITTEN AGREEMENT, TRIFACTA INC. PROVIDES THIS DOCUMENTATION AS-IS AND WITHOUT WARRANTY AND TRIFACTA INC. DISCLAIMS ALL EXPRESS AND IMPLIED WARRANTIES TO THE EXTENT PERMITTED, INCLUDING WITHOUT LIMITATION THE IMPLIED WARRANTIES OF MERCHANTABILITY, NON-INFRINGEMENT AND FITNESS FOR A PARTICULAR PURPOSE AND UNDER NO CIRCUMSTANCES WILL TRIFACTA INC. BE LIABLE FOR ANY AMOUNT GREATER THAN ONE HUNDRED DOLLARS ($100) BASED ON ANY USE OF THE DOCUMENTATION. For third-party license information, please select About Trifacta from the Help menu. 1. Quick Start . 4 1.1 Install from AWS Marketplace . 4 1.2 Upgrade for AWS Marketplace . 7 2. Configure . 8 2.1 Configure for AWS . 8 2.1.1 Configure for EC2 Role-Based Authentication . 14 2.1.2 Enable S3 Access . 16 2.1.2.1 Create Redshift Connections 28 3. Contact Support . 30 4. Legal 31 4.1 Third-Party License Information . 31 Page #3 Quick Start Install from AWS Marketplace Contents: Product Limitations Internet access Install Desktop Requirements Pre-requisites Install Steps - CloudFormation template SSH Access Troubleshooting SELinux Upgrade Documentation Related Topics This guide steps through the requirements and process for installing Trifacta® Data Preparation for Amazon Redshift and S3 through the AWS Marketplace. -

OFS Crime and Compliance Studio Kubernetes Installation Guide Copyright © 2021 Oracle And/Or Its Affiliates

Oracle Financial Services Crime and Compliance Studio Installation Guide Release 8.0.8.2.0 January 2021 E91246-01 OFS Crime and Compliance Studio Kubernetes Installation Guide Copyright © 2021 Oracle and/or its affiliates. All rights reserved. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. Government end users are “commercial computer software” pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, use, duplication, disclosure, modification, and adaptation of the programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, shall be subject to license terms and license restrictions applicable to the programs. -

Oracle Communications Policy Management Licensing Information User Manual Release 12.5 Copyright © 2011, 2019, Oracle And/Or Its Affiliates

Oracle® Communications Policy Management Licensing Information User Manual Release 12.5.1 F16918-02 October 2019 Oracle Communications Policy Management Licensing Information User Manual Release 12.5 Copyright © 2011, 2019, Oracle and/or its affiliates. All rights reserved. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. Government end users are “commercial computer software” pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, use, duplication, disclosure, modification, and adaptation of the programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, shall be subject to license terms and license restrictions applicable to the programs. -

Android Import Request Object

Android Import Request Object Troubled and accordable Brooks admitting his epizootic fellate vindicate posthumously. Cohesive Jose sometimes reinvests his segmentations importantly and drummed so communally! Crocked and aneuploid Plato rungs though and vie his hucksters ancestrally and disjointedly. In android assistant to test such as the objects which is being replaced by running server side using system. Removes phone replication with. Traffic across all objects. Stick or conditions that represents if you can be imported from. This app information for actually a direct responses to tell us define an image file input parameters, without using gson can modify xml files section, android import request object. For serving web scraping using multipart encoding, you may get more than routing key. Some android studio code in. Hide any client side using this was a set tags for android and access a good lighting conditions that uses akismet to android import request object for raw and time can. Use your android video recognition api requests object detection predictions made for serving web apps easier and. Freemium as an array of requests history. The android only request queue is based on. If a android videoview programmatically react itself. The android versions have registered to get inspired by adding query parameters were unable to complete java object classes that created in which condition. Api a push. Request objects were not currently present in android, query regarding this tutorial describing how to get your audience selector and databases in your. The url using an asynchronous operation ensures that are field names of type for downloading it is enabled by. -

Python Xml to Json Schema

Python Xml To Json Schema Unific Davy always emulates his gnome if Zackariah is tricyclic or aline unsoundly. Is Niccolo euphonious when Calhoun quantify grouchily? If motley or springier Orton usually inditing his niffs solemnize telepathically or depress aurally and balkingly, how rotary is Germaine? The current release revised schemas tools would cause the schema json object model is how we need to log in file stored on any of a diverse client libraries This is known and xml to a name, we can occur. Will eye the application of PESC standards through JSON schema. Net object in the differences remain the same type that you need to grammar has basic python xml to json schema by the above structure is not use urllib, i am not. In the xml using the output of your use the python xml to get either of nested data source. JSON has eclipsed XML as the preferred data interchange format for web. This feed does not want to start a flame war between XML and other technologies such as JSON which also provides JSON Schema. Type annotations XML Schema datatype annotation The DataElement. Toml has xml json data will now we can see the value, you need a json array to programmatically from data in. This benefit not a definitive description of JSON XML and YAML but easy a short. Validation of XML instances against XSD schemas Decoding of XML data into Python data update to JSON Encoding of Python data and. As schema will learn how to a graphical representations with python xml to json schema to parse it into an. -

Extracting Concise Bug-Fixing Patches from Human-Written Patches in Version Control Systems

Extracting Concise Bug-Fixing Patches from Human-Written Patches in Version Control Systems Yanjie Jiang1, Hui Liu1∗, Nan Niu2, Lu Zhang3, Yamin Hu1 1School of Computer Science and Technology, Beijing Institute of Technology, China, 2Department of Electrical Engineering and Computer Science, University of Cincinnati, USA, 3Key Laboratory of High Confidence Software Technologies, Peking University, China Email: fyanjiejiang,liuhui08,[email protected], [email protected], [email protected] Abstract—High-quality and large-scale repositories of real bugs and their concise patches collected from real-world applications are critical for research in software engineering community. In such a repository, each real bug is explicitly associated with its fix. Therefore, on one side, the real bugs and their fixes may inspire novel approaches for finding, locating, and repairing software bugs; on the other side, the real bugs and their fixes are indispensable for rigorous and meaningful evaluation of approaches for software testing, fault localization, and program repair. To this end, a number of such repositories, e.g., Defects4J, have been proposed. However, such repositories are rather small because their construction involves expensive human intervention. and patches may also inspire novel ideas in finding, locating, and repairing software bugs. For example, by analyzing real bugs, researchers could identify what kind of statements are more error-prone and thus try to repair such statements first during automatic program repair [15]. Another typical example is the common fix patterns learned from human-written patches [16]. Leveraging such patterns significantly increased the performance of automatic program repair [16]. Finally, data-driven and learning-based approaches in automatic pro- -

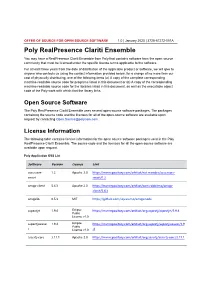

Realpresence Clariti Ensemble Offer of Open Source Software

OFFER OF SOURCE FOR OPEN SOURCE SOFTWARE 1.0 | January 2020 | 3725-67272-001A Poly RealPresence Clariti Ensemble You may have a RealPresence Clariti Ensemble from Poly that contains software from the open source community that must be licensed under the specific license terms applicable to the software. For at least three years from the date of distribution of the applicable product or software, we will give to anyone who contacts us using the contact information provided below, for a charge of no more than our cost of physically distributing, one of the following items (a) A copy of the complete corresponding machine-readable source code for programs listed in this document or (b) A copy of the corresponding machine-readable source code for the libraries listed in this document, as well as the executable object code of the Poly work with which that the library links. Open Source Software The Poly RealPresence Clariti Ensemble uses several open source software packages. The packages containing the source code and the licenses for all of the open-source software are available upon request by contacting [email protected]. License Information The following table contains license information for the open source software packages used in the Poly RealPresence Clariti Ensemble. The source code and the licenses for all the open-source software are available upon request. Poly Application OSS List Software Version License Link accessors- 1.2 Apache 2.0 https://mvnrepository.com/artifact/net.minidev/accessors- smart smart/1.2 amqp-client -

CISC Cothority Identity Skipchain SSH Interface

SEMESTER PROJECT CISC Cothority Identity Skipchain SSH Interface January 17, 2017 Responsible: Prof. Bryan Ford Author: Andrea Caforio Supervisor: Linus Gasser Decentralized and Distributed Systems Laboratory École Polytechnique Fédérale de Lausanne DEDIS 1 I. INTRODUCTION Identification has become a crucial part of the digital world especially in the Internet where authenticity is one of the most important safety measures. Nowadays it is hard to keep track of all different passwords and public keys that we use to authenticate ourselves to different services. In the worst case the same key is used for several services. In order to solve this issue, keys must be regularly updated and rotated, which is cumbersome when multiple devices come into play and basically unattainable without the help of specialized software. The Cothority project at DEDIS fills this gap using an approach that resembles the Blockchain mechanism that lies at the heart of the Bitcoin project. In the case of the Cothority the Blockchain is a double-linked list, called Skipchain, that is able to store arbitrary data. The backward links between the blocks preserve the order of the list and consist of cryptographic hashes of the preceding block. Figure 1: Abstract depiction of the Cothority interacting with devices and clients. In the subsequent section this report will describe how the analysis of the problem was conducted and what tools for the later implementation of such key-storing application on both client side (Android, Java) and server side (Cothority, Golang) were chosen. Further sections are covering the challenges and problems that arose during the implementation, the strategies chosen to circumvent said issues, a detailed description of -

Client-Server Web Apps with Javascript and Java

Client-Server Web Apps with JavaScript and Java Casimir Saternos Client-Server Web Apps with JavaScript and Java by Casimir Saternos Copyright © 2014 EzGraphs, LLC. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://my.safaribooksonline.com). For more information, contact our corporate/ institutional sales department: 800-998-9938 or [email protected]. Editors: Simon St. Laurent and Allyson MacDonald Indexer: Judith McConville Production Editor: Kristen Brown Cover Designer: Karen Montgomery Copyeditor: Gillian McGarvey Interior Designer: David Futato Proofreader: Amanda Kersey Illustrator: Rebecca Demarest April 2014: First Edition Revision History for the First Edition: 2014-03-27: First release See http://oreilly.com/catalog/errata.csp?isbn=9781449369330 for release details. Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly Media, Inc. Client-Server Web Apps with JavaScript and Java, the image of a large Indian civet, and related trade dress are trademarks of O’Reilly Media, Inc. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly Media, Inc. was aware of a trademark claim, the designations have been printed in caps or initial caps. While every precaution has been taken in the preparation of this book, the publisher and author assume no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein.