Data Mining with Rattle and R: Survival Guide

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Release Notes for Debian 11 (Bullseye), 32-Bit PC

Release Notes for Debian 11 (bullseye), 32-bit PC
The Debian Documentation Project (https://www.debian.org/doc/)
September 27, 2021

This document is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License, version 2, as published by the Free Software Foundation. This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details. You should have received a copy of the GNU General Public License along with this program; if not, write to the Free Software Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA. The license text can also be found at https://www.gnu.org/licenses/gpl-2.0.html and /usr/share/common-licenses/GPL-2 on Debian systems.

Contents
1 Introduction
1.1 Reporting bugs on this document
1.2 Contributing upgrade reports
1.3 Sources for this document
2 What’s new in Debian 11
2.1 Supported architectures
2.2 What’s new in the distribution?
2.2.1 Desktops and well known packages
2.2.2 Driverless scanning and printing
2.2.2.1 CUPS and driverless printing
2.2.2.2 SANE and driverless scanning
2.2.3 New generic open command
2.2.4 Control groups v2
2.2.5 Persistent systemd journal

Rkward: a Comprehensive Graphical User Interface and Integrated Development Environment for Statistical Analysis with R

Journal of Statistical Software, June 2012, Volume 49, Issue 9. http://www.jstatsoft.org/

RKWard: A Comprehensive Graphical User Interface and Integrated Development Environment for Statistical Analysis with R

Stefan Rödiger (Charité-Universitätsmedizin Berlin), Thomas Friedrichsmeier (Ruhr-University Bochum), Prasenjit Kapat (The Ohio State University), Meik Michalke (Heinrich Heine University Düsseldorf)

Abstract: R is a free open-source implementation of the S statistical computing language and programming environment. The current status of R is a command line driven interface with no advanced cross-platform graphical user interface (GUI), but it includes tools for building such. Over the past years, proprietary and non-proprietary GUI solutions have emerged, based on internal or external tool kits, with different scopes and technological concepts. For example, Rgui.exe and Rgui.app have become the de facto GUI on the Microsoft Windows and Mac OS X platforms, respectively, for most users. In this paper we discuss RKWard which aims to be both a comprehensive GUI and an integrated development environment for R. RKWard is based on the KDE software libraries. Statistical procedures and plots are implemented using an extendable plugin architecture based on ECMAScript (JavaScript), R, and XML. RKWard provides an excellent tool to manage different types of data objects; even allowing for seamless editing of certain types. The objective of RKWard is to provide a portable and extensible R interface for both basic and advanced statistical and graphical analysis, while not compromising on flexibility and modularity of the R programming environment itself.

Keywords: GUI, integrated development environment, plugin, R.

A Word from Our 2006 Section Chairs

VOLUME 17, NO 1, JUNE 2006. A joint newsletter of the Statistical Computing & Statistical Graphics Sections of the American Statistical Association.

A Word from our 2006 Section Chairs

PAUL MURRELL, GRAPHICS

I would like to begin by highlighting a couple of interesting recent developments in the area of Statistical Graphics. There has been a lot of activity on the GGobi project lately, with an updated web site, new versions, and improved links to R. I encourage you to (re)visit www.ggobi.org and see what they’ve been up to. The third volume of the Handbook of Computational Statistics, which is focused on Data Visualization, is scheduled for publication at the end of this year and there will be a workshop as a satellite of Compstat 2006. This important volume will contain over 30 contributions and will provide a comprehensive overview of all areas of data visualization. Information about this project is available at gap.stat.sinica.edu.tw/HBCSC.

STEPHAN R. SAIN, COMPUTING

When Carey Priebe asked me to run for one of the section offices a couple of years ago, I wasn’t exactly sure what I was getting into. Now that I have been chair for a couple of months, I’m still not totally sure what I’ve gotten into. The one thing I do know, though, is that I’m very happy to be involved in the section. There are a lot of very interesting things going on and, as always, a lot of opportunity for people who have an interest in statistical computing. Continues on Page 2.

Debian Basic Packaging Workshop

Debian Basic Packaging Workshop
Per Andersson <avtobiff@gmail.com>
http://sigsucc.se/talks/debian-basic/
FOSS-STHLM, 2010

Outline
Why Package for Debian How Does Software Enter Debian? Debian Infrastructure Debian Package Wedge Package Into Debian Maintaining, or, Keeping Package in Debian Tools References and Resources Tools and Practice

Why Package for Debian
• Help maintain a very popular GNU distribution
• GNU and kernels Linux, HURD, kFreeBSD...
• 12 arches, 25 000+ packages
• Debian is free software with a social contract
• Large user base, user and developer communities
• Goal: The Universal Operating System
• ...i.e. WORLD DOMINATION
• Robust package management system
• dpkg
• APT
• Contribute because it is a Good Thing™
• Also, very fun and rewarding

How Does Software Enter Debian?
• Upstream source
• Voluntary work
• Request For Package (RFP)
• You want someone else to do the job
• Intend To Package (ITP)
• You will do the job
• Checking existing work
• Work Needing and Prospective Packages (WNPP)

Debian Infrastructure
• dpkg, debs
• APT
• apt-get
• aptitude
• synaptic
• wajig
• ...
• Repository
• dist: Directory containing "distributions", canonical entry point (meta information)
• pool: Physical location for all packages of Debian (pre-)releases

Debian Package
• Source Package
• Upstream source with debian/ dir or patched with diff.gz
• debian/
• control
• copyright
• changelog
• rules
• Package related files
• debian/bin-pkg-name
• Binary Package
• deb or udeb
• Package name listed in control field Package
• ar(1) archive with

The Rattle Package September 30, 2007

The rattle Package
September 30, 2007

Type: Package
Title: A graphical user interface for data mining in R using GTK
Version: 2.2.64
Date: 2007-09-29
Author: Graham Williams <[email protected]>
Maintainer: Graham Williams <[email protected]>
Depends: R (>= 2.2.0)
Suggests: RGtk2, ada, amap, arules, bitops, cairoDevice, cba, combinat, doBy, e1071, ellipse, fEcofin, fCalendar, fBasics, foreign, fpc, gdata, gtools, gplots, Hmisc, kernlab, MASS, Matrix, mice, network, pmml, randomForest, rggobi, ROCR, RODBC, rpart, RSvgDevice, XML
Description: Rattle provides a Gnome (RGtk2) based interface to R functionality for data mining. The aim is to provide a simple and intuitive interface that allows a user to quickly load data from a CSV file (or via ODBC), transform and explore the data, and build and evaluate models, and export models as PMML (predictive modelling markup language). All of this with knowing little about R. All R commands are logged and available for the user, as a tool to then begin interacting directly with R itself, if so desired. Rattle also exports a number of utility functions and the graphical user interface does not need to be run to deploy these.
License: GPL version 2 or newer
URL: http://rattle.togaware.com/

R topics documented: audit, calcInitialDigitDistr, calculateAUC, centers.hclust, drawTreeNodes, drawTreesAda, evaluateRisk, genPlotTitleCmd, rattle_gui, listRPartRules, listTreesAda, plotBenfordsLaw, plotNetwork, plotOptimalLine, plotRisk, printRandomForests, randomForest2Rules, rattle, savePlotToFile, treeset.randomForest, Index

audit: Sample dataset for illustration Rattle functionality.

Uros2018.Pdf

Use of R in Official Statistics, 6th International Conference 2018

Welcome

The global community of R users is growing, and the number of National and International Statistical Offices that are adopting R is growing as well. About five years ago, when this conference was organized as an international conference for the first time in Romania, we felt a bit like outlaws using Free and Open Source Software (FOSS) in an area where commercial packages rule the land. How times have changed: in the meantime FOSS, and in particular R, is considered a driving force of innovation in academia, industry and government. The popularity of R is demonstrated by the hundreds of local R user groups, the thousands of R packages, and the RConsortium. The current conference, at Statistics Netherlands, marks the first occasion outside of the place where it was conceived: Romania. We are therefore especially pleased that our keynote speakers have roots in both countries. Alina Matei is a professor of statistics in Switzerland with Romanian roots. She will talk about optimal sample coordination using R. An important topic in times where the reduction of response burden and increasing nonresponse rates force us to use smaller, more complex sampling methods. Not many R users are aware that there is a ‘touch of Dutch’ in R. Since 2017, Jeroen Ooms (UC Berkeley) is the maintainer of both Rtools and R for Windows. He will tell us about what it takes to compile, release, and modernize a system on which more than 12,500 R packages and millions of users rely every day.

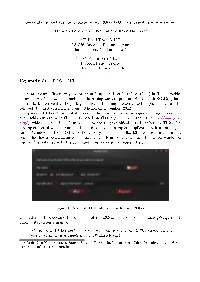

Statcharrms R Version Installation Guide

StatCharrms R Version Installation Guide, 2014-07-14

Written and Programmed By: Joe Swintek, BTS
Based off StatCharrms SAS version developed by: Dr. John Green, DuPont Applied Statistics Group, Stine-Haskell Research Center
Additional Testing By: Kevin Flynn, USEPA; Jon Haselman, USEPA
Funded By: USEPA Under Contract EP-D-13-052

Installation

StatCharrms is a graphical user interface front end for R, designed for ease of operation, that performs the recommended statistical procedures used in the Medaka Extended One Generation Test (MEOGRT) and Larval Amphibian Growth and Development Assay (LAGDA). The statistical procedures implemented within StatCharrms are: the Rao-Scott adjusted Cochran-Armitage trend test by slices (RSCABS), a repeated measures ANOVA using time and treatment as fixed effects, the Jonckheere-Terpstra trend test, the Dunnett test, the Kruskal-Wallis test, Dunn's test, one-way ANOVA, weighted one-way ANOVA, mixed effect ANOVA for imbalanced replicate structures, and a mixed effect Cox proportional model for imbalanced replicate structures.

StatCharrms is implemented as an R workspace preloaded with the required functions. To start StatCharrms, double-click on the R icon labeled StatCharrms-V##.RData. The installation of the required packages can then begin by typing Install.StatCharrms() into the R console and pressing Enter. R is case sensitive, so you will need to type the command exactly as it appears above. Figure 1 shows what it should look like. Executing the installation command will, by default, create a folder on the C drive called “RLib” that will contain the libraries needed for StatCharrms to run.

Figure 1:

Next, a window asking you to select a CRAN (Comprehensive R Archive Network) mirror will pop up.

Sexy-Rgtk: a Package for Programming Rgtk2 GUI in a User-Friendly Manner

sexy-rgtk: a package for programming RGtk2 GUI in a user-friendly manner

Damien Leroux (a) and Nathalie Villa-Vialaneix (a, b)
(a) INRA, UR875, MIAT, F-31326 Castanet Tolosan, France, [email protected]
(b) SAMM, Université Paris 1, F-75634 Paris, France, [email protected]

Keywords: Gtk2, RGtk2, GUI

There are many different ways to program Graphical User Interfaces (GUI) in R. [1] provides an overview of the available methods, describing ways to program R GUI with RGtk2, qtbase and tcltk. More recently, the package shiny, for building interactive web applications, was also released (the first version was published in December 2012). The package RGtk2 [2] is probably one of the most complete packages to program complex and highly customizable GUI. It is based on GTK2 (the GIMP Toolkit, http://www.gtk.org/), which is a multi-platform toolkit for creating Graphical User Interfaces. GTK2 offers a complete set of widgets and can be used to develop complete application suites working on Linux, Windows and Mac OS X. Although very flexible, each RGtk2 interface results in a long script that has a counterintuitive syntax for most R users. For instance, the simple window of Figure 1 is obtained with the command lines provided in Figure 2 (left).

Figure 1: A simple GUI interface made with RGtk2.

One attempt to overcome the difficulty of the RGtk2 syntax is the package gWidgets but, quoting its reference manual: The excellent RGtk2 package opens up the full power of the GTK2 toolkit, only a fraction of which is available though gWidgetsRGtk2.

Deducer: a Data Analysis GUI for R

JSS Journal of Statistical Software June 2012, Volume 49, Issue 8. http://www.jstatsoft.org/ Deducer: A Data Analysis GUI for R Ian Fellows University of California, Los Angeles Abstract While R has proven itself to be a powerful and flexible tool for data exploration and analysis, it lacks the ease of use present in other software such as SPSS and Minitab. An easy to use graphical user interface (GUI) can help new users accomplish tasks that would otherwise be out of their reach, and improves the efficiency of expert users by replacing fifty key strokes with five mouse clicks. With this in mind, Deducer presents dialogs that are understandable for the beginner, and yet contain all (or most) of the options that an experienced statistician, performing the same task, would want. An Excel-like spreadsheet is included for easy data viewing and editing. Deducer is based on Java's Swing GUI library and can be used on any common operating system. The GUI is independent of the specific R console and can easily be used by calling a text-based menu system. Graphical menus are provided for the JGR console and the Windows R GUI. Keywords: GUI, R. 1. Introduction R (R Development Core Team 2012) is a powerful statistical programming language that places the latest statistical techniques at one's fingertips through thousands of add-on packages available on the Comprehensive R Archive Network (CRAN) download servers. The price for all of this power is complexity. Because R analyses must be called as text commands, the user is required to find out the name of the function that will accomplish their task, and then remember that name along with the names of the variables to feed it, and its argument options. -

Pipenightdreams Osgcal-Doc Mumudvb Mpg123-Alsa Tbb

pipenightdreams osgcal-doc mumudvb mpg123-alsa tbb-examples libgammu4-dbg gcc-4.1-doc snort-rules-default davical cutmp3 libevolution5.0-cil aspell-am python-gobject-doc openoffice.org-l10n-mn libc6-xen xserver-xorg trophy-data t38modem pioneers-console libnb-platform10-java libgtkglext1-ruby libboost-wave1.39-dev drgenius bfbtester libchromexvmcpro1 isdnutils-xtools ubuntuone-client openoffice.org2-math openoffice.org-l10n-lt lsb-cxx-ia32 kdeartwork-emoticons-kde4 wmpuzzle trafshow python-plplot lx-gdb link-monitor-applet libscm-dev liblog-agent-logger-perl libccrtp-doc libclass-throwable-perl kde-i18n-csb jack-jconv hamradio-menus coinor-libvol-doc msx-emulator bitbake nabi language-pack-gnome-zh libpaperg popularity-contest xracer-tools xfont-nexus opendrim-lmp-baseserver libvorbisfile-ruby liblinebreak-doc libgfcui-2.0-0c2a-dbg libblacs-mpi-dev dict-freedict-spa-eng blender-ogrexml aspell-da x11-apps openoffice.org-l10n-lv openoffice.org-l10n-nl pnmtopng libodbcinstq1 libhsqldb-java-doc libmono-addins-gui0.2-cil sg3-utils linux-backports-modules-alsa-2.6.31-19-generic yorick-yeti-gsl python-pymssql plasma-widget-cpuload mcpp gpsim-lcd cl-csv libhtml-clean-perl asterisk-dbg apt-dater-dbg libgnome-mag1-dev language-pack-gnome-yo python-crypto svn-autoreleasedeb sugar-terminal-activity mii-diag maria-doc libplexus-component-api-java-doc libhugs-hgl-bundled libchipcard-libgwenhywfar47-plugins libghc6-random-dev freefem3d ezmlm cakephp-scripts aspell-ar ara-byte not+sparc openoffice.org-l10n-nn linux-backports-modules-karmic-generic-pae -

Package 'Rattle'

Package ‘rattle’
September 5, 2017

Type: Package
Title: Graphical User Interface for Data Science in R
Version: 5.1.0
Date: 2017-09-04
Depends: R (>= 2.13.0)
Imports: stats, utils, ggplot2, grDevices, graphics, magrittr, methods, RGtk2, stringi, stringr, tidyr, dplyr, cairoDevice, XML, rpart.plot
Suggests: pmml (>= 1.2.13), bitops, colorspace, ada, amap, arules, arulesViz, biclust, cba, cluster, corrplot, descr, doBy, e1071, ellipse, fBasics, foreign, fpc, gdata, ggdendro, ggraptR, gplots, grid, gridExtra, gtools, gWidgetsRGtk2, hmeasure, Hmisc, kernlab, Matrix, mice, nnet, odfWeave, party, playwith, plyr, psych, randomForest, rattle.data, RColorBrewer, readxl, reshape, rggobi, ROCR, RODBC, rpart, SnowballC, survival, timeDate, tm, verification, wskm, RGtk2Extras, xgboost
Description: The R Analytic Tool To Learn Easily (Rattle) provides a Gnome (RGtk2) based interface to R functionality for data science. The aim is to provide a simple and intuitive interface that allows a user to quickly load data from a CSV file (or via ODBC), transform and explore the data, build and evaluate models, and export models as PMML (predictive modelling markup language) or as scores. All of this with knowing little about R. All R commands are logged and commented through the log tab. Thus they are available to the user as a script file or as an aide for the user to learn R or to copy-and-paste directly into R itself. Rattle also exports a number of utility functions and the graphical user interface, invoked as rattle(), does not need to be run to deploy these.
License

Oresat Linux Updater

OreSat Linux Updater
Ryan Medick
Apr 22, 2021

Contents
1 Glossary of Terms Used
2 OreSat Linux Updater Daemon
2.1 Daemon
3 Update Maker
3.1 Update Maker
4 Files
4.1 Update Archive
4.2 Status Archive
5 Internals
5.1 OreSat Linux Updater’s Internal
6 Indices and tables
Index

Warning: This is still a work in progress.

Chapter One: Glossary of Terms Used

D-Bus: Inter-process communication system provided by systemd. See https://www.freedesktop.org/wiki/Software/dbus/

Daemon: Long running, background process on Linux.

dpkg: The low level package manager for installing and removing packages on Debian Linux. APT is built on top of it.

OreSat: PSAS’s open source CubeSat. See https://www.oresat.org/

OreSat Linux Manager (OLM): The front end daemon for all OreSat Linux boards. It converts CANopen messages into D-Bus messages and vice versa. See https://github.com/oresat/oresat-linux-manager

OreSat Linux Updater: The common daemon found on all OreSat Linux boards that handles updating the board with update archives.

Status Archive: A tar file with two status files produced by the OreSat Linux Updater. One is a JSON list of the update archives in the OreSat Linux Updater’s cache, and the other is a copy of the dpkg status file. The Update Maker uses these to make future update archives.

Update Archive: A tar file used by the OreSat Linux Updater daemon to update the board it is running on.