Grafanalib's Documentation!

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


SQL Server 2017 on Linux Quick Start Guide

SQL Server 2017 on Linux Quick Start Guide. Contents: Who should read this guide?; Getting started with SQL Server on Linux; Why SQL Server with Linux?; Supported platforms; Architectural changes; Comparing SQL on Windows vs. Linux; SQL Server installation on Linux; Installing SQL Server packages; Configuration capabilities; Licensing; Administering and -

Latest pgwatch2 Docker Image with Built-In InfluxDB Metrics Storage DB

pgwatch2, Sep 30, 2021. Contents: 1 Introduction; 1.1 Quick start with Docker; 1.2 Typical “pull” architecture; 1.3 Typical “push” architecture; 2 Project background; 2.1 Project feedback; 3 List of main features; 4 Advanced features; 4.1 Patroni support; 4.2 Log parsing; 4.3 PgBouncer support; 4.4 Pgpool-II support; 4.5 Prometheus scraping; 4.6 AWS / Azure / GCE support; 5 Components; 5.1 The metrics gathering daemon; 5.2 Configuration store; 5.3 Metrics storage DB; 5.4 The Web UI; 5.5 Metrics representation; 5.6 Component diagram; 5.7 Component reuse; 6 Installation options; 6.1 Config DB based operation; 6.2 File based operation; 6.3 Ad-hoc mode; 6.4 Prometheus -

Grafana and MySQL Benefits and Challenges

Grafana and MySQL: Benefits and Challenges. About me: Philip Wernersbach, Software Engineer, Ingram Content Group. https://github.com/philip-wernersbach https://www.linkedin.com/in/pwernersbach • I work in Ingram Content Group’s Automated Print On Demand division • We have an automated process in which publishers (independent or corporate) request books via a website, and we automatically print, bind, and ship those books to them • This process involves lots of hardware devices and software components. The Problem: “How do we aggregate and track metrics from our hardware and software sources, and display those data points in a graph format to the end user?” → Grafana! Which data store should we use with Grafana? ▸ Out of the box, Grafana supports Elasticsearch, Graphite, InfluxDB, KairosDB, OpenTSDB ▸ We compared the options and tried InfluxDB ▸ There were several sticking points with InfluxDB, both technical and organizational, that caused us to rule it out ▸ We already have a MySQL cluster deployed, and System Administrators and Operations know how to manage it ▸ Decided to go with MySQL as a data store for Grafana. The Solution: Ingram Content’s Grafana-MySQL Integration. The Integration ▸ Written in Nim ▸ Emulates an InfluxDB server ▸ Connects to an existing MySQL server ▸ Protocol compatible with InfluxDB 0.9.3 ▸ Acts as a proxy that converts the InfluxDB protocol to the MySQL protocol and vice versa. Grafana The Integration -

Monitoring Container Environment with Prometheus and Grafana

Matti Holopainen. Monitoring Container Environment with Prometheus and Grafana. Metropolia University of Applied Sciences, Bachelor of Engineering, Information and Communication Technology, Bachelor’s Thesis, 3.5.2021. Abstract (translated from Finnish). Author: Matti Holopainen. Title: Monitoring Container Environment with Prometheus and Grafana. Number of pages: 50. Date: 3.5.2021. Degree: Bachelor of Engineering. Degree programme: Information and Communication Technology. Professional major: Software Engineering. Instructors: Nina Simola, Project Manager; Auvo Häkkinen, Principal Lecturer. The goal of the thesis was to learn how to set up a monitoring system for tracking, monitoring and analysing the resource usage of a container environment. The aim was to make the monitoring system easier to deploy. The work was based on the documentation of the software used and on lessons learned through hands-on practice. The thesis begins by reviewing the technologies used. It then covers the configuration and deployment of the monitoring system. Next, the PromQL query language is introduced, followed by a demonstration of how to set up a monitoring dashboard and alerts with e-mail notifications. The thesis closes by reviewing how the data obtained from the monitoring system is analysed and by considering how the monitoring system could be improved. Keywords: Monitoring, Container, Prometheus, Grafana, Docker. Abstract. Author: Matti Holopainen. Title: Monitoring Container Environment with Prometheus and Grafana. Number of Pages: 50 pages. Date: 3.5.2021. Degree: Bachelor of Engineering. Degree Programme: Information -

EventIndex Monitoring

BigData tools for the monitoring of the ATLAS EventIndex. Evgeny Alexandrov1, Andrei Kazymov1, Fedor Prokoshin2, on behalf of the ATLAS collaboration. 1Joint Institute for Nuclear Research, Dubna, Russia. 2Centro Científico Tecnológico de Valparaíso-CCTVal, Universidad Técnica Federico Santa María. GRID Conference at JINR, 12 September 2018. Introduction • The EventIndex is the complete catalogue of all ATLAS events, keeping the references to all files that contain a given event in any processing stage. • The ATLAS EventIndex collects event information from data both at CERN and Grid sites. • It uses the Hadoop system to store the results, and web services to access them. • Its successful operation depends on a number of different components. • Each component has completely different sets of parameters and states and requires a special approach. Monitoring Components (diagram labels): Prodsys, EIOracle, ???, Event Picking Tests, XML, ORACLE? Old Monitoring System based on Kibana. Disadvantages of Kibana: slow dashboard retrieval time: - for a two-day period: 15 seconds; - for a 7-day period: 1 minute 30 seconds; - for longer periods: it may take tens of minutes and eventually get stuck. Editing the dashboard’s page is not very comfortable. Grafana: Grafana is one of the most popular packages for visualizing monitoring data. Uses modern technologies: - the back-end is written in the Go programming language; - the front-end is written in TypeScript and uses the Angular approach. The following datasources are officially supported: Graphite, InfluxDB, MySQL, Elasticsearch, OpenTSDB, Postgres, CloudWatch, Prometheus, Microsoft SQL Server (MSSQL). InfluxDB: InfluxDB is InfluxData's open source time series database designed to handle high write and query loads.
Uses modern technologies: - it is written in Go; - it can work in cluster mode; - libraries are available for a large number of programming languages (Python, JavaScript, PHP, Haskell and others); - it has an SQL-like query language, with which you can perform various operations on time series (merging, splitting). -

Zabbix 4.2 Data Processing and More

Zabbix 4.2 data processing and more. Sponsors: Gold sponsors, Co-organizer. What is Zabbix? Zabbix is a universal Open Source enterprise level monitoring solution. We have found a good balance between giving away free software and having sustainable growth. Free Software. Services: services that save your time and money. Customers in 75+ countries. Some of our users. Zabbix: Data collection, Real-time problem detection, Visualization, Alerting & Remediation. Where are we currently? 3.0 LTS, 3.2, 3.4, 4.0 LTS. Zabbix 4.0 LTS. Data preprocessing, input: { "host": { "name": "Linux #2356", "vms": [ { "name": "vm034", "network": { "read": "0xfa673", "write": "0x45b30" } }, { "name": "vm076", "network": { "read": "0x76ab", "write": "0xff3a" } } ] } }. Step 1, JSON Path .host.vms[1].network.read: 0x76ab. Step 2, Regexp 0x(.*): 76ab. Step 3, Hex to Decimal: 30379. Step 4, KB -> bytes: 31108096. Efficiency: Zabbix server, mysql[status]. Master item: mysql[status]. Dependent items: mysql[questions], mysql[reads]. Performance, fewer user parameters, all logic in templates. Limitations: 4.0 LTS, 4.2. Not flexible -
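The preprocessing chain in the slides (JSON Path, then regular expression, then hex to decimal, then a KB to bytes multiplier) can be sketched in plain Python. This is only an illustration of the data flow, not Zabbix code: the function name `preprocess` and the constant `PAYLOAD` are hypothetical, and the multiplier of 1024 is an assumption about what "KB -> bytes" means. Converting 0x76ab directly gives 30379, which is 31108096 bytes after the multiplier, and that is what the sketch computes.

```python
import json
import re

# Sample payload with the same structure as the JSON in the slides.
PAYLOAD = """
{"host": {"name": "Linux #2356", "vms": [
  {"name": "vm034", "network": {"read": "0xfa673", "write": "0x45b30"}},
  {"name": "vm076", "network": {"read": "0x76ab", "write": "0xff3a"}}
]}}
"""


def preprocess(raw: str) -> int:
    """Hypothetical helper mirroring the four preprocessing steps."""
    doc = json.loads(raw)

    # Step 1: equivalent of JSON Path .host.vms[1].network.read
    value = doc["host"]["vms"][1]["network"]["read"]

    # Step 2: regular expression 0x(.*) keeps only the hex digits
    match = re.fullmatch(r"0x(.*)", value)
    if match is None:
        raise ValueError(f"unexpected value format: {value!r}")
    digits = match.group(1)

    # Step 3: hex to decimal
    kilobytes = int(digits, 16)

    # Step 4: KB -> bytes (assumed multiplier: 1024)
    return kilobytes * 1024


if __name__ == "__main__":
    print(preprocess(PAYLOAD))
```

Each step consumes the output of the previous one, which is the point of the slides: the raw item is collected once and the chain turns it into a numeric value.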

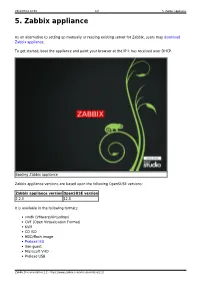

5. Zabbix Appliance

2021/07/12 12:09. 5. Zabbix appliance (Zabbix Documentation 2.2, https://www.zabbix.com/documentation/2.2/manual/appliance, last update: 2018/09/04 11:39). As an alternative to setting up manually or reusing an existing server for Zabbix, users may download the Zabbix appliance. To get started, boot the appliance and point your browser at the IP it has received over DHCP. Booting Zabbix appliance: Zabbix appliance versions are based upon the following OpenSUSE versions: Zabbix appliance version 2.2.0 is based on OpenSUSE version 12.3. It is available in the following formats: vmdk (VMware/Virtualbox); OVF (Open Virtualisation Format); KVM; CD ISO; HDD/flash image; Preload ISO; Xen guest; Microsoft VHD; Preload USB. It has Zabbix server configured and running on MySQL, as well as a frontend available. The appliance has been built using SUSE Studio. 1 Changes to SUSE configuration: There are some changes applied to the base OpenSUSE configuration. 1.1 MySQL configuration changes: Binary log is disabled; InnoDB is configured to store data for each table in a separate file. 1.2 Using a static IP address: By default the appliance uses DHCP to obtain an IP address. To specify a static IP address: Log in as root user; Open file /etc/sysconfig/network/ifcfg-eth0 in your favourite editor; Set BOOTPROTO variable to static; Set IPADDR, NETMASK and any other parameters as required for your network; Create file /etc/sysconfig/network/routes. For the default route, use default 192.168.1.1 - - (replacing with your gateway address). -
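The static IP steps above amount to writing two small text files. The following Python sketch only renders their contents as strings so they can be reviewed before being copied to /etc/sysconfig/network/ by hand. The helper names `render_ifcfg` and `render_routes`, the address 192.168.1.50 and the netmask 255.255.255.0 are hypothetical examples, and the single-quoted `KEY='value'` style is an assumption about the file format.

```python
import ipaddress


def render_ifcfg(ipaddr: str, netmask: str) -> str:
    """Hypothetical helper: contents for ifcfg-eth0 with a static address."""
    # Validate the inputs; both calls raise ValueError on malformed values.
    ipaddress.ip_address(ipaddr)
    ipaddress.ip_network(f"0.0.0.0/{netmask}")
    lines = [
        "BOOTPROTO='static'",
        f"IPADDR='{ipaddr}'",
        f"NETMASK='{netmask}'",
    ]
    return "\n".join(lines) + "\n"


def render_routes(gateway: str) -> str:
    """Hypothetical helper: contents for the routes file (default route only)."""
    ipaddress.ip_address(gateway)
    # Same shape as the line quoted in the text: default <gateway> - -
    return f"default {gateway} - -\n"


if __name__ == "__main__":
    # Example values only; replace with the addresses of your own network.
    print(render_ifcfg("192.168.1.50", "255.255.255.0"), end="")
    print(render_routes("192.168.1.1"), end="")
```

Rendering to strings instead of writing files keeps the sketch safe to run on any machine; any other parameters required for a given network would still need to be added manually.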

5 Installation from Containers Docker

2021/09/23 12:33. 5 Installation from containers. Docker: Zabbix provides Docker images for each Zabbix component as portable and self-sufficient containers to speed up deployment and update procedure. Zabbix components come with MySQL and PostgreSQL database support, Apache2 and Nginx web server support. Each combination is shipped as a separate image. Docker base images: Zabbix components are provided on Ubuntu, Alpine Linux and CentOS base images (image: version): alpine: 3.9; ubuntu: bionic; centos: latest. All images are configured to rebuild latest images if base images are updated. Zabbix appliance images are based on Alpine Linux version 3.4, all other images are based on Alpine Linux 3.9. Docker file sources: Everyone can follow Docker file changes using the Zabbix official repository on github.com. You can fork the project or make your own images based on official Docker files. Structure: All Zabbix components are available in the following Docker repositories: Zabbix appliance with MySQL database support and Nginx web-server - zabbix/zabbix-appliance; Zabbix agent - zabbix/zabbix-agent; Zabbix server with MySQL database support - zabbix/zabbix-server-mysql; Zabbix server with PostgreSQL database support - zabbix/zabbix-server-pgsql; Zabbix web-interface based on Apache2 web server with MySQL database support - zabbix/zabbix-web-apache-mysql; Zabbix web-interface based on Apache2 web server with PostgreSQL database support - zabbix/zabbix-web-apache-pgsql; Zabbix web-interface based on -

MySQL Performance Monitoring with Zabbix an Alternative

MySQL Performance Monitoring with Zabbix: An alternative to the MySQL Enterprise Monitor? by Oli Sennhauser [email protected] http://www.fromdual.com How many of you … ● … monitor their database servers? ● … monitor their (MySQL) databases? ● … monitor performance metrics? ● … all the others: Why not? Linux monitoring ● Question: What is your favourite Linux monitoring application? ● 1187 votes, April 2010 ● Manipulated? ● Lit: Linux Journal, [1] What to monitor? ● Devices / Software ● Server, Router, Switches, I/O systems etc. ● Operating System, Networks, Applications, etc. ● Incidents ● DB down, Replication stopped, Server not reachable, etc. ● Critical Events ● Disk more than n% full or less than m Gbyte free, Replication more than n seconds lagging, Data node down, 100% CPU utilization, etc. → Alert, immediate intervention, fire fighting ● Trends (includes time!) → Graphs ● How long does it take until … ● … my disk is full? ● … my Index Memory is filled up? ● When does it happen? ● Peak? Backup? ● How often does it happen? Does it happen periodically? ● Once a day? Always on Sunday night? ● How does it correlate to other information? ● I/O problems during our backup window? ● Reading the patterns! → this can help us to find the root cause of problems… How to monitor? ● Basic solutions: ● top, vmstat, iostat, mytop, innotop, SHOW GLOBAL STATUS, SHOW INNODB STATUS ● CLI!, no graphs, no long-term information, but good for ad hoc analysis! ● Graphical solutions ● Nagios, Cacti, Zabbix, … ● Typically NOT specialised in DB monitoring... Focus ● Database + Server ● Trends Not the other stuff.. -
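The trend question raised in the slides ("how long does it take until my disk is full?") can be estimated from a handful of usage samples. The sketch below fits a straight line by least squares and extrapolates to the capacity. The function name `days_until_full`, the sample values and the assumption of linear growth are all illustrative and not taken from the presentation.

```python
from typing import Optional, Sequence, Tuple


def days_until_full(
    samples: Sequence[Tuple[float, float]], capacity_gb: float
) -> Optional[float]:
    """Estimate days left until the disk is full.

    samples: (day, used_gb) pairs, ordered by day.
    Returns None when usage is flat or shrinking (no fill date exists).
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")

    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in samples)
    if var_x == 0:
        raise ValueError("samples must span more than one point in time")

    # Least-squares slope (GB per day) and intercept of the usage trend.
    slope = sum((x - mean_x) * (y - mean_y) for x, y in samples) / var_x
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x

    # Day on which the fitted line reaches the capacity, relative to the
    # most recent sample.
    full_day = (capacity_gb - intercept) / slope
    return max(0.0, full_day - samples[-1][0])


if __name__ == "__main__":
    # Hypothetical usage history: 2 GB growth per day on a 100 GB disk.
    history = [(0, 50.0), (1, 52.0), (2, 54.0), (3, 56.0)]
    print(days_until_full(history, capacity_gb=100.0))
```

A linear fit is the simplest possible model; periodic patterns such as the backup windows mentioned in the slides would need more history and a different model.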

Monitoring with InfluxDB and Grafana

Monitoring with InfluxDB and Grafana. Andrew Lahiff, STFC RAL. HEPiX 2015 Fall Workshop, BNL. Introduction. Monitoring at RAL • Like many (most?) sites, we use Ganglia • have ~89000 individual metrics • What’s wrong with Ganglia? Problems with Ganglia • Plots look very dated • Difficult & time-consuming to make custom plots • currently use long, complex, messy Perl scripts • e.g. HTCondor monitoring > 2000 lines • Ganglia UI for making customised plots is restricted & doesn’t give good results • Ganglia server has demanding host requirements • e.g. we store all rrds in a RAM disk • have problems if trying to use a VM • Doesn’t handle dynamic resources well • Occasional problems with gmond using too much memory, affecting other processes on machines • Not really suitable for Ceph monitoring. A possible alternative • InfluxDB + Grafana • InfluxDB is a time-series database • Grafana is a metrics dashboard • originally a fork of Kibana • can make plots of data from InfluxDB, Graphite, others… • Very easy to make (nice) plots • Easy to install. InfluxDB • Time series database written in Go • No external dependencies • SQL-like query language • Distributed • can be run as a single node • can be run as a cluster for redundancy & performance (not suitable for production use yet) • Data can be written in using REST, or an API (e.g. Python) • or from collectd or graphite. InfluxDB • Data organised by time series, grouped together into databases • Time series have zero to many points • Each point consists of: • time - the timestamp • a measurement (e.g. -

Clúster, Cloud Y Devops

Clúster, Cloud y DevOps (Cluster, Cloud and DevOps). Remo Suppi Boldrito. PID_00212475. GNUFDL. Contents: Introduction; Objectives; 1. Clustering; 1.1. Virtualization; 1.1.1. Virtualization platforms; 1.2. Beowulf; 1.2.1. How to configure the nodes?; 1.3. Benefits of distributed computing; 1.3.1. How should one program to take advantage of concurrency?; 1.4. Shared memory. Thread models (threading); 1.4.1. Multithreading; 1.5. OpenMP; 1.6. MPI, Message Passing Interface; 1.6.1. Configuring a set of machines to form a cluster adapted to OpenMPI; 1.7. Rocks Cluster; 1.7.1. Quick installation guide; 1.8. FAI; 1.8.1. Quick installation guide; 1.9. Logs; 1.9.1. Octopussy -

Centralized and Structured Log File Analysis with Open Source and Free Software Tools

Bachelor Thesis, Summer Semester 2013, at Fachhochschule Frankfurt am Main, University of Applied Sciences, Department of Computer Science and Engineering, towards Bachelor of Science Computer Science, submitted by Jens Kühnel. Centralized and structured log file analysis with Open Source and Free Software tools. 1. Supervisor: Prof. Dr. Jörg Schäfer. 2. Supervisor: Prof. Dr. Matthias Schubert. Topic received: 11. 07. 2013; thesis delivered: 30. 08. 2013. Abstract: This thesis gives an overview of the Open Source and Free Software tools available for centralized and structured log file analysis. This includes the tools to convert unstructured logs into structured logs and different possibilities to transport these logs to a central analyzing and storage station. The different storage and analyzing tools will be introduced, as well as the different web front ends to be used by the system administrator. At the end, different tool chains that are well tested in this field will be introduced. Revisions: Rev. 269: Official Bachelor thesis sent to FH. Rev. 273: Removal of Affidavit, fix of page number left/right. Table of Contents: 1 Introduction; 1.1 Selection criteria; 1.2 Programs that are included in this thesis; 1.3 What