Productive High Performance Parallel Programming with Auto-Tuned Domain-Specific Embedded Languages

Recommended publications

-

Aigaion: a Web-Based Open Source Software for Managing the Bibliographic References

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by E-LIS repository Aigaion: A Web-based Open Source Software for Managing the Bibliographic References Sanjo Jose ([email protected]) Francis Jayakanth ([email protected]) National Centre for Science Information, Indian Institute of Science, Bangalore – 560 012 Abstract Publishing research papers is an integral part of a researcher's professional life. Every research article will invariably provide large number of citations/bibliographic references of the papers that are being cited in that article. All such citations are to be rendered in the citation style specified by a publisher and they should be accurate. Researchers, over a period of time, accumulate a large number of bibliographic references that are relevant to their research and cite relevant references in their own publications. Efficient management of bibliographic references is therefore an important task for every researcher and it will save considerable amount of researchers' time in locating the required citations and in the correct rendering of citation details. In this paper, we are reporting the features of Aigaion, a web-based, open-source software for reference management. 1. Introduction A citation or bibliographic citation is a reference to a book, article, web page, or any other published item. The reference will contain adequate details to facilitate the readers to locate the publication. Different citation systems and styles are being used in different disciplines like science, social science, humanities, etc. Referencing is also a standardised method of acknowledging the original source of information or idea. -

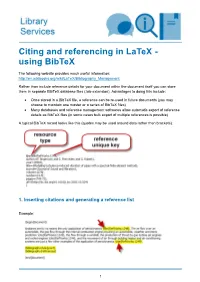

Citing and Referencing in Latex - Using Bibtex

Citing and referencing in LaTeX - using BibTeX The following website provides much useful information: http://en.wikibooks.org/wiki/LaTeX/Bibliography_Management Rather than include reference details for your document within the document itself you can store them in separate BibTeX database files (.bib extension). Advantages to doing this include: • Once stored in a BibTeX file, a reference can be re-used in future documents (you may choose to maintain one master or a series of BibTeX files) • Many databases and reference management softwares allow automatic export of reference details as BibTeX files (in some cases bulk export of multiple references is possible) A typical BibTeX record looks like this (quotes may be used around data rather than brackets): 1. Inserting citations and generating a reference list Example: 1 • To specify the output style of citations and references - insert the \bibliographystyle command e.g. \bibliographystyle{unsrt} where unsrt.bst is an available style file (a basic numeric style). Basic LaTeX comes with a few .bst style files; others can be downloaded from the web • To insert a citation in the text in the specified output style - insert the \cite command e.g. \cite{1942} where 1942 is the unique key for that reference. Variations on the \cite command can be used if using packages such as natbib (see below) • More flexible citing and referencing may be achieved by using other packages such as natbib (see below) or Biblatex • To generate the reference list in the specified output style - insert the \bibliography command e.g. \bibliography{references} where your reference details are stored in the file references.bib (kept in the same folder as the document). -

The Apacite Package: Citation and Reference List with Latex and Bibtex According to the Rules of the American Psychological Asso

The apacite package∗ Citation and reference list with LATEX and BibTEX according to the rules of the American Psychological Association Erik Meijer apacite at gmail.com 2013/07/21 Abstract This document describes and tests the apacite package [2013/07/21]. This is a package that can be used with LATEX and BibTEX to generate citations and a reference list, formatted according to the rules of the American Psy- chological Association. Furthermore, apacite contains an option to (almost) automatically generate an author index as well. The package can be cus- tomized in many ways. ∗This document describes apacite version v6.03 dated 2013/07/21. 1 Contents 1 Introduction 3 2 Installation, package loading, and running BibTEX 5 3 Package options 7 4 The citation commands 10 4.1 The \classic" apacite citation commands . 11 4.2 Using natbib for citations . 15 5 Contents of the bibliography database file 16 5.1 Types of references . 18 5.2 Fields . 22 5.3 Overriding the default sorting orders . 32 6 Customization 32 6.1 Punctuation and small formatting issues . 33 6.2 Labels . 36 6.3 More drastic formatting changes to the reference list . 40 7 Language support 42 7.1 Language-specific issues . 43 7.2 Setting up MiKTEX .......................... 44 8 Compatibility 45 8.1 natbib .................................. 47 8.2 hyperref, backref, and url ........................ 47 8.3 Multiple bibliographies . 48 8.4 bibentry ................................. 50 8.5 Programs for conversion to html, rtf, etc. 50 9 Generating an author index 52 10 Annotated bibliographies 56 11 Auxiliary, ad hoc, and experimental commands in apacdoc.sty 56 12 Known problems and todo-list 61 13 Examples of the APA manual 63 References 89 Author Index 100 2 1 Introduction The American Psychological Association (APA) is very strict about the style in which manuscripts submitted to its journals are written and formatted. -

Publishing Bibliographic Data on the Semantic Web Using Bibbase

Undefined 0 (0) 1 1 IOS Press Publishing Bibliographic Data on the Semantic Web using BibBase Editor(s): Carsten Keßler, University of Münster, Germany; Mathieu d’Aquin, The Open University, UK; Stefan Dietze, L3S Research Center, Germany Solicited review(s): Kai Eckert, University of Mannheim, Germany; Antoine Isaac, Vrije Universiteit Amsterdam, The Netherlands; Jan Brase, German National Library of Science and Technology, Germany Reynold S. Xin a, Oktie Hassanzadeh b,∗, Christian Fritz c, Shirin Sohrabi b and Renée J. Miller b a Department of EECS, University of California, Berkeley, California, USA E-mail: [email protected] b Department of Computer Science, University of Toronto, 10 King’s College Rd., Toronto, Ontario, M5S 3G4, Canada E-mail: {oktie,shirin,miller}@cs.toronto.edu c Palo Alto Research Center, 3333 Coyote Hill Road, Palo Alto, California, USA E-mail: [email protected] Abstract. We present BibBase, a system for publishing and managing bibliographic data available in BiBTeX files. BibBase uses a powerful yet light-weight approach to transform BiBTeX files into rich Linked Data as well as custom HTML code and RSS feed that can readily be integrated within a user’s website while the data can instantly be queried online on the system’s SPARQL endpoint. In this paper, we present an overview of several features of our system. We outline several challenges involved in on-the-fly transformation of highly heterogeneous BiBTeX files into high-quality Linked Data, and present our solution to these challenges. Keywords: Bibliographic Data Management, Linked Data, Data Integration 1. Introduction as DBLP Berlin [19] and RKBExplorer [24]). -

The NASA Astrophysics Data System: Free Access to the Astronomical Literature on -Line and Through Email

High Energy Physics Libraries Webzine Issue 5 / November 2001 http://library.cern.ch/HEPLW/5/papers/1 The NASA Astrophysics Data System: Free Access to the Astronomical Literature On -line and through Email Guenther Eichhorn , Alberto Accomazzi, Carolyn S. Grant, Michael J. Kurtz and Stephen S. Murray (*) 28/09/2001 Abstract: The Astrophysics Data System (ADS) provides access to the astronomical literature through the World Wide Web. It is a NASA funded project and access to all the ADS services is free to everybody world -wide. The ADS Abstract Service allows the searching of four databases with abstracts in Astronomy, Instrumentation, Physics/Geophysics, and the LANL Preprints with a total of over 2.2 million references. The system also provides access to reference and citation information, links to on-line data, electronic journal articles, and other on-line information. The ADS Article Service contains the full articles for most of the astronomical literature back to volume 1. It contains the scanned pages of all the major journals (Astrophysical Journal, Astronomical Journal, Astronomy & Astrophysics, Monthly Notices of the Royal Astronomical Society, and Solar Physics), as well as most smaller journals back to volume 1. The ADS can be accessed through any web browser without signup or login. Alternatively an email interfa ce is available that allows our users to execute queries via email and to retrieve scanned articles via email. This might be interesting for users on slow or unreliable links, since the email system will retry sending information automatically until the tr ansfer is complete. There are now 9 mirror sites of the ADS available in different parts of the world to improve access. -

Referencing Sources of Molecular Spectroscopic Data in the Era of Data Science: Application to the HITRAN and AMBDAS Databases

atoms Article Referencing Sources of Molecular Spectroscopic Data in the Era of Data Science: Application to the HITRAN and AMBDAS Databases Frances M. Skinner 1,2,* , Iouli E. Gordon 1,* , Christian Hill 3,* , Robert J. Hargreaves 1 , Kelly E. Lockhart 4 and Laurence S. Rothman 1 1 Atomic and Molecular Physics, Center for Astrophysics|Harvard & Smithsonian, Cambridge, MA 02138, USA; [email protected] (R.J.H.); [email protected] (L.S.R.) 2 Undergraduate Chemistry Department, University of Massachusetts Lowell, Lowell, MA 01854, USA 3 Nuclear Data Section, International Atomic Energy Agency, Vienna International Centre, PO Box 100, A-1400 Vienna, Austria 4 The SAO/NASA Astrophysics Data System (ADS), Center for Astrophysics|Harvard & Smithsonian, Cambridge, MA 02138, USA; [email protected] * Correspondence: [email protected] (F.M.S.); [email protected] (I.E.G.); [email protected] (C.H.) Received: 27 February 2020; Accepted: 25 April 2020; Published: 30 April 2020 Abstract: The application described has been designed to create bibliographic entries in large databases with diverse sources automatically, which reduces both the frequency of mistakes and the workload for the administrators. This new system uniquely identifies each reference from its digital object identifier (DOI) and retrieves the corresponding bibliographic information from any of several online services, including the SAO/NASA Astrophysics Data Systems (ADS) and CrossRef APIs. Once parsed into a relational database, the software is able to produce bibliographies in any of several formats, including HTML and BibTeX, for use on websites or printed articles. The application is provided free-of-charge for general use by any scientific database. -

A Bibtex Style for Astronomical Journals

ABibTEX Style for Astronomical Journals (for use with BibTeX 0.99c) Sake J. Hogeveen This is a preliminary version. Please report any bugs in the style files, and errors or omissions in the docu- mentation to one of the E-mail addresses below. This package is sent to several astronomical journals, with a request for their official approval of its use. Version 1.0 will hopefully contain a list of journals that have given their consent. Copyright c 1990, Sake J. Hogeveen. The files astron.bst, astron.sty, astdoc.tex, astdoc.bib, mnemonic.bib, example.bib, example.tex, and template.bib are a package. You may copy and distribute them freely for non-commercial purposes, provided that you keep the package together and this copyright notice in tact. You may not alter or modify the files; this helps to ensure that all distributions of astron.bst and related files are the same. If you make any modifications, then you must give the files new names, other than the present. The author bears no responsibilities for errors in this document or the soft- ware it describes; and shall not be held liable for any indirect, incidental, or consequential damages. Astronomical Institute ‘Anton Pannekoek’, Roetersstraat 15, 1018 wb Amsterdam, The Netherlands E-mail: Earn/Internet: [email protected]; UUCP: [email protected] Contents Introduction 2 1 BibTeX 2 2 The ‘astron’ style files 2 2.1 \cite and \cite* ................................. 2 2.2 astron.bst and astron.sty ........................... 3 2.3 Required, optional, and ignored fields . 3 3 Examples 3 4 Abbreviations 3 5 Maintaining the database 4 6 Credits 4 A Aspects of publishing with TEX and LaTeX 5 A.1 Generalized Mark-up . -

Learning and Teaching Funding Guidelines

Proof of concept: using search technologies to enhance teaching public policy issues facing small developing states Graham Hassall School of Government Victoria University of Wellington 10 March 2011 1 Table of Contents I. Research Intent .............................................................................................................. 4 II. Theoretical approach ...................................................................................................... 5 III. Tools assessment ........................................................................................................ 8 A. Creating information .................................................................................................. 9 B. Storing information .................................................................................................. 12 C. Retrieving information ............................................................................................. 16 D. Integrating information ............................................................................................. 16 E. Communicating information ..................................................................................... 17 1. Findings ....................................................................................................................... 18 IV. Additional references on the use of ICTs in teaching ................................................ 19 V. Appendix: NZ and Australian based digital collections ............................................... -

An Automated Approach to Organizing Bibtex Files

An automated approach to organizing BibTEX files Rohde Fischer - 20052356 Master’s Thesis, Computer Science June 12, 2016 Advisor: Olivier Danvy Abstract We successively describe the bibliographic software tool BibTEX (both how to use it in principle and how it is used in practice), list a range of practical issues BibTEX users encounter, propose an approach to handling these issues, and review how they are tackled in related work. We then present an analysis of BibTEX files that detects these issues and we describe how to solve them by organizing BibTEX files using the results of the analysis. We implemented a proof of concept, Orangutan, that analyzes existing BibTEX files and emits diagnostics and suggestions. i ii Resumé Vi beskriver successivt det bibliografiske software-værktøj BibTEX (både, hvordan det bruges i princippet og hvordan det bruges i praksis), lister en række praktiske problemer BibTEX-brugere møder, foreslår en frem- gangsmåde til håndtering af problemerne og en gennemgang af, hvordan de takles i relateret arbejde. Vi præsenterer en analyse af BibTEX-filer, der påviser problemerne og vi beskriver, hvordan disse kan løses ved at organisere BibTEX-filerne ved hjælp af resultaterne fra analysen. Vi har implementeret et proof of concept, Orangutan, der analyserer eksisterende BibTEX-filer og udskriver diagnoser og forslag. iii iv Acknowledgments Olivier Danvy advised the work that led up to this dissertation. His constant support and help have been of immense value. René Rydhof Hansen served as an external evaluator and asked insightful questions at the defense. I am grateful for his time and attention. Anders Lindkvist, Troels Fleischer Skov Jensen, and Martin Eik Kors- gaard Rasmussen were fantastic office mates. -

Revista Eletrônica Atoz: Novas Práticas Em Informação E Conhecimento

UFPR/SCSA/Grupo de Pesquisa “Metodologias em Gestão da Informação” Revista eletrônica AtoZ: novas práticas em informação e conhecimento MSc Eduardo Michelotti Bettoni1 Marcelo Batista de Carvalho Profa. Dra. Patricia Zeni Marchiori GERAÇÃO DE INDICADORES PARA PERIÓDICOS CIENTÍFICOS: UM ESTUDO NA ATOZ Apêndice do Relatório final referente ao Edital de Apoio à Editoração e Publicação de Periódicos Científicos - 2016 (UFPR/PRPPG/SIBI) Curitiba 2016 1 Email para contato com os autores: [email protected] RESUMO Projeto aprovado no Edital de apoio à editoração de periódicos científicos (PRPPG/UFPR - 2016), para aplicação do recurso nas atividades do periódico AtoZ: Novas práticas em informação e conhecimento (PPGCGTI/UFPR). Objetivou constituir uma base de referências dos trabalhos publicados e planejar indicadores de desempenho da revista. Verificou-se que não somente o corpus de referências mas também a conversão dos metadados da revista em indicadores podem servir como orientação para melhores tomadas de decisão no processo editorial em vista dos critérios postulados pelas agências indexadoras. Por sua vez, a análise da base constituída também permite um melhor entendimento sobre as relações entre o periódico e seus pares, além de dar suporte à um processo de editoração mais assertivo com o uso do formato BibTeX. Palavras-chave: Periódico. Bibliometria. Análise de Produção Científica. SUMÁRIO 1 INTRODUÇÃO.................................................................................................4 2 BASE DE REFERÊNCIAS - A BASE BIB -

Managing Bibliographies with LATEX Lapo F

36 TUGboat, Volume 30 (2009), No. 1 Multiple citations can be added by separating Bibliographies with a comma the bibliographic keys inside the same \cite command; for example \cite{Goossens1995,Kopka1995} Managing bibliographies with LATEX Lapo F. Mori gives Abstract (Goossens et al., 1995; Kopka and Daly, 1995) The bibliography is a fundamental part of most scien- Bibliographic entries that are not cited in the tific publications. This article presents and analyzes text can be added to the bibliography with the the main tools that LATEX offers to create, manage, \nocite{key} command. The \nocite{*} com- and customize both the references in the text and mand adds all entries to the bibliography. the list of references at the end of the document. 2.2 Automatic creation with BibTEX A 1 Introduction BibTEX is a separate program from LTEX that allows creating a bibliography from an external database Bibliographic references are an important, sometimes (.bib file). These databases can be conveniently fundamental, part of academic documents. In the shared by different LATEX documents. BibTEX, which past, preparation of a bibliography was difficult and will be described in the following paragraphs, has tedious mainly because the entries were numbered many advantages over the thebibliography envi- and ordered by hand. LATEX, which was developed ronment; in particular, automatic formatting and with this kind of document in mind, provides many ordering of the bibliographic entries. tools to automatically manage the bibliography and make the authors’ work easier. How to create a 2.2.1 How BibTEX works A bibliography with LTEX is described in section2, BibTEX requires: starting from the basics and arriving at advanced 1. -

Reference Management a Product Comparison

Reference Management A Product Comparison | | 1 Exponential growth of scientific publications continues | | 2 Choices from the Bazar JabRef | | 3 Core Task: Collecting Literature . Add entries manually. Single or collections of entries are imported from webpages. Bibliographic metadata, full texts and/or screenshort can be stored. Entries or whole literature collections are to be imported from external sources such as other reference managers. | | 4 Core Task: Manage and Edit References . Organize entries in and move them between collections . Edit bibliographic information . Attach keywords and tags for personalized indexing . Compose and add exzerpts to entries . Read and annotate full texts of entries | | 5 Core Task: Cite Literature Extract bibliographic data from the reference data base and import it in the manuscript being typed up. Two approaches: Word Processing . Import reference in a WYSIWYG environment: Citation in the text as well as the entry in the reference list according to the specifications of the publisher. LaTeX/LyX . BibTeX file,with all cited references in the text – formatting done during compiling process according to specific .bst style file. | | 6 Collaborative Working Maintain a shared reference collection within a group of collaborators: . Collective r+w access to the whole reference collection . Share fulltext documents to collectively work on them (Annotation and assessment of PDF files). Beware of Copyright restrictions on licenced sources! | | 7 Collaborative Working Product Collaboration Options Group size